Compare commits

376 Commits

| Author | SHA1 | Date | |

|---|---|---|---|

|

|

242d79e49d | ||

|

|

4f01ecde5a | ||

|

|

61141c7479 | ||

|

|

62429ff710 | ||

|

|

82a1f45cc1 | ||

|

|

1a95fc2a9c | ||

|

|

13816eeafa | ||

|

|

5279f73c17 | ||

|

|

d196bb2856 | ||

|

|

3f8f634a1b | ||

|

|

5ba27c898e | ||

|

|

57f1b63fa1 | ||

|

|

d69e203479 | ||

|

|

4d7fae39a8 | ||

|

|

2dc554c92a | ||

|

|

21c394ef7f | ||

|

|

2173bfc1a0 | ||

|

|

a19d016e14 | ||

|

|

8f1b5df9e2 | ||

|

|

2d6b8ecfdf | ||

|

|

8093612011 | ||

|

|

39dc761e32 | ||

|

|

0c68983c62 | ||

|

|

c7539f6e4b | ||

|

|

8cebc0acee | ||

|

|

f60c4d60cf | ||

|

|

662f9cba2e | ||

|

|

4a82e1e223 | ||

|

|

b60b912bf8 | ||

|

|

093348bc60 | ||

|

|

37ebbf14f9 | ||

|

|

156488c8d5 | ||

|

|

68d1f583cc | ||

|

|

3492b07d9a | ||

|

|

d0b582048f | ||

|

|

a82eb7b01f | ||

|

|

cd08afcbeb | ||

|

|

331942a4ed | ||

|

|

aa24d6ff7e | ||

|

|

58c2c19f1e | ||

|

|

2a91149211 | ||

|

|

868482c240 | ||

|

|

4e387fa943 | ||

|

|

15484363d6 | ||

|

|

50b7b74480 | ||

|

|

adb53c63dd | ||

|

|

bdc3a32e96 | ||

|

|

65f716182b | ||

|

|

6ef72e2550 | ||

|

|

60f51ad7d5 | ||

|

|

a09dc2cbd8 | ||

|

|

825d07aa54 | ||

|

|

f46882c778 | ||

|

|

663fa08cc1 | ||

|

|

19e625d38e | ||

|

|

edcff9cd15 | ||

|

|

e0fc5ecb39 | ||

|

|

4ac6629969 | ||

|

|

68d8dad7c8 | ||

|

|

4ab9ceafc1 | ||

|

|

352ed898d4 | ||

|

|

e091d6a50d | ||

|

|

c651ef00c9 | ||

|

|

4b17788a77 | ||

|

|

e5612bca50 | ||

|

|

d21fb1afe8 | ||

|

|

89d0a533e2 | ||

|

|

db673dddd9 | ||

|

|

88ad457e87 | ||

|

|

126b68559e | ||

|

|

2cd3fe47e6 | ||

|

|

15eb7cce55 | ||

|

|

13f923aabf | ||

|

|

0ffb112063 | ||

|

|

b4ea6af110 | ||

|

|

611c8f7374 | ||

|

|

1cc73f37e7 | ||

|

|

ca37fc0eb5 | ||

|

|

5380624da9 | ||

|

|

aaece0bd44 | ||

|

|

de7cc17f5d | ||

|

|

66efa39d27 | ||

|

|

ff7c0a105d | ||

|

|

7b29253df4 | ||

|

|

7ef63b341e | ||

|

|

e7bfaa4f1a | ||

|

|

3a9a408941 | ||

|

|

3e43963daa | ||

|

|

69a6e260f5 | ||

|

|

664e7ad555 | ||

|

|

ee4a009a06 | ||

|

|

36dfd4dd35 | ||

|

|

dbf36082b2 | ||

|

|

3a1018cff6 | ||

|

|

fc10745a1a | ||

|

|

347cfd06de | ||

|

|

ec759ce467 | ||

|

|

f211e0fe31 | ||

|

|

c91a128b65 | ||

|

|

6a080f3032 | ||

|

|

b2c12c1131 | ||

|

|

b945b37089 | ||

|

|

9a5529a0aa | ||

|

|

025785389d | ||

|

|

48d9a0dede | ||

|

|

fbdf38e990 | ||

|

|

ef5bf70386 | ||

|

|

274c1469b4 | ||

|

|

960d506360 | ||

|

|

79a6421178 | ||

|

|

8b5c004860 | ||

|

|

f54768772e | ||

|

|

b9075dc6f9 | ||

|

|

107596ad54 | ||

|

|

3c6a2b1508 | ||

|

|

f996cba354 | ||

|

|

bdd864fbdd | ||

|

|

ca074ef13f | ||

|

|

ddd3a8251e | ||

|

|

f5b862dc1b | ||

|

|

d45d475f61 | ||

|

|

d0f72ea3fa | ||

|

|

5ed5d1e5b6 | ||

|

|

311b14026e | ||

|

|

67cd722b54 | ||

|

|

7f6247eb7b | ||

|

|

f3fd515521 | ||

|

|

9db5dd0d7f | ||

|

|

d07925d79d | ||

|

|

662d0f31ff | ||

|

|

2c5ad0bf8f | ||

|

|

0ea76b986a | ||

|

|

3c4253c336 | ||

|

|

77ba28e91c | ||

|

|

6399e7586c | ||

|

|

1caa62adc8 | ||

|

|

8fa558f124 | ||

|

|

191228633b | ||

|

|

8a981f935a | ||

|

|

a8ea9adbcc | ||

|

|

685d94c44b | ||

|

|

153ed1b044 | ||

|

|

7788f3a1ba | ||

|

|

cd99225f9b | ||

|

|

33ba3b8d4a | ||

|

|

d222dd1069 | ||

|

|

39fd3d46ba | ||

|

|

419c1804b6 | ||

|

|

ae0351ddad | ||

|

|

941be15762 | ||

|

|

578ebcf6ed | ||

|

|

27ab4b08f9 | ||

|

|

428b2208ba | ||

|

|

438c553d60 | ||

|

|

90cb293182 | ||

|

|

1f9f93ebe4 | ||

|

|

f5b97fbb74 | ||

|

|

ce79244126 | ||

|

|

3af5d767d8 | ||

|

|

3ce3efd2f2 | ||

|

|

8108edea31 | ||

|

|

5d80087ab3 | ||

|

|

6593be584d | ||

|

|

a0f63f858f | ||

|

|

49914f3bd5 | ||

|

|

71988a8b98 | ||

|

|

d65be6ef58 | ||

|

|

38c40d02e7 | ||

|

|

9e071b9d60 | ||

|

|

b4ae060122 | ||

|

|

436656e81b | ||

|

|

d7e111b7d4 | ||

|

|

4b6126dd1a | ||

|

|

89faa70196 | ||

|

|

6ed9d4a1db | ||

|

|

9d0e38c2e1 | ||

|

|

8b758fd616 | ||

|

|

14369d8be3 | ||

|

|

7b4153113e | ||

|

|

7d340c5e61 | ||

|

|

337c94376d | ||

|

|

0ef1d0b2f1 | ||

|

|

5cf67bd4e0 | ||

|

|

f22be17852 | ||

|

|

48e79d5dd4 | ||

|

|

59f5a0654a | ||

|

|

6da2e11683 | ||

|

|

802c087a4b | ||

|

|

ed2048e9f3 | ||

|

|

437b1d30c0 | ||

|

|

ba1788cbc5 | ||

|

|

773094a20d | ||

|

|

5aa39106a0 | ||

|

|

a9167801ba | ||

|

|

62f4a6cb96 | ||

|

|

ea2b41e96e | ||

|

|

d28ce650e9 | ||

|

|

1bfcdba499 | ||

|

|

e48faa9144 | ||

|

|

33fbe99561 | ||

|

|

989925b484 | ||

|

|

7dd66559e7 | ||

|

|

2ef1c5608e | ||

|

|

b5932e8905 | ||

|

|

37999d3250 | ||

|

|

83985ae482 | ||

|

|

3adfcc837e | ||

|

|

c720fee3ab | ||

|

|

881387e522 | ||

|

|

d9f3378e29 | ||

|

|

ba87620225 | ||

|

|

1cd0c49872 | ||

|

|

12ac96deeb | ||

|

|

17e6f35785 | ||

|

|

bd115633a3 | ||

|

|

86ea172380 | ||

|

|

d87bbbbc1e | ||

|

|

6196f69f4d | ||

|

|

be31bcf22f | ||

|

|

cba2135c69 | ||

|

|

2e52573499 | ||

|

|

b2ce1ed1fb | ||

|

|

77a485af74 | ||

|

|

d8b847a973 | ||

|

|

e80a3d3232 | ||

|

|

780ba82385 | ||

|

|

6ba69dce0a | ||

|

|

3c7a561db8 | ||

|

|

49c942bea0 | ||

|

|

bf1ca293dc | ||

|

|

62b906d30b | ||

|

|

65bf048189 | ||

|

|

a498ed8200 | ||

|

|

9f12bbcd98 | ||

|

|

fcd520787d | ||

|

|

e2417e4e40 | ||

|

|

70a2cbf1c6 | ||

|

|

fa0c6af6aa | ||

|

|

4f1abd0c8d | ||

|

|

41e839aa36 | ||

|

|

2fd1593ad2 | ||

|

|

27b601c5aa | ||

|

|

5fc69134e3 | ||

|

|

9adc0698bb | ||

|

|

119c2ff464 | ||

|

|

f3a4201c7d | ||

|

|

8b6aa73df0 | ||

|

|

1d4dfb0883 | ||

|

|

eab7f126a6 | ||

|

|

fe7547d83e | ||

|

|

7d0df82861 | ||

|

|

7f0cd27591 | ||

|

|

e094c2ae14 | ||

|

|

a5d438257f | ||

|

|

d8cb8f1064 | ||

|

|

a8d8bb2d6f | ||

|

|

a76ea5917c | ||

|

|

b0b6198ec8 | ||

|

|

eda97f35d2 | ||

|

|

2b6507d35a | ||

|

|

f7c4d5aa0b | ||

|

|

74f07cffa6 | ||

|

|

79c8ff0af8 | ||

|

|

ac544eea4b | ||

|

|

231a32331b | ||

|

|

104e8ef050 | ||

|

|

296015faff | ||

|

|

9a9964c968 | ||

|

|

0d05d86e32 | ||

|

|

9680ca98f2 | ||

|

|

42b850ca52 | ||

|

|

3f5c22d863 | ||

|

|

535a92e871 | ||

|

|

3411a6a981 | ||

|

|

b5adee271c | ||

|

|

e2abcd1323 | ||

|

|

25fbe7ecb6 | ||

|

|

6befee79c2 | ||

|

|

f09c5a60f1 | ||

|

|

52e89ff509 | ||

|

|

35e20406ef | ||

|

|

c6e96ff1bb | ||

|

|

793ab524b0 | ||

|

|

5a479d0187 | ||

|

|

a23e4f1d2a | ||

|

|

bd35a3f61c | ||

|

|

197e987d5f | ||

|

|

7f29beb639 | ||

|

|

1140af8dc7 | ||

|

|

a2688c3910 | ||

|

|

75b27ab3f3 | ||

|

|

59d3f55fb2 | ||

|

|

f34739f334 | ||

|

|

90c71ec18f | ||

|

|

395234d7c8 | ||

|

|

e322ba0065 | ||

|

|

6db8b96f72 | ||

|

|

44d7e96e96 | ||

|

|

1662479c8d | ||

|

|

2e351fcf0d | ||

|

|

5d81876d07 | ||

|

|

c81e6989ec | ||

|

|

4d61a896c3 | ||

|

|

d148933ab3 | ||

|

|

04a56a3591 | ||

|

|

4a354e74d4 | ||

|

|

1e3e6427d5 | ||

|

|

38826108c8 | ||

|

|

4c4752f907 | ||

|

|

94dcd6c94d | ||

|

|

eabef3db30 | ||

|

|

6750f10ffa | ||

|

|

56cb888cbf | ||

|

|

b3e7fb3417 | ||

|

|

2c6e1baca2 | ||

|

|

c8358929d1 | ||

|

|

1dc7677dfb | ||

|

|

8e699a7543 | ||

|

|

cbbabdfac0 | ||

|

|

9d92de234c | ||

|

|

ba65975fb5 | ||

|

|

ef423b2078 | ||

|

|

f451b4e36c | ||

|

|

0856e13ee6 | ||

|

|

87b9fa8ca7 | ||

|

|

5b43d3d314 | ||

|

|

ac4972dd8d | ||

|

|

8a8f68af5d | ||

|

|

c669dc0c4b | ||

|

|

863a5466cc | ||

|

|

e2347c84e3 | ||

|

|

e0e673f565 | ||

|

|

30cbf2a741 | ||

|

|

f58de3801c | ||

|

|

7c6b88d4c1 | ||

|

|

0c0ebaecd5 | ||

|

|

1925f99118 | ||

|

|

6f2a22a1cc | ||

|

|

ee04082cd7 | ||

|

|

efd901ac3a | ||

|

|

e565789ae8 | ||

|

|

d3953004f6 | ||

|

|

df1d9e3011 | ||

|

|

631c55fa6e | ||

|

|

29cdd43288 | ||

|

|

9b79af9fcd | ||

|

|

2c9c1adb47 | ||

|

|

5dfb5808c4 | ||

|

|

bb0175aebf | ||

|

|

adaf4c99c0 | ||

|

|

bed6ed09d5 | ||

|

|

4ff67a85ce | ||

|

|

702f4fcd14 | ||

|

|

8a03ae153d | ||

|

|

434c6149ab | ||

|

|

97fc4a90ae | ||

|

|

217ef06930 | ||

|

|

71057946e6 | ||

|

|

a74ad52c72 | ||

|

|

12d26874f8 | ||

|

|

27de9ce151 | ||

|

|

9e7cd5a8c5 | ||

|

|

38cb487b64 | ||

|

|

05ca266c5e | ||

|

|

5cc26de645 | ||

|

|

2b9a195fa3 | ||

|

|

4454749eec | ||

|

|

b435a03fab | ||

|

|

7c166e2b40 | ||

|

|

f7a7963dcf | ||

|

|

9c77c0d69c | ||

|

|

e8a9555346 | ||

|

|

59751dd007 | ||

|

|

9c4d4d16b6 | ||

|

|

0e3d1b3e8f | ||

|

|

f119b78940 |

38

.circleci/config.yml

Normal file

@@ -0,0 +1,38 @@

version: 2.1
jobs:
  e2e-testing:
    machine: true
    steps:
      - checkout
      - run: test/e2e-kind.sh
      - run: test/e2e-istio.sh
      - run: test/e2e-build.sh
      - run: test/e2e-tests.sh

  e2e-supergloo-testing:
    machine: true
    steps:
      - checkout
      - run: test/e2e-kind.sh
      - run: test/e2e-supergloo.sh
      - run: test/e2e-build.sh supergloo:test.supergloo-system
      - run: test/e2e-tests.sh canary

workflows:
  version: 2
  build-and-test:
    jobs:
      - e2e-testing:
          filters:
            branches:
              ignore:
                - /gh-pages.*/
                - /docs-.*/
                - /release-.*/
      - e2e-supergloo-testing:
          filters:
            branches:
              ignore:
                - /gh-pages.*/
                - /docs-.*/
                - /release-.*/
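
The `ignore` filters in the workflow are regular expressions wrapped in slashes. A minimal Python sketch of how such a list could be evaluated, assuming (as the CircleCI documentation describes) that a pattern has to match the entire branch name. The helper `is_ignored` and the sample branch names are hypothetical and not part of CircleCI:

```python
import re

# Patterns copied from the workflow filters, without the surrounding slashes.
IGNORED_BRANCH_PATTERNS = [r"gh-pages.*", r"docs-.*", r"release-.*"]


def is_ignored(branch: str) -> bool:
    """Return True if the branch name fully matches any ignore pattern."""
    return any(re.fullmatch(p, branch) for p in IGNORED_BRANCH_PATTERNS)


if __name__ == "__main__":
    # Hypothetical branch names, used only to exercise the patterns.
    for name in ["master", "gh-pages", "docs-update", "release-0.12.0", "my-docs-fix"]:
        print(f"{name}: {'skipped' if is_ignored(name) else 'built'}")
```

Under the full-match assumption a branch such as `my-docs-fix` is still built, because `docs-.*` has to match from the first character of the name.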

@@ -6,3 +6,6 @@ coverage:

        threshold: 50
        base: auto
    patch: off

comment:
  require_changes: yes

1

.github/CODEOWNERS

vendored

Normal file

@@ -0,0 +1 @@

* @stefanprodan

4

.gitignore

vendored

@@ -11,3 +11,7 @@

# Output of the go coverage tool, specifically when used with LiteIDE
*.out
.DS_Store

bin/
artifacts/gcloud/
.idea

@@ -1,7 +1,7 @@

builds:
  - main: ./cmd/flagger
    binary: flagger
    ldflags: -s -w -X github.com/stefanprodan/flagger/pkg/version.REVISION={{.Commit}}
    ldflags: -s -w -X github.com/weaveworks/flagger/pkg/version.REVISION={{.Commit}}
    goos:
      - linux
    goarch:

28

.travis.yml

@@ -1,8 +1,13 @@

sudo: required
language: go

branches:
  except:
    - /gh-pages.*/
    - /docs-.*/

go:
  - 1.11.x
  - 1.12.x

services:
  - docker

@@ -13,27 +18,26 @@ addons:

      - docker-ce

script:
  - set -e
  - make test-fmt
  - make test-codegen
  - go test -race -coverprofile=coverage.txt -covermode=atomic ./pkg/controller/
  - make build
  - make test-fmt
  - make test-codegen
  - go test -race -coverprofile=coverage.txt -covermode=atomic $(go list ./pkg/...)
  - make build

after_success:
  - if [ -z "$DOCKER_USER" ]; then
      echo "PR build, skipping image push";
    else
      BRANCH_COMMIT=${TRAVIS_BRANCH}-$(echo ${TRAVIS_COMMIT} | head -c7);
      docker tag stefanprodan/flagger:latest quay.io/stefanprodan/flagger:${BRANCH_COMMIT};
      echo $DOCKER_PASS | docker login -u=$DOCKER_USER --password-stdin quay.io;
      docker push quay.io/stefanprodan/flagger:${BRANCH_COMMIT};
      docker tag weaveworks/flagger:latest weaveworks/flagger:${BRANCH_COMMIT};
      echo $DOCKER_PASS | docker login -u=$DOCKER_USER --password-stdin;
      docker push weaveworks/flagger:${BRANCH_COMMIT};
    fi
  - if [ -z "$TRAVIS_TAG" ]; then
      echo "Not a release, skipping image push";
    else
      docker tag stefanprodan/flagger:latest quay.io/stefanprodan/flagger:${TRAVIS_TAG};
      echo $DOCKER_PASS | docker login -u=$DOCKER_USER --password-stdin quay.io;
      docker push quay.io/stefanprodan/flagger:$TRAVIS_TAG;
      docker tag weaveworks/flagger:latest weaveworks/flagger:${TRAVIS_TAG};
      echo $DOCKER_PASS | docker login -u=$DOCKER_USER --password-stdin;
      docker push weaveworks/flagger:$TRAVIS_TAG;
    fi
  - bash <(curl -s https://codecov.io/bash)
  - rm coverage.txt
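
For branch builds, the `after_success` step tags the image with the branch name followed by the first seven characters of the commit SHA. A small Python sketch of the same string manipulation. The function name and the sample values are illustrative only and do not come from the repository:

```python
def branch_commit_tag(branch: str, commit: str) -> str:
    """Mirror ${TRAVIS_BRANCH}-$(echo ${TRAVIS_COMMIT} | head -c7)."""
    # head -c7 keeps the first seven bytes of the commit SHA.
    return f"{branch}-{commit[:7]}"


if __name__ == "__main__":
    # Hypothetical branch name and SHA, chosen only for the example.
    print(branch_commit_tag("master", "0123456789abcdef0123456789abcdef01234567"))
```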

245

CHANGELOG.md

Normal file

@@ -0,0 +1,245 @@

# Changelog

All notable changes to this project are documented in this file.

## 0.12.0 (2019-04-29)

Adds support for [SuperGloo](https://docs.flagger.app/install/flagger-install-with-supergloo)

#### Features

- Supergloo support for canary deployment (weighted traffic) [#151](https://github.com/weaveworks/flagger/pull/151)

## 0.11.1 (2019-04-18)

Move Flagger and the load tester container images to Docker Hub

#### Features

- Add Bash Automated Testing System support to Flagger tester for running acceptance tests as pre-rollout hooks

## 0.11.0 (2019-04-17)

Adds pre/post rollout [webhooks](https://docs.flagger.app/how-it-works#webhooks)

#### Features

- Add `pre-rollout` and `post-rollout` webhook types [#147](https://github.com/weaveworks/flagger/pull/147)

#### Improvements

- Unify App Mesh and Istio builtin metric checks [#146](https://github.com/weaveworks/flagger/pull/146)
- Make the pod selector label configurable [#148](https://github.com/weaveworks/flagger/pull/148)

#### Breaking changes

- Set default `mesh` Istio gateway only if no gateway is specified [#141](https://github.com/weaveworks/flagger/pull/141)

## 0.10.0 (2019-03-27)

Adds support for App Mesh

#### Features

- AWS App Mesh integration
    [#107](https://github.com/weaveworks/flagger/pull/107)
    [#123](https://github.com/weaveworks/flagger/pull/123)

#### Improvements

- Reconcile Kubernetes ClusterIP services [#122](https://github.com/weaveworks/flagger/pull/122)

#### Fixes

- Preserve pod labels on canary promotion [#105](https://github.com/weaveworks/flagger/pull/105)
- Fix canary status Prometheus metric [#121](https://github.com/weaveworks/flagger/pull/121)

## 0.9.0 (2019-03-11)

Allows A/B testing scenarios where instead of weighted routing, the traffic is split between the
primary and canary based on HTTP headers or cookies.
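
To make the contrast with weighted routing concrete, here is a minimal Python sketch of a header- or cookie-based split. The header name `x-canary`, the cookie name `canary`, their values, and the function `route` are all hypothetical. In Flagger the match conditions are declared on the canary resource and enforced by the service mesh, not by application code like this:

```python
from http.cookies import SimpleCookie


def route(headers: dict) -> str:
    """Pick a backend from request headers instead of a traffic weight.

    Header names are assumed to be lower-cased by the caller.
    """
    # Hypothetical rule 1: an explicit opt-in header selects the canary.
    if headers.get("x-canary", "").lower() == "insider":
        return "canary"
    # Hypothetical rule 2: a cookie set earlier keeps the user on the canary.
    cookie = SimpleCookie()
    cookie.load(headers.get("cookie", ""))
    if "canary" in cookie and cookie["canary"].value == "always":
        return "canary"
    return "primary"


if __name__ == "__main__":
    print(route({"x-canary": "insider"}))
    print(route({"cookie": "canary=always"}))
    print(route({}))
```

Because the decision depends only on the request, the same user keeps hitting the same version for the whole analysis, which a percentage-based split cannot guarantee.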

#### Features

- A/B testing - canary with session affinity [#88](https://github.com/weaveworks/flagger/pull/88)

#### Fixes

- Update the analysis interval when the custom resource changes [#91](https://github.com/weaveworks/flagger/pull/91)

## 0.8.0 (2019-03-06)

Adds support for CORS policy and HTTP request headers manipulation

#### Features

- CORS policy support [#83](https://github.com/weaveworks/flagger/pull/83)
- Allow headers to be appended to HTTP requests [#82](https://github.com/weaveworks/flagger/pull/82)

#### Improvements

- Refactor the routing management
    [#72](https://github.com/weaveworks/flagger/pull/72)
    [#80](https://github.com/weaveworks/flagger/pull/80)
- Fine-grained RBAC [#73](https://github.com/weaveworks/flagger/pull/73)
- Add option to limit Flagger to a single namespace [#78](https://github.com/weaveworks/flagger/pull/78)

## 0.7.0 (2019-02-28)

Adds support for custom metric checks, HTTP timeouts and HTTP retries

#### Features

- Allow custom promql queries in the canary analysis spec [#60](https://github.com/weaveworks/flagger/pull/60)
- Add HTTP timeout and retries to canary service spec [#62](https://github.com/weaveworks/flagger/pull/62)

## 0.6.0 (2019-02-25)

Allows for [HTTPMatchRequests](https://istio.io/docs/reference/config/istio.networking.v1alpha3/#HTTPMatchRequest)
and [HTTPRewrite](https://istio.io/docs/reference/config/istio.networking.v1alpha3/#HTTPRewrite)
to be customized in the service spec of the canary custom resource.

#### Features

- Add HTTP match conditions and URI rewrite to the canary service spec [#55](https://github.com/weaveworks/flagger/pull/55)
- Update virtual service when the canary service spec changes
    [#54](https://github.com/weaveworks/flagger/pull/54)
    [#51](https://github.com/weaveworks/flagger/pull/51)

#### Improvements

- Run e2e testing on [Kubernetes Kind](https://github.com/kubernetes-sigs/kind) for canary promotion
    [#53](https://github.com/weaveworks/flagger/pull/53)

## 0.5.1 (2019-02-14)

Allows skipping the analysis phase to ship changes directly to production

#### Features

- Add option to skip the canary analysis [#46](https://github.com/weaveworks/flagger/pull/46)

#### Fixes

- Reject deployment if the pod label selector doesn't match `app: <DEPLOYMENT_NAME>` [#43](https://github.com/weaveworks/flagger/pull/43)

## 0.5.0 (2019-01-30)

Track changes in ConfigMaps and Secrets [#37](https://github.com/weaveworks/flagger/pull/37)

#### Features

- Promote configmaps and secrets changes from canary to primary
- Detect changes in configmaps and/or secrets and (re)start canary analysis
- Add configs checksum to Canary CRD status
- Create primary configmaps and secrets at bootstrap
- Scan canary volumes and containers for configmaps and secrets
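
One way to detect changes in ConfigMaps and Secrets is to hash their contents and compare the digest with one recorded earlier. The sketch below illustrates that idea in Python. It is not Flagger's implementation: the function names, the choice of SHA-256, and the sample data are assumptions made for the example:

```python
import hashlib
import json


def configs_checksum(configs: dict) -> str:
    """Return a stable digest over a mapping of config name -> key/value data."""
    # sort_keys makes the serialization independent of dict insertion order.
    payload = json.dumps(configs, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def has_changed(stored_checksum: str, configs: dict) -> bool:
    """Compare a previously stored digest with the digest of the current data."""
    return configs_checksum(configs) != stored_checksum


if __name__ == "__main__":
    # Hypothetical config data, standing in for a ConfigMap and a Secret.
    current = {"app-config": {"color": "blue"}, "app-secret": {"token": "abc"}}
    stored = configs_checksum(current)
    current["app-config"]["color"] = "green"
    print("restart analysis" if has_changed(stored, current) else "no change")
```

Storing only the digest keeps the recorded state small while still making any edit to a tracked config visible.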

#### Fixes

- Copy deployment labels from canary to primary at bootstrap and promotion

## 0.4.1 (2019-01-24)

Load testing webhook [#35](https://github.com/weaveworks/flagger/pull/35)

#### Features

- Add the load tester chart to Flagger Helm repository
- Implement a load test runner based on [rakyll/hey](https://github.com/rakyll/hey)
- Log warning when no values are found for Istio metric due to lack of traffic

#### Fixes

- Run webhooks before the metrics checks to avoid failures when using a load tester

## 0.4.0 (2019-01-18)

Restart canary analysis if revision changes [#31](https://github.com/weaveworks/flagger/pull/31)

#### Breaking changes

- Drop support for Kubernetes 1.10

#### Features

- Detect changes during canary analysis and reset advancement
- Add status and additional printer columns to CRD
- Add canary name and namespace to controller structured logs

#### Fixes

- Allow canary name to be different to the target name
- Check if multiple canaries have the same target and log error
- Use deep copy when updating Kubernetes objects
- Skip readiness checks if canary analysis has finished

## 0.3.0 (2019-01-11)

Configurable canary analysis duration [#20](https://github.com/weaveworks/flagger/pull/20)

#### Breaking changes

- Helm chart: flag `controlLoopInterval` has been removed

#### Features

- CRD: canaries.flagger.app v1alpha3
- Schedule canary analysis independently based on `canaryAnalysis.interval`
- Add analysis interval to Canary CRD (defaults to one minute)
- Make autoscaler (HPA) reference optional

## 0.2.0 (2019-01-04)

Webhooks [#18](https://github.com/weaveworks/flagger/pull/18)

#### Features

- CRD: canaries.flagger.app v1alpha2
- Implement canary external checks based on webhooks HTTP POST calls
- Add webhooks to Canary CRD
- Move docs to gitbook [docs.flagger.app](https://docs.flagger.app)
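
An external check driven by an HTTP POST can be sketched as follows in Python, using only the standard library. The payload fields, the URL, and both function names are hypothetical, and treating any 2xx status as a pass is an assumption made for this example rather than a statement about Flagger's webhook contract:

```python
import json
import urllib.error
import urllib.request


def build_request(url: str, payload: dict) -> urllib.request.Request:
    """Build a JSON POST request for the external check."""
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def call_webhook(url: str, payload: dict, timeout: float = 5.0) -> bool:
    """Return True if the webhook answers with a 2xx status, False otherwise."""
    try:
        with urllib.request.urlopen(build_request(url, payload), timeout=timeout) as resp:
            return 200 <= resp.status < 300
    except (urllib.error.URLError, OSError):
        # urllib raises HTTPError (a URLError subclass) for 4xx/5xx responses;
        # connection errors and timeouts also count as a failed check here.
        return False


if __name__ == "__main__":
    # Hypothetical endpoint and payload; replace with a reachable service to try it.
    ok = call_webhook("http://localhost:8080/check", {"name": "podinfo", "namespace": "test"})
    print("check passed" if ok else "check failed")
```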

## 0.1.2 (2018-12-06)

Improve Slack notifications [#14](https://github.com/weaveworks/flagger/pull/14)

#### Features

- Add canary analysis metadata to init and start Slack messages
- Add rollback reason to failed canary Slack messages

## 0.1.1 (2018-11-28)

Canary progress deadline [#10](https://github.com/weaveworks/flagger/pull/10)

#### Features

- Rollback canary based on the deployment progress deadline check
- Add progress deadline to Canary CRD (defaults to 10 minutes)

## 0.1.0 (2018-11-25)

First stable release

#### Features

- CRD: canaries.flagger.app v1alpha1
- Notifications: post canary events to Slack
- Instrumentation: expose Prometheus metrics for canary status and traffic weight percentage
- Autoscaling: add HPA reference to CRD and create primary HPA at bootstrap
- Bootstrap: create primary deployment, ClusterIP services and Istio virtual service based on CRD spec


## 0.0.1 (2018-10-07)

Initial semver release

#### Features

- Implement canary rollback based on failed checks threshold
- Scale up the deployment when canary revision changes
- Add OpenAPI v3 schema validation to Canary CRD
- Use CRD status for canary state persistence
- Add Helm charts for Flagger and Grafana
- Add canary analysis Grafana dashboard

12

Dockerfile

@@ -1,17 +1,17 @@

FROM golang:1.11
FROM golang:1.12

RUN mkdir -p /go/src/github.com/stefanprodan/flagger/
RUN mkdir -p /go/src/github.com/weaveworks/flagger/

WORKDIR /go/src/github.com/stefanprodan/flagger
WORKDIR /go/src/github.com/weaveworks/flagger

COPY . .

RUN GIT_COMMIT=$(git rev-list -1 HEAD) && \
    CGO_ENABLED=0 GOOS=linux go build -ldflags "-s -w \
    -X github.com/stefanprodan/flagger/pkg/version.REVISION=${GIT_COMMIT}" \
    -X github.com/weaveworks/flagger/pkg/version.REVISION=${GIT_COMMIT}" \
    -a -installsuffix cgo -o flagger ./cmd/flagger/*

FROM alpine:3.8
FROM alpine:3.9

RUN addgroup -S flagger \
    && adduser -S -g flagger flagger \

@@ -19,7 +19,7 @@ RUN addgroup -S flagger \

WORKDIR /home/flagger

COPY --from=0 /go/src/github.com/stefanprodan/flagger/flagger .
COPY --from=0 /go/src/github.com/weaveworks/flagger/flagger .

RUN chown -R flagger:flagger ./

@@ -1,24 +1,8 @@

FROM golang:1.11 AS hey-builder
FROM golang:1.12 AS builder

RUN mkdir -p /go/src/github.com/rakyll/hey/
RUN mkdir -p /go/src/github.com/weaveworks/flagger/

WORKDIR /go/src/github.com/rakyll/hey

ADD https://github.com/rakyll/hey/archive/v0.1.1.tar.gz .

RUN tar xzf v0.1.1.tar.gz --strip 1

RUN go get ./...

RUN CGO_ENABLED=0 GOOS=linux GOARCH=amd64 \
    go install -ldflags '-w -extldflags "-static"' \
    /go/src/github.com/rakyll/hey

FROM golang:1.11 AS builder

RUN mkdir -p /go/src/github.com/stefanprodan/flagger/

WORKDIR /go/src/github.com/stefanprodan/flagger
WORKDIR /go/src/github.com/weaveworks/flagger

COPY . .

@@ -26,16 +10,18 @@ RUN go test -race ./pkg/loadtester/

RUN CGO_ENABLED=0 GOOS=linux go build -a -installsuffix cgo -o loadtester ./cmd/loadtester/*

FROM alpine:3.8
FROM bats/bats:v1.1.0

RUN addgroup -S app \
    && adduser -S -g app app \
    && apk --no-cache add ca-certificates curl
    && apk --no-cache add ca-certificates curl jq

WORKDIR /home/app

COPY --from=hey-builder /go/bin/hey /usr/local/bin/hey
COPY --from=builder /go/src/github.com/stefanprodan/flagger/loadtester .
RUN curl -sSLo hey "https://storage.googleapis.com/jblabs/dist/hey_linux_v0.1.2" && \
    chmod +x hey && mv hey /usr/local/bin/hey

COPY --from=builder /go/src/github.com/weaveworks/flagger/loadtester .

RUN chown -R app:app ./

694

Gopkg.lock

generated

38

Gopkg.toml

@@ -11,31 +11,37 @@ required = [

  name = "go.uber.org/zap"
  version = "v1.9.1"

[[constraint]]
  name = "gopkg.in/h2non/gock.v1"
  version = "v1.0.14"

[[override]]
  name = "gopkg.in/yaml.v2"
  version = "v2.2.1"

[[override]]
  name = "k8s.io/api"
  version = "kubernetes-1.11.0"
  version = "kubernetes-1.13.1"

[[override]]
  name = "k8s.io/apimachinery"
  version = "kubernetes-1.11.0"
  version = "kubernetes-1.13.1"

[[override]]
  name = "k8s.io/code-generator"
  version = "kubernetes-1.11.0"
  version = "kubernetes-1.13.1"

[[override]]
  name = "k8s.io/client-go"
  version = "kubernetes-1.11.0"
  version = "kubernetes-1.13.1"

[[override]]
  name = "github.com/json-iterator/go"
  # This is the commit at which k8s depends on this in 1.11
  # It seems to be broken at HEAD.
  revision = "f2b4162afba35581b6d4a50d3b8f34e33c144682"
  name = "k8s.io/apiextensions-apiserver"
  version = "kubernetes-1.13.1"

[[override]]
  name = "k8s.io/apiserver"
  version = "kubernetes-1.13.1"

[[constraint]]
  name = "github.com/prometheus/client_golang"

@@ -45,13 +51,9 @@ required = [

  name = "github.com/google/go-cmp"
  version = "v0.2.0"

[[constraint]]
  name = "github.com/knative/pkg"
  revision = "c15d7c8f2220a7578b33504df6edefa948c845ae"

[[override]]
  name = "github.com/golang/glog"
  source = "github.com/istio/glog"
  name = "k8s.io/klog"
  source = "github.com/stefanprodan/klog"

[prune]
  go-tests = true

@@ -62,3 +64,11 @@ required = [

    name = "k8s.io/code-generator"
    unused-packages = false
    non-go = false

[[constraint]]
  name = "github.com/solo-io/supergloo"
  version = "v0.3.11"

[[constraint]]
  name = "github.com/solo-io/solo-kit"
  version = "v0.6.3"

5

MAINTAINERS

Normal file

@@ -0,0 +1,5 @@

The maintainers are generally available in Slack at
https://weave-community.slack.com/messages/flagger/ (obtain an invitation
at https://slack.weave.works/).

Stefan Prodan, Weaveworks <stefan@weave.works> (Slack: @stefan Twitter: @stefanprodan)

23

Makefile

@@ -4,19 +4,26 @@ VERSION_MINOR:=$(shell grep 'VERSION' pkg/version/version.go | awk '{ print $$4

PATCH:=$(shell grep 'VERSION' pkg/version/version.go | awk '{ print $$4 }' | tr -d '"' | awk -F. '{print $$NF}')
SOURCE_DIRS = cmd pkg/apis pkg/controller pkg/server pkg/logging pkg/version
LT_VERSION?=$(shell grep 'VERSION' cmd/loadtester/main.go | awk '{ print $$4 }' | tr -d '"' | head -n1)
TS=$(shell date +%Y-%m-%d_%H-%M-%S)

run:
	go run cmd/flagger/* -kubeconfig=$$HOME/.kube/config -log-level=info \
	-metrics-server=https://prometheus.iowa.weavedx.com \
	-metrics-server=https://prometheus.istio.weavedx.com \
	-slack-url=https://hooks.slack.com/services/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 \
	-slack-channel="devops-alerts"

run-appmesh:
	go run cmd/flagger/* -kubeconfig=$$HOME/.kube/config -log-level=info -mesh-provider=appmesh \
	-metrics-server=http://acfc235624ca911e9a94c02c4171f346-1585187926.us-west-2.elb.amazonaws.com:9090 \
	-slack-url=https://hooks.slack.com/services/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 \
	-slack-channel="devops-alerts"

build:
	docker build -t stefanprodan/flagger:$(TAG) . -f Dockerfile
	docker build -t weaveworks/flagger:$(TAG) . -f Dockerfile

push:
	docker tag stefanprodan/flagger:$(TAG) quay.io/stefanprodan/flagger:$(VERSION)
	docker push quay.io/stefanprodan/flagger:$(VERSION)
	docker tag weaveworks/flagger:$(TAG) weaveworks/flagger:$(VERSION)
	docker push weaveworks/flagger:$(VERSION)

fmt:
	gofmt -l -s -w $(SOURCE_DIRS)

@@ -31,9 +38,9 @@ test: test-fmt test-codegen

	go test ./...

helm-package:
	cd charts/ && helm package flagger/ && helm package grafana/ && helm package loadtester/
	cd charts/ && helm package ./*
	mv charts/*.tgz docs/
	helm repo index docs --url https://stefanprodan.github.io/flagger --merge ./docs/index.yaml
	helm repo index docs --url https://weaveworks.github.io/flagger --merge ./docs/index.yaml

helm-up:
	helm upgrade --install flagger ./charts/flagger --namespace=istio-system --set crd.create=false

@@ -82,5 +89,5 @@ reset-test:

	kubectl apply -f ./artifacts/canaries

loadtester-push:
	docker build -t quay.io/stefanprodan/flagger-loadtester:$(LT_VERSION) . -f Dockerfile.loadtester
	docker push quay.io/stefanprodan/flagger-loadtester:$(LT_VERSION)
	docker build -t weaveworks/flagger-loadtester:$(LT_VERSION) . -f Dockerfile.loadtester
	docker push weaveworks/flagger-loadtester:$(LT_VERSION)

473

README.md

@@ -1,79 +1,58 @@

|

||||

# flagger

|

||||

|

||||

[](https://travis-ci.org/stefanprodan/flagger)

|

||||

[](https://goreportcard.com/report/github.com/stefanprodan/flagger)

|

||||

[](https://codecov.io/gh/stefanprodan/flagger)

|

||||

[](https://github.com/stefanprodan/flagger/blob/master/LICENSE)

|

||||

[](https://github.com/stefanprodan/flagger/releases)

|

||||

[](https://travis-ci.org/weaveworks/flagger)

|

||||

[](https://goreportcard.com/report/github.com/weaveworks/flagger)

|

||||

[](https://codecov.io/gh/weaveworks/flagger)

|

||||

[](https://github.com/weaveworks/flagger/blob/master/LICENSE)

|

||||

[](https://github.com/weaveworks/flagger/releases)

|

||||

|

||||

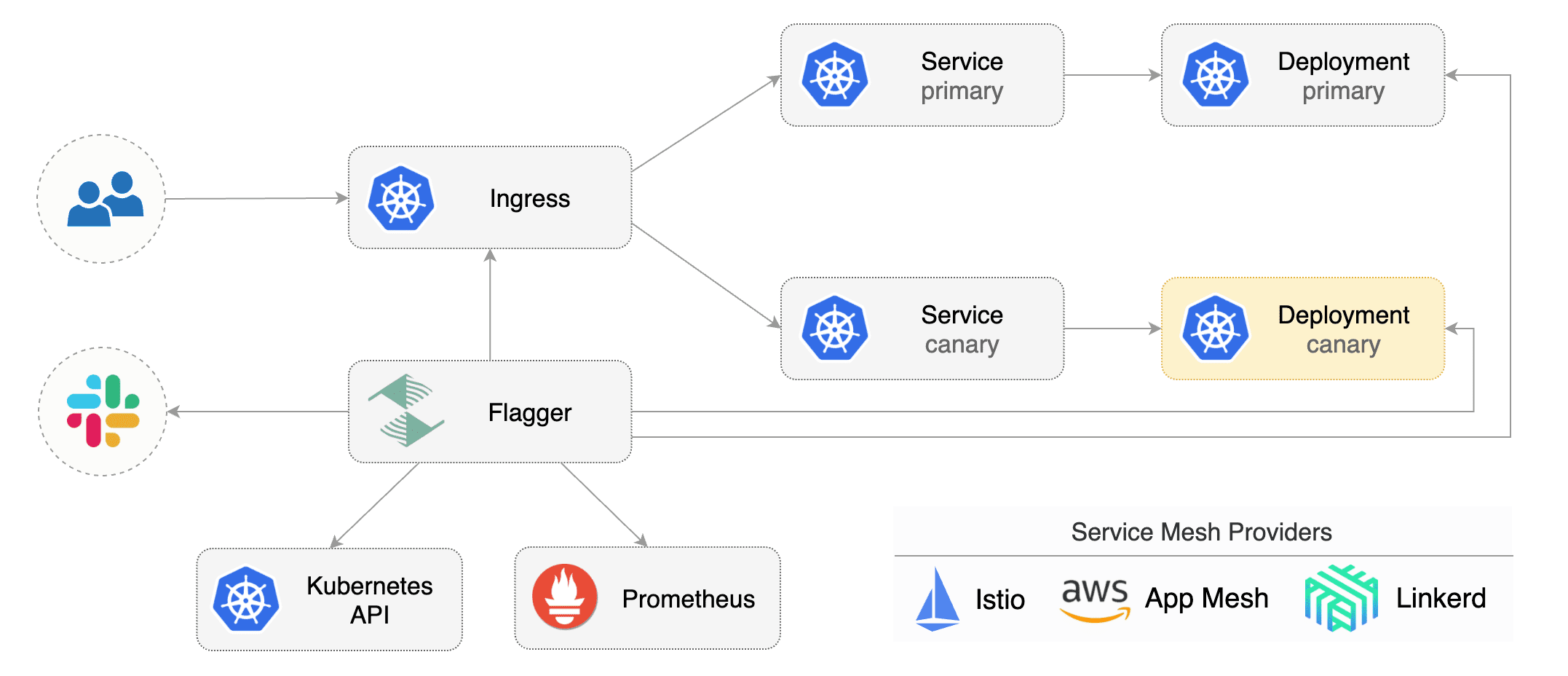

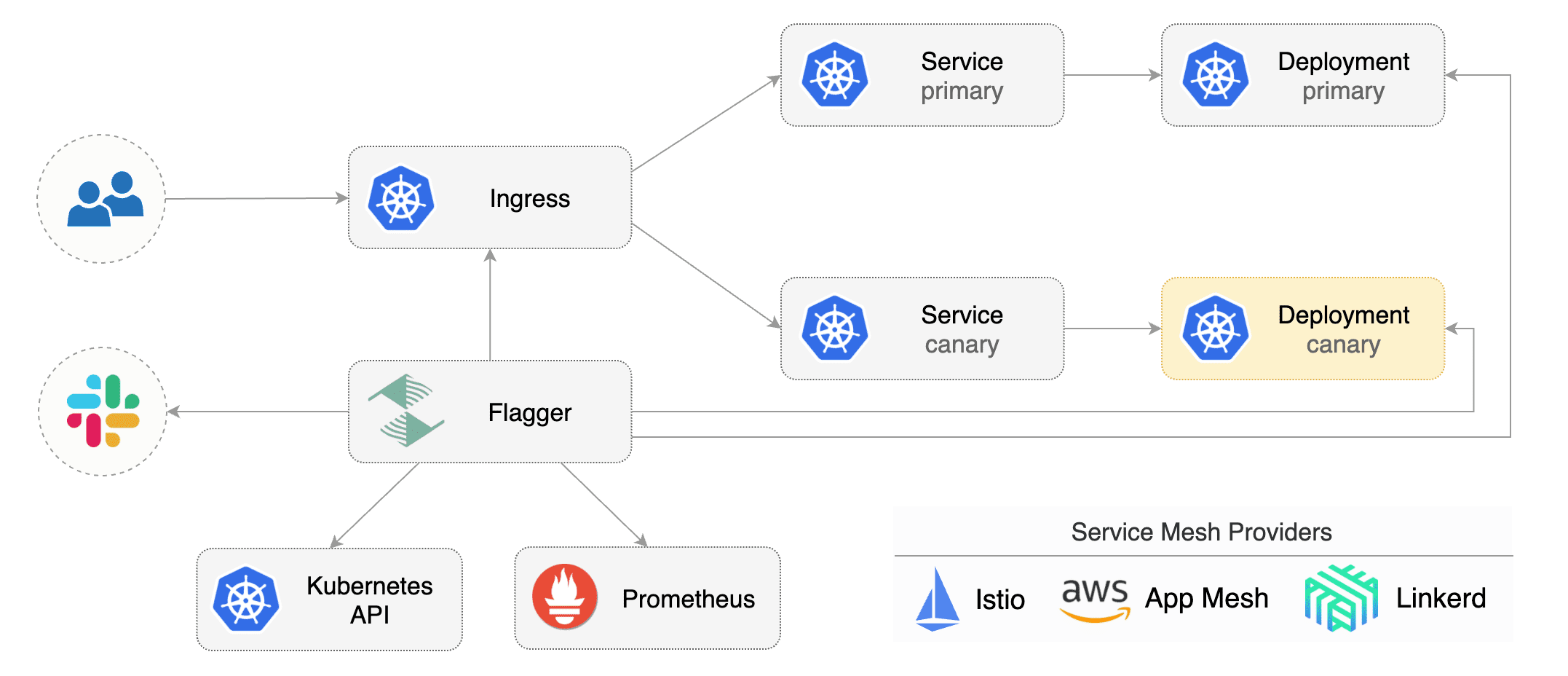

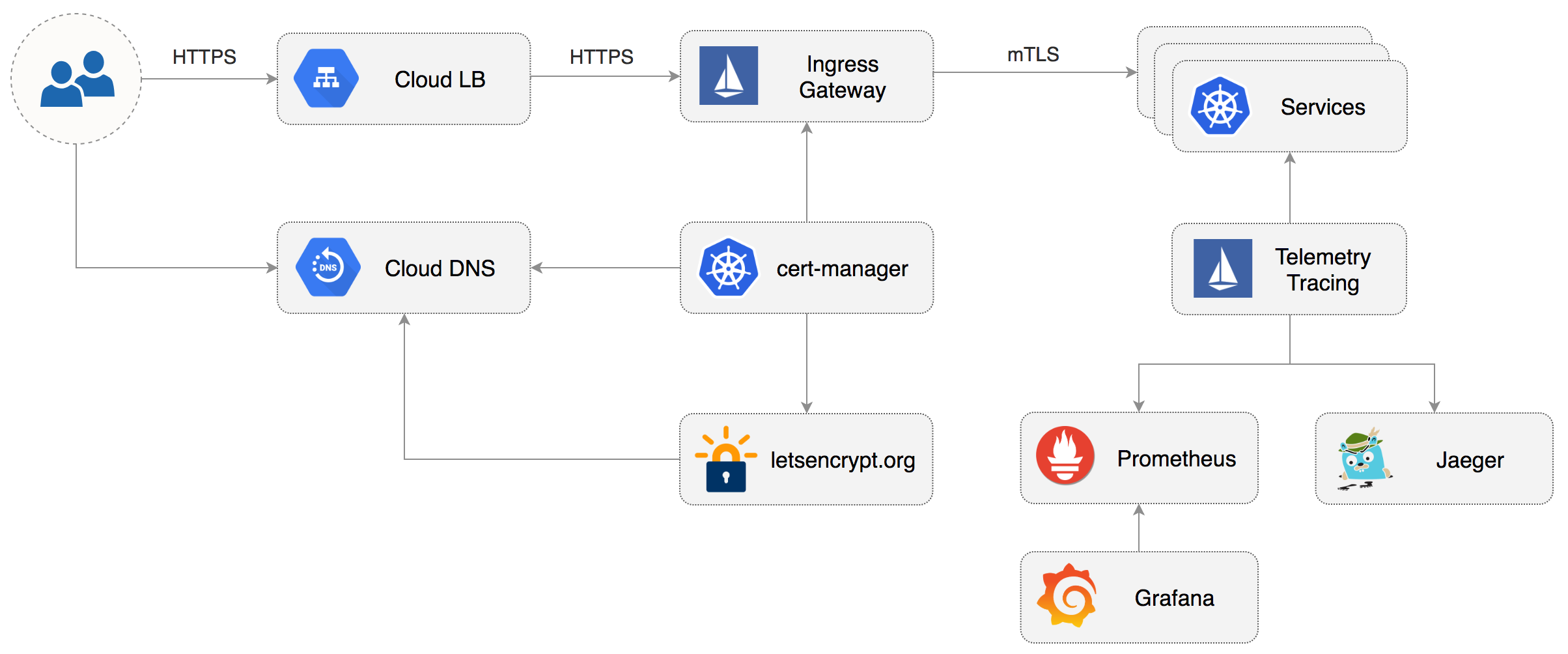

Flagger is a Kubernetes operator that automates the promotion of canary deployments

|

||||

using Istio routing for traffic shifting and Prometheus metrics for canary analysis.

|

||||

The canary analysis can be extended with webhooks for running integration tests,

|

||||

using Istio or App Mesh routing for traffic shifting and Prometheus metrics for canary analysis.

|

||||

The canary analysis can be extended with webhooks for running acceptance tests,

|

||||

load tests or any other custom validation.

|

||||

|

||||

### Install

|

||||

Flagger implements a control loop that gradually shifts traffic to the canary while measuring key performance

|

||||

indicators like HTTP requests success rate, requests average duration and pods health.

|

||||

Based on analysis of the KPIs a canary is promoted or aborted, and the analysis result is published to Slack.

|

||||

|

||||

Before installing Flagger make sure you have Istio set up with Prometheus enabled.

|

||||

If you are new to Istio you can follow my [Istio service mesh walk-through](https://github.com/stefanprodan/istio-gke).

|

||||

|

||||

|

||||

Deploy Flagger in the `istio-system` namespace using Helm:

|

||||

## Documentation

|

||||

|

||||

```bash

|

||||

# add the Helm repository

|

||||

helm repo add flagger https://flagger.app

|

||||

Flagger documentation can be found at [docs.flagger.app](https://docs.flagger.app)

|

||||

|

||||

# install or upgrade

|

||||

helm upgrade -i flagger flagger/flagger \

|

||||

--namespace=istio-system \

|

||||

--set metricsServer=http://prometheus.istio-system:9090

|

||||

```

|

||||

* Install

|

||||

* [Flagger install on Kubernetes](https://docs.flagger.app/install/flagger-install-on-kubernetes)

|

||||

* [Flagger install on GKE Istio](https://docs.flagger.app/install/flagger-install-on-google-cloud)

|

||||

* [Flagger install on EKS App Mesh](https://docs.flagger.app/install/flagger-install-on-eks-appmesh)

|

||||

* [Flagger install with SuperGloo](https://docs.flagger.app/install/flagger-install-with-supergloo)

|

||||

* How it works

|

||||

* [Canary custom resource](https://docs.flagger.app/how-it-works#canary-custom-resource)

|

||||

* [Routing](https://docs.flagger.app/how-it-works#istio-routing)

|

||||

* [Canary deployment stages](https://docs.flagger.app/how-it-works#canary-deployment)

|

||||

* [Canary analysis](https://docs.flagger.app/how-it-works#canary-analysis)

|

||||

* [HTTP metrics](https://docs.flagger.app/how-it-works#http-metrics)

|

||||

* [Custom metrics](https://docs.flagger.app/how-it-works#custom-metrics)

|

||||

* [Webhooks](https://docs.flagger.app/how-it-works#webhooks)

|

||||

* [Load testing](https://docs.flagger.app/how-it-works#load-testing)

|

||||

* Usage

|

||||

* [Istio canary deployments](https://docs.flagger.app/usage/progressive-delivery)

|

||||

* [Istio A/B testing](https://docs.flagger.app/usage/ab-testing)

|

||||

* [App Mesh canary deployments](https://docs.flagger.app/usage/appmesh-progressive-delivery)

|

||||

* [Monitoring](https://docs.flagger.app/usage/monitoring)

|

||||

* [Alerting](https://docs.flagger.app/usage/alerting)

|

||||

* Tutorials

|

||||

* [Canary deployments with Helm charts and Weave Flux](https://docs.flagger.app/tutorials/canary-helm-gitops)

|

||||

|

||||

Flagger is compatible with Kubernetes >1.11.0 and Istio >1.0.0.

|

||||

## Canary CRD

|

||||

|

||||

### Usage

|

||||

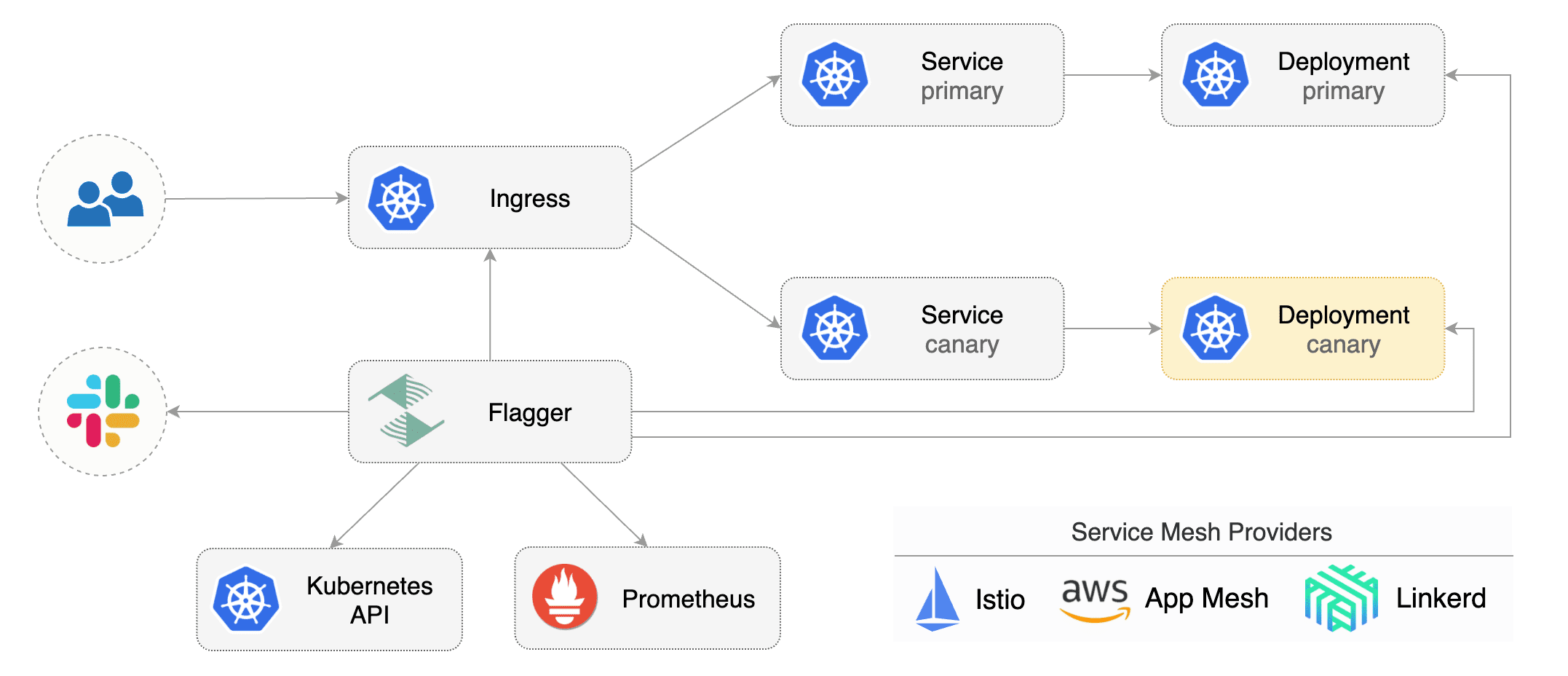

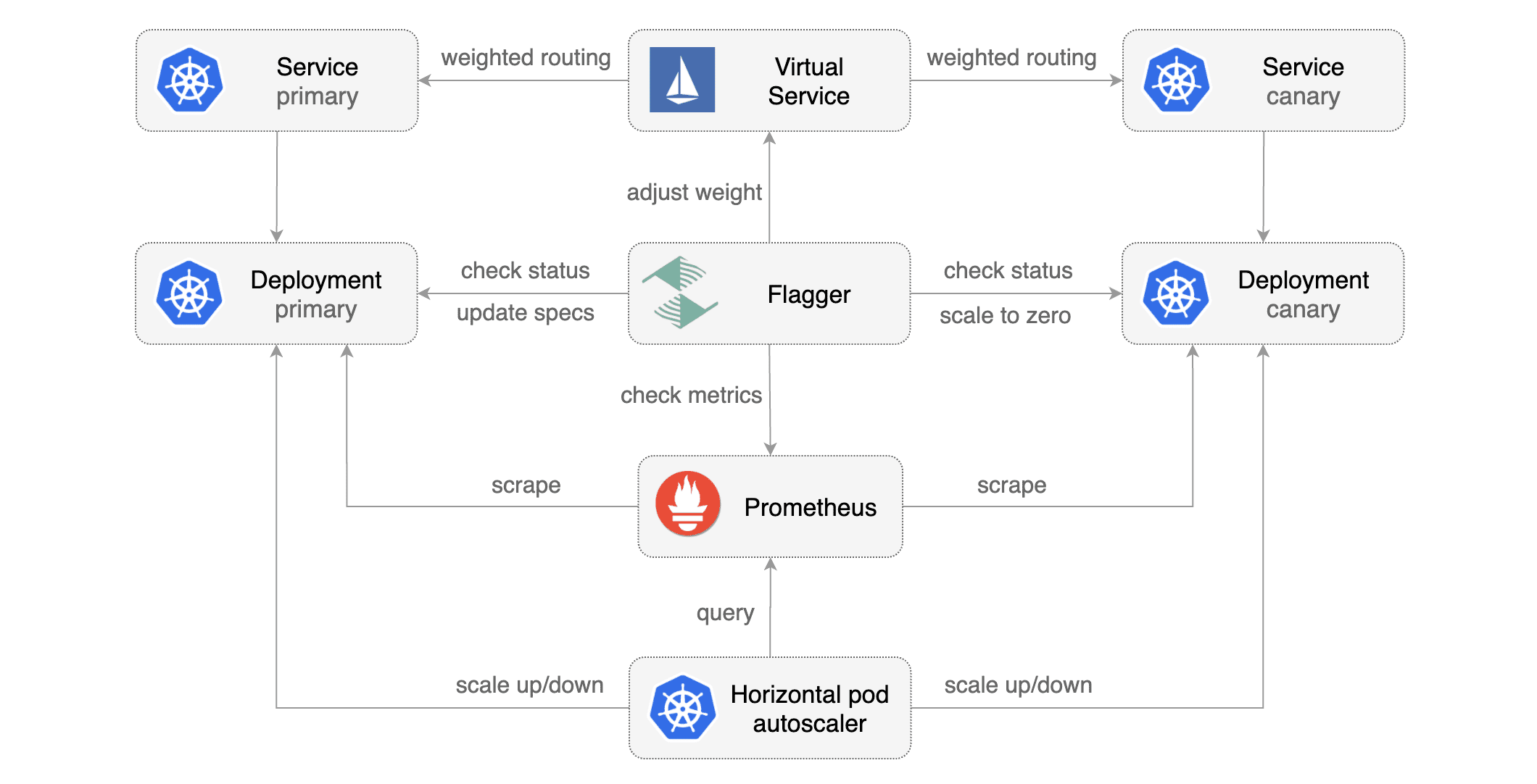

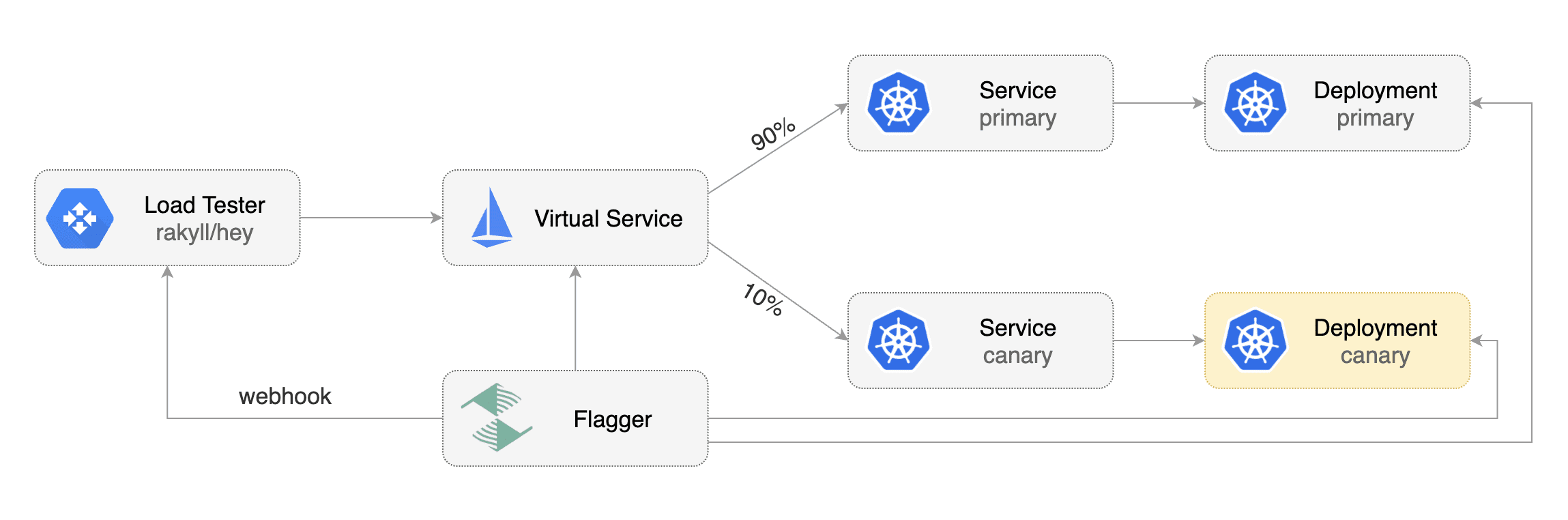

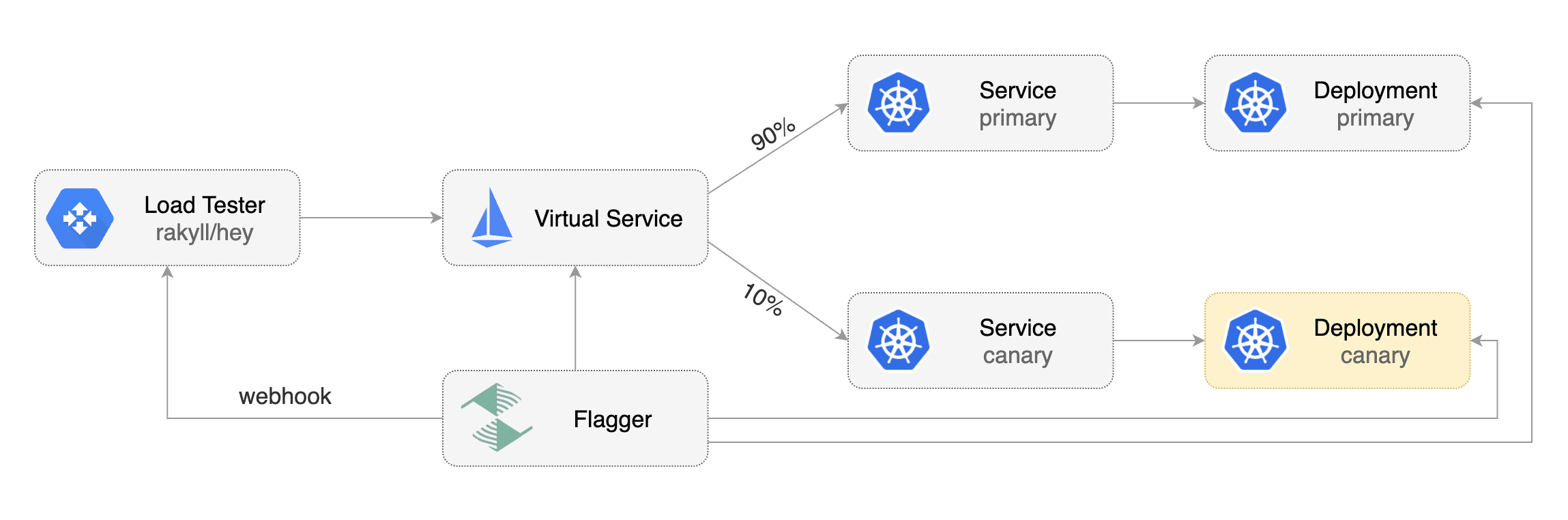

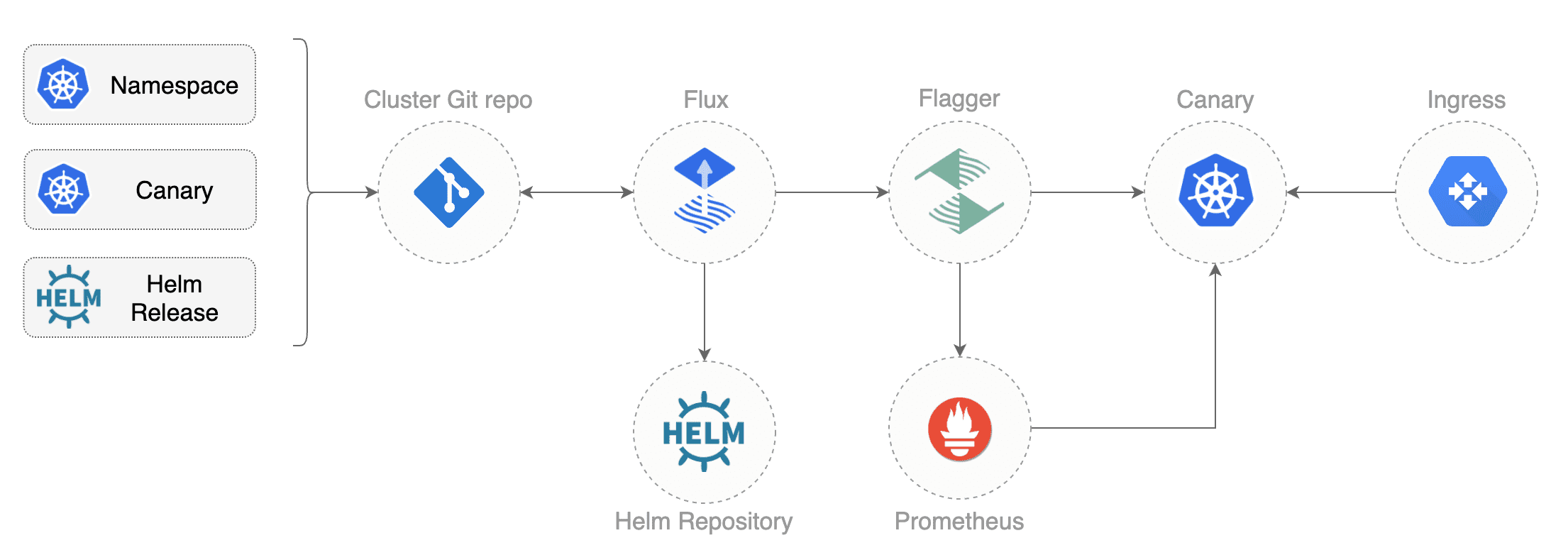

Flagger takes a Kubernetes deployment and optionally a horizontal pod autoscaler (HPA),

|

||||

then creates a series of objects (Kubernetes deployments, ClusterIP services and Istio or App Mesh virtual services).

|

||||

These objects expose the application on the mesh and drive the canary analysis and promotion.

|

||||

|

||||

Flagger takes a Kubernetes deployment and creates a series of objects

|

||||

(Kubernetes [deployments](https://kubernetes.io/docs/concepts/workloads/controllers/deployment/),

|

||||

ClusterIP [services](https://kubernetes.io/docs/concepts/services-networking/service/) and

|

||||

Istio [virtual services](https://istio.io/docs/reference/config/istio.networking.v1alpha3/#VirtualService))

|

||||

to drive the canary analysis and promotion.

|

||||

|

||||

Flagger keeps track of ConfigMaps and Secrets referenced by a Kubernetes Deployment and triggers a canary analysis if any of those objects change.

|

||||

Flagger keeps track of ConfigMaps and Secrets referenced by a Kubernetes Deployment and triggers a canary analysis if any of those objects change.

|

||||

When promoting a workload in production, both code (container images) and configuration (config maps and secrets) are being synchronised.

|

||||

|

||||

|

||||

|

||||

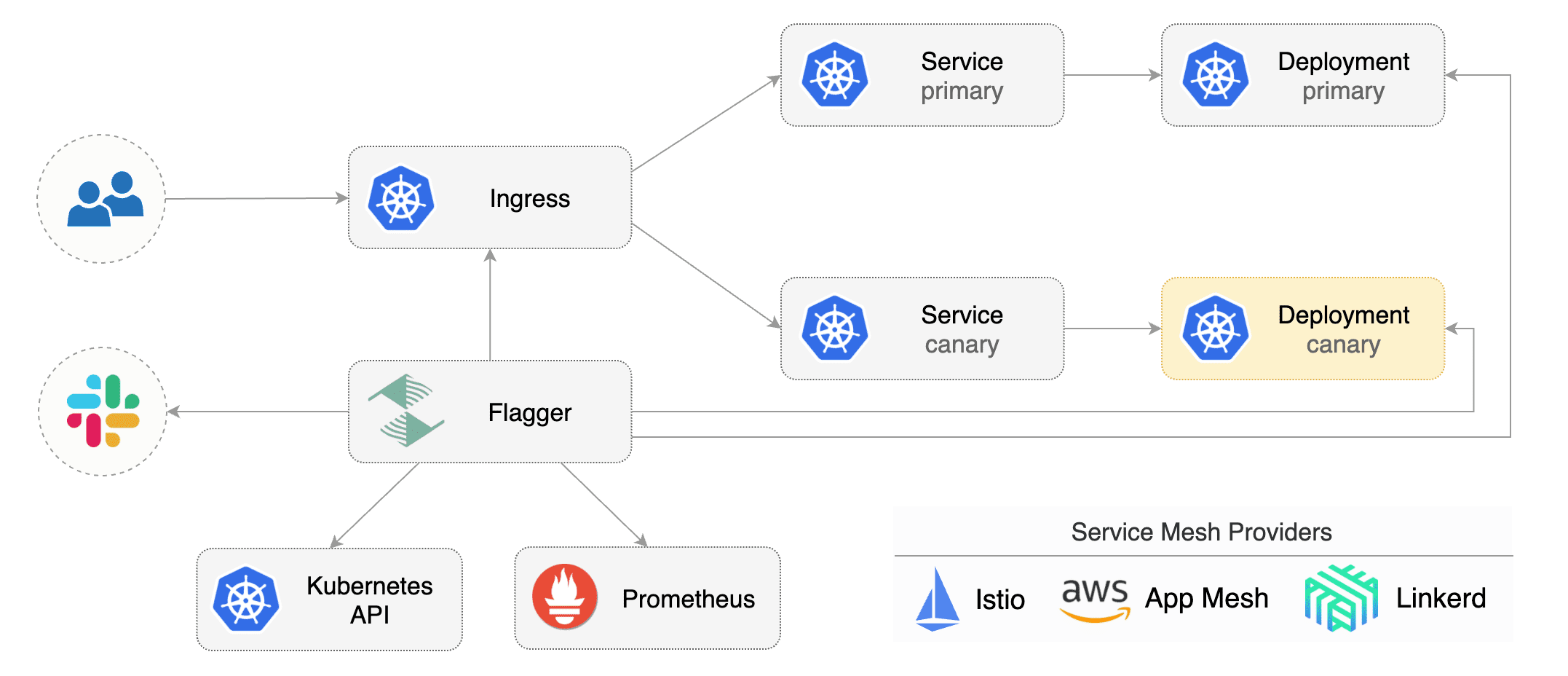

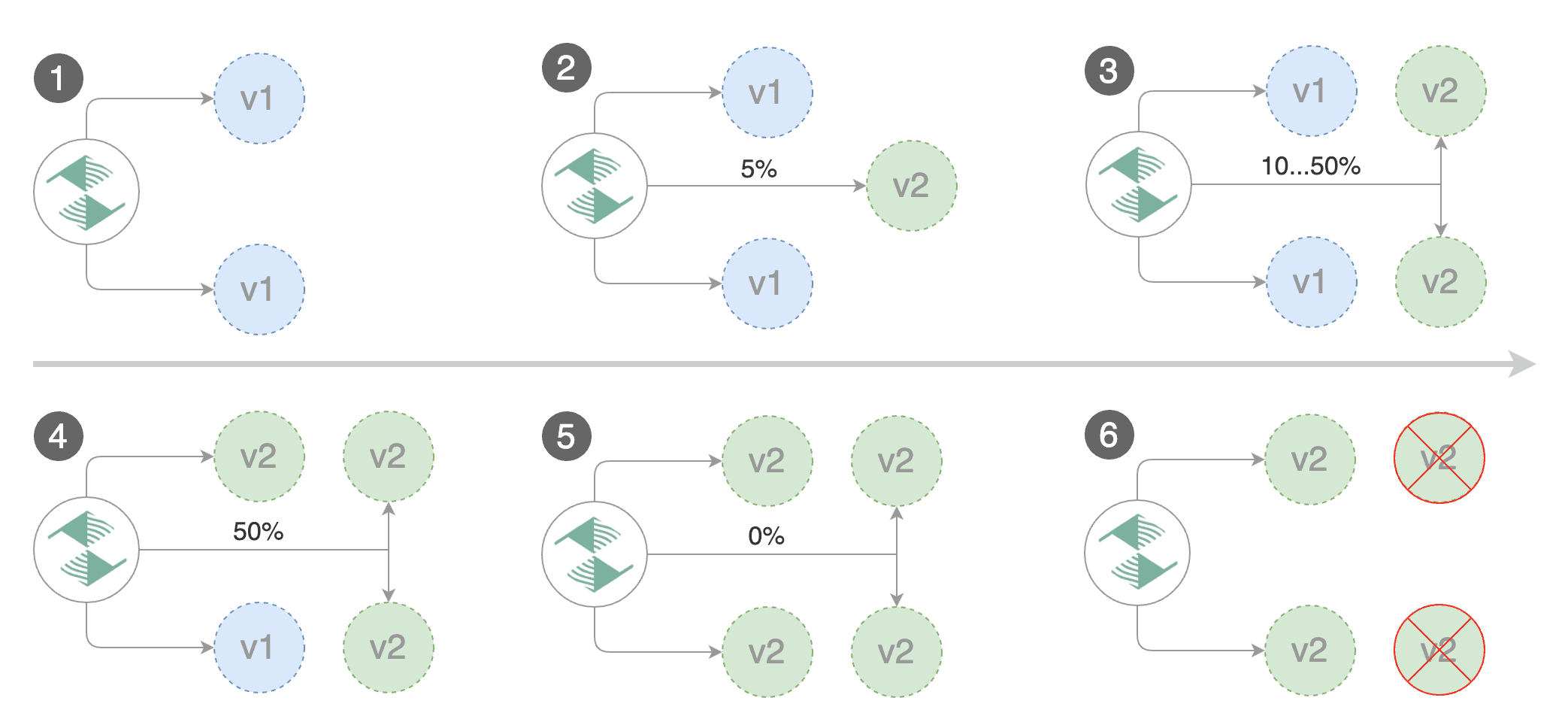

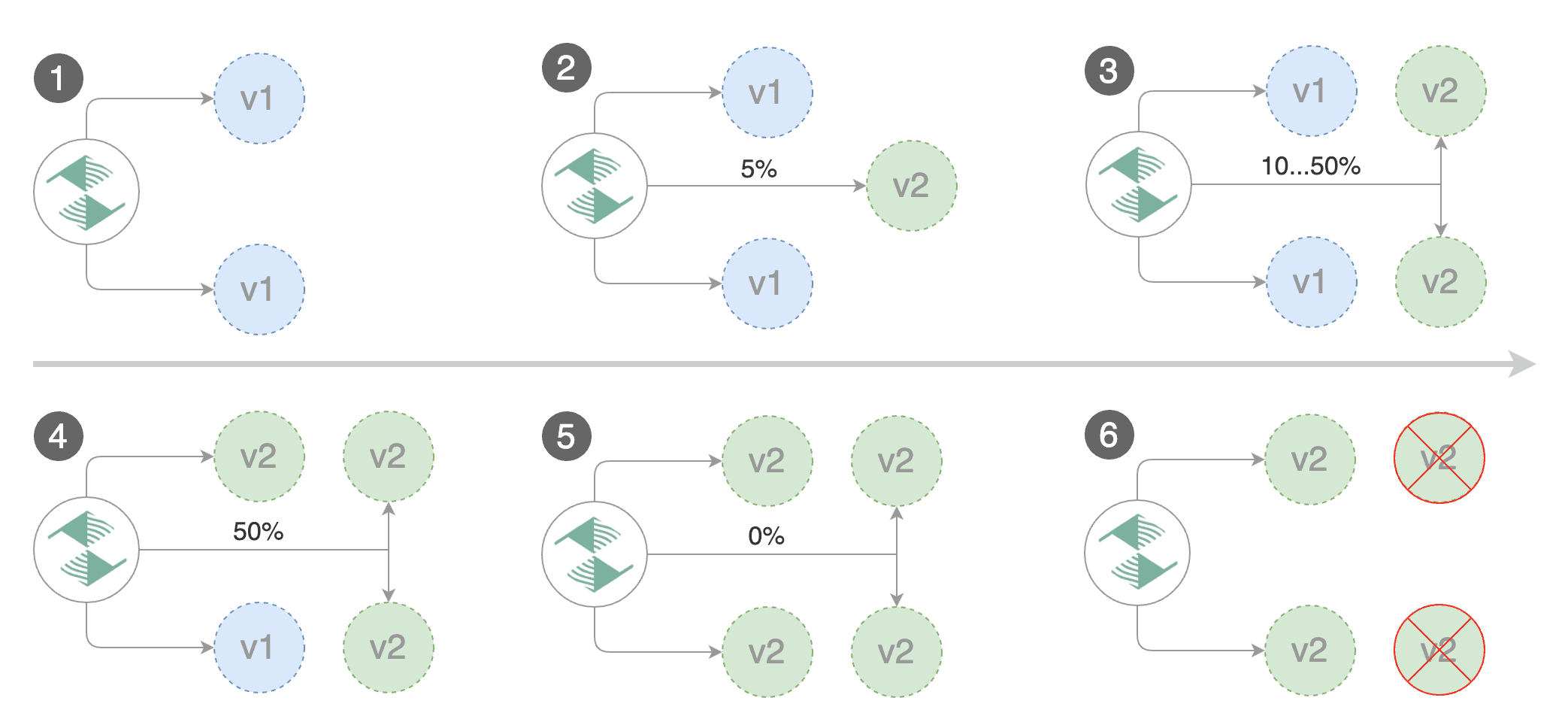

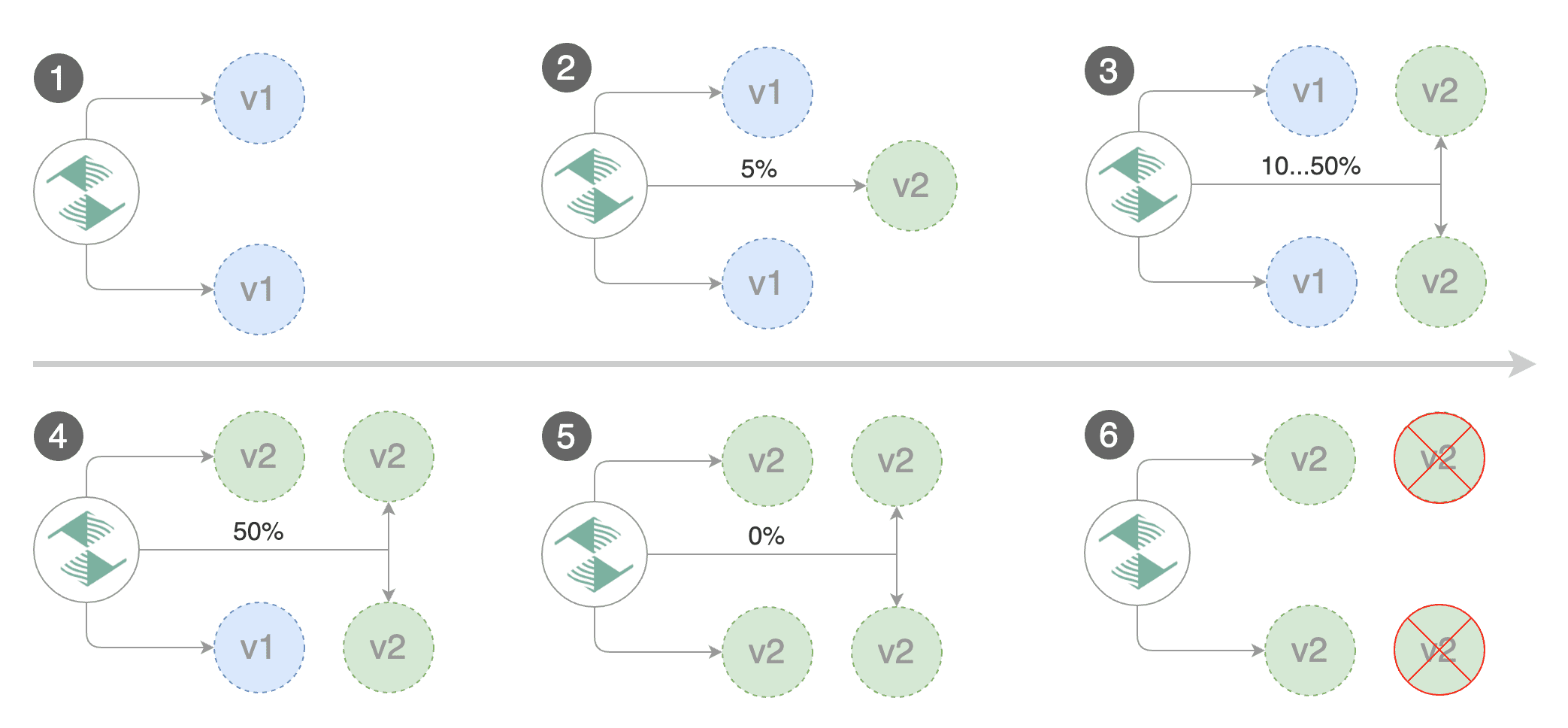

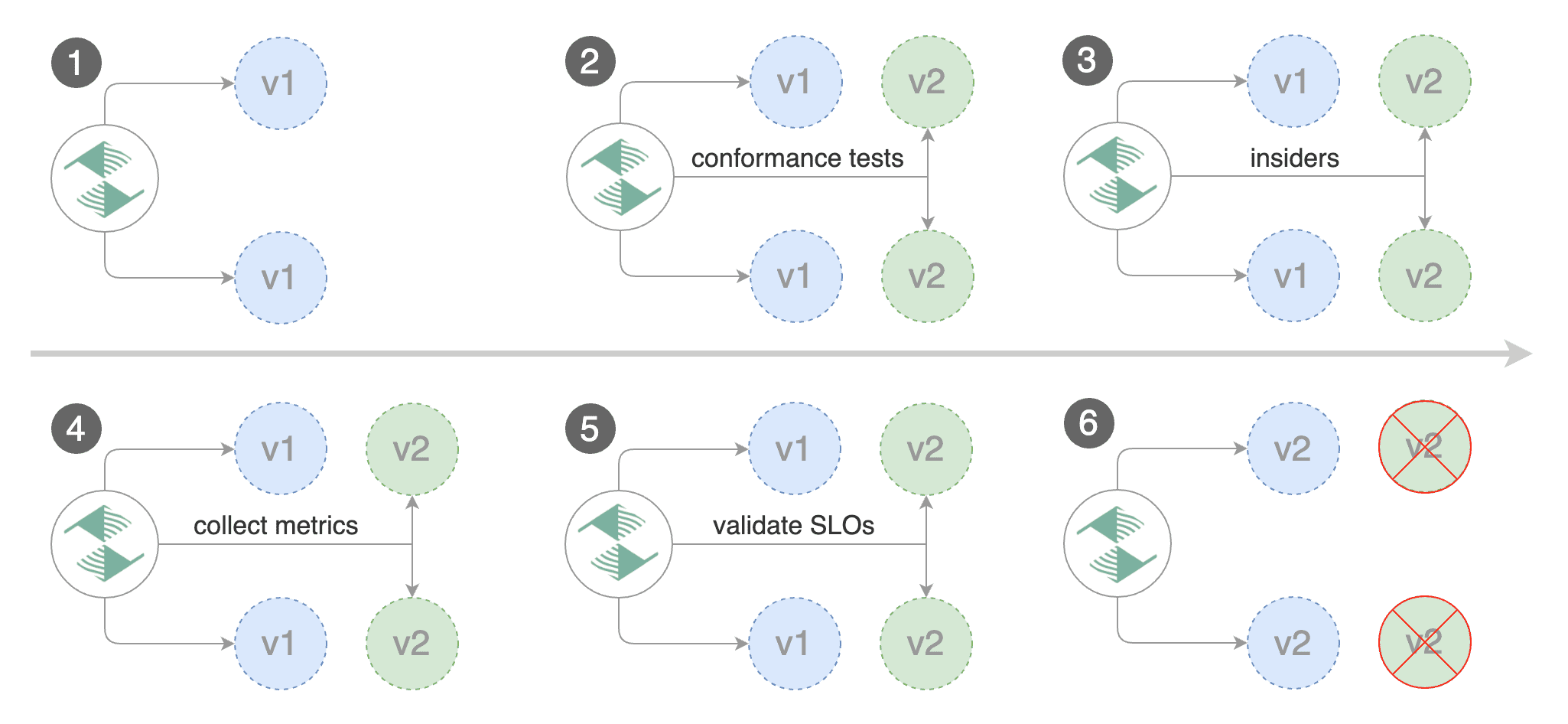

Gated canary promotion stages:

|

||||

|

||||

* scan for canary deployments

|

||||

* check Istio virtual service routes are mapped to primary and canary ClusterIP services

|

||||

* check primary and canary deployments status

|

||||

* halt advancement if a rolling update is underway

|

||||

* halt advancement if pods are unhealthy

|

||||

* increase canary traffic weight percentage from 0% to 5% (step weight)

|

||||

* call webhooks and check results

|

||||

* check canary HTTP request success rate and latency

|

||||

* halt advancement if any metric is under the specified threshold

|

||||

* increment the failed checks counter

|

||||

* check if the number of failed checks reached the threshold

|

||||

* route all traffic to primary

|

||||

* scale to zero the canary deployment and mark it as failed

|

||||

* wait for the canary deployment to be updated and start over

|

||||

* increase canary traffic weight by 5% (step weight) till it reaches 50% (max weight)

|

||||

* halt advancement while canary request success rate is under the threshold

|

||||

* halt advancement while canary request duration P99 is over the threshold

|

||||

* halt advancement if the primary or canary deployment becomes unhealthy

|

||||

* halt advancement while canary deployment is being scaled up/down by HPA

|

||||

* promote canary to primary

|

||||

* copy ConfigMaps and Secrets from canary to primary

|

||||

* copy canary deployment spec template over primary

|

||||

* wait for primary rolling update to finish

|

||||

* halt advancement if pods are unhealthy

|

||||

* route all traffic to primary

|

||||

* scale to zero the canary deployment

|

||||

* mark rollout as finished

|

||||

* wait for the canary deployment to be updated and start over
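The gated stages above can be sketched as a small simulation. This is an illustrative Python model, not Flagger's implementation: `run_canary` and the `check_metrics` callback are hypothetical names, and the defaults (step weight 5, max weight 50, failed checks threshold 10) are assumptions that mirror the example numbers in the list.

```python
from typing import Callable, List, Tuple


def run_canary(
    check_metrics: Callable[[int], bool],
    step_weight: int = 5,
    max_weight: int = 50,
    threshold: int = 10,
) -> Tuple[str, List[int]]:
    """Simulate the traffic weight progression of one canary analysis.

    check_metrics(weight) is a hypothetical callback that returns True when
    the success rate and latency checks pass at the given canary weight.
    Returns the final phase and the list of weights that were applied.
    """
    if step_weight <= 0 or threshold <= 0:
        raise ValueError("step_weight and threshold must be positive")

    failed_checks = 0
    weight = step_weight          # first shift: 0% -> step weight
    history = [weight]

    while True:
        if check_metrics(weight):
            if weight >= max_weight:
                # All checks passed at max weight: promote canary to primary.
                return "promoted", history
            weight = min(weight + step_weight, max_weight)
            history.append(weight)
        else:
            # Halt advancement and count the failed check.
            failed_checks += 1
            if failed_checks >= threshold:
                # Route all traffic to primary and mark the canary as failed.
                return "failed", history


if __name__ == "__main__":
    print(run_canary(lambda weight: True))
    print(run_canary(lambda weight: weight < 20))
```

In this model a failing check never lowers the weight; it only pauses the advance until either the checks recover or the failed checks counter reaches the threshold.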

|

||||

|

||||

For a deployment named _podinfo_, a canary promotion can be defined using Flagger's custom resource:

|

||||

|

||||

```yaml

|

||||

@@ -102,9 +81,31 @@ spec:

|

||||

# Istio gateways (optional)

|

||||

gateways:

|

||||

- public-gateway.istio-system.svc.cluster.local

|

||||

- mesh

|

||||

# Istio virtual service host names (optional)

|

||||

hosts:

|

||||

- podinfo.example.com

|

||||

# HTTP match conditions (optional)

|

||||

match:

|

||||

- uri:

|

||||

prefix: /

|

||||

# HTTP rewrite (optional)

|

||||

rewrite:

|

||||

uri: /

|

||||

# Envoy timeout and retry policy (optional)

|

||||

headers:

|

||||

request:

|

||||

add:

|

||||

x-envoy-upstream-rq-timeout-ms: "15000"

|

||||

x-envoy-max-retries: "10"

|

||||

x-envoy-retry-on: "gateway-error,connect-failure,refused-stream"

|

||||

# cross-origin resource sharing policy (optional)

|

||||

corsPolicy:

|

||||

allowOrigin:

|

||||

- example.com

|

||||

# promote the canary without analysing it (default false)

|

||||

skipAnalysis: false

|

||||

# define the canary analysis timing and KPIs

|

||||

canaryAnalysis:

|

||||

# schedule interval (default 60s)

|

||||

interval: 1m

|

||||

@@ -118,16 +119,27 @@ spec:

|

||||

stepWeight: 5

|

||||

# Istio Prometheus checks

|

||||

metrics:

|

||||

- name: istio_requests_total

|

||||

# builtin checks

|

||||

- name: request-success-rate

|

||||

# minimum req success rate (non 5xx responses)

|

||||

# percentage (0-100)

|

||||

threshold: 99

|

||||

interval: 1m

|

||||

- name: istio_request_duration_seconds_bucket

|

||||

- name: request-duration

|

||||

# maximum req duration P99

|

||||

# milliseconds

|

||||

threshold: 500

|

||||

interval: 30s

|

||||

# custom check

|

||||

- name: "kafka lag"

|

||||

threshold: 100

|

||||

query: |

|

||||

avg_over_time(

|

||||

kafka_consumergroup_lag{

|

||||

consumergroup=~"podinfo-consumer-.*",

|

||||

topic="podinfo"

|

||||

}[1m]

|

||||

)

|

||||

# external checks (optional)

|

||||

webhooks:

|

||||

- name: load-test

|

||||

@@ -137,322 +149,47 @@ spec:

|

||||

cmd: "hey -z 1m -q 10 -c 2 http://podinfo.test:9898/"

|

||||

```

|

||||

|

||||

The canary analysis is using the following promql queries:

|

||||

|

||||

_HTTP requests success rate percentage_

|

||||

For more details on how the canary analysis and promotion works please [read the docs](https://docs.flagger.app/how-it-works).

|

||||

|

||||

```sql

|

||||

sum(

|

||||

rate(

|

||||

istio_requests_total{

|

||||

reporter="destination",

|

||||

destination_workload_namespace=~"$namespace",

|

||||

destination_workload=~"$workload",

|

||||

response_code!~"5.*"

|

||||

}[$interval]

|

||||

)

|

||||

)

|

||||

/

|

||||

sum(

|

||||

rate(

|

||||

istio_requests_total{

|

||||

reporter="destination",

|

||||

destination_workload_namespace=~"$namespace",

|

||||

destination_workload=~"$workload"

|

||||

}[$interval]

|

||||

)

|

||||

)

|

||||

```
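As a rough illustration of what the success rate query computes, the sketch below applies the same filter (`response_code!~"5.*"`) to a plain dictionary of per-code request rates. The function name and the input shape are assumptions for this example, and the result is scaled to a percentage so it can be compared with a 0-100 threshold such as `99`.

```python
import re
from typing import Mapping


def success_rate_percent(rates_by_code: Mapping[str, float]) -> float:
    """Return the share of requests whose response code is not 5xx.

    rates_by_code maps a response code (as a string label) to its
    request rate, standing in for the per-series results of rate(...).
    """
    total = sum(rates_by_code.values())
    if total == 0:
        raise ValueError("no traffic: success rate is undefined")
    # Prometheus regex matchers are fully anchored, hence fullmatch.
    ok = sum(
        rate
        for code, rate in rates_by_code.items()
        if not re.fullmatch(r"5.*", code)
    )
    return 100.0 * ok / total


if __name__ == "__main__":
    print(success_rate_percent({"200": 95.0, "404": 4.0, "500": 1.0}))
```

Note that 4xx responses count as successful here, exactly as in the query, because only codes starting with `5` are excluded from the numerator.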

|

||||

## Features

|

||||

|

||||

_HTTP requests milliseconds duration P99_

|

||||

| Feature | Istio | App Mesh | SuperGloo |

|

||||

| -------------------------------------------- | ------------------ | ------------------ |------------------ |

|

||||

| Canary deployments (weighted traffic) | :heavy_check_mark: | :heavy_check_mark: | :heavy_check_mark: |

|

||||

| A/B testing (headers and cookies filters) | :heavy_check_mark: | :heavy_minus_sign: | :heavy_minus_sign: |

|

||||

| Load testing | :heavy_check_mark: | :heavy_check_mark: | :heavy_check_mark: |

|

||||

| Webhooks (custom acceptance tests) | :heavy_check_mark: | :heavy_check_mark: | :heavy_check_mark: |

|

||||

| Request success rate check (Envoy metric) | :heavy_check_mark: | :heavy_check_mark: | :heavy_check_mark: |

|

||||

| Request duration check (Envoy metric) | :heavy_check_mark: | :heavy_minus_sign: | :heavy_check_mark: |

|

||||

| Custom promql checks | :heavy_check_mark: | :heavy_check_mark: | :heavy_check_mark: |

|

||||

| Ingress gateway (CORS, retries and timeouts) | :heavy_check_mark: | :heavy_minus_sign: | :heavy_check_mark: |

|

||||

|

||||

```sql

|

||||

histogram_quantile(0.99,

|

||||

sum(

|

||||

irate(

|

||||

istio_request_duration_seconds_bucket{

|

||||

reporter="destination",

|

||||

destination_workload=~"$workload",

|

||||

destination_workload_namespace=~"$namespace"

|

||||

}[$interval]

|

||||

)

|

||||

) by (le)

|

||||

)

|

||||

```
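To make the P99 query easier to reason about, here is a minimal Python sketch of the linear interpolation that `histogram_quantile` performs over cumulative buckets. It is a simplified model written for this example, not Prometheus code; the bucket list format is an assumption, and the lowest bucket is assumed to start at 0.

```python
import math
from typing import List, Tuple


def histogram_quantile(q: float, buckets: List[Tuple[float, float]]) -> float:
    """Estimate the q-quantile from cumulative histogram buckets.

    buckets holds (upper bound `le`, cumulative count) pairs and must
    include the +Inf bucket, as Prometheus histograms do.
    """
    if not 0 < q <= 1:
        raise ValueError("q must be in (0, 1]")
    buckets = sorted(buckets)
    if len(buckets) < 2 or not math.isinf(buckets[-1][0]):
        return math.nan
    total = buckets[-1][1]
    if total == 0:
        return math.nan

    rank = q * total
    prev_bound, prev_count = 0.0, 0.0
    for bound, count in buckets:
        if count >= rank:
            if math.isinf(bound):
                # Quantile falls in the +Inf bucket: report the highest finite bound.
                return prev_bound
            # Interpolate linearly inside the bucket that contains the rank.
            fraction = (rank - prev_count) / (count - prev_count)
            return prev_bound + (bound - prev_bound) * fraction
        prev_bound, prev_count = bound, count
    return math.nan


if __name__ == "__main__":
    sample = [(0.1, 50), (0.5, 90), (1.0, 100), (math.inf, 100)]
    print(histogram_quantile(0.99, sample))
```

Because the estimate is interpolated within a bucket, its precision depends on how finely the bucket boundaries are spaced around the threshold being checked.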

|

||||

## Roadmap

|

||||

|

||||

The canary analysis can be extended with webhooks.

|

||||

Flagger calls each webhook with an HTTP POST and uses the response status code to decide whether the canary is failing: a 2xx response counts as a pass.

|

||||

|

||||

Webhook payload:

|

||||

|

||||

```json

|

||||

{

|

||||

"name": "podinfo",

|

||||

"namespace": "test",

|

||||

"metadata": {

|

||||

"test": "all",

|

||||

"token": "16688eb5e9f289f1991c"

|

||||

}

|

||||

}

|

||||

```
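A webhook receiver for the payload above could look roughly like the following. This is a minimal sketch using only the Python standard library; `webhook_status`, `always_pass`, `GateHandler`, `serve` and port 8080 are hypothetical names and values chosen for illustration, and the only behaviour taken from the text is that a 2xx response signals a passing check.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer
from typing import Callable


def webhook_status(payload: dict, check: Callable[[str, str], bool]) -> int:
    """Map a webhook payload to the HTTP status code sent back to the caller.

    check(name, namespace) is a hypothetical validation hook, for example
    a wrapper around an acceptance test suite.
    """
    name = payload.get("name")
    namespace = payload.get("namespace")
    if not isinstance(name, str) or not isinstance(namespace, str):
        return 400  # malformed payload
    return 200 if check(name, namespace) else 500


def always_pass(name: str, namespace: str) -> bool:
    """Placeholder check; replace with a real validation."""
    return True


class GateHandler(BaseHTTPRequestHandler):
    def do_POST(self) -> None:
        length = int(self.headers.get("Content-Length", 0))
        try:
            payload = json.loads(self.rfile.read(length) or b"{}")
        except json.JSONDecodeError:
            payload = {}
        if not isinstance(payload, dict):
            payload = {}
        self.send_response(webhook_status(payload, always_pass))
        self.end_headers()


def serve(port: int = 8080) -> None:
    """Start the receiver; blocks until interrupted."""
    HTTPServer(("", port), GateHandler).serve_forever()
```

Keeping the decision in a pure function (`webhook_status`) makes the pass/fail rule testable without starting a server.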

|

||||

|

||||

### Automated canary analysis, promotions and rollbacks

|

||||

|

||||

Create a test namespace with Istio sidecar injection enabled:

|

||||

|

||||

```bash

|

||||

export REPO=https://raw.githubusercontent.com/stefanprodan/flagger/master

|

||||

|

||||

kubectl apply -f ${REPO}/artifacts/namespaces/test.yaml

|

||||

```

|

||||

|

||||

Create a deployment and a horizontal pod autoscaler:

|

||||

|

||||

```bash

|

||||

kubectl apply -f ${REPO}/artifacts/canaries/deployment.yaml

|

||||

kubectl apply -f ${REPO}/artifacts/canaries/hpa.yaml

|

||||

```

|

||||

|

||||

Deploy the load testing service to generate traffic during the canary analysis:

|

||||

|

||||

```bash

|

||||

kubectl -n test apply -f ${REPO}/artifacts/loadtester/deployment.yaml

|

||||

kubectl -n test apply -f ${REPO}/artifacts/loadtester/service.yaml

|

||||

```

|

||||

|

||||

Create a canary promotion custom resource (replace the Istio gateway and the internet domain with your own):

|

||||

|

||||

```bash

|

||||

kubectl apply -f ${REPO}/artifacts/canaries/canary.yaml

|

||||

```

|

||||

|

||||

After a couple of seconds Flagger will create the canary objects:

|

||||

|

||||

```bash

|

||||

# applied

|

||||

deployment.apps/podinfo

|

||||

horizontalpodautoscaler.autoscaling/podinfo

|

||||

canary.flagger.app/podinfo

|

||||

# generated

|

||||

deployment.apps/podinfo-primary

|

||||

horizontalpodautoscaler.autoscaling/podinfo-primary

|

||||

service/podinfo

|

||||

service/podinfo-canary

|

||||

service/podinfo-primary

|

||||

virtualservice.networking.istio.io/podinfo

|

||||

```

|

||||

|

||||

|

||||

|

||||

Trigger a canary deployment by updating the container image:

|

||||

|

||||

```bash

|

||||

kubectl -n test set image deployment/podinfo \

|

||||

podinfod=quay.io/stefanprodan/podinfo:1.4.0

|

||||

```

|

||||

|

||||

**Note** that Flagger tracks changes in the deployment `PodSpec` but also in `ConfigMaps` and `Secrets`

|

||||

that are referenced in the pod's volumes and containers environment variables.

|

||||

|

||||

Flagger detects that the deployment revision changed and starts a new canary analysis:

|

||||

|

||||

```

|

||||

kubectl -n test describe canary/podinfo

|

||||

|

||||

Status:

|

||||

Canary Weight: 0

|

||||

Failed Checks: 0

|

||||

Last Transition Time: 2019-01-16T13:47:16Z

|

||||

Phase: Succeeded

|

||||

Events:

|

||||

Type Reason Age From Message

|

||||

---- ------ ---- ---- -------

|

||||

Normal Synced 3m flagger New revision detected podinfo.test

|

||||

Normal Synced 3m flagger Scaling up podinfo.test

|

||||

Warning Synced 3m flagger Waiting for podinfo.test rollout to finish: 0 of 1 updated replicas are available

|

||||

Normal Synced 3m flagger Advance podinfo.test canary weight 5

|

||||

Normal Synced 3m flagger Advance podinfo.test canary weight 10

|

||||

Normal Synced 3m flagger Advance podinfo.test canary weight 15

|

||||

Normal Synced 2m flagger Advance podinfo.test canary weight 20

|

||||

Normal Synced 2m flagger Advance podinfo.test canary weight 25

|

||||

Normal Synced 1m flagger Advance podinfo.test canary weight 30

|

||||

Normal Synced 1m flagger Advance podinfo.test canary weight 35

|

||||

Normal Synced 55s flagger Advance podinfo.test canary weight 40

|

||||

Normal Synced 45s flagger Advance podinfo.test canary weight 45

|

||||

Normal Synced 35s flagger Advance podinfo.test canary weight 50

|

||||

Normal Synced 25s flagger Copying podinfo.test template spec to podinfo-primary.test

|

||||

Warning Synced 15s flagger Waiting for podinfo-primary.test rollout to finish: 1 of 2 updated replicas are available

|

||||

Normal Synced 5s flagger Promotion completed! Scaling down podinfo.test

|

||||

```

|

||||

|

||||

You can monitor all canaries with:

|

||||

|

||||

```bash

|

||||

watch kubectl get canaries --all-namespaces

|

||||

|

||||

NAMESPACE NAME STATUS WEIGHT LASTTRANSITIONTIME

|

||||

test podinfo Progressing 5 2019-01-16T14:05:07Z

|

||||

```

|

||||

|

||||

During the canary analysis you can generate HTTP 500 errors and high latency to test if Flagger pauses the rollout.

|

||||

|

||||

Create a tester pod and exec into it:

|

||||

|

||||

```bash

|

||||

kubectl -n test run tester --image=quay.io/stefanprodan/podinfo:1.2.1 -- ./podinfo --port=9898

|

||||

kubectl -n test exec -it tester-xx-xx sh

|

||||

```

|

||||

|

||||

Generate HTTP 500 errors:

|

||||

|

||||

```bash

|

||||

watch curl http://podinfo-canary:9898/status/500

|

||||

```

|

||||

|

||||

Generate latency:

|

||||

|

||||

```bash

|

||||

watch curl http://podinfo-canary:9898/delay/1

|

||||

```

|

||||

|

||||

When the number of failed checks reaches the canary analysis threshold, the traffic is routed back to the primary,

|

||||

the canary is scaled to zero and the rollout is marked as failed.

|

||||

|

||||

```

|

||||

kubectl -n test describe canary/podinfo

|

||||

|

||||

Status:

|

||||

Canary Weight: 0

|

||||

Failed Checks: 10

|

||||

Last Transition Time: 2019-01-16T13:47:16Z

|

||||

Phase: Failed

|

||||

Events:

|

||||

Type Reason Age From Message

|

||||

---- ------ ---- ---- -------

|

||||

Normal Synced 3m flagger Starting canary deployment for podinfo.test

|

||||

Normal Synced 3m flagger Advance podinfo.test canary weight 5

|

||||

Normal Synced 3m flagger Advance podinfo.test canary weight 10

|

||||

Normal Synced 3m flagger Advance podinfo.test canary weight 15

|

||||

Normal Synced 3m flagger Halt podinfo.test advancement success rate 69.17% < 99%

|

||||

Normal Synced 2m flagger Halt podinfo.test advancement success rate 61.39% < 99%

|

||||

Normal Synced 2m flagger Halt podinfo.test advancement success rate 55.06% < 99%

|

||||

Normal Synced 2m flagger Halt podinfo.test advancement success rate 47.00% < 99%

|

||||

Normal Synced 2m flagger (combined from similar events): Halt podinfo.test advancement success rate 38.08% < 99%

|

||||

Warning Synced 1m flagger Rolling back podinfo.test failed checks threshold reached 10

|

||||

Warning Synced 1m flagger Canary failed! Scaling down podinfo.test

|

||||

```

|

||||

|

||||

**Note** that if you apply new changes to the deployment during the canary analysis, Flagger will restart the analysis.

|

||||

|

||||

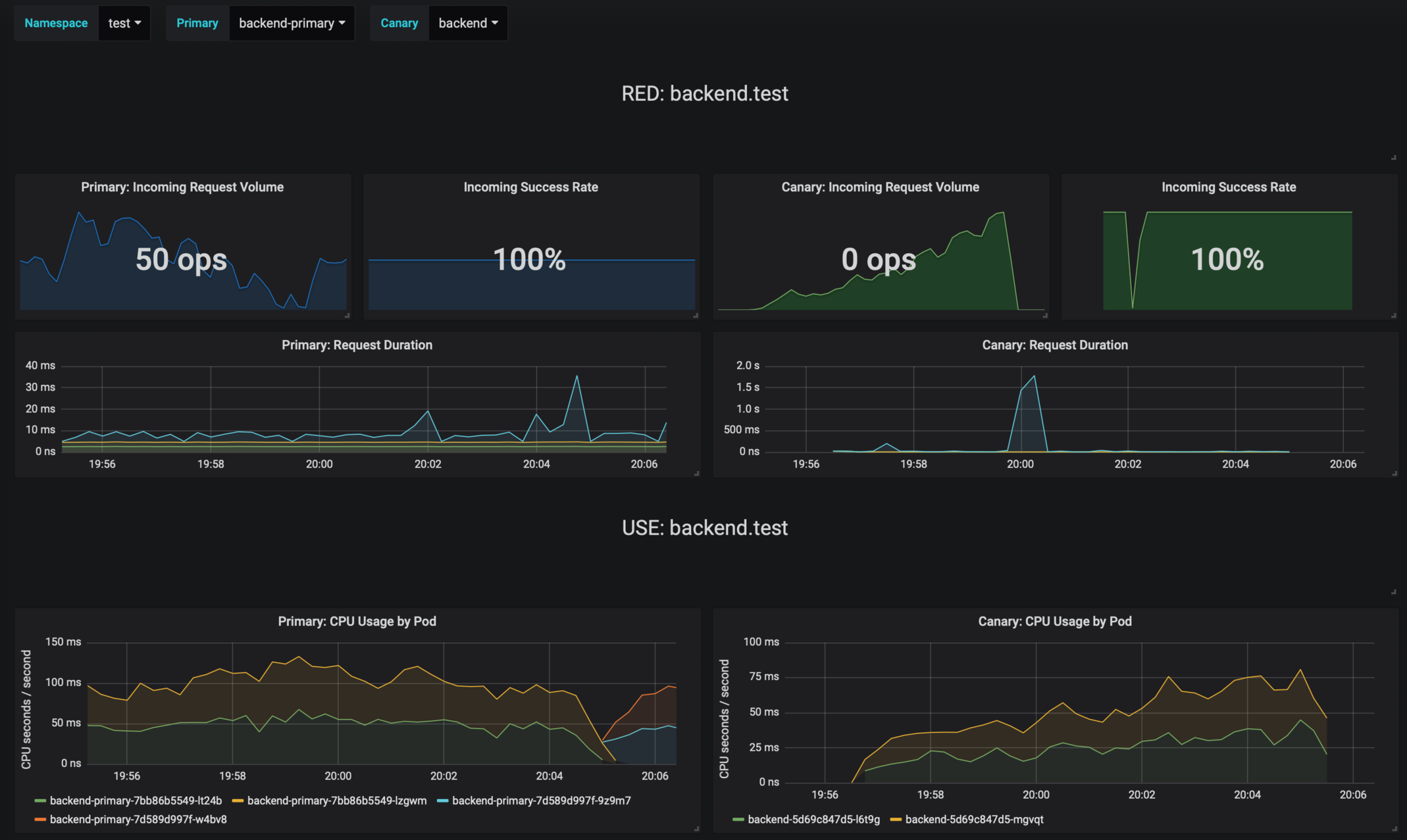

### Monitoring

|

||||

|

||||

Flagger comes with a Grafana dashboard made for canary analysis.

|

||||

|

||||

Install Grafana with Helm:

|

||||

|

||||

```bash

|

||||

helm upgrade -i flagger-grafana flagger/grafana \

|

||||

--namespace=istio-system \

|

||||

--set url=http://prometheus.istio-system:9090

|

||||

```

|

||||

|

||||

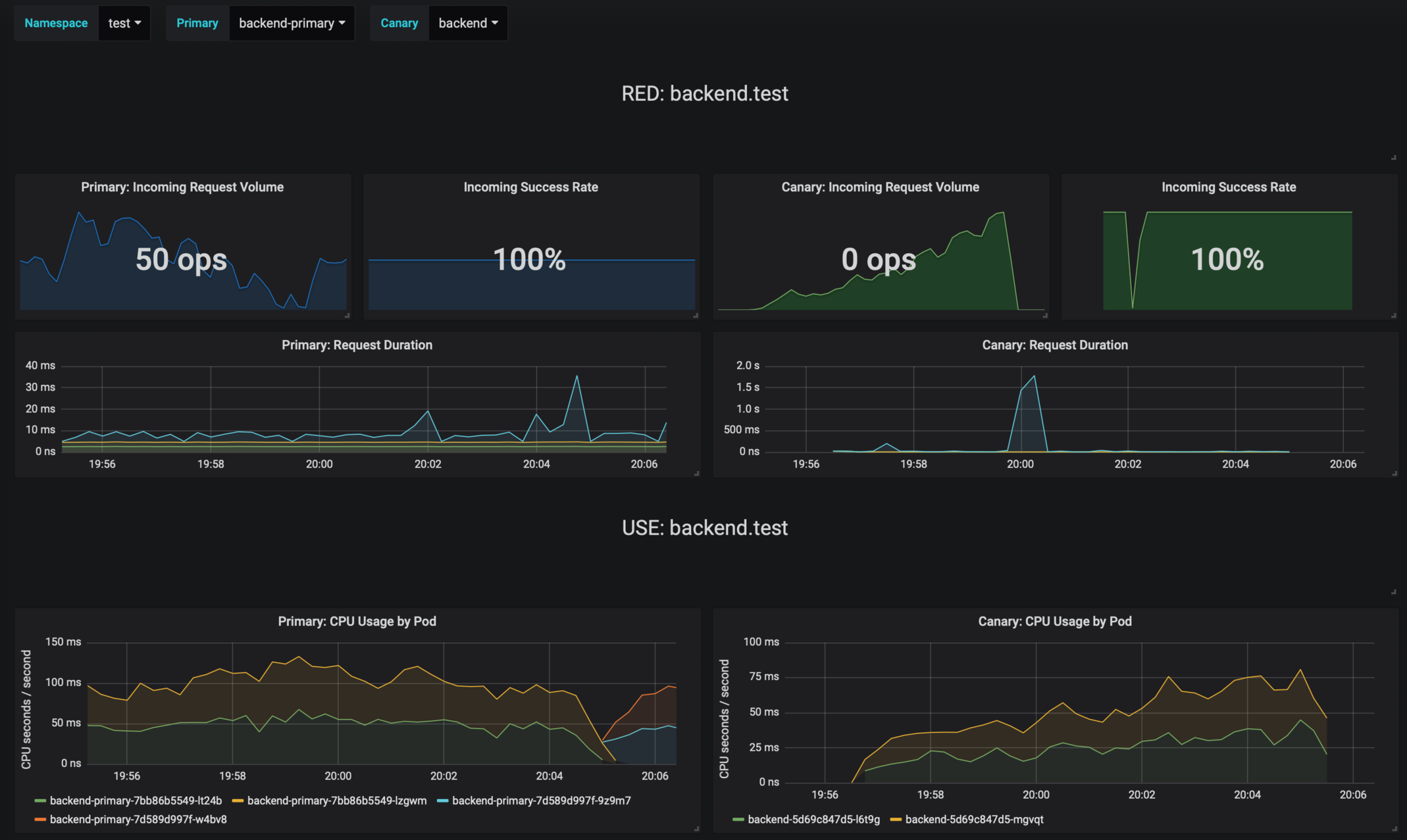

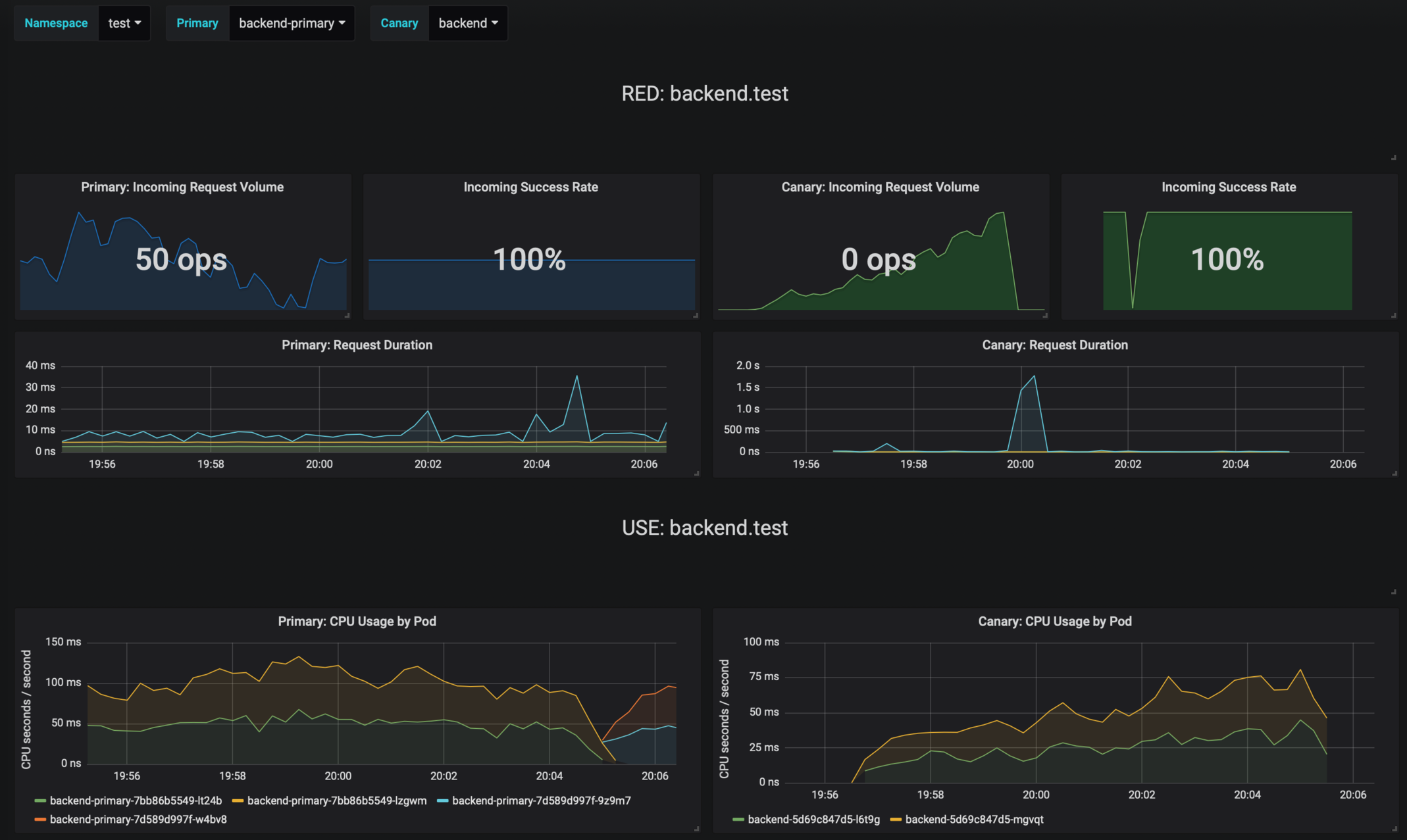

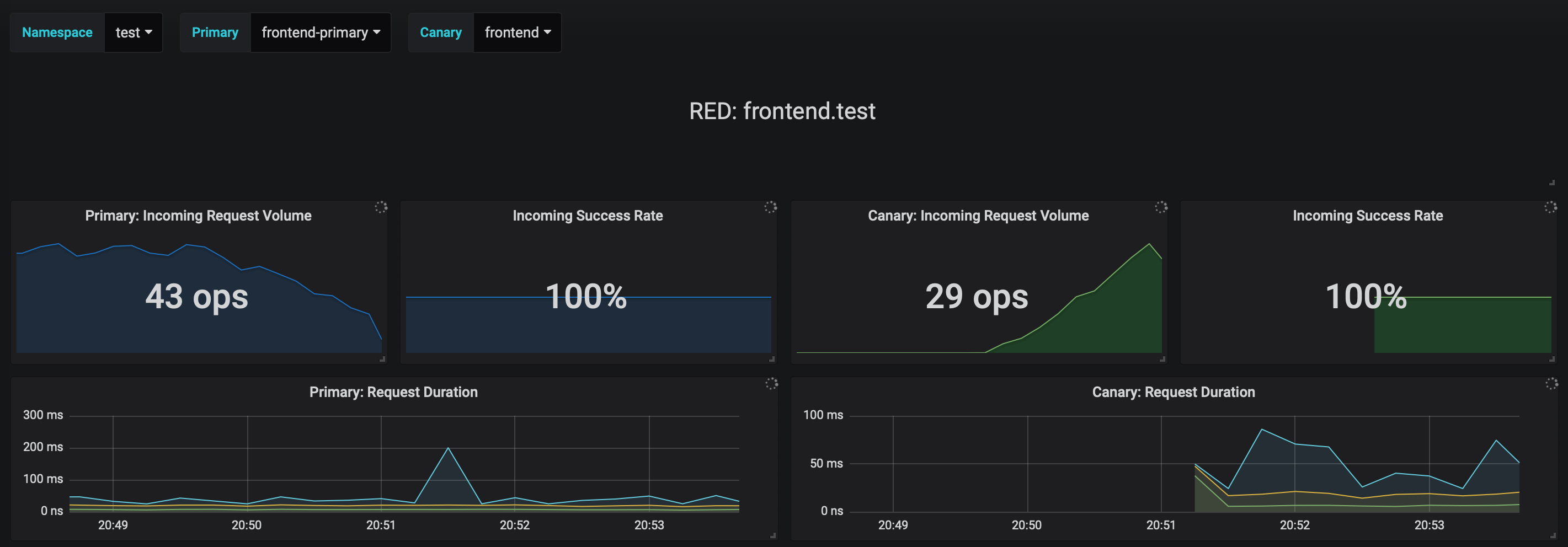

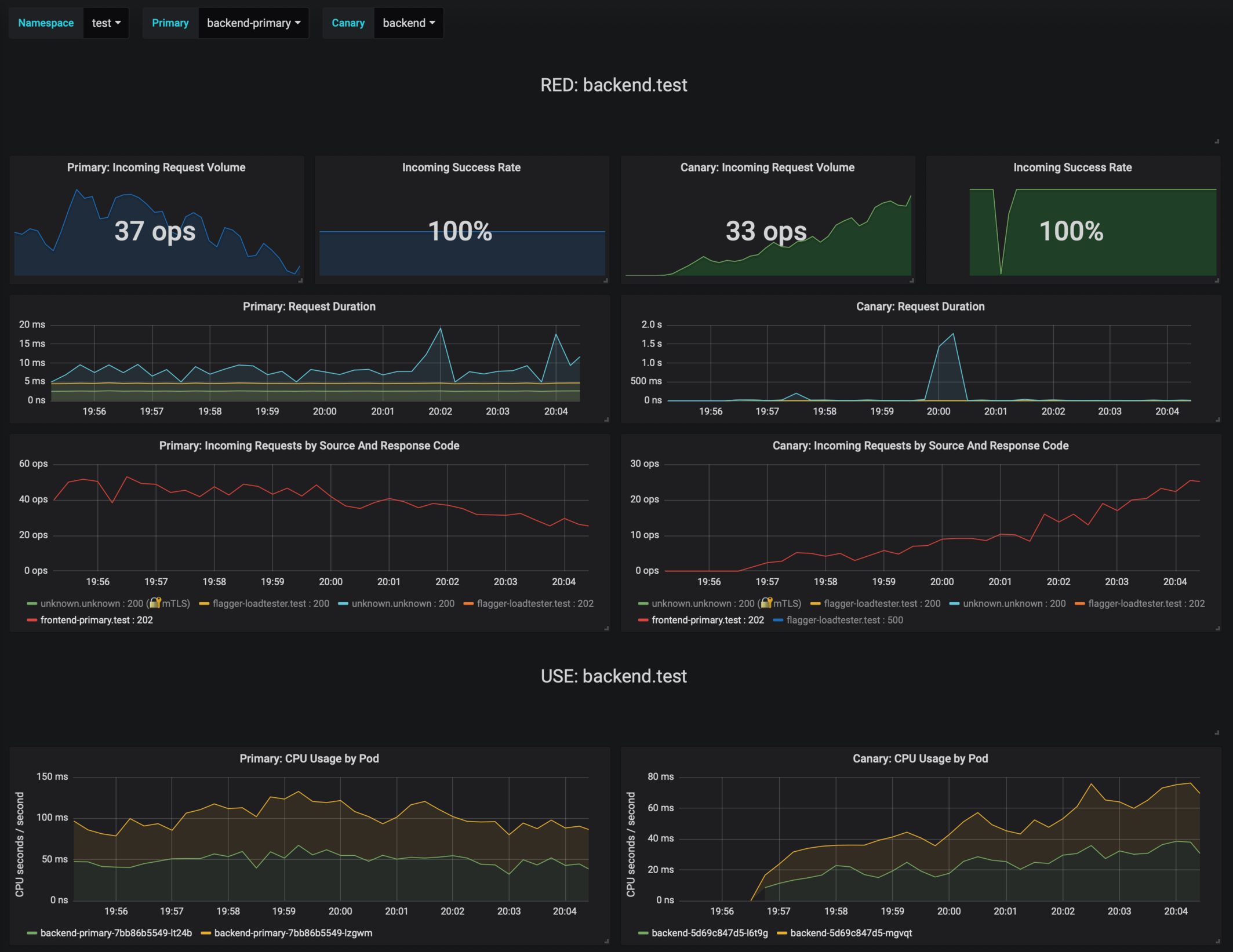

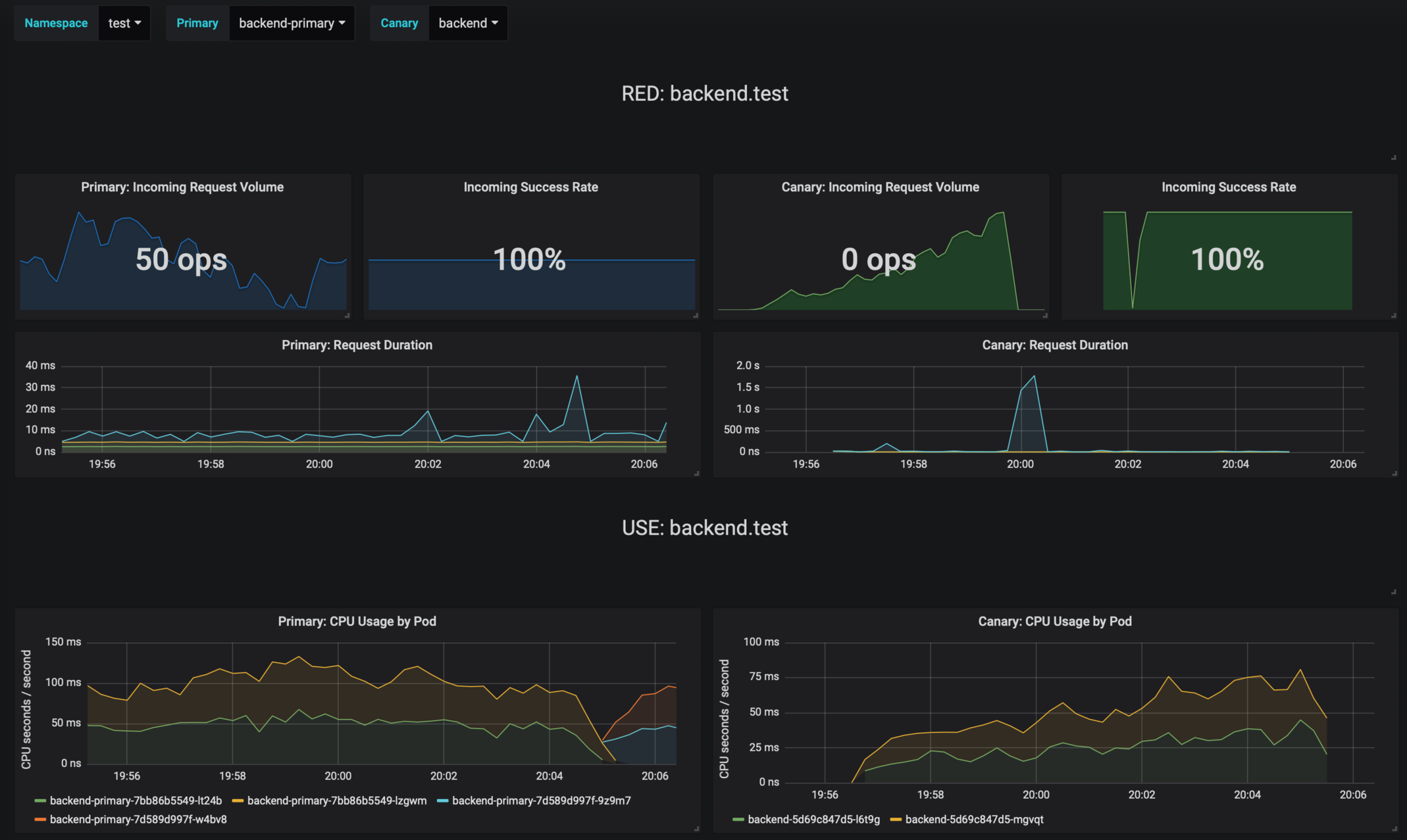

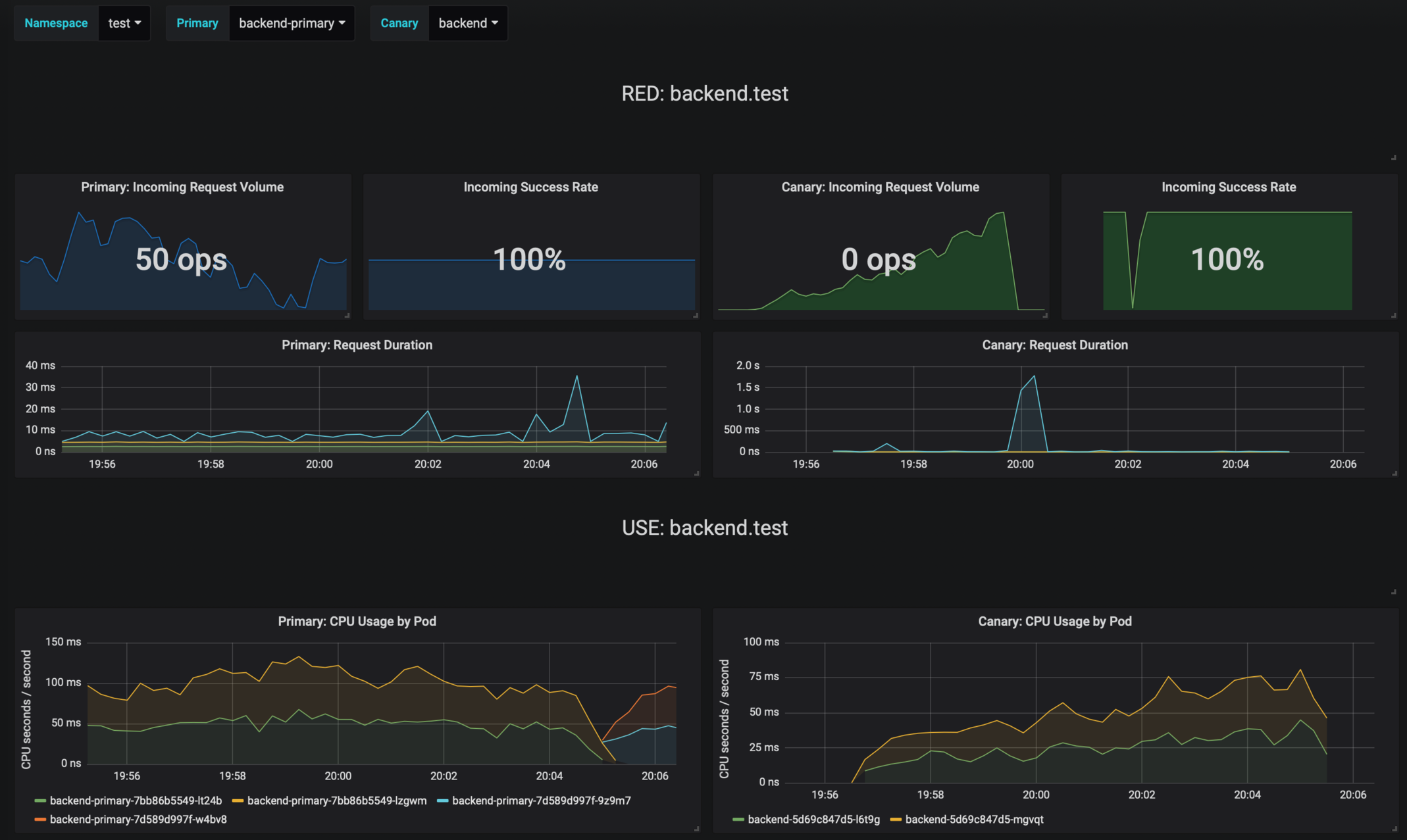

The dashboard shows the RED and USE metrics for the primary and canary workloads:

|

||||

|

||||

|

||||

|

||||

The canary errors and latency spikes have been recorded as Kubernetes events and logged by Flagger in json format:

|

||||

|

||||

```

|

||||

kubectl -n istio-system logs deployment/flagger --tail=100 | jq .msg

|

||||

|

||||

Starting canary deployment for podinfo.test

|

||||

Advance podinfo.test canary weight 5

|

||||

Advance podinfo.test canary weight 10

|

||||

Advance podinfo.test canary weight 15

|

||||

Advance podinfo.test canary weight 20

|

||||

Advance podinfo.test canary weight 25

|

||||

Advance podinfo.test canary weight 30

|

||||

Advance podinfo.test canary weight 35

|

||||

Halt podinfo.test advancement success rate 98.69% < 99%

|

||||

Advance podinfo.test canary weight 40

|

||||

Halt podinfo.test advancement request duration 1.515s > 500ms

|

||||

Advance podinfo.test canary weight 45

|

||||

Advance podinfo.test canary weight 50

|

||||

Copying podinfo.test template spec to podinfo-primary.test

|

||||

Halt podinfo-primary.test advancement waiting for rollout to finish: 1 old replicas are pending termination

|

||||

Scaling down podinfo.test

|

||||

Promotion completed! podinfo.test

|

||||

```

|

||||

|

||||

Flagger exposes Prometheus metrics that can be used to determine the canary analysis status and the destination weight values:

|

||||

|

||||

```bash

|

||||

# Canaries total gauge

|

||||

flagger_canary_total{namespace="test"} 1

|

||||

|

||||

# Canary promotion last known status gauge

|

||||

# 0 - running, 1 - successful, 2 - failed

|

||||

flagger_canary_status{name="podinfo",namespace="test"} 1

|

||||

|

||||

# Canary traffic weight gauge

|

||||

flagger_canary_weight{workload="podinfo-primary",namespace="test"} 95

|

||||

flagger_canary_weight{workload="podinfo",namespace="test"} 5

|

||||

|

||||

# Seconds spent performing canary analysis histogram

|

||||

flagger_canary_duration_seconds_bucket{name="podinfo",namespace="test",le="10"} 6

|

||||

flagger_canary_duration_seconds_bucket{name="podinfo",namespace="test",le="+Inf"} 6

|

||||

flagger_canary_duration_seconds_sum{name="podinfo",namespace="test"} 17.3561329

|

||||

flagger_canary_duration_seconds_count{name="podinfo",namespace="test"} 6

|

||||

```

|

||||

|

||||

### Alerting

|

||||

|

||||

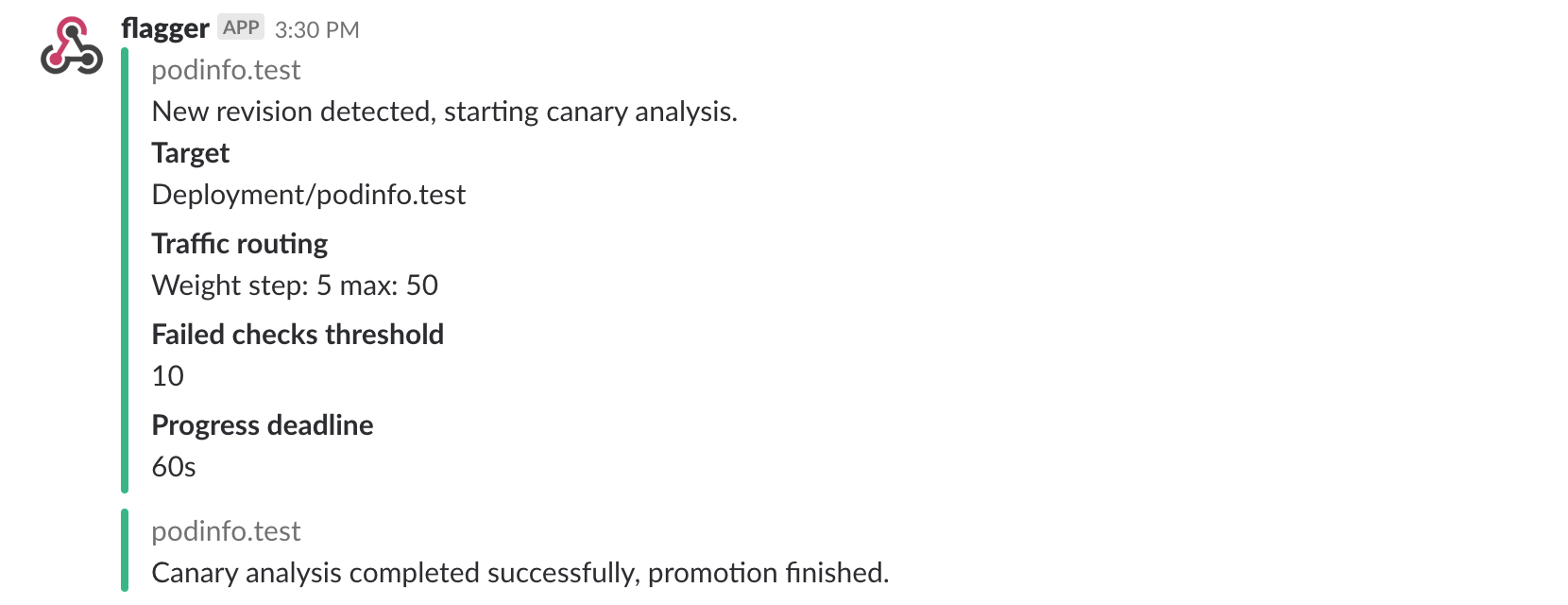

Flagger can be configured to send Slack notifications:

|

||||

|

||||

```bash

|

||||

helm upgrade -i flagger flagger/flagger \

|

||||

--namespace=istio-system \

|

||||

--set slack.url=https://hooks.slack.com/services/YOUR/SLACK/WEBHOOK \

|

||||

--set slack.channel=general \

|

||||

--set slack.user=flagger

|

||||

```

|

||||

|

||||

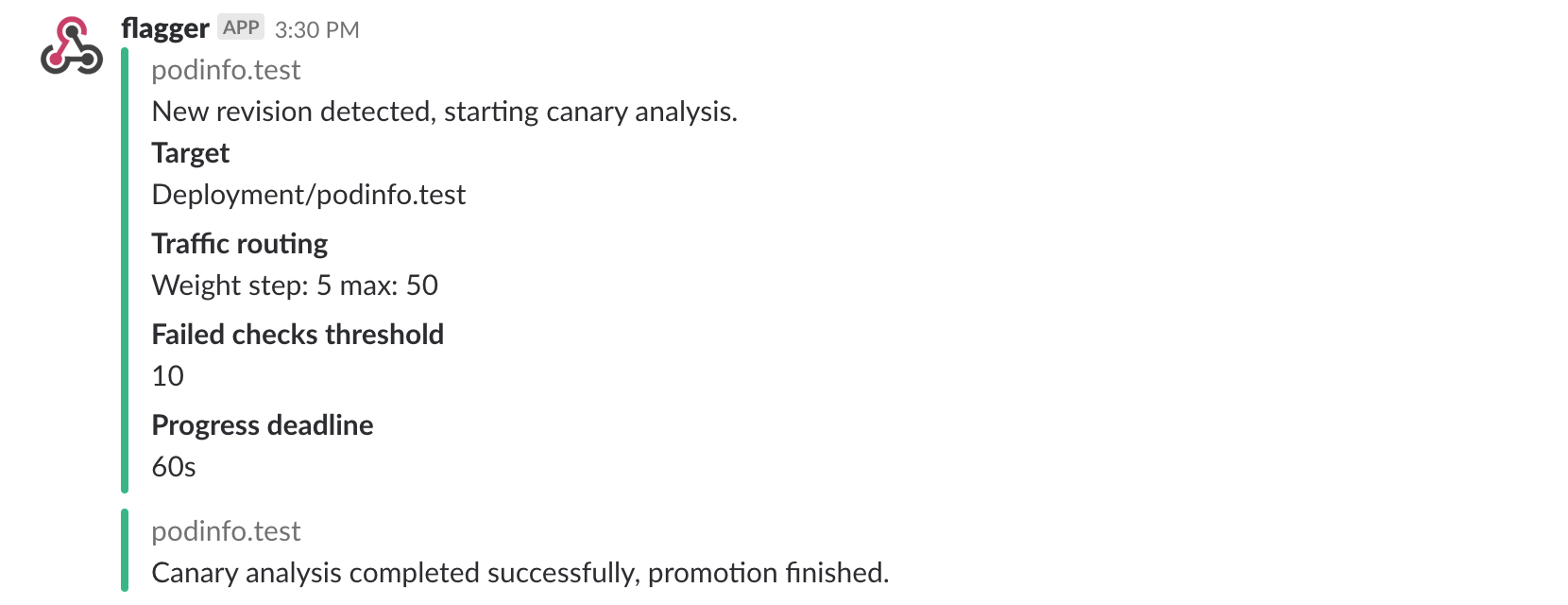

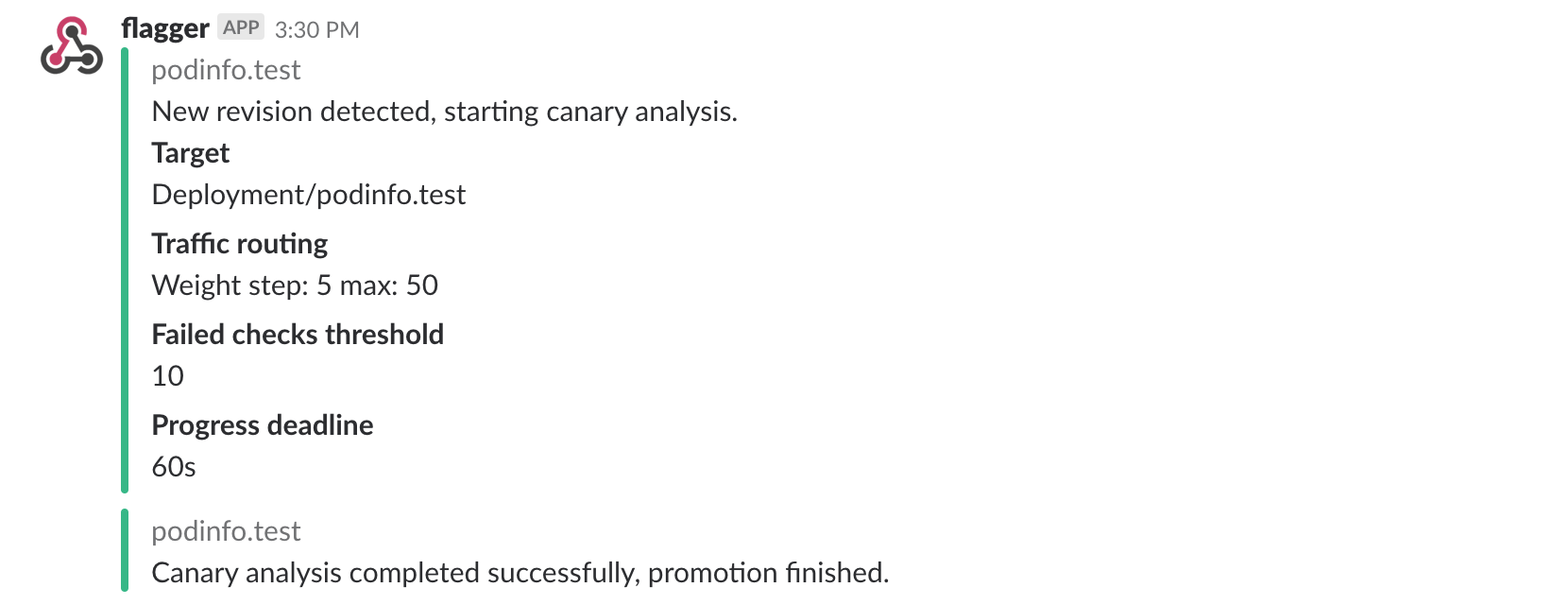

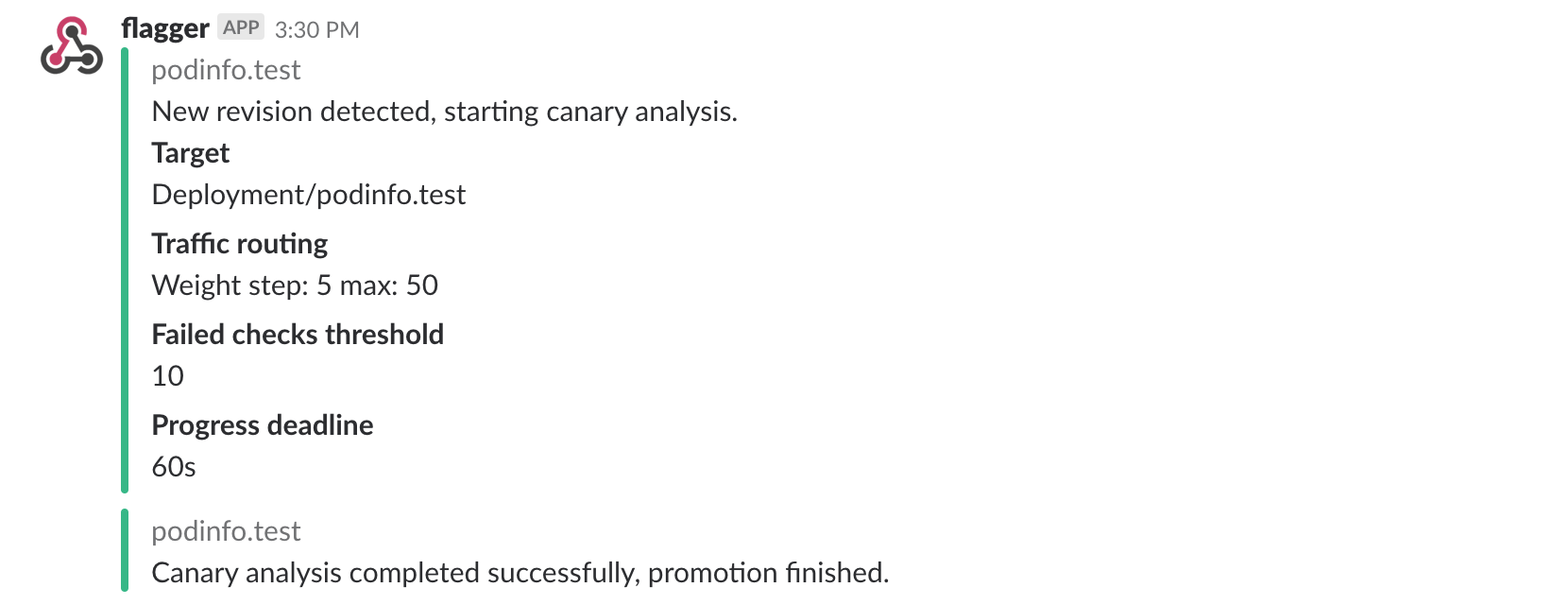

Once configured with a Slack incoming webhook, Flagger will post messages when a canary deployment has been initialized,

|

||||

when a new revision has been detected and if the canary analysis failed or succeeded.

|

||||

|

||||

|

||||

|

||||

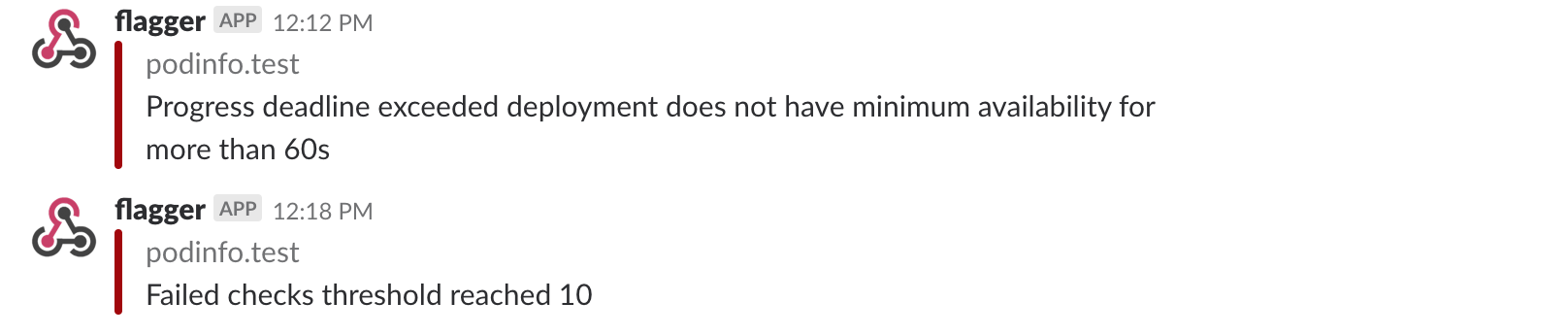

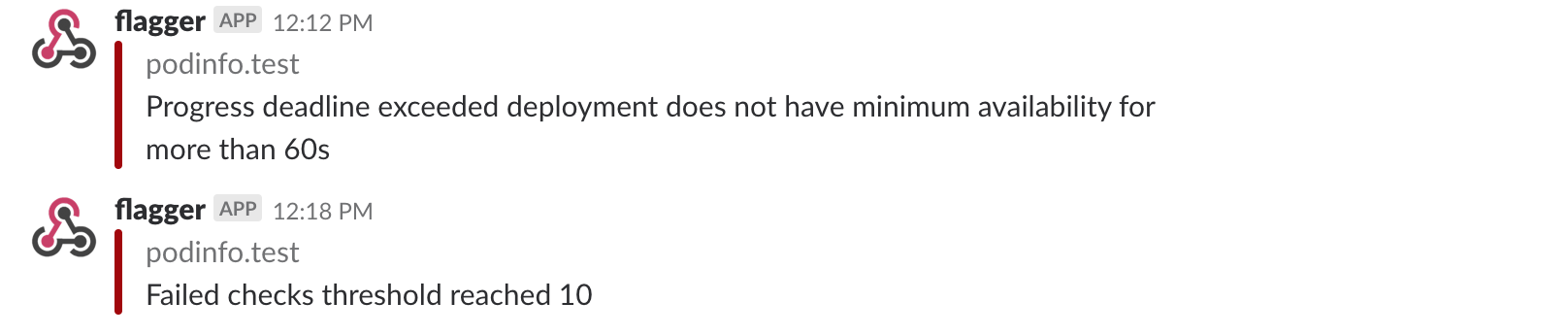

A canary deployment will be rolled back if the progress deadline is exceeded or if the analysis

|

||||

reached the maximum number of failed checks:

|

||||

|

||||

|

||||

|

||||

Besides Slack, you can use Alertmanager to trigger alerts when a canary deployment failed:

|

||||

|

||||

```yaml

|

||||

- alert: canary_rollback

|

||||

expr: flagger_canary_status > 1

|

||||

for: 1m

|

||||

labels:

|

||||

severity: warning

|

||||

annotations:

|

||||

summary: "Canary failed"

|

||||

description: "Workload {{ $labels.name }} namespace {{ $labels.namespace }}"

|

||||

```
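The effect of `expr: flagger_canary_status > 1` combined with `for: 1m` can be modelled as follows. This is a simplified sketch of the "condition must hold for the whole duration" behaviour, not Alertmanager or Prometheus code; the function name and the `(timestamp, value)` sample format are assumptions for this example.

```python
from typing import Iterable, Optional, Tuple


def alert_firing(
    samples: Iterable[Tuple[float, float]],
    threshold: float = 1.0,
    hold_seconds: float = 60.0,
) -> bool:
    """Return True if the last sample leaves the alert in the firing state.

    samples are (unix timestamp, gauge value) pairs in time order, where
    the gauge uses 0 = running, 1 = successful, 2 = failed.
    """
    pending_since: Optional[float] = None
    firing = False
    for timestamp, value in samples:
        if value > threshold:
            if pending_since is None:
                pending_since = timestamp   # condition just became true
            firing = timestamp - pending_since >= hold_seconds
        else:
            pending_since = None            # condition broke: reset the timer
            firing = False
    return firing


if __name__ == "__main__":
    print(alert_firing([(0, 2), (30, 2), (60, 2)]))
    print(alert_firing([(0, 2), (30, 1), (60, 2)]))
```

With the status encoding listed earlier, only the value `2` (failed) satisfies `> 1`, so a canary that is running or has succeeded never triggers the alert.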

|

||||

|

||||

### Roadmap

|

||||

|

||||

* Extend the validation mechanism to support other metrics than HTTP success rate and latency

|

||||

* Integrate with other service mesh technologies like Linkerd v2

|

||||

* Add support for comparing the canary metrics to the primary ones and do the validation based on the deviation between the two

|

||||

* Extend the canary analysis and promotion to other types than Kubernetes deployments such as Flux Helm releases or OpenFaaS functions

|

||||

|

||||

### Contributing

|

||||

## Contributing

|

||||

|

||||

Flagger is Apache 2.0 licensed and accepts contributions via GitHub pull requests.

|

||||

|

||||

When submitting bug reports please include as many details as possible:

|

||||

When submitting bug reports please include as many details as possible:

|

||||

|

||||

* which Flagger version

|

||||

* which Flagger CRD version

|

||||

* which Kubernetes/Istio version

|

||||

* what configuration (canary, virtual service and workloads definitions)

|

||||

* what happened (Flagger, Istio Pilot and Proxy logs)

|

||||

|

||||

## Getting Help

|

||||

|

||||

If you have any questions about Flagger and progressive delivery:

|

||||

|

||||

* Read the Flagger [docs](https://docs.flagger.app).

|

||||

* Invite yourself to the [Weave community Slack](https://slack.weave.works/)