Compare commits

87 Commits

| Author | SHA1 | Date | |
|---|---|---|---|
| | 04a56a3591 | | |
| | 4a354e74d4 | | |
| | 1e3e6427d5 | | |
| | 38826108c8 | | |
| | 4c4752f907 | | |
| | 94dcd6c94d | | |
| | eabef3db30 | | |
| | 6750f10ffa | | |
| | 56cb888cbf | | |
| | b3e7fb3417 | | |
| | 2c6e1baca2 | | |
| | c8358929d1 | | |
| | 1dc7677dfb | | |
| | 8e699a7543 | | |
| | cbbabdfac0 | | |
| | 9d92de234c | | |
| | ba65975fb5 | | |
| | ef423b2078 | | |
| | f451b4e36c | | |
| | 0856e13ee6 | | |
| | 87b9fa8ca7 | | |
| | 5b43d3d314 | | |
| | ac4972dd8d | | |
| | 8a8f68af5d | | |
| | c669dc0c4b | | |
| | 863a5466cc | | |
| | e2347c84e3 | | |
| | e0e673f565 | | |
| | 30cbf2a741 | | |
| | f58de3801c | | |
| | 7c6b88d4c1 | | |
| | 0c0ebaecd5 | | |
| | 1925f99118 | | |
| | 6f2a22a1cc | | |
| | ee04082cd7 | | |
| | efd901ac3a | | |
| | e565789ae8 | | |
| | d3953004f6 | | |
| | df1d9e3011 | | |
| | 631c55fa6e | | |
| | 29cdd43288 | | |
| | 9b79af9fcd | | |
| | 2c9c1adb47 | | |
| | 5dfb5808c4 | | |
| | bb0175aebf | | |
| | adaf4c99c0 | | |
| | bed6ed09d5 | | |
| | 4ff67a85ce | | |
| | 702f4fcd14 | | |
| | 8a03ae153d | | |
| | 434c6149ab | | |
| | 97fc4a90ae | | |
| | 217ef06930 | | |
| | 71057946e6 | | |
| | a74ad52c72 | | |
| | 12d26874f8 | | |
| | 27de9ce151 | | |
| | 9e7cd5a8c5 | | |
| | 38cb487b64 | | |
| | 05ca266c5e | | |
| | 5cc26de645 | | |
| | 2b9a195fa3 | | |
| | 4454749eec | | |
| | b435a03fab | | |
| | 7c166e2b40 | | |
| | f7a7963dcf | | |
| | 9c77c0d69c | | |
| | e8a9555346 | | |
| | 59751dd007 | | |
| | 9c4d4d16b6 | | |
| | 0e3d1b3e8f | | |
| | f119b78940 | | |
| | 456d914c35 | | |
| | 737507b0fe | | |
| | 4bcf82d295 | | |
| | e9cd7afc8a | | |
| | 0830abd51d | | |
| | 5b296e01b3 | | |
| | 3fd039afd1 | | |
| | 5904348ba5 | | |
| | 1a98e93723 | | |
| | c9685fbd13 | | |
| | dc347e273d | | |
| | 8170916897 | | |
| | 71cd4e0cb7 | | |
| | 0109788ccc | | |
| | 1649dea468 | | |

16

.circleci/config.yml

Normal file

@@ -0,0 +1,16 @@

version: 2.1
jobs:
  e2e-testing:
    machine: true
    steps:
      - checkout
      - run: test/e2e-kind.sh
      - run: test/e2e-istio.sh
      - run: test/e2e-build.sh
      - run: test/e2e-tests.sh

workflows:
  version: 2
  build-and-test:
    jobs:
      - e2e-testing

3

.gitignore

vendored

@@ -11,3 +11,6 @@

|

||||

# Output of the go coverage tool, specifically when used with LiteIDE

|

||||

*.out

|

||||

.DS_Store

|

||||

|

||||

bin/

|

||||

artifacts/gcloud/

|

||||

15

.travis.yml

@@ -12,12 +12,17 @@ addons:

|

||||

packages:

|

||||

- docker-ce

|

||||

|

||||

#before_script:

|

||||

# - go get -u sigs.k8s.io/kind

|

||||

# - curl https://raw.githubusercontent.com/kubernetes/helm/master/scripts/get | bash

|

||||

# - curl -LO https://storage.googleapis.com/kubernetes-release/release/$(curl -s https://storage.googleapis.com/kubernetes-release/release/stable.txt)/bin/linux/amd64/kubectl && chmod +x kubectl && sudo mv kubectl /usr/local/bin/

|

||||

|

||||

script:

|

||||

- set -e

|

||||

- make test-fmt

|

||||

- make test-codegen

|

||||

- go test -race -coverprofile=coverage.txt -covermode=atomic ./pkg/controller/

|

||||

- make build

|

||||

- set -e

|

||||

- make test-fmt

|

||||

- make test-codegen

|

||||

- go test -race -coverprofile=coverage.txt -covermode=atomic ./pkg/controller/

|

||||

- make build

|

||||

|

||||

after_success:

|

||||

- if [ -z "$DOCKER_USER" ]; then

|

||||

|

||||

155

CHANGELOG.md

Normal file

@@ -0,0 +1,155 @@

# Changelog

All notable changes to this project are documented in this file.

## 0.6.0 (2019-02-25)

Allows for [HTTPMatchRequests](https://istio.io/docs/reference/config/istio.networking.v1alpha3/#HTTPMatchRequest)
and [HTTPRewrite](https://istio.io/docs/reference/config/istio.networking.v1alpha3/#HTTPRewrite)
to be customized in the service spec of the canary custom resource.

#### Features

- Add HTTP match conditions and URI rewrite to the canary service spec [#55](https://github.com/stefanprodan/flagger/pull/55)
- Update virtual service when the canary service spec changes
    [#54](https://github.com/stefanprodan/flagger/pull/54)
    [#51](https://github.com/stefanprodan/flagger/pull/51)

#### Improvements

- Run e2e testing on [Kubernetes Kind](https://github.com/kubernetes-sigs/kind) for canary promotion
    [#53](https://github.com/stefanprodan/flagger/pull/53)

## 0.5.1 (2019-02-14)

Allows skipping the analysis phase to ship changes directly to production

#### Features

- Add option to skip the canary analysis [#46](https://github.com/stefanprodan/flagger/pull/46)

#### Fixes

- Reject deployment if the pod label selector doesn't match `app: <DEPLOYMENT_NAME>` [#43](https://github.com/stefanprodan/flagger/pull/43)

## 0.5.0 (2019-01-30)

Track changes in ConfigMaps and Secrets [#37](https://github.com/stefanprodan/flagger/pull/37)

#### Features

- Promote configmaps and secrets changes from canary to primary
- Detect changes in configmaps and/or secrets and (re)start canary analysis
- Add configs checksum to Canary CRD status
- Create primary configmaps and secrets at bootstrap
- Scan canary volumes and containers for configmaps and secrets

#### Fixes

- Copy deployment labels from canary to primary at bootstrap and promotion

## 0.4.1 (2019-01-24)

Load testing webhook [#35](https://github.com/stefanprodan/flagger/pull/35)

#### Features

- Add the load tester chart to Flagger Helm repository
- Implement a load test runner based on [rakyll/hey](https://github.com/rakyll/hey)
- Log warning when no values are found for Istio metric due to lack of traffic

#### Fixes

- Run webhooks before the metrics checks to avoid failures when using a load tester

## 0.4.0 (2019-01-18)

Restart canary analysis if revision changes [#31](https://github.com/stefanprodan/flagger/pull/31)

#### Breaking changes

- Drop support for Kubernetes 1.10

#### Features

- Detect changes during canary analysis and reset advancement
- Add status and additional printer columns to CRD
- Add canary name and namespace to controller structured logs

#### Fixes

- Allow canary name to be different to the target name
- Check if multiple canaries have the same target and log error
- Use deep copy when updating Kubernetes objects
- Skip readiness checks if canary analysis has finished

## 0.3.0 (2019-01-11)

Configurable canary analysis duration [#20](https://github.com/stefanprodan/flagger/pull/20)

#### Breaking changes

- Helm chart: flag `controlLoopInterval` has been removed

#### Features

- CRD: canaries.flagger.app v1alpha3
- Schedule canary analysis independently based on `canaryAnalysis.interval`
- Add analysis interval to Canary CRD (defaults to one minute)
- Make autoscaler (HPA) reference optional

## 0.2.0 (2019-01-04)

Webhooks [#18](https://github.com/stefanprodan/flagger/pull/18)

#### Features

- CRD: canaries.flagger.app v1alpha2
- Implement canary external checks based on webhooks HTTP POST calls
- Add webhooks to Canary CRD
- Move docs to gitbook [docs.flagger.app](https://docs.flagger.app)

## 0.1.2 (2018-12-06)

Improve Slack notifications [#14](https://github.com/stefanprodan/flagger/pull/14)

#### Features

- Add canary analysis metadata to init and start Slack messages
- Add rollback reason to failed canary Slack messages

## 0.1.1 (2018-11-28)

Canary progress deadline [#10](https://github.com/stefanprodan/flagger/pull/10)

#### Features

- Rollback canary based on the deployment progress deadline check
- Add progress deadline to Canary CRD (defaults to 10 minutes)

## 0.1.0 (2018-11-25)

First stable release

#### Features

- CRD: canaries.flagger.app v1alpha1
- Notifications: post canary events to Slack
- Instrumentation: expose Prometheus metrics for canary status and traffic weight percentage
- Autoscaling: add HPA reference to CRD and create primary HPA at bootstrap
- Bootstrap: create primary deployment, ClusterIP services and Istio virtual service based on CRD spec

## 0.0.1 (2018-10-07)

Initial semver release

#### Features

- Implement canary rollback based on failed checks threshold
- Scale up the deployment when canary revision changes
- Add OpenAPI v3 schema validation to Canary CRD
- Use CRD status for canary state persistence
- Add Helm charts for Flagger and Grafana
- Add canary analysis Grafana dashboard

13

Gopkg.lock

generated

@@ -163,12 +163,9 @@

|

||||

revision = "f2b4162afba35581b6d4a50d3b8f34e33c144682"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:03a74b0d86021c8269b52b7c908eb9bb3852ff590b363dad0a807cf58cec2f89"

|

||||

digest = "1:05ddd9088c0cfb8eaa3adf3626977caa6d96b3959a3bd8c91fef932fd1696c34"

|

||||

name = "github.com/knative/pkg"

|

||||

packages = [

|

||||

"apis",

|

||||

"apis/duck",

|

||||

"apis/duck/v1alpha1",

|

||||

"apis/istio",

|

||||

"apis/istio/authentication",

|

||||

"apis/istio/authentication/v1alpha1",

|

||||

@@ -179,14 +176,12 @@

|

||||

"client/clientset/versioned/scheme",

|

||||

"client/clientset/versioned/typed/authentication/v1alpha1",

|

||||

"client/clientset/versioned/typed/authentication/v1alpha1/fake",

|

||||

"client/clientset/versioned/typed/duck/v1alpha1",

|

||||

"client/clientset/versioned/typed/duck/v1alpha1/fake",

|

||||

"client/clientset/versioned/typed/istio/v1alpha3",

|

||||

"client/clientset/versioned/typed/istio/v1alpha3/fake",

|

||||

"signals",

|

||||

]

|

||||

pruneopts = "NUT"

|

||||

revision = "c15d7c8f2220a7578b33504df6edefa948c845ae"

|

||||

revision = "f9612ef73847258e381e749c4f45b0f5e03b66e9"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:5985ef4caf91ece5d54817c11ea25f182697534f8ae6521eadcd628c142ac4b6"

|

||||

@@ -476,10 +471,9 @@

|

||||

version = "kubernetes-1.11.0"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:4b0d523ee389c762d02febbcfa0734c4530ebe87abe925db18f05422adcb33e8"

|

||||

digest = "1:83b01e3d6f85c4e911de84febd69a2d3ece614c5a4a518fbc2b5d59000645980"

|

||||

name = "k8s.io/apimachinery"

|

||||

packages = [

|

||||

"pkg/api/equality",

|

||||

"pkg/api/errors",

|

||||

"pkg/api/meta",

|

||||

"pkg/api/resource",

|

||||

@@ -693,6 +687,7 @@

|

||||

"github.com/knative/pkg/client/clientset/versioned",

|

||||

"github.com/knative/pkg/client/clientset/versioned/fake",

|

||||

"github.com/knative/pkg/signals",

|

||||

"github.com/prometheus/client_golang/prometheus",

|

||||

"github.com/prometheus/client_golang/prometheus/promhttp",

|

||||

"go.uber.org/zap",

|

||||

"go.uber.org/zap/zapcore",

|

||||

|

||||

@@ -47,7 +47,7 @@ required = [

|

||||

|

||||

[[constraint]]

|

||||

name = "github.com/knative/pkg"

|

||||

revision = "c15d7c8f2220a7578b33504df6edefa948c845ae"

|

||||

revision = "f9612ef73847258e381e749c4f45b0f5e03b66e9"

|

||||

|

||||

[[override]]

|

||||

name = "github.com/golang/glog"

|

||||

|

||||

5

Makefile

@@ -7,7 +7,7 @@ LT_VERSION?=$(shell grep 'VERSION' cmd/loadtester/main.go | awk '{ print $$4 }'

|

||||

|

||||

run:

|

||||

go run cmd/flagger/* -kubeconfig=$$HOME/.kube/config -log-level=info \

|

||||

-metrics-server=https://prometheus.iowa.weavedx.com \

|

||||

-metrics-server=https://prometheus.istio.weavedx.com \

|

||||

-slack-url=https://hooks.slack.com/services/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 \

|

||||

-slack-channel="devops-alerts"

|

||||

|

||||

@@ -31,7 +31,7 @@ test: test-fmt test-codegen

|

||||

go test ./...

|

||||

|

||||

helm-package:

|

||||

cd charts/ && helm package flagger/ && helm package grafana/ && helm package loadtester/

|

||||

cd charts/ && helm package ./*

|

||||

mv charts/*.tgz docs/

|

||||

helm repo index docs --url https://stefanprodan.github.io/flagger --merge ./docs/index.yaml

|

||||

|

||||

@@ -46,6 +46,7 @@ version-set:

|

||||

sed -i '' "s/flagger:$$current/flagger:$$next/g" artifacts/flagger/deployment.yaml && \

|

||||

sed -i '' "s/tag: $$current/tag: $$next/g" charts/flagger/values.yaml && \

|

||||

sed -i '' "s/appVersion: $$current/appVersion: $$next/g" charts/flagger/Chart.yaml && \

|

||||

sed -i '' "s/version: $$current/version: $$next/g" charts/flagger/Chart.yaml && \

|

||||

echo "Version $$next set in code, deployment and charts"

|

||||

|

||||

version-up:

|

||||

|

||||

384

README.md

@@ -8,9 +8,37 @@

|

||||

|

||||

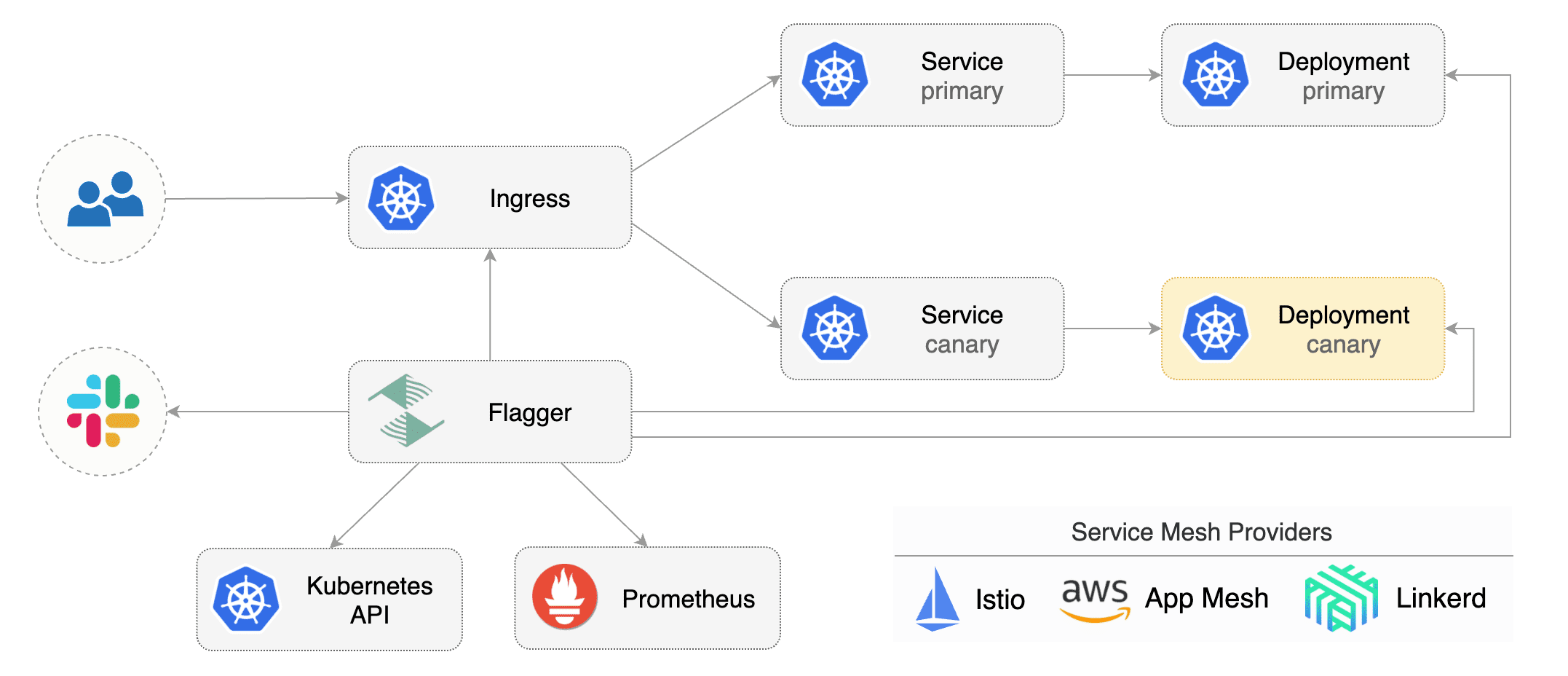

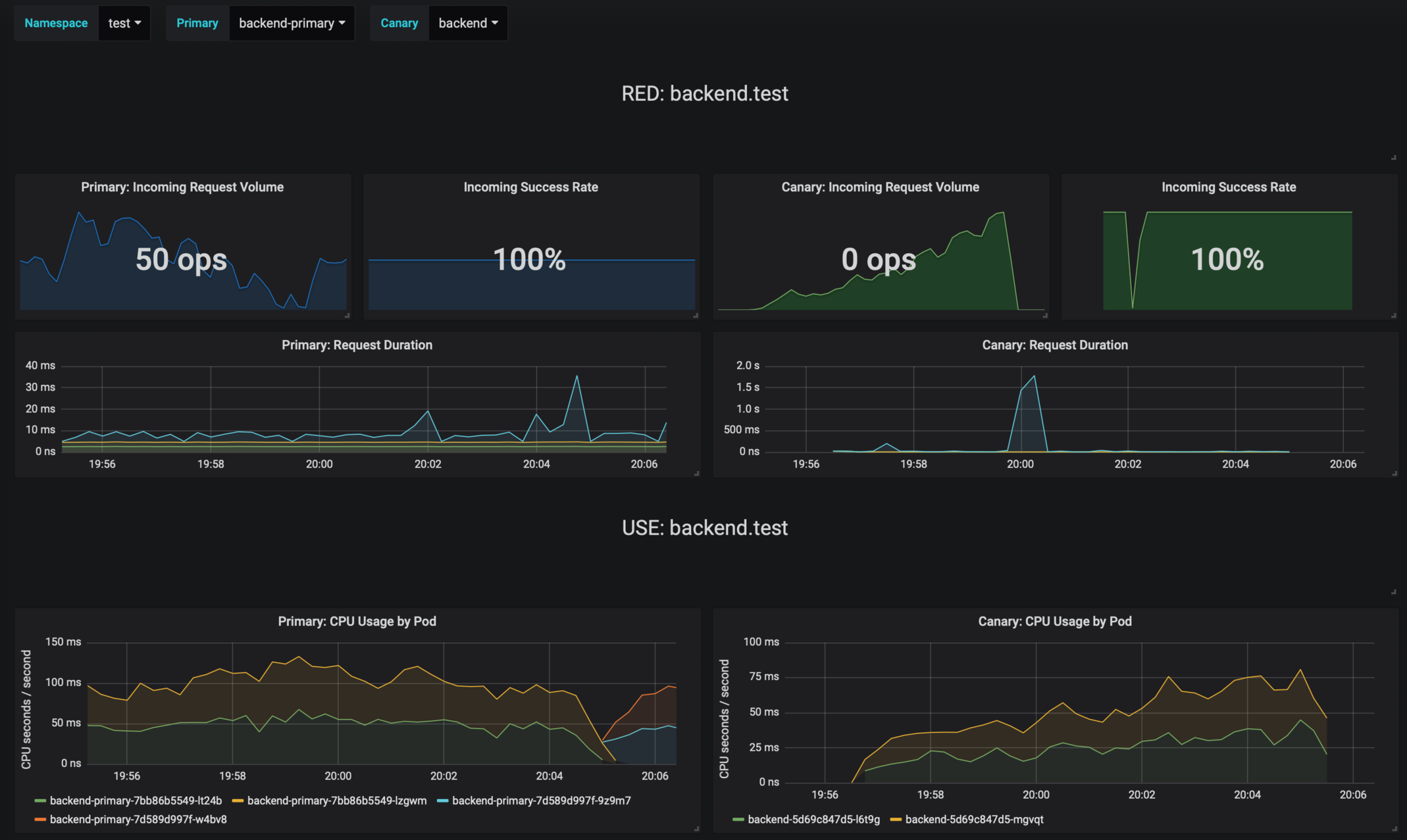

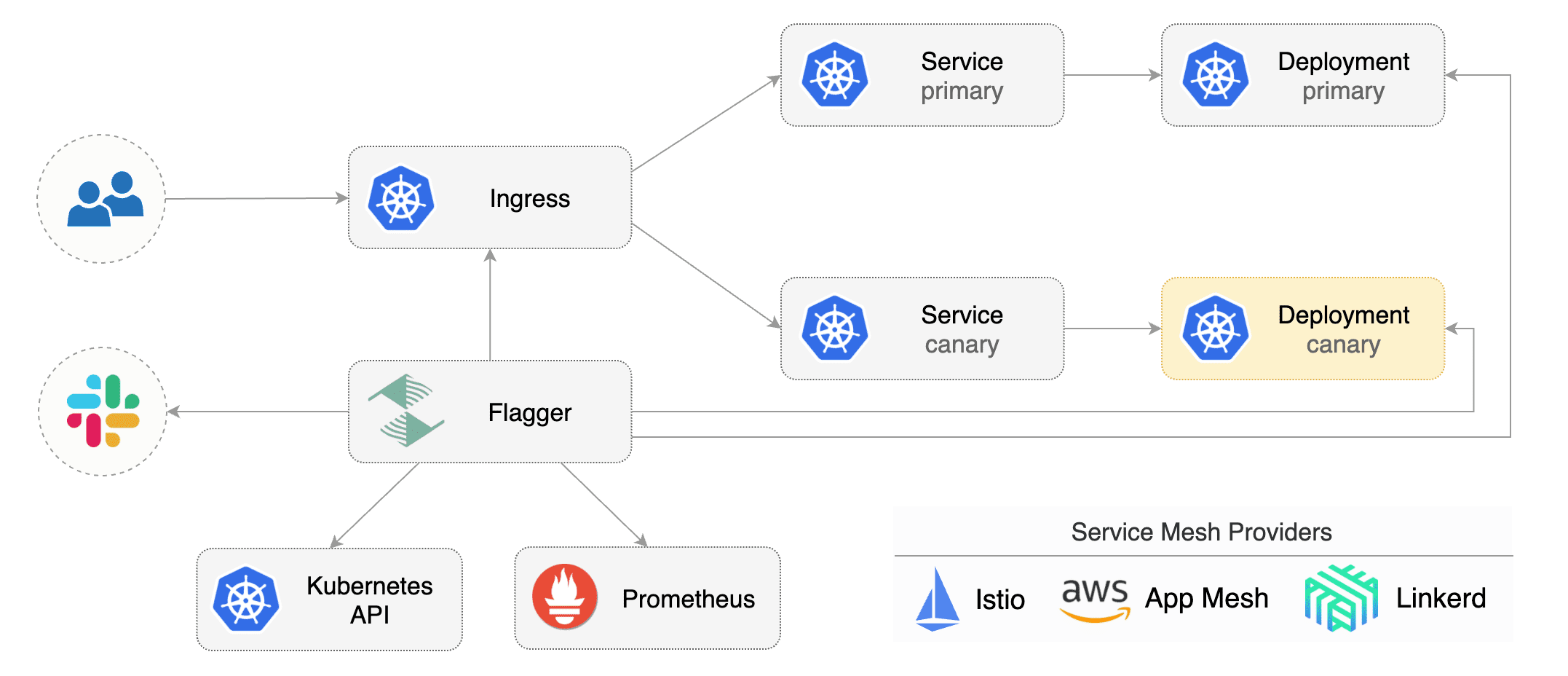

Flagger is a Kubernetes operator that automates the promotion of canary deployments

|

||||

using Istio routing for traffic shifting and Prometheus metrics for canary analysis.

|

||||

The canary analysis can be extended with webhooks for running integration tests,

|

||||

The canary analysis can be extended with webhooks for running acceptance tests,

|

||||

load tests or any other custom validation.

|

||||

|

||||

Flagger implements a control loop that gradually shifts traffic to the canary while measuring key performance

|

||||

indicators like HTTP requests success rate, requests average duration and pods health.

|

||||

Based on analysis of the KPIs a canary is promoted or aborted, and the analysis result is published to Slack.

|

||||

|

||||

|

||||

|

||||

### Documentation

|

||||

|

||||

Flagger documentation can be found at [docs.flagger.app](https://docs.flagger.app)

|

||||

|

||||

* Install

|

||||

* [Flagger install on Kubernetes](https://docs.flagger.app/install/flagger-install-on-kubernetes)

|

||||

* [Flagger install on GKE](https://docs.flagger.app/install/flagger-install-on-google-cloud)

|

||||

* How it works

|

||||

* [Canary custom resource](https://docs.flagger.app/how-it-works#canary-custom-resource)

|

||||

* [Virtual Service](https://docs.flagger.app/how-it-works#virtual-service)

|

||||

* [Canary deployment stages](https://docs.flagger.app/how-it-works#canary-deployment)

|

||||

* [Canary analysis](https://docs.flagger.app/how-it-works#canary-analysis)

|

||||

* [HTTP metrics](https://docs.flagger.app/how-it-works#http-metrics)

|

||||

* [Webhooks](https://docs.flagger.app/how-it-works#webhooks)

|

||||

* [Load testing](https://docs.flagger.app/how-it-works#load-testing)

|

||||

* Usage

|

||||

* [Canary promotions and rollbacks](https://docs.flagger.app/usage/progressive-delivery)

|

||||

* [Monitoring](https://docs.flagger.app/usage/monitoring)

|

||||

* [Alerting](https://docs.flagger.app/usage/alerting)

|

||||

* Tutorials

|

||||

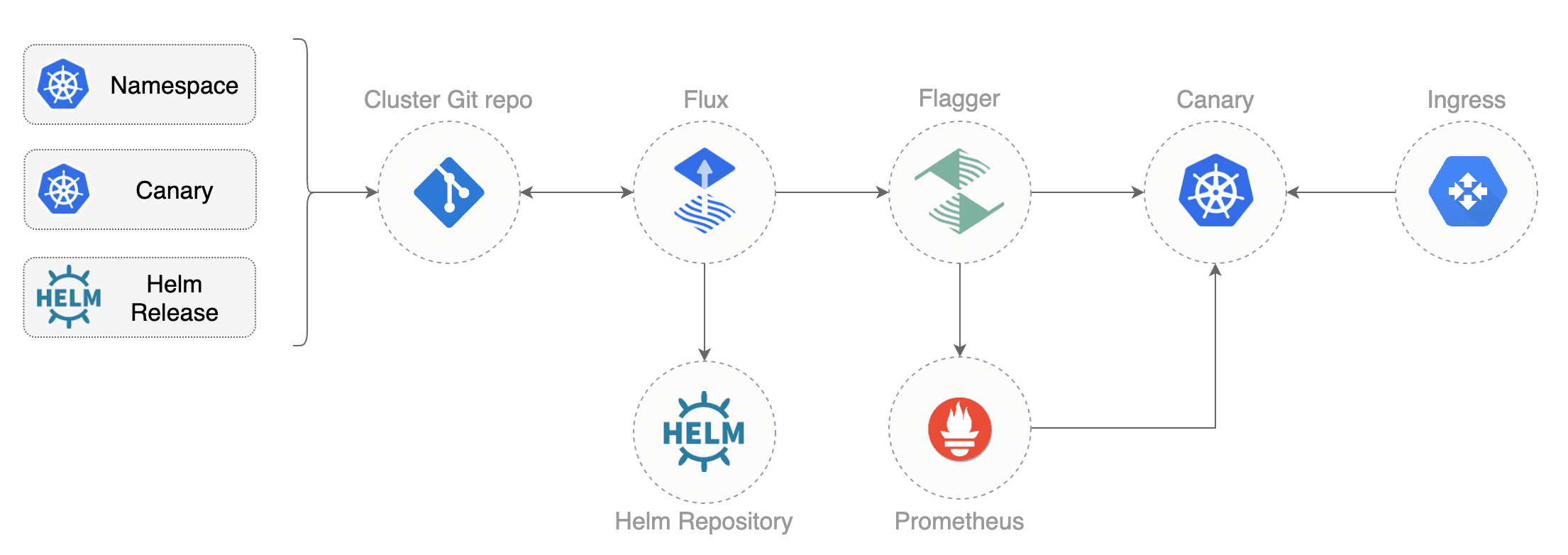

* [Canary deployments with Helm charts and Weave Flux](https://docs.flagger.app/tutorials/canary-helm-gitops)

|

||||

|

||||

### Install

|

||||

|

||||

Before installing Flagger, make sure you have Istio set up with Prometheus enabled.

|

||||

@@ -30,46 +58,14 @@ helm upgrade -i flagger flagger/flagger \

|

||||

|

||||

Flagger is compatible with Kubernetes >1.11.0 and Istio >1.0.0.

|

||||

|

||||

### Usage

|

||||

### Canary CRD

|

||||

|

||||

Flagger takes a Kubernetes deployment and creates a series of objects

|

||||

(Kubernetes [deployments](https://kubernetes.io/docs/concepts/workloads/controllers/deployment/),

|

||||

ClusterIP [services](https://kubernetes.io/docs/concepts/services-networking/service/) and

|

||||

Istio [virtual services](https://istio.io/docs/reference/config/istio.networking.v1alpha3/#VirtualService))

|

||||

to drive the canary analysis and promotion.

|

||||

Flagger takes a Kubernetes deployment and optionally a horizontal pod autoscaler (HPA),

|

||||

then creates a series of objects (Kubernetes deployments, ClusterIP services and Istio virtual services).

|

||||

These objects expose the application on the mesh and drive the canary analysis and promotion.

|

||||

|

||||

|

||||

|

||||

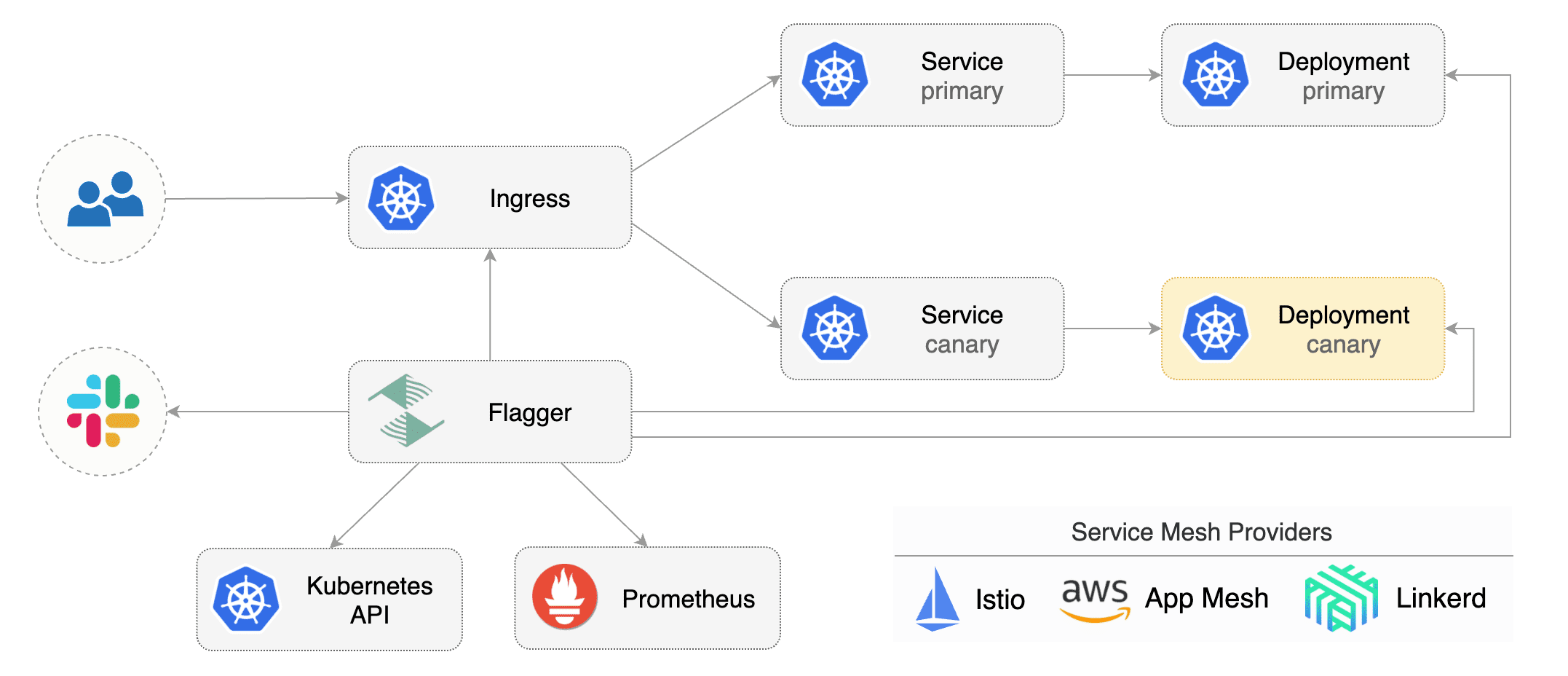

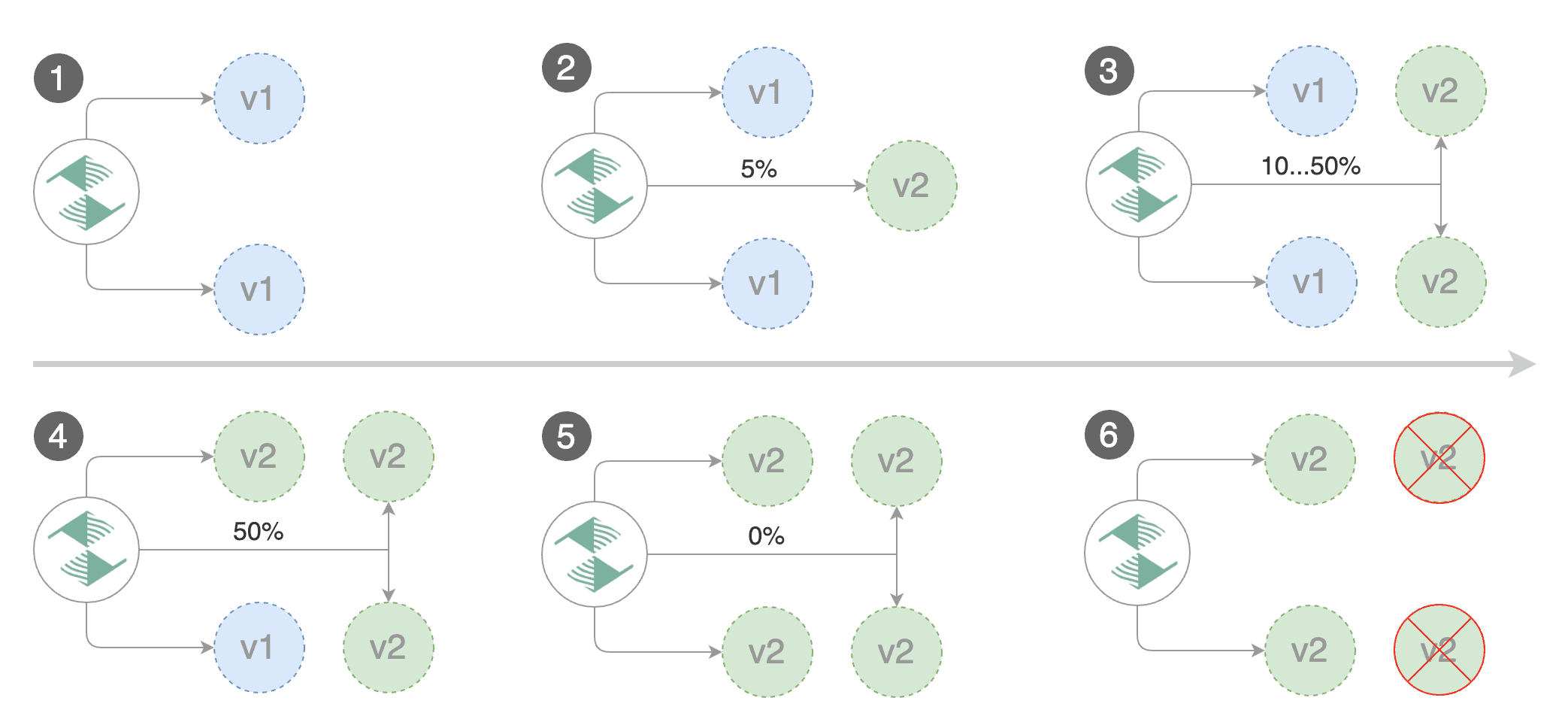

Gated canary promotion stages:

|

||||

|

||||

* scan for canary deployments

|

||||

* check Istio virtual service routes are mapped to primary and canary ClusterIP services

|

||||

* check primary and canary deployments status

|

||||

* halt advancement if a rolling update is underway

|

||||

* halt advancement if pods are unhealthy

|

||||

* increase canary traffic weight percentage from 0% to 5% (step weight)

|

||||

* check canary HTTP request success rate and latency

|

||||

* halt advancement if any metric is under the specified threshold

|

||||

* increment the failed checks counter

|

||||

* check if the number of failed checks reached the threshold

|

||||

* route all traffic to primary

|

||||

* scale to zero the canary deployment and mark it as failed

|

||||

* wait for the canary deployment to be updated (revision bump) and start over

|

||||

* increase canary traffic weight by 5% (step weight) till it reaches 50% (max weight)

|

||||

* halt advancement while canary request success rate is under the threshold

|

||||

* halt advancement while canary request duration P99 is over the threshold

|

||||

* halt advancement if the primary or canary deployment becomes unhealthy

|

||||

* halt advancement while canary deployment is being scaled up/down by HPA

|

||||

* promote canary to primary

|

||||

* copy canary deployment spec template over primary

|

||||

* wait for primary rolling update to finish

|

||||

* halt advancement if pods are unhealthy

|

||||

* route all traffic to primary

|

||||

* scale to zero the canary deployment

|

||||

* mark rollout as finished

|

||||

* wait for the canary deployment to be updated (revision bump) and start over
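
The stages listed above can be read as a small state machine. The following is a minimal sketch of that reading, not Flagger's actual controller code: the `analysisConfig` type, its field names and the `runAnalysis` function are hypothetical, and each metric check is reduced to a single pass/fail boolean. It uses the step weight (5) and max weight (50) from the list; the failed checks threshold of 10 is an assumed value.

```go
package main

import "fmt"

// analysisConfig uses hypothetical field names for the settings
// described in the stage list (step weight, max weight, threshold).
type analysisConfig struct {
	StepWeight int // percentage added after each passing check
	MaxWeight  int // weight at which the canary is promoted
	Threshold  int // failed checks tolerated before rollback
}

// runAnalysis walks through a sequence of check results.
// A failed check halts advancement and increments the failure counter;
// a passing check advances the canary weight by StepWeight.
// It returns the outcome and the final canary weight. After promotion
// or rollback all traffic goes to the primary, so the weight is 0.
func runAnalysis(cfg analysisConfig, checks []bool) (string, int) {
	weight, failed := 0, 0
	for _, ok := range checks {
		if !ok {
			failed++
			if failed >= cfg.Threshold {
				return "failed", 0 // route all traffic to primary
			}
			continue // halt advancement for this interval
		}
		weight += cfg.StepWeight
		if weight >= cfg.MaxWeight {
			return "promoted", 0 // canary spec copied over primary
		}
	}
	return "progressing", weight
}

func main() {
	cfg := analysisConfig{StepWeight: 5, MaxWeight: 50, Threshold: 10}
	outcome, weight := runAnalysis(cfg, []bool{true, true, false, true})
	fmt.Println(outcome, weight)
}
```

The sketch promotes as soon as the max weight is reached and leaves out the deployment health checks, the HPA scaling checks and the wait for the primary rolling update.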

|

||||

|

||||

You can change the canary analysis _max weight_ and the _step weight_ percentage in Flagger's custom resource.

|

||||

Flagger keeps track of ConfigMaps and Secrets referenced by a Kubernetes Deployment and triggers a canary analysis if any of those objects change.

|

||||

When promoting a workload in production, both code (container images) and configuration (config maps and secrets) are being synchronised.

|

||||

|

||||

For a deployment named _podinfo_, a canary promotion can be defined using Flagger's custom resource:

|

||||

|

||||

@@ -102,6 +98,16 @@ spec:

|

||||

# Istio virtual service host names (optional)

|

||||

hosts:

|

||||

- podinfo.example.com

|

||||

# Istio virtual service HTTP match conditions (optional)

|

||||

match:

|

||||

- uri:

|

||||

prefix: /

|

||||

# Istio virtual service HTTP rewrite (optional)

|

||||

rewrite:

|

||||

uri: /

|

||||

# for emergency cases when you want to ship changes

|

||||

# in production without analysing the canary (default false)

|

||||

skipAnalysis: false

|

||||

canaryAnalysis:

|

||||

# schedule interval (default 60s)

|

||||

interval: 1m

|

||||

@@ -134,309 +140,13 @@ spec:

|

||||

cmd: "hey -z 1m -q 10 -c 2 http://podinfo.test:9898/"

|

||||

```

|

||||

|

||||

The canary analysis uses the following PromQL queries:

|

||||

|

||||

_HTTP requests success rate percentage_

|

||||

|

||||

```sql

|

||||

sum(

|

||||

rate(

|

||||

istio_requests_total{

|

||||

reporter="destination",

|

||||

destination_workload_namespace=~"$namespace",

|

||||

destination_workload=~"$workload",

|

||||

response_code!~"5.*"

|

||||

}[$interval]

|

||||

)

|

||||

)

|

||||

/

|

||||

sum(

|

||||

rate(

|

||||

istio_requests_total{

|

||||

reporter="destination",

|

||||

destination_workload_namespace=~"$namespace",

|

||||

destination_workload=~"$workload"

|

||||

}[$interval]

|

||||

)

|

||||

)

|

||||

```

|

||||

|

||||

_HTTP requests milliseconds duration P99_

|

||||

|

||||

```sql

|

||||

histogram_quantile(0.99,

|

||||

sum(

|

||||

irate(

|

||||

istio_request_duration_seconds_bucket{

|

||||

reporter="destination",

|

||||

destination_workload=~"$workload",

|

||||

destination_workload_namespace=~"$namespace"

|

||||

}[$interval]

|

||||

)

|

||||

) by (le)

|

||||

)

|

||||

```

|

||||

|

||||

The canary analysis can be extended with webhooks.

|

||||

Flagger will call the webhooks (HTTP POST) and determine from the response status code (HTTP 2xx) if the canary is failing or not.

|

||||

|

||||

Webhook payload:

|

||||

|

||||

```json

|

||||

{

|

||||

"name": "podinfo",

|

||||

"namespace": "test",

|

||||

"metadata": {

|

||||

"test": "all",

|

||||

"token": "16688eb5e9f289f1991c"

|

||||

}

|

||||

}

|

||||

```
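
To make the webhook contract concrete, here is a minimal sketch of a receiver that accepts the payload shown above and answers with a 2xx status when the check passes and a non-2xx status otherwise. The `payload` type, the `gate` handler, the `post` helper and the pass/fail rule on `metadata.test` are all hypothetical; only the JSON field names and the HTTP POST plus status code convention come from the text above.

```go
package main

import (
	"encoding/json"
	"fmt"
	"net/http"
	"net/http/httptest"
	"strings"
)

// payload mirrors the JSON document shown above.
type payload struct {
	Name      string            `json:"name"`
	Namespace string            `json:"namespace"`
	Metadata  map[string]string `json:"metadata"`
}

// gate is a hypothetical check endpoint. Any 2xx response means the
// check passed; any other status counts as a failed check.
func gate(w http.ResponseWriter, r *http.Request) {
	if r.Method != http.MethodPost {
		http.Error(w, "POST required", http.StatusMethodNotAllowed)
		return
	}
	var p payload
	if err := json.NewDecoder(r.Body).Decode(&p); err != nil {
		http.Error(w, "invalid payload", http.StatusBadRequest)
		return
	}
	// Illustrative rule only: accept when metadata.test is "all".
	if p.Metadata["test"] != "all" {
		http.Error(w, "check failed", http.StatusInternalServerError)
		return
	}
	w.WriteHeader(http.StatusOK)
}

// post sends a body to the handler in-process and returns the status code.
func post(body string) int {
	req := httptest.NewRequest(http.MethodPost, "/", strings.NewReader(body))
	rec := httptest.NewRecorder()
	gate(rec, req)
	return rec.Code
}

func main() {
	body := `{"name":"podinfo","namespace":"test","metadata":{"test":"all","token":"16688eb5e9f289f1991c"}}`
	fmt.Println(post(body))
}
```

In a real service the handler would be registered with `http.HandleFunc` and served with `http.ListenAndServe`; the in-process recorder is used here only to keep the example self-contained.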

|

||||

|

||||

### Automated canary analysis, promotions and rollbacks

|

||||

|

||||

Create a test namespace with Istio sidecar injection enabled:

|

||||

|

||||

```bash

|

||||

export REPO=https://raw.githubusercontent.com/stefanprodan/flagger/master

|

||||

|

||||

kubectl apply -f ${REPO}/artifacts/namespaces/test.yaml

|

||||

```

|

||||

|

||||

Create a deployment and a horizontal pod autoscaler:

|

||||

|

||||

```bash

|

||||

kubectl apply -f ${REPO}/artifacts/canaries/deployment.yaml

|

||||

kubectl apply -f ${REPO}/artifacts/canaries/hpa.yaml

|

||||

```

|

||||

|

||||

Deploy the load testing service to generate traffic during the canary analysis:

|

||||

|

||||

```bash

|

||||

kubectl -n test apply -f ${REPO}/artifacts/loadtester/deployment.yaml

|

||||

kubectl -n test apply -f ${REPO}/artifacts/loadtester/service.yaml

|

||||

```

|

||||

|

||||

Create a canary promotion custom resource (replace the Istio gateway and the internet domain with your own):

|

||||

|

||||

```bash

|

||||

kubectl apply -f ${REPO}/artifacts/canaries/canary.yaml

|

||||

```

|

||||

|

||||

After a couple of seconds Flagger will create the canary objects:

|

||||

|

||||

```bash

|

||||

# applied

|

||||

deployment.apps/podinfo

|

||||

horizontalpodautoscaler.autoscaling/podinfo

|

||||

canary.flagger.app/podinfo

|

||||

# generated

|

||||

deployment.apps/podinfo-primary

|

||||

horizontalpodautoscaler.autoscaling/podinfo-primary

|

||||

service/podinfo

|

||||

service/podinfo-canary

|

||||

service/podinfo-primary

|

||||

virtualservice.networking.istio.io/podinfo

|

||||

```

|

||||

|

||||

|

||||

|

||||

Trigger a canary deployment by updating the container image:

|

||||

|

||||

```bash

|

||||

kubectl -n test set image deployment/podinfo \

|

||||

podinfod=quay.io/stefanprodan/podinfo:1.4.0

|

||||

```

|

||||

|

||||

Flagger detects that the deployment revision changed and starts a new canary analysis:

|

||||

|

||||

```

|

||||

kubectl -n test describe canary/podinfo

|

||||

|

||||

Status:

|

||||

Canary Weight: 0

|

||||

Failed Checks: 0

|

||||

Last Transition Time: 2019-01-16T13:47:16Z

|

||||

Phase: Succeeded

|

||||

Events:

|

||||

Type Reason Age From Message

|

||||

---- ------ ---- ---- -------

|

||||

Normal Synced 3m flagger New revision detected podinfo.test

|

||||

Normal Synced 3m flagger Scaling up podinfo.test

|

||||

Warning Synced 3m flagger Waiting for podinfo.test rollout to finish: 0 of 1 updated replicas are available

|

||||

Normal Synced 3m flagger Advance podinfo.test canary weight 5

|

||||

Normal Synced 3m flagger Advance podinfo.test canary weight 10

|

||||

Normal Synced 3m flagger Advance podinfo.test canary weight 15

|

||||

Normal Synced 2m flagger Advance podinfo.test canary weight 20

|

||||

Normal Synced 2m flagger Advance podinfo.test canary weight 25

|

||||

Normal Synced 1m flagger Advance podinfo.test canary weight 30

|

||||

Normal Synced 1m flagger Advance podinfo.test canary weight 35

|

||||

Normal Synced 55s flagger Advance podinfo.test canary weight 40

|

||||

Normal Synced 45s flagger Advance podinfo.test canary weight 45

|

||||

Normal Synced 35s flagger Advance podinfo.test canary weight 50

|

||||

Normal Synced 25s flagger Copying podinfo.test template spec to podinfo-primary.test

|

||||

Warning Synced 15s flagger Waiting for podinfo-primary.test rollout to finish: 1 of 2 updated replicas are available

|

||||

Normal Synced 5s flagger Promotion completed! Scaling down podinfo.test

|

||||

```

|

||||

|

||||

You can monitor all canaries with:

|

||||

|

||||

```bash

|

||||

watch kubectl get canaries --all-namespaces

|

||||

|

||||

NAMESPACE NAME STATUS WEIGHT LASTTRANSITIONTIME

|

||||

test podinfo Progressing 5 2019-01-16T14:05:07Z

|

||||

```

|

||||

|

||||

During the canary analysis you can generate HTTP 500 errors and high latency to test if Flagger pauses the rollout.

|

||||

|

||||

Create a tester pod and exec into it:

|

||||

|

||||

```bash

|

||||

kubectl -n test run tester --image=quay.io/stefanprodan/podinfo:1.2.1 -- ./podinfo --port=9898

|

||||

kubectl -n test exec -it tester-xx-xx sh

|

||||

```

|

||||

|

||||

Generate HTTP 500 errors:

|

||||

|

||||

```bash

|

||||

watch curl http://podinfo-canary:9898/status/500

|

||||

```

|

||||

|

||||

Generate latency:

|

||||

|

||||

```bash

|

||||

watch curl http://podinfo-canary:9898/delay/1

|

||||

```

|

||||

|

||||

When the number of failed checks reaches the canary analysis threshold, the traffic is routed back to the primary,

|

||||

the canary is scaled to zero and the rollout is marked as failed.

|

||||

|

||||

```

|

||||

kubectl -n test describe canary/podinfo

|

||||

|

||||

Status:

|

||||

Canary Weight: 0

|

||||

Failed Checks: 10

|

||||

Last Transition Time: 2019-01-16T13:47:16Z

|

||||

Phase: Failed

|

||||

Events:

|

||||

Type Reason Age From Message

|

||||

---- ------ ---- ---- -------

|

||||

Normal Synced 3m flagger Starting canary deployment for podinfo.test

|

||||

Normal Synced 3m flagger Advance podinfo.test canary weight 5

|

||||

Normal Synced 3m flagger Advance podinfo.test canary weight 10

|

||||

Normal Synced 3m flagger Advance podinfo.test canary weight 15

|

||||

Normal Synced 3m flagger Halt podinfo.test advancement success rate 69.17% < 99%

|

||||

Normal Synced 2m flagger Halt podinfo.test advancement success rate 61.39% < 99%

|

||||

Normal Synced 2m flagger Halt podinfo.test advancement success rate 55.06% < 99%

|

||||

Normal Synced 2m flagger Halt podinfo.test advancement success rate 47.00% < 99%

|

||||

Normal Synced 2m flagger (combined from similar events): Halt podinfo.test advancement success rate 38.08% < 99%

|

||||

Warning Synced 1m flagger Rolling back podinfo.test failed checks threshold reached 10

|

||||

Warning Synced 1m flagger Canary failed! Scaling down podinfo.test

|

||||

```

|

||||

|

||||

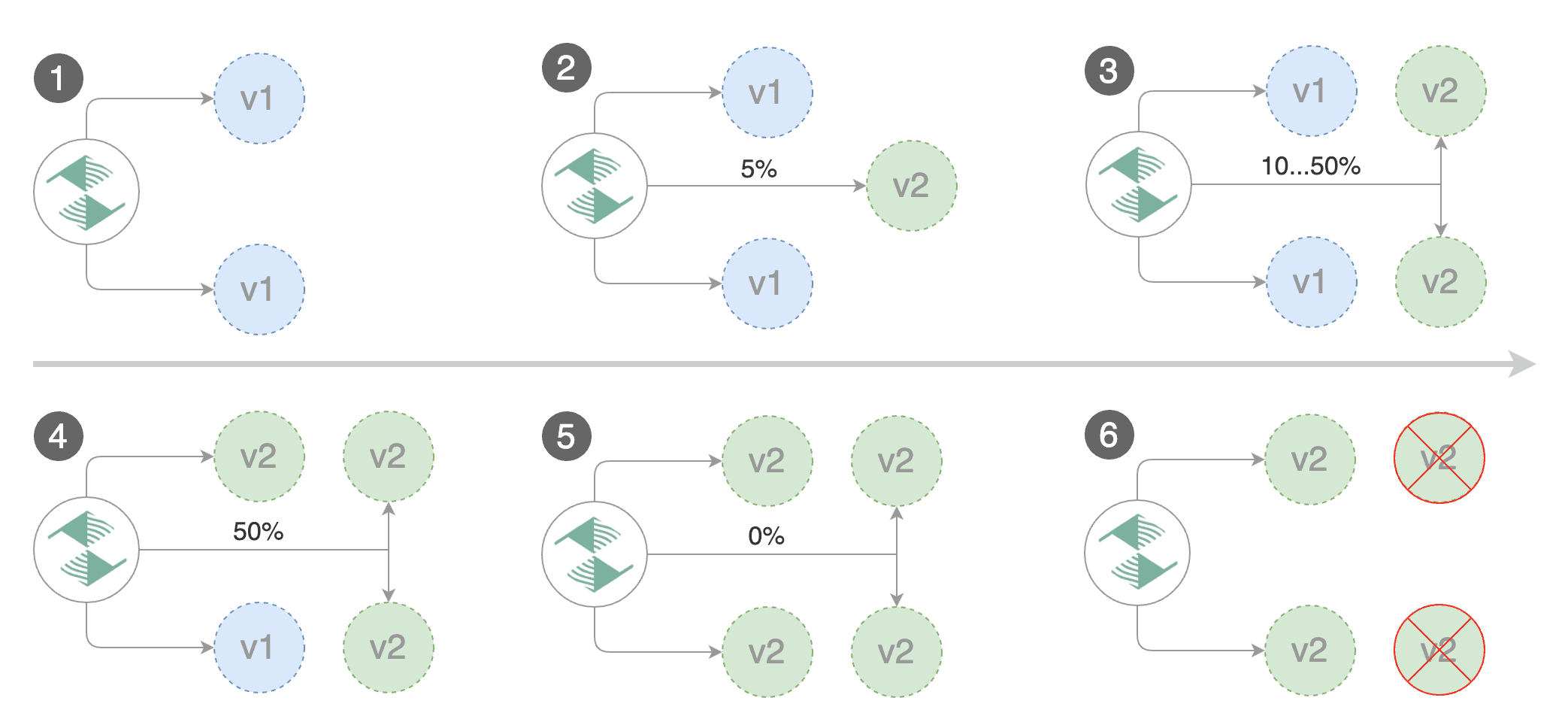

### Monitoring

|

||||

|

||||

Flagger comes with a Grafana dashboard made for canary analysis.

|

||||

|

||||

Install Grafana with Helm:

|

||||

|

||||

```bash

|

||||

helm upgrade -i flagger-grafana flagger/grafana \

|

||||

--namespace=istio-system \

|

||||

--set url=http://prometheus.istio-system:9090

|

||||

```

|

||||

|

||||

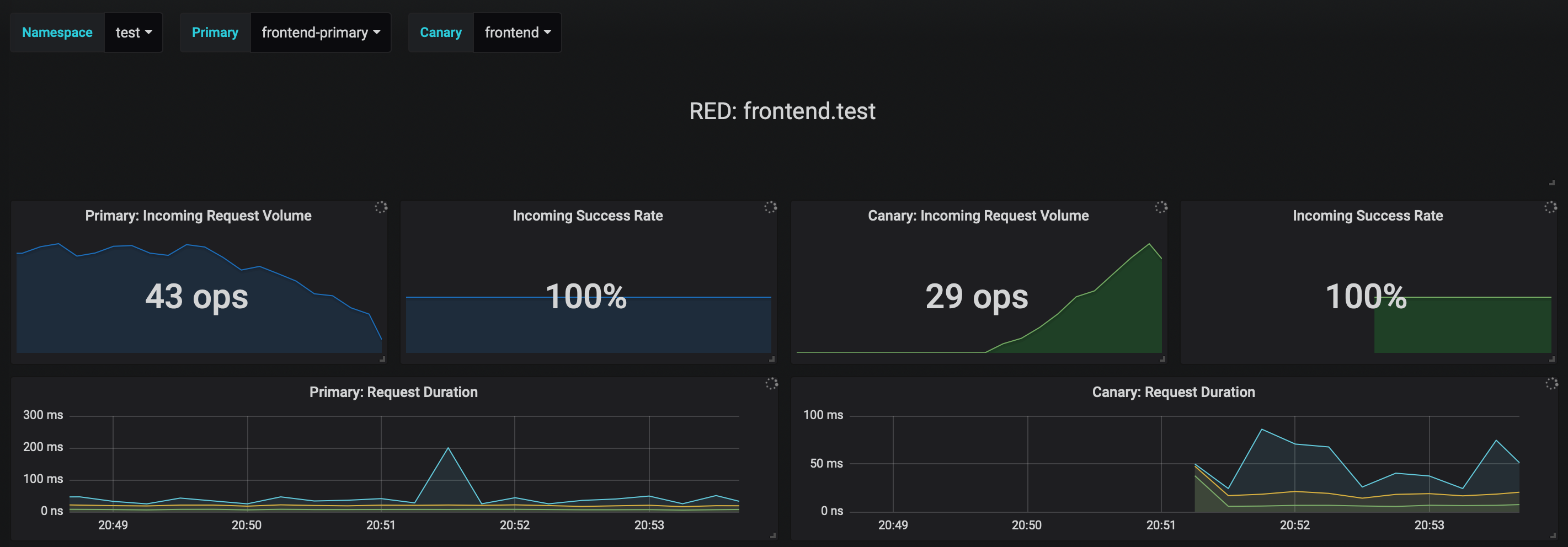

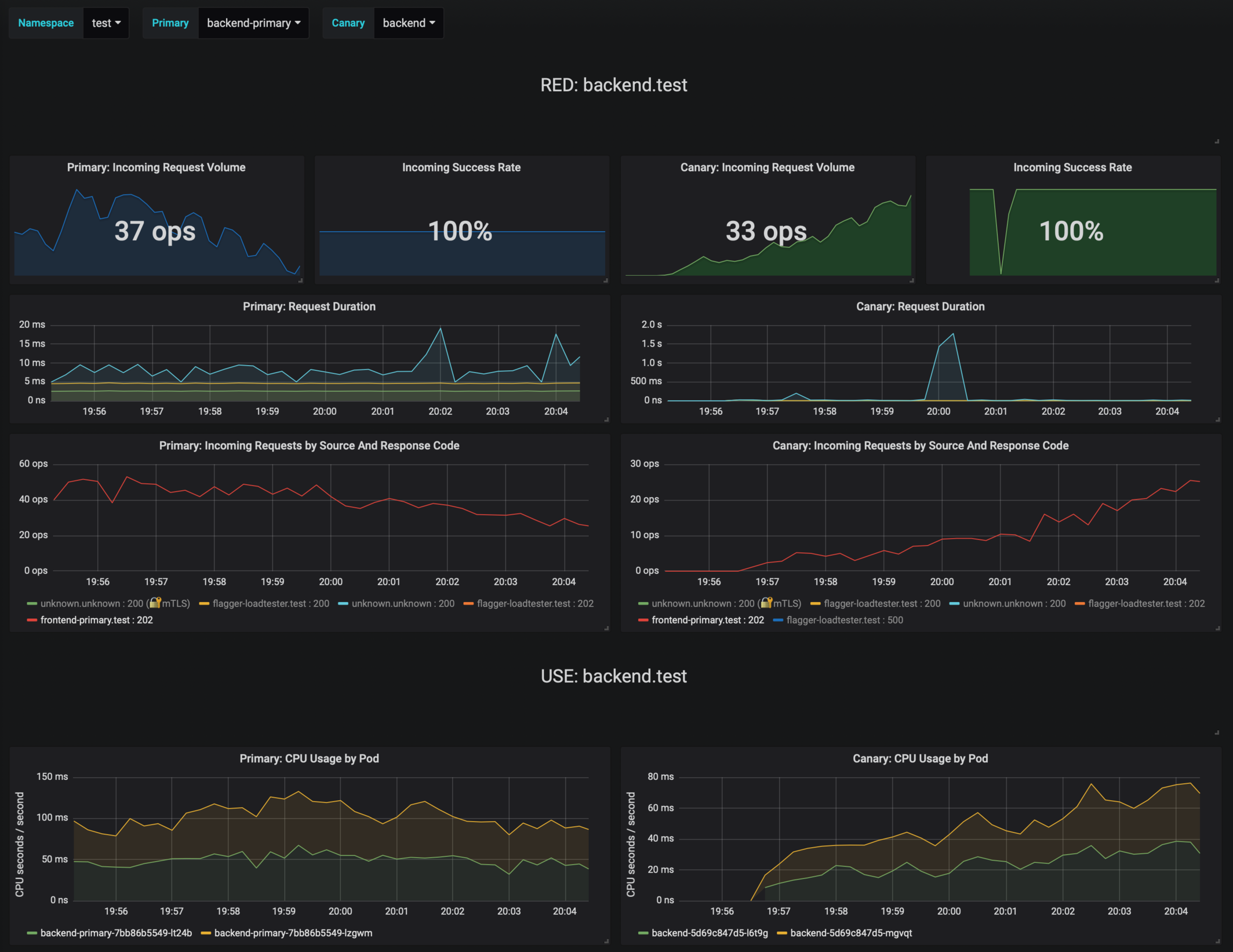

The dashboard shows the RED and USE metrics for the primary and canary workloads:

|

||||

|

||||

|

||||

|

||||

The canary errors and latency spikes have been recorded as Kubernetes events and logged by Flagger in JSON format:

|

||||

|

||||

```

|

||||

kubectl -n istio-system logs deployment/flagger --tail=100 | jq .msg

|

||||

|

||||

Starting canary deployment for podinfo.test

|

||||

Advance podinfo.test canary weight 5

|

||||

Advance podinfo.test canary weight 10

|

||||

Advance podinfo.test canary weight 15

|

||||

Advance podinfo.test canary weight 20

|

||||

Advance podinfo.test canary weight 25

|

||||

Advance podinfo.test canary weight 30

|

||||

Advance podinfo.test canary weight 35

|

||||

Halt podinfo.test advancement success rate 98.69% < 99%

|

||||

Advance podinfo.test canary weight 40

|

||||

Halt podinfo.test advancement request duration 1.515s > 500ms

|

||||

Advance podinfo.test canary weight 45

|

||||

Advance podinfo.test canary weight 50

|

||||

Copying podinfo.test template spec to podinfo-primary.test

|

||||

Halt podinfo-primary.test advancement waiting for rollout to finish: 1 old replicas are pending termination

|

||||

Scaling down podinfo.test

|

||||

Promotion completed! podinfo.test

|

||||

```

|

||||

|

||||

Flagger exposes Prometheus metrics that can be used to determine the canary analysis status and the destination weight values:

|

||||

|

||||

```bash

|

||||

# Canaries total gauge

|

||||

flagger_canary_total{namespace="test"} 1

|

||||

|

||||

# Canary promotion last known status gauge

|

||||

# 0 - running, 1 - successful, 2 - failed

|

||||

flagger_canary_status{name="podinfo",namespace="test"} 1

|

||||

|

||||

# Canary traffic weight gauge

|

||||

flagger_canary_weight{workload="podinfo-primary",namespace="test"} 95

|

||||

flagger_canary_weight{workload="podinfo",namespace="test"} 5

|

||||

|

||||

# Seconds spent performing canary analysis histogram

|

||||

flagger_canary_duration_seconds_bucket{name="podinfo",namespace="test",le="10"} 6

|

||||

flagger_canary_duration_seconds_bucket{name="podinfo",namespace="test",le="+Inf"} 6

|

||||

flagger_canary_duration_seconds_sum{name="podinfo",namespace="test"} 17.3561329

|

||||

flagger_canary_duration_seconds_count{name="podinfo",namespace="test"} 6

|

||||

```

|

||||

|

||||

### Alerting

|

||||

|

||||

Flagger can be configured to send Slack notifications:

|

||||

|

||||

```bash

|

||||

helm upgrade -i flagger flagger/flagger \

|

||||

--namespace=istio-system \

|

||||

--set slack.url=https://hooks.slack.com/services/YOUR/SLACK/WEBHOOK \

|

||||

--set slack.channel=general \

|

||||

--set slack.user=flagger

|

||||

```

|

||||

|

||||

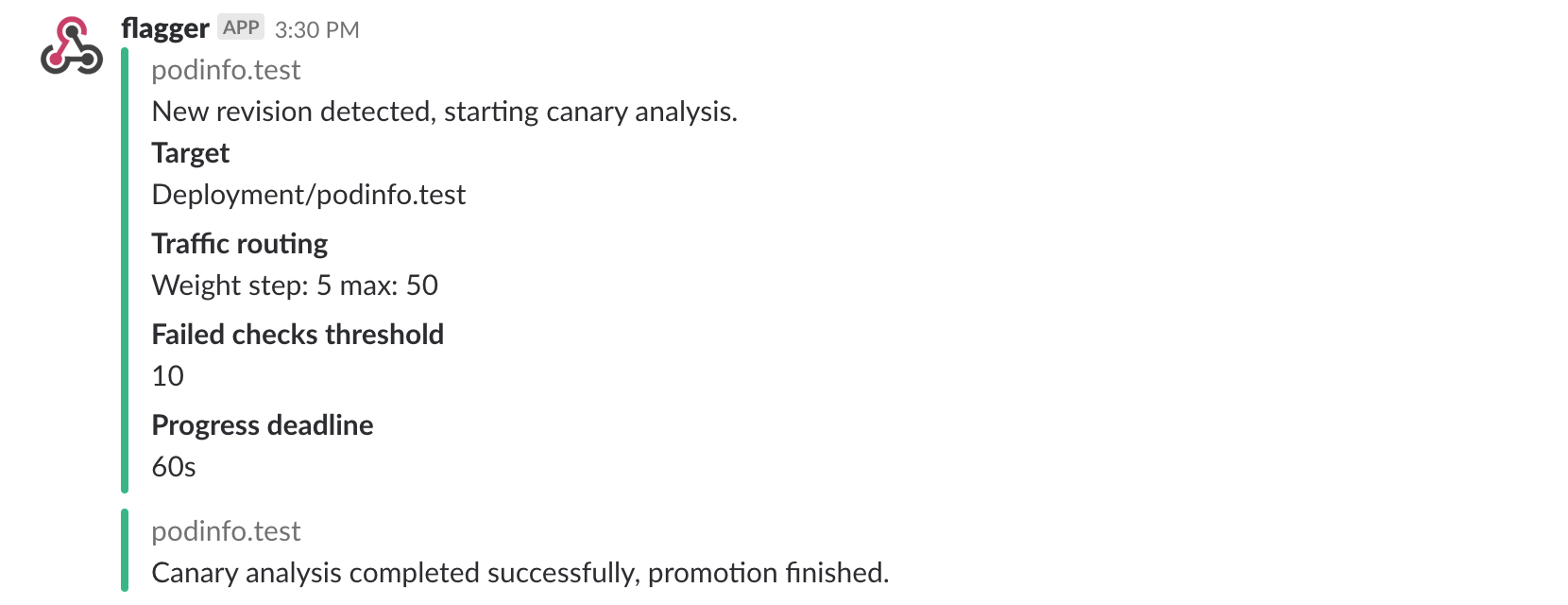

Once configured with a Slack incoming webhook, Flagger will post messages when a canary deployment has been initialized,

|

||||

when a new revision has been detected and if the canary analysis failed or succeeded.

|

||||

|

||||

|

||||

|

||||

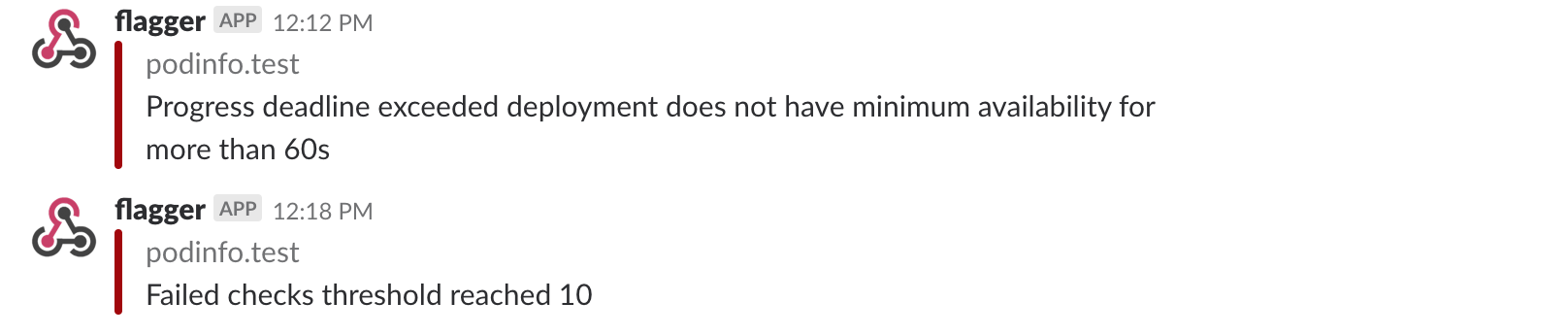

A canary deployment will be rolled back if the progress deadline is exceeded or if the analysis

|

||||

reached the maximum number of failed checks:

|

||||

|

||||

|

||||

|

||||

Besides Slack, you can use Alertmanager to trigger alerts when a canary deployment fails:

```yaml
  - alert: canary_rollback
    expr: flagger_canary_status > 1
    for: 1m
    labels:
      severity: warning
    annotations:
      summary: "Canary failed"
      description: "Workload {{ $labels.name }} namespace {{ $labels.namespace }}"
```

For more details on how canary analysis and promotion work, please [read the docs](https://docs.flagger.app/how-it-works).

### Roadmap


* Extend the validation mechanism to support metrics other than HTTP success rate and latency
* Add A/B testing capabilities using fixed routing based on HTTP header and cookie match conditions
* Add support for comparing the canary metrics to the primary ones and validating based on the deviation between the two
* Extend canary analysis and promotion to types other than Kubernetes deployments, such as Flux Helm releases or OpenFaaS functions

### Contributing


@@ -25,7 +25,17 @@ spec:

     - public-gateway.istio-system.svc.cluster.local
     # Istio virtual service host names (optional)
     hosts:
-    - app.iowa.weavedx.com
+    - app.istio.weavedx.com
+    # Istio virtual service HTTP match conditions (optional)
+    match:
+      - uri:
+          prefix: /
+    # Istio virtual service HTTP rewrite (optional)
+    rewrite:
+      uri: /
+  # for emergency cases when you want to ship changes
+  # in production without analysing the canary
+  skipAnalysis: false
   canaryAnalysis:
     # schedule interval (default 60s)
     interval: 10s

@@ -25,7 +25,7 @@ spec:

     spec:
       containers:
       - name: podinfod
-        image: quay.io/stefanprodan/podinfo:1.3.0
+        image: quay.io/stefanprodan/podinfo:1.4.0
         imagePullPolicy: IfNotPresent
         ports:
         - containerPort: 9898

6

artifacts/cluster/namespaces/test.yaml

Normal file

@@ -0,0 +1,6 @@

apiVersion: v1
kind: Namespace
metadata:
  name: test
  labels:
    istio-injection: enabled

26

artifacts/cluster/releases/test/backend.yaml

Normal file

@@ -0,0 +1,26 @@

apiVersion: flux.weave.works/v1beta1
kind: HelmRelease
metadata:
  name: backend
  namespace: test
  annotations:
    flux.weave.works/automated: "true"
    flux.weave.works/tag.chart-image: regexp:^1.4.*
spec:
  releaseName: backend
  chart:
    repository: https://flagger.app/
    name: podinfo
    version: 2.0.0
  values:
    image:
      repository: quay.io/stefanprodan/podinfo
      tag: 1.4.0
    httpServer:
      timeout: 30s
    canary:
      enabled: true
      istioIngress:
        enabled: false
      loadtest:
        enabled: true

27

artifacts/cluster/releases/test/frontend.yaml

Normal file

@@ -0,0 +1,27 @@

apiVersion: flux.weave.works/v1beta1
kind: HelmRelease
metadata:
  name: frontend
  namespace: test
  annotations:
    flux.weave.works/automated: "true"
    flux.weave.works/tag.chart-image: semver:~1.4
spec:
  releaseName: frontend
  chart:
    repository: https://flagger.app/
    name: podinfo
    version: 2.0.0
  values:
    image:
      repository: quay.io/stefanprodan/podinfo
      tag: 1.4.0
    backend: http://backend-podinfo:9898/echo
    canary:
      enabled: true
      istioIngress:
        enabled: true
        gateway: public-gateway.istio-system.svc.cluster.local
        host: frontend.istio.example.com
      loadtest:
        enabled: true

18

artifacts/cluster/releases/test/loadtester.yaml

Normal file

@@ -0,0 +1,18 @@

apiVersion: flux.weave.works/v1beta1
kind: HelmRelease
metadata:
  name: loadtester
  namespace: test
  annotations:
    flux.weave.works/automated: "true"
    flux.weave.works/tag.chart-image: glob:0.*
spec:
  releaseName: flagger-loadtester
  chart:
    repository: https://flagger.app/
    name: loadtester
    version: 0.1.0
  values:
    image:
      repository: quay.io/stefanprodan/flagger-loadtester
      tag: 0.1.0
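The three HelmRelease manifests use three different tag filter styles in the `flux.weave.works/tag.chart-image` annotation: `regexp:^1.4.*`, `semver:~1.4` and `glob:0.*`. The Python sketch below illustrates how such filters could select image tags. It is an approximation for illustration only: `tag_matches` and `parse_version` are hypothetical helpers, the tilde range is a simplified reading (`~1.4` taken as `>=1.4.0, <1.5.0`), and Flux's own matching rules may differ in detail.

```python
import fnmatch
import re


def parse_version(tag):
    """Parse 'MAJOR.MINOR.PATCH' into a tuple of ints, or None if it does not fit."""
    parts = tag.split(".")
    if len(parts) != 3 or not all(p.isdigit() for p in parts):
        return None
    return tuple(int(p) for p in parts)


def tag_matches(pattern, tag):
    """Return True if `tag` passes a 'kind:expression' filter (simplified)."""
    kind, _, expr = pattern.partition(":")
    if kind == "glob":
        return fnmatch.fnmatchcase(tag, expr)
    if kind == "regexp":
        return re.search(expr, tag) is not None
    if kind == "semver" and expr.startswith("~"):
        # Only '~MAJOR.MINOR' and '~MAJOR.MINOR.PATCH' are handled here.
        lower = [int(p) for p in expr[1:].split(".")]
        if len(lower) < 2:
            raise ValueError("sketch needs at least MAJOR.MINOR: " + expr)
        lower += [0] * (3 - len(lower))
        version = parse_version(tag)
        if version is None:
            return False
        return tuple(lower) <= version and version[:2] == tuple(lower[:2])
    raise ValueError("unsupported filter: " + pattern)


tags = ["1.3.0", "1.4.0", "1.4.2", "1.5.0", "104.0", "0.1.0"]
for pattern in ("regexp:^1.4.*", "semver:~1.4", "glob:0.*"):
    print(pattern, [t for t in tags if tag_matches(pattern, t)])
```

One thing the sketch makes visible: the dots in `^1.4.*` are unescaped, so under ordinary regular expression semantics the pattern also accepts a tag such as `104.0`. Writing `^1\.4\..*` would be stricter, if that is the intent.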

58

artifacts/configs/canary.yaml

Normal file

@@ -0,0 +1,58 @@

apiVersion: flagger.app/v1alpha3
kind: Canary
metadata:
  name: podinfo
  namespace: test
spec:
  # deployment reference
  targetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: podinfo
  # the maximum time in seconds for the canary deployment
  # to make progress before it is rolled back (default 600s)
  progressDeadlineSeconds: 60
  # HPA reference (optional)
  autoscalerRef:
    apiVersion: autoscaling/v2beta1
    kind: HorizontalPodAutoscaler
    name: podinfo
  service:
    # container port
    port: 9898
    # Istio gateways (optional)
    gateways:
    - public-gateway.istio-system.svc.cluster.local
    # Istio virtual service host names (optional)
    hosts:
    - app.iowa.weavedx.com
  canaryAnalysis:
    # schedule interval (default 60s)
    interval: 10s
    # max number of failed metric checks before rollback
    threshold: 10
    # max traffic percentage routed to canary
    # percentage (0-100)
    maxWeight: 50
    # canary increment step
    # percentage (0-100)
    stepWeight: 5
    # Istio Prometheus checks
    metrics:
    - name: istio_requests_total
      # minimum req success rate (non 5xx responses)
      # percentage (0-100)
      threshold: 99
      interval: 1m
    - name: istio_request_duration_seconds_bucket
      # maximum req duration P99
      # milliseconds
      threshold: 500
      interval: 30s
    # external checks (optional)
    webhooks:
      - name: load-test
        url: http://flagger-loadtester.test/
        timeout: 5s
        metadata:
          cmd: "hey -z 1m -q 10 -c 2 http://podinfo.test:9898/"
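To make the `canaryAnalysis` settings in this manifest concrete, here is a small Python sketch of the traffic-shifting schedule they imply. It assumes the canary weight grows by `stepWeight` once per `interval` until it reaches `maxWeight`, and that a rollback is triggered once `threshold` checks have failed. The function names are hypothetical and the timings are lower bounds under those assumptions, not measurements.

```python
def weight_steps(step_weight, max_weight):
    """Canary traffic percentages visited during a healthy analysis."""
    return list(range(step_weight, max_weight + 1, step_weight))


def min_promotion_seconds(interval_seconds, step_weight, max_weight):
    """Shortest analysis time: one interval per weight step."""
    return interval_seconds * len(weight_steps(step_weight, max_weight))


def rollback_seconds(interval_seconds, threshold):
    """Time to rollback if every check fails: one interval per failed check."""
    return interval_seconds * threshold


# Values taken from artifacts/configs/canary.yaml.
INTERVAL, THRESHOLD, MAX_WEIGHT, STEP_WEIGHT = 10, 10, 50, 5

for canary in weight_steps(STEP_WEIGHT, MAX_WEIGHT):
    print(f"primary={100 - canary}% canary={canary}%")

print("minimum promotion time (s):", min_promotion_seconds(INTERVAL, STEP_WEIGHT, MAX_WEIGHT))
print("rollback after (s):", rollback_seconds(INTERVAL, THRESHOLD))
```

With a 10s interval, ten weight steps and a threshold of ten failed checks, both figures come out at 100 seconds for this configuration.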

16

artifacts/configs/configs.yaml

Normal file

@@ -0,0 +1,16 @@

apiVersion: v1
kind: ConfigMap
metadata:
  name: podinfo-config-env
  namespace: test
data:
  color: blue
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: podinfo-config-vol
  namespace: test
data:
  output: console
  textmode: "true"

89

artifacts/configs/deployment.yaml

Normal file

@@ -0,0 +1,89 @@

apiVersion: apps/v1
kind: Deployment
metadata:
  name: podinfo
  namespace: test
  labels:
    app: podinfo
spec:
  minReadySeconds: 5
  revisionHistoryLimit: 5
  progressDeadlineSeconds: 60
  strategy:
    rollingUpdate:
      maxUnavailable: 0
    type: RollingUpdate
  selector:
    matchLabels:
      app: podinfo
  template:
    metadata:
      annotations:
        prometheus.io/scrape: "true"
      labels:
        app: podinfo
    spec:
      containers:
      - name: podinfod
        image: quay.io/stefanprodan/podinfo:1.3.0
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 9898
          name: http
          protocol: TCP
        command:
        - ./podinfo
        - --port=9898
        - --level=info
        - --random-delay=false
        - --random-error=false
        env:
        - name: PODINFO_UI_COLOR
          valueFrom:
            configMapKeyRef:
              name: podinfo-config-env
              key: color
        - name: SECRET_USER
          valueFrom:
            secretKeyRef:
              name: podinfo-secret-env
              key: user
        livenessProbe:
          exec:
            command:
            - podcli
            - check
            - http
            - localhost:9898/healthz
          initialDelaySeconds: 5
          timeoutSeconds: 5
        readinessProbe:
          exec:
            command:
            - podcli
            - check
            - http
            - localhost:9898/readyz
          initialDelaySeconds: 5
          timeoutSeconds: 5
        resources:
          limits:
            cpu: 2000m
            memory: 512Mi
          requests:
            cpu: 100m
            memory: 64Mi
        volumeMounts:
        - name: configs
          mountPath: /etc/podinfo/configs
          readOnly: true
        - name: secrets
          mountPath: /etc/podinfo/secrets
          readOnly: true
      volumes:
      - name: configs
        configMap:
          name: podinfo-config-vol
      - name: secrets
        secret:
          secretName: podinfo-secret-vol

19

artifacts/configs/hpa.yaml

Normal file

@@ -0,0 +1,19 @@

apiVersion: autoscaling/v2beta1
kind: HorizontalPodAutoscaler
metadata:
  name: podinfo
  namespace: test
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: podinfo
  minReplicas: 1
  maxReplicas: 4
  metrics:
  - type: Resource
    resource:
      name: cpu
      # scale up if usage is above
      # 99% of the requested CPU (100m)
      targetAverageUtilization: 99
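The comment in this HPA manifest says the deployment scales up once average CPU usage exceeds 99% of the requested 100m. The Python sketch below works through the replica calculation using the formula given in the Kubernetes documentation, `ceil(currentReplicas * currentMetric / targetMetric)`, clamped to `minReplicas` and `maxReplicas`. The function name is hypothetical and the 10% tolerance is an assumed default; the real controller also applies stabilization and readiness rules that are left out here.

```python
import math


def desired_replicas(current_replicas, current_utilization,
                     target_utilization=99, min_replicas=1, max_replicas=4,
                     tolerance=0.1):
    """Approximate the HPA replica count for a CPU utilization target.

    Utilization values are percentages of the requested CPU (100m here).
    """
    ratio = current_utilization / target_utilization
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: keep the current replica count.
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


# One pod running at 250% of its CPU request.
print(desired_replicas(1, 250))
# Two pods at 300%: the raw result exceeds maxReplicas and is clamped.
print(desired_replicas(2, 300))
# Three mostly idle pods scale back towards minReplicas.
print(desired_replicas(3, 20))
```

Because the target is so close to 100% of a small request, short CPU bursts in the load test can be enough to add replicas during the canary analysis.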

16

artifacts/configs/secrets.yaml

Normal file

@@ -0,0 +1,16 @@

apiVersion: v1
kind: Secret
metadata:
  name: podinfo-secret-env
  namespace: test
data:
  password: cGFzc3dvcmQ=
  user: YWRtaW4=
---
apiVersion: v1
kind: Secret
metadata:
  name: podinfo-secret-vol
  namespace: test
data:
  key: cGFzc3dvcmQ=
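The `data` values in these Secret manifests are base64-encoded, which is an encoding rather than encryption. A short Python sketch shows what the test fixtures contain; the helper names are hypothetical.

```python
import base64

# Values copied from artifacts/configs/secrets.yaml.
SECRET_ENV = {"password": "cGFzc3dvcmQ=", "user": "YWRtaW4="}


def decode_secret_data(data):
    """Decode the base64 values of a Secret's `data` map into text."""
    return {key: base64.b64decode(value).decode("utf-8")
            for key, value in data.items()}


def encode_secret_value(text):
    """Encode plain text the way a Secret's `data` field expects it."""
    return base64.b64encode(text.encode("utf-8")).decode("ascii")


print(decode_secret_data(SECRET_ENV))
print(encode_secret_value("admin"))
```

Since anyone with read access to the manifest can recover these values, real credentials are better kept out of the repository or stored in encrypted form.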

@@ -73,6 +73,8 @@ spec:

|

||||

properties:

|

||||

port:

|

||||

type: number

|

||||

skipAnalysis:

|

||||

type: boolean

|

||||

canaryAnalysis:

|

||||

properties:

|

||||

interval:

|

||||

|

||||

@@ -22,8 +22,8 @@ spec:

       serviceAccountName: flagger
       containers:
         - name: flagger
-          image: quay.io/stefanprodan/flagger:0.4.1
-          imagePullPolicy: Always
+          image: quay.io/stefanprodan/flagger:0.6.0
+          imagePullPolicy: IfNotPresent
           ports:
             - name: http
               containerPort: 8080

27

artifacts/gke/istio-gateway.yaml

Normal file

@@ -0,0 +1,27 @@

apiVersion: networking.istio.io/v1alpha3
kind: Gateway
metadata:
  name: public-gateway
  namespace: istio-system
spec:
  selector:
    istio: ingressgateway
  servers:
    - port:
        number: 80
        name: http
        protocol: HTTP
      hosts:
        - "*"
      tls:
        httpsRedirect: true
    - port:
        number: 443
        name: https
        protocol: HTTPS
      hosts:
        - "*"
      tls:
        mode: SIMPLE
        privateKey: /etc/istio/ingressgateway-certs/tls.key
        serverCertificate: /etc/istio/ingressgateway-certs/tls.crt

443

artifacts/gke/istio-prometheus.yaml

Normal file

@@ -0,0 +1,443 @@

|

||||

---

|

||||

apiVersion: rbac.authorization.k8s.io/v1beta1

|

||||

kind: ClusterRole

|

||||

metadata:

|

||||

name: prometheus

|

||||

labels:

|

||||

app: prometheus

|

||||

rules:

|

||||

- apiGroups: [""]

|

||||

resources:

|

||||

- nodes

|

||||

- services

|

||||

- endpoints

|

||||

- pods

|

||||

- nodes/proxy

|

||||

verbs: ["get", "list", "watch"]

|

||||

- apiGroups: [""]

|

||||

resources:

|

||||

- configmaps

|

||||

verbs: ["get"]

|

||||

- nonResourceURLs: ["/metrics"]

|

||||

verbs: ["get"]

|

||||

---

|

||||

apiVersion: rbac.authorization.k8s.io/v1beta1

|

||||

kind: ClusterRoleBinding

|

||||

metadata:

|

||||

name: prometheus

|

||||

labels:

|

||||

app: prometheus

|

||||

roleRef:

|

||||

apiGroup: rbac.authorization.k8s.io

|

||||

kind: ClusterRole

|

||||

name: prometheus

|

||||

subjects:

|

||||

- kind: ServiceAccount

|

||||

name: prometheus

|

||||

namespace: istio-system

|

||||

---

|

||||

apiVersion: v1

|

||||

kind: ServiceAccount

|

||||

metadata:

|

||||

name: prometheus

|

||||

namespace: istio-system

|

||||

labels:

|

||||

app: prometheus

|

||||

---

|

||||

apiVersion: v1

|

||||

kind: ConfigMap

|

||||

metadata:

|

||||

name: prometheus

|

||||

namespace: istio-system

|

||||

labels:

|

||||

app: prometheus

|

||||

data:

|

||||

prometheus.yml: |-

|

||||

global:

|

||||

scrape_interval: 15s

|

||||

scrape_configs:

|

||||

|

||||

- job_name: 'istio-mesh'

|

||||

# Override the global default and scrape targets from this job every 5 seconds.

|

||||

scrape_interval: 5s

|

||||

|

||||

kubernetes_sd_configs:

|

||||

- role: endpoints

|

||||

namespaces:

|

||||

names:

|

||||

- istio-system

|

||||

|

||||

relabel_configs:

|

||||

- source_labels: [__meta_kubernetes_service_name, __meta_kubernetes_endpoint_port_name]

|

||||

action: keep

|

||||

regex: istio-telemetry;prometheus

|

||||

|

||||

|

||||

# Scrape config for envoy stats

|

||||

- job_name: 'envoy-stats'

|

||||

metrics_path: /stats/prometheus

|

||||

kubernetes_sd_configs:

|

||||

- role: pod

|

||||

|

||||

relabel_configs:

|

||||

- source_labels: [__meta_kubernetes_pod_container_port_name]

|

||||

action: keep

|

||||

regex: '.*-envoy-prom'

|

||||

- source_labels: [__address__, __meta_kubernetes_pod_annotation_prometheus_io_port]

|

||||

action: replace

|

||||

regex: ([^:]+)(?::\d+)?;(\d+)

|

||||

replacement: $1:15090

|

||||

target_label: __address__

|

||||

- action: labelmap

|

||||

regex: __meta_kubernetes_pod_label_(.+)

|

||||

- source_labels: [__meta_kubernetes_namespace]

|

||||

action: replace

|

||||

target_label: namespace

|

||||

- source_labels: [__meta_kubernetes_pod_name]

|

||||

action: replace

|

||||

target_label: pod_name

|

||||

|

||||

metric_relabel_configs:

|

||||

# Exclude some of the envoy metrics that have massive cardinality

|

||||

# This list may need to be pruned further moving forward, as informed

|

||||

# by performance and scalability testing.

|

||||

- source_labels: [ cluster_name ]

|

||||

regex: '(outbound|inbound|prometheus_stats).*'

|

||||

action: drop

|

||||

- source_labels: [ tcp_prefix ]

|

||||

regex: '(outbound|inbound|prometheus_stats).*'

|

||||

action: drop

|

||||

- source_labels: [ listener_address ]

|

||||

regex: '(.+)'

|

||||

action: drop

|

||||

- source_labels: [ http_conn_manager_listener_prefix ]

|

||||

regex: '(.+)'

|

||||

action: drop

|

||||

- source_labels: [ http_conn_manager_prefix ]

|

||||

regex: '(.+)'

|

||||

action: drop

|

||||

- source_labels: [ __name__ ]

|

||||

regex: 'envoy_tls.*'

|

||||

action: drop

|

||||

- source_labels: [ __name__ ]

|

||||

regex: 'envoy_tcp_downstream.*'

|

||||

action: drop

|

||||

- source_labels: [ __name__ ]

|

||||

regex: 'envoy_http_(stats|admin).*'

|

||||

action: drop

|

||||

- source_labels: [ __name__ ]

|

||||

regex: 'envoy_cluster_(lb|retry|bind|internal|max|original).*'

|

||||

action: drop

|

||||

|

||||

|

||||

- job_name: 'istio-policy'

|

||||

# Override the global default and scrape targets from this job every 5 seconds.

|

||||

scrape_interval: 5s

|

||||

# metrics_path defaults to '/metrics'

|

||||

# scheme defaults to 'http'.

|

||||

|

||||

kubernetes_sd_configs:

|

||||

- role: endpoints

|

||||

namespaces:

|

||||

names:

|

||||

- istio-system

|

||||

|

||||

|

||||

relabel_configs:

|

||||

- source_labels: [__meta_kubernetes_service_name, __meta_kubernetes_endpoint_port_name]

|

||||

action: keep

|

||||

regex: istio-policy;http-monitoring

|

||||

|

||||

- job_name: 'istio-telemetry'

|

||||

# Override the global default and scrape targets from this job every 5 seconds.

|

||||

scrape_interval: 5s

|

||||

# metrics_path defaults to '/metrics'

|

||||

# scheme defaults to 'http'.

|

||||

|

||||

kubernetes_sd_configs:

|

||||

- role: endpoints

|

||||

namespaces:

|

||||

names:

|

||||

- istio-system

|

||||

|

||||

relabel_configs:

|

||||

- source_labels: [__meta_kubernetes_service_name, __meta_kubernetes_endpoint_port_name]

|

||||

action: keep

|

||||

regex: istio-telemetry;http-monitoring

|

||||

|

||||

- job_name: 'pilot'

|

||||

# Override the global default and scrape targets from this job every 5 seconds.

|

||||

scrape_interval: 5s

|

||||

# metrics_path defaults to '/metrics'

|

||||

# scheme defaults to 'http'.

|

||||

|

||||

kubernetes_sd_configs:

|

||||

- role: endpoints

|

||||

namespaces:

|

||||

names:

|

||||

- istio-system

|

||||

|

||||

relabel_configs:

|

||||

- source_labels: [__meta_kubernetes_service_name, __meta_kubernetes_endpoint_port_name]

|

||||

action: keep

|

||||

regex: istio-pilot;http-monitoring

|

||||

|

||||

- job_name: 'galley'

|

||||

# Override the global default and scrape targets from this job every 5 seconds.

|

||||

scrape_interval: 5s

|

||||

# metrics_path defaults to '/metrics'

|

||||

# scheme defaults to 'http'.

|

||||

|

||||

kubernetes_sd_configs:

|

||||

- role: endpoints

|

||||

namespaces:

|

||||

names:

|

||||

- istio-system

|

||||

|

||||

relabel_configs:

|

||||

- source_labels: [__meta_kubernetes_service_name, __meta_kubernetes_endpoint_port_name]

|

||||

action: keep

|

||||

regex: istio-galley;http-monitoring

|

||||

|

||||

# scrape config for API servers

|

||||

- job_name: 'kubernetes-apiservers'

|

||||

kubernetes_sd_configs:

|

||||

- role: endpoints

|

||||

namespaces:

|

||||

names:

|

||||

- default

|

||||

scheme: https

|

||||

tls_config:

|

||||

ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt

|

||||

bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token

|

||||

relabel_configs:

|

||||

- source_labels: [__meta_kubernetes_service_name, __meta_kubernetes_endpoint_port_name]

|

||||

action: keep

|

||||

regex: kubernetes;https

|

||||

|

||||

# scrape config for nodes (kubelet)

|

||||

- job_name: 'kubernetes-nodes'

|

||||

scheme: https

|

||||

tls_config:

|

||||

ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt

|

||||

bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token

|

||||

kubernetes_sd_configs:

|

||||

- role: node

|

||||

relabel_configs:

|

||||

- action: labelmap

|

||||

regex: __meta_kubernetes_node_label_(.+)

|

||||

- target_label: __address__

|

||||

replacement: kubernetes.default.svc:443

|

||||

- source_labels: [__meta_kubernetes_node_name]

|

||||

regex: (.+)

|

||||

target_label: __metrics_path__

|

||||

replacement: /api/v1/nodes/${1}/proxy/metrics

|

||||

|

||||

# Scrape config for Kubelet cAdvisor.

|

||||

#

|

||||

# This is required for Kubernetes 1.7.3 and later, where cAdvisor metrics

|

||||

# (those whose names begin with 'container_') have been removed from the

|

||||

# Kubelet metrics endpoint. This job scrapes the cAdvisor endpoint to

|

||||

# retrieve those metrics.

|

||||

#

|

||||

# In Kubernetes 1.7.0-1.7.2, these metrics are only exposed on the cAdvisor

|

||||

# HTTP endpoint; use "replacement: /api/v1/nodes/${1}:4194/proxy/metrics"

|

||||

# in that case (and ensure cAdvisor's HTTP server hasn't been disabled with

|

||||

# the --cadvisor-port=0 Kubelet flag).

|

||||

#

|

||||

# This job is not necessary and should be removed in Kubernetes 1.6 and

|

||||

# earlier versions, or it will cause the metrics to be scraped twice.

|

||||

- job_name: 'kubernetes-cadvisor'

|

||||

scheme: https

|

||||

tls_config:

|

||||

ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt

|

||||

bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token

|

||||

kubernetes_sd_configs:

|

||||

- role: node

|

||||

relabel_configs:

|

||||

- action: labelmap

|

||||

regex: __meta_kubernetes_node_label_(.+)

|

||||

- target_label: __address__

|

||||

replacement: kubernetes.default.svc:443

|

||||

- source_labels: [__meta_kubernetes_node_name]

|

||||

regex: (.+)

|

||||

target_label: __metrics_path__

|

||||

replacement: /api/v1/nodes/${1}/proxy/metrics/cadvisor

|

||||

|

||||

# scrape config for service endpoints.

|

||||

- job_name: 'kubernetes-service-endpoints'

|

||||

kubernetes_sd_configs:

|

||||

- role: endpoints

|

||||

relabel_configs:

|

||||

- source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scrape]

|

||||

action: keep

|

||||

regex: true

|

||||

- source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scheme]

|

||||

action: replace

|

||||

target_label: __scheme__

|

||||

regex: (https?)

|

||||

- source_labels: [__meta_kubernetes_service_annotation_prometheus_io_path]

|

||||

action: replace

|

||||

target_label: __metrics_path__

|

||||

regex: (.+)

|

||||

- source_labels: [__address__, __meta_kubernetes_service_annotation_prometheus_io_port]

|

||||

action: replace

|

||||

target_label: __address__

|

||||

regex: ([^:]+)(?::\d+)?;(\d+)

|

||||

replacement: $1:$2

|

||||

- action: labelmap

|

||||

regex: __meta_kubernetes_service_label_(.+)

|

||||

- source_labels: [__meta_kubernetes_namespace]

|

||||

action: replace

|

||||

target_label: kubernetes_namespace

|

||||

- source_labels: [__meta_kubernetes_service_name]

|

||||

action: replace

|

||||

target_label: kubernetes_name

|

||||

|

||||

- job_name: 'kubernetes-pods'

|

||||

kubernetes_sd_configs:

|

||||

- role: pod

|

||||

relabel_configs: # If first two labels are present, pod should be scraped by the istio-secure job.

|

||||

- source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_scrape]

|

||||

action: keep

|

||||

regex: true

|

||||

- source_labels: [__meta_kubernetes_pod_annotation_sidecar_istio_io_status]

|

||||

action: drop

|

||||

regex: (.+)

|

||||

- source_labels: [__meta_kubernetes_pod_annotation_istio_mtls]

|

||||

action: drop

|

||||

regex: (true)

|

||||

- source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_path]

|

||||

action: replace

|

||||

target_label: __metrics_path__

|

||||

regex: (.+)

|

||||

- source_labels: [__address__, __meta_kubernetes_pod_annotation_prometheus_io_port]

|

||||

action: replace

|

||||