mirror of

https://github.com/fluxcd/flagger.git

synced 2026-02-15 18:40:12 +00:00

Compare commits

15 Commits

| Author | SHA1 | Date |
|---|---|---|
| | 456d914c35 | |
| | 737507b0fe | |
| | 4bcf82d295 | |
| | e9cd7afc8a | |
| | 0830abd51d | |
| | 5b296e01b3 | |
| | 3fd039afd1 | |
| | 5904348ba5 | |
| | 1a98e93723 | |
| | c9685fbd13 | |
| | dc347e273d | |
| | 8170916897 | |
| | 71cd4e0cb7 | |
| | 0109788ccc | |
| | 1649dea468 | |

1

Makefile

@@ -46,6 +46,7 @@ version-set:
	sed -i '' "s/flagger:$$current/flagger:$$next/g" artifacts/flagger/deployment.yaml && \
	sed -i '' "s/tag: $$current/tag: $$next/g" charts/flagger/values.yaml && \
	sed -i '' "s/appVersion: $$current/appVersion: $$next/g" charts/flagger/Chart.yaml && \
	sed -i '' "s/version: $$current/version: $$next/g" charts/flagger/Chart.yaml && \
	echo "Version $$next set in code, deployment and charts"

version-up:

16

README.md

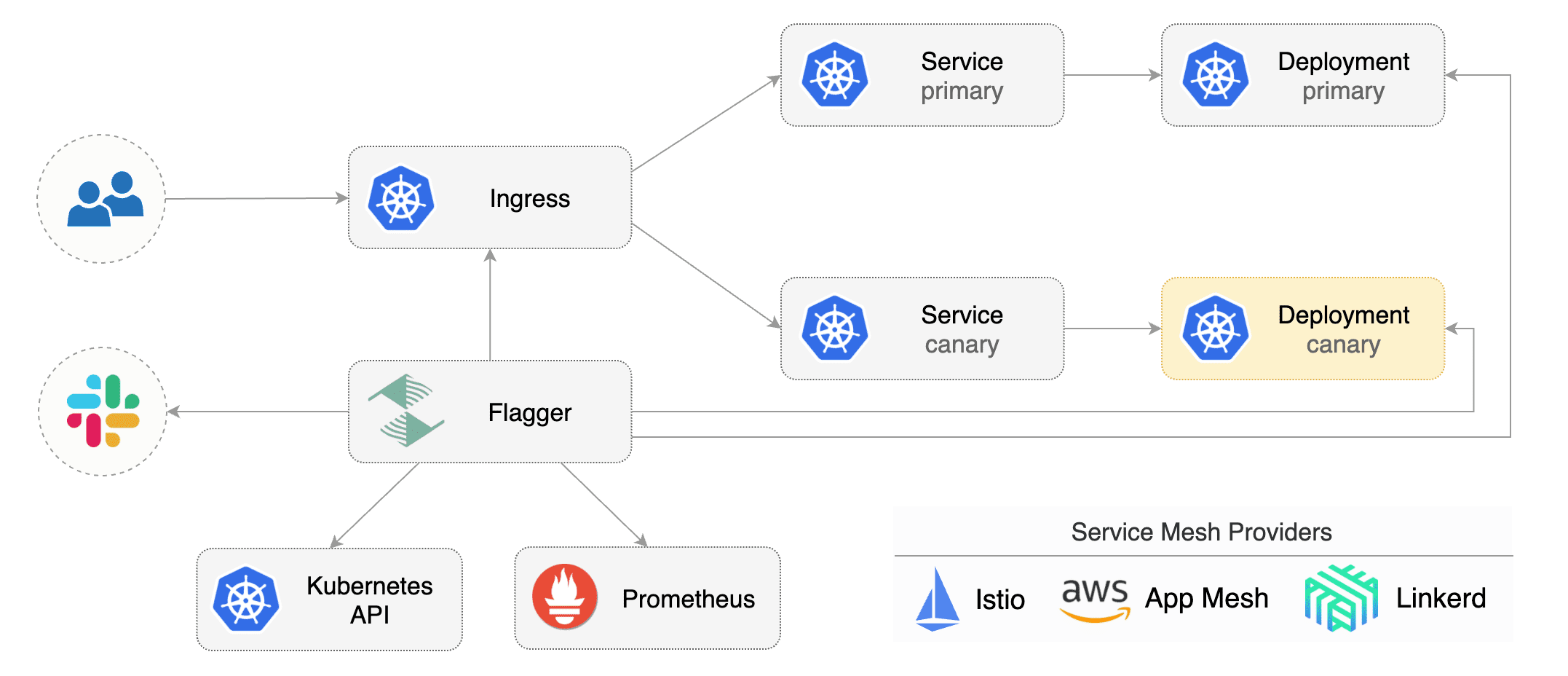

@@ -38,6 +38,9 @@ ClusterIP [services](https://kubernetes.io/docs/concepts/services-networking/ser

|

||||

Istio [virtual services](https://istio.io/docs/reference/config/istio.networking.v1alpha3/#VirtualService))

|

||||

to drive the canary analysis and promotion.

|

||||

|

||||

Flagger keeps track of ConfigMaps and Secrets referenced by a Kubernetes Deployment and triggers a canary analysis if any of those objects change.

|

||||

When promoting a workload in production, both code (container images) and configuration (config maps and secrets) are synchronised.

|

||||

|

||||

|

||||

|

||||

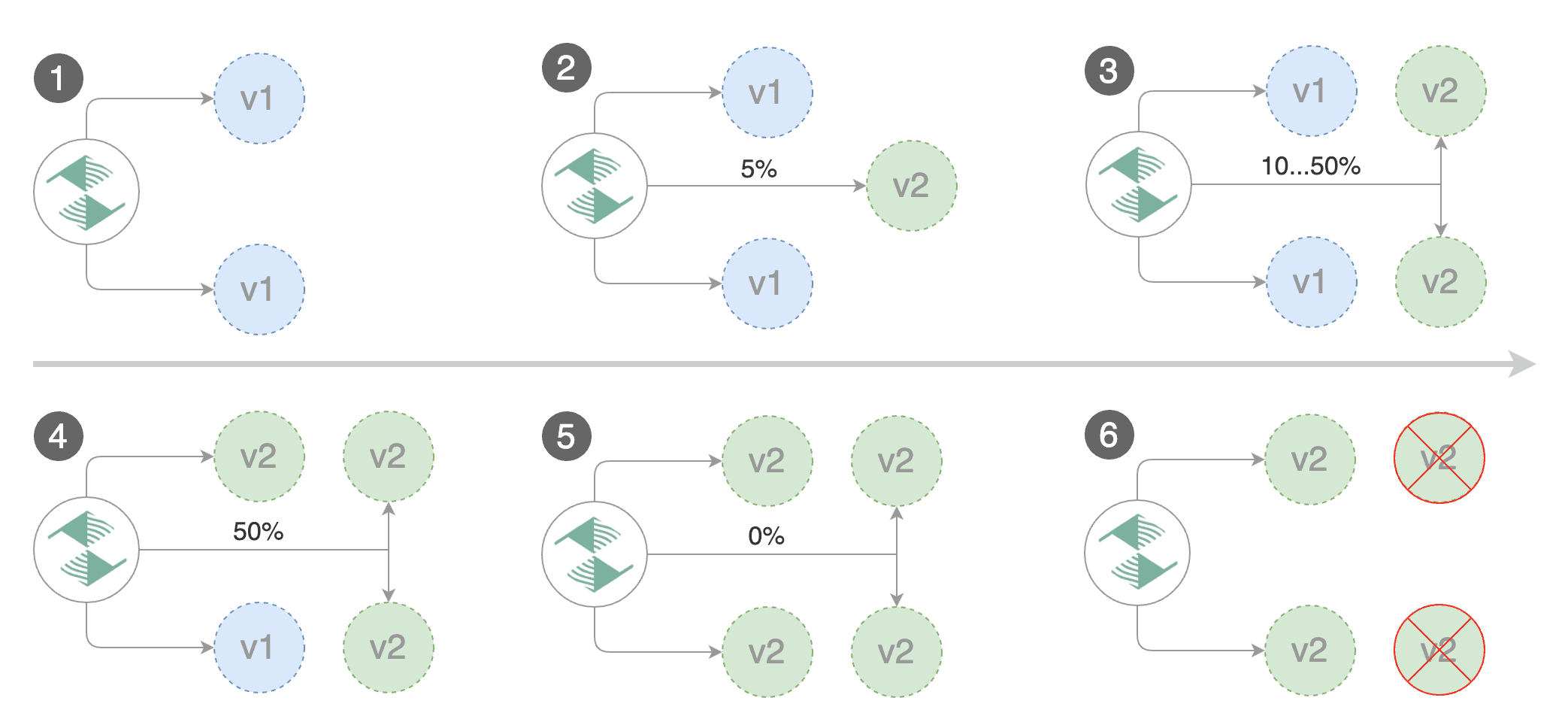

Gated canary promotion stages:

|

||||

@@ -48,28 +51,28 @@ Gated canary promotion stages:

|

||||

* halt advancement if a rolling update is underway

|

||||

* halt advancement if pods are unhealthy

|

||||

* increase canary traffic weight percentage from 0% to 5% (step weight)

|

||||

* call webhooks and check results

|

||||

* check canary HTTP request success rate and latency

|

||||

* halt advancement if any metric is under the specified threshold

|

||||

* increment the failed checks counter

|

||||

* check if the number of failed checks reached the threshold

|

||||

* route all traffic to primary

|

||||

* scale to zero the canary deployment and mark it as failed

|

||||

* wait for the canary deployment to be updated (revision bump) and start over

|

||||

* wait for the canary deployment to be updated and start over

|

||||

* increase canary traffic weight by 5% (step weight) till it reaches 50% (max weight)

|

||||

* halt advancement while canary request success rate is under the threshold

|

||||

* halt advancement while canary request duration P99 is over the threshold

|

||||

* halt advancement if the primary or canary deployment becomes unhealthy

|

||||

* halt advancement while canary deployment is being scaled up/down by HPA

|

||||

* promote canary to primary

|

||||

* copy ConfigMaps and Secrets from canary to primary

|

||||

* copy canary deployment spec template over primary

|

||||

* wait for primary rolling update to finish

|

||||

* halt advancement if pods are unhealthy

|

||||

* route all traffic to primary

|

||||

* scale to zero the canary deployment

|

||||

* mark rollout as finished

|

||||

* wait for the canary deployment to be updated (revision bump) and start over

|

||||

|

||||

You can change the canary analysis _max weight_ and the _step weight_ percentage in Flagger's custom resource.

|

||||

* wait for the canary deployment to be updated and start over

|

||||
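The weight progression in the stage list above (0% to 5%, then 5% steps up to the 50% max weight) can be sketched in Go. This is a minimal illustration, not Flagger's implementation: `weightSchedule` is a hypothetical helper, and it assumes the weight simply grows by the step weight until it reaches the max weight.

```go
package main

import "fmt"

// weightSchedule is a hypothetical helper (not part of Flagger). It lists the
// canary traffic weights visited when the weight grows from 0 by stepWeight
// until it reaches maxWeight. Weights above maxWeight are never emitted.
func weightSchedule(stepWeight, maxWeight int) []int {
	if stepWeight <= 0 || maxWeight <= 0 {
		return nil
	}
	var weights []int
	for w := stepWeight; w <= maxWeight; w += stepWeight {
		weights = append(weights, w)
	}
	return weights
}

func main() {
	// Step weight 5 and max weight 50, as in the stage list.
	schedule := weightSchedule(5, 50)
	fmt.Println("steps:", len(schedule), "weights:", schedule)
}
```

With a step weight of 5 and a max weight of 50 the schedule has ten entries, so a canary needs at least ten successful analysis intervals before promotion under this assumption.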

|

||||

For a deployment named _podinfo_, a canary promotion can be defined using Flagger's custom resource:

|

||||

|

||||

@@ -248,6 +251,9 @@ kubectl -n test set image deployment/podinfo \

|

||||

podinfod=quay.io/stefanprodan/podinfo:1.4.0

|

||||

```

|

||||

|

||||

**Note** that Flagger tracks changes in the deployment `PodSpec` but also in `ConfigMaps` and `Secrets`

|

||||

that are referenced in the pod's volumes and the containers' environment variables.

|

||||

|

||||

Flagger detects that the deployment revision changed and starts a new canary analysis:

|

||||

|

||||

```

|

||||

@@ -336,6 +342,8 @@ Events:

|

||||

Warning Synced 1m flagger Canary failed! Scaling down podinfo.test

|

||||

```

|

||||

|

||||

**Note** that if you apply new changes to the deployment during the canary analysis, Flagger will restart the analysis.

|

||||

|

||||

### Monitoring

|

||||

|

||||

Flagger comes with a Grafana dashboard made for canary analysis.

|

||||

|

||||

58

artifacts/configs/canary.yaml

Normal file

@@ -0,0 +1,58 @@

|

||||

apiVersion: flagger.app/v1alpha3

|

||||

kind: Canary

|

||||

metadata:

|

||||

name: podinfo

|

||||

namespace: test

|

||||

spec:

|

||||

# deployment reference

|

||||

targetRef:

|

||||

apiVersion: apps/v1

|

||||

kind: Deployment

|

||||

name: podinfo

|

||||

# the maximum time in seconds for the canary deployment

|

||||

# to make progress before it is rolled back (default 600s)

|

||||

progressDeadlineSeconds: 60

|

||||

# HPA reference (optional)

|

||||

autoscalerRef:

|

||||

apiVersion: autoscaling/v2beta1

|

||||

kind: HorizontalPodAutoscaler

|

||||

name: podinfo

|

||||

service:

|

||||

# container port

|

||||

port: 9898

|

||||

# Istio gateways (optional)

|

||||

gateways:

|

||||

- public-gateway.istio-system.svc.cluster.local

|

||||

# Istio virtual service host names (optional)

|

||||

hosts:

|

||||

- app.iowa.weavedx.com

|

||||

canaryAnalysis:

|

||||

# schedule interval (default 60s)

|

||||

interval: 10s

|

||||

# max number of failed metric checks before rollback

|

||||

threshold: 10

|

||||

# max traffic percentage routed to canary

|

||||

# percentage (0-100)

|

||||

maxWeight: 50

|

||||

# canary increment step

|

||||

# percentage (0-100)

|

||||

stepWeight: 5

|

||||

# Istio Prometheus checks

|

||||

metrics:

|

||||

- name: istio_requests_total

|

||||

# minimum req success rate (non 5xx responses)

|

||||

# percentage (0-100)

|

||||

threshold: 99

|

||||

interval: 1m

|

||||

- name: istio_request_duration_seconds_bucket

|

||||

# maximum req duration P99

|

||||

# milliseconds

|

||||

threshold: 500

|

||||

interval: 30s

|

||||

# external checks (optional)

|

||||

webhooks:

|

||||

- name: load-test

|

||||

url: http://flagger-loadtester.test/

|

||||

timeout: 5s

|

||||

metadata:

|

||||

cmd: "hey -z 1m -q 10 -c 2 http://podinfo.test:9898/"

|

||||

16

artifacts/configs/configs.yaml

Normal file

@@ -0,0 +1,16 @@
+apiVersion: v1
+kind: ConfigMap
+metadata:
+  name: podinfo-config-env
+  namespace: test
+data:
+  color: blue
+---
+apiVersion: v1
+kind: ConfigMap
+metadata:
+  name: podinfo-config-vol
+  namespace: test
+data:
+  output: console
+  textmode: "true"

89

artifacts/configs/deployment.yaml

Normal file

@@ -0,0 +1,89 @@

|

||||

apiVersion: apps/v1

|

||||

kind: Deployment

|

||||

metadata:

|

||||

name: podinfo

|

||||

namespace: test

|

||||

labels:

|

||||

app: podinfo

|

||||

spec:

|

||||

minReadySeconds: 5

|

||||

revisionHistoryLimit: 5

|

||||

progressDeadlineSeconds: 60

|

||||

strategy:

|

||||

rollingUpdate:

|

||||

maxUnavailable: 0

|

||||

type: RollingUpdate

|

||||

selector:

|

||||

matchLabels:

|

||||

app: podinfo

|

||||

template:

|

||||

metadata:

|

||||

annotations:

|

||||

prometheus.io/scrape: "true"

|

||||

labels:

|

||||

app: podinfo

|

||||

spec:

|

||||

containers:

|

||||

- name: podinfod

|

||||

image: quay.io/stefanprodan/podinfo:1.3.0

|

||||

imagePullPolicy: IfNotPresent

|

||||

ports:

|

||||

- containerPort: 9898

|

||||

name: http

|

||||

protocol: TCP

|

||||

command:

|

||||

- ./podinfo

|

||||

- --port=9898

|

||||

- --level=info

|

||||

- --random-delay=false

|

||||

- --random-error=false

|

||||

env:

|

||||

- name: PODINFO_UI_COLOR

|

||||

valueFrom:

|

||||

configMapKeyRef:

|

||||

name: podinfo-config-env

|

||||

key: color

|

||||

- name: SECRET_USER

|

||||

valueFrom:

|

||||

secretKeyRef:

|

||||

name: podinfo-secret-env

|

||||

key: user

|

||||

livenessProbe:

|

||||

exec:

|

||||

command:

|

||||

- podcli

|

||||

- check

|

||||

- http

|

||||

- localhost:9898/healthz

|

||||

initialDelaySeconds: 5

|

||||

timeoutSeconds: 5

|

||||

readinessProbe:

|

||||

exec:

|

||||

command:

|

||||

- podcli

|

||||

- check

|

||||

- http

|

||||

- localhost:9898/readyz

|

||||

initialDelaySeconds: 5

|

||||

timeoutSeconds: 5

|

||||

resources:

|

||||

limits:

|

||||

cpu: 2000m

|

||||

memory: 512Mi

|

||||

requests:

|

||||

cpu: 100m

|

||||

memory: 64Mi

|

||||

volumeMounts:

|

||||

- name: configs

|

||||

mountPath: /etc/podinfo/configs

|

||||

readOnly: true

|

||||

- name: secrets

|

||||

mountPath: /etc/podinfo/secrets

|

||||

readOnly: true

|

||||

volumes:

|

||||

- name: configs

|

||||

configMap:

|

||||

name: podinfo-config-vol

|

||||

- name: secrets

|

||||

secret:

|

||||

secretName: podinfo-secret-vol

|

||||

19

artifacts/configs/hpa.yaml

Normal file

@@ -0,0 +1,19 @@

|

||||

apiVersion: autoscaling/v2beta1

|

||||

kind: HorizontalPodAutoscaler

|

||||

metadata:

|

||||

name: podinfo

|

||||

namespace: test

|

||||

spec:

|

||||

scaleTargetRef:

|

||||

apiVersion: apps/v1

|

||||

kind: Deployment

|

||||

name: podinfo

|

||||

minReplicas: 1

|

||||

maxReplicas: 4

|

||||

metrics:

|

||||

- type: Resource

|

||||

resource:

|

||||

name: cpu

|

||||

# scale up if usage is above

|

||||

# 99% of the requested CPU (100m)

|

||||

targetAverageUtilization: 99

|

||||
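The HPA above scales between 1 and 4 replicas with a CPU utilization target of 99% of the requested CPU. The Go sketch below applies the scaling rule from the Kubernetes HPA documentation, desired = ceil(current × currentUtilization / targetUtilization), clamped to the min and max replica counts. `desiredReplicas` is a hypothetical helper, and the sketch ignores the autoscaler's tolerance band and stabilization behavior.

```go
package main

import "fmt"

// desiredReplicas is a hypothetical helper that applies the documented HPA
// rule ceil(current * currentUtil / targetUtil) using integer arithmetic,
// then clamps the result to [minReplicas, maxReplicas].
// Utilization values are percentages of the requested CPU.
func desiredReplicas(current, currentUtil, targetUtil, minReplicas, maxReplicas int) int {
	if targetUtil <= 0 {
		return current // no valid target, leave the replica count unchanged
	}
	// Integer ceiling division avoids floating point rounding surprises.
	desired := (current*currentUtil + targetUtil - 1) / targetUtil
	if desired < minReplicas {
		desired = minReplicas
	}
	if desired > maxReplicas {
		desired = maxReplicas
	}
	return desired
}

func main() {
	// One pod using 198% of its 100m CPU request, target 99%, limits 1..4.
	fmt.Println(desiredReplicas(1, 198, 99, 1, 4))
	// Two pods at 400% would ask for nine replicas, but the max is four.
	fmt.Println(desiredReplicas(2, 400, 99, 1, 4))
}
```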

16

artifacts/configs/secrets.yaml

Normal file

@@ -0,0 +1,16 @@

|

||||

apiVersion: v1

|

||||

kind: Secret

|

||||

metadata:

|

||||

name: podinfo-secret-env

|

||||

namespace: test

|

||||

data:

|

||||

password: cGFzc3dvcmQ=

|

||||

user: YWRtaW4=

|

||||

---

|

||||

apiVersion: v1

|

||||

kind: Secret

|

||||

metadata:

|

||||

name: podinfo-secret-vol

|

||||

namespace: test

|

||||

data:

|

||||

key: cGFzc3dvcmQ=

|

||||
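The values under `data` in the Secret manifests above are base64-encoded, as Kubernetes requires for that field. A short Go sketch of decoding them follows; `decodeSecretData` is a hypothetical helper written for this illustration and uses only the standard library.

```go
package main

import (
	"encoding/base64"
	"fmt"
)

// decodeSecretData is a hypothetical helper that decodes the base64 values
// of a Secret's data field into plain strings.
func decodeSecretData(data map[string]string) (map[string]string, error) {
	out := make(map[string]string, len(data))
	for key, value := range data {
		raw, err := base64.StdEncoding.DecodeString(value)
		if err != nil {
			return nil, fmt.Errorf("decoding key %q: %w", key, err)
		}
		out[key] = string(raw)
	}
	return out, nil
}

func main() {
	// Values copied from the podinfo-secret-env manifest.
	decoded, err := decodeSecretData(map[string]string{
		"password": "cGFzc3dvcmQ=",
		"user":     "YWRtaW4=",
	})
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Println("user:", decoded["user"])
	fmt.Println("password:", decoded["password"])
}
```

Base64 is an encoding, not encryption, so these sample values are readable by anyone with access to the manifest.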

@@ -22,7 +22,7 @@ spec:
       serviceAccountName: flagger
       containers:
       - name: flagger
-        image: quay.io/stefanprodan/flagger:0.4.1
+        image: quay.io/stefanprodan/flagger:0.5.0
         imagePullPolicy: Always
         ports:
         - name: http

@@ -1,7 +1,7 @@
 apiVersion: v1
 name: flagger
-version: 0.4.1
-appVersion: 0.4.1
+version: 0.5.0
+appVersion: 0.5.0
 kubeVersion: ">=1.11.0-0"
 engine: gotpl
 description: Flagger is a Kubernetes operator that automates the promotion of canary deployments using Istio routing for traffic shifting and Prometheus metrics for canary analysis.

@@ -2,7 +2,7 @@

 image:
   repository: quay.io/stefanprodan/flagger
-  tag: 0.4.1
+  tag: 0.5.0
   pullPolicy: IfNotPresent

 metricsServer: "http://prometheus.istio-system.svc.cluster.local:9090"

@@ -97,38 +97,42 @@ the Istio Virtual Service. The container port from the target deployment should

|

||||

|

||||

|

||||

|

||||

A canary deployment is triggered by changes in any of the following objects:

|

||||

|

||||

* Deployment PodSpec (container image, command, ports, env, resources, etc.)

|

||||

* ConfigMaps mounted as volumes or mapped to environment variables

|

||||

* Secrets mounted as volumes or mapped to environment variables

|

||||
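One way to detect that a tracked ConfigMap or Secret has changed is to store a checksum of its data and compare it on the next scan. The Go sketch below illustrates that idea only. `configChecksum` is a hypothetical function, and the choice of SHA-256 over sorted keys is an assumption, not a description of how Flagger computes its tracked-config values.

```go
package main

import (
	"crypto/sha256"
	"encoding/hex"
	"fmt"
	"sort"
)

// configChecksum is a hypothetical helper that returns a stable checksum of
// a ConfigMap-like data map. Keys are sorted first because Go map iteration
// order is random, and lengths are written to avoid ambiguous concatenation.
func configChecksum(data map[string]string) string {
	keys := make([]string, 0, len(data))
	for k := range data {
		keys = append(keys, k)
	}
	sort.Strings(keys)

	h := sha256.New()
	for _, k := range keys {
		fmt.Fprintf(h, "%d:%s=%d:%s;", len(k), k, len(data[k]), data[k])
	}
	return hex.EncodeToString(h.Sum(nil))
}

func main() {
	tracked := configChecksum(map[string]string{"color": "blue"})
	current := configChecksum(map[string]string{"color": "green"})
	// A differing checksum would be the signal to start a new analysis.
	fmt.Println("config changed:", tracked != current)
}
```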

|

||||

Gated canary promotion stages:

|

||||

|

||||

* scan for canary deployments

|

||||

* create the primary deployment if needed

|

||||

* check Istio virtual service routes are mapped to primary and canary ClusterIP services

|

||||

* check primary and canary deployments status

|

||||

* halt advancement if a rolling update is underway

|

||||

* halt advancement if pods are unhealthy

|

||||

* increase canary traffic weight percentage from 0% to 5% \(step weight\)

|

||||

* halt advancement if a rolling update is underway

|

||||

* halt advancement if pods are unhealthy

|

||||

* increase canary traffic weight percentage from 0% to 5% (step weight)

|

||||

* call webhooks and check results

|

||||

* check canary HTTP request success rate and latency

|

||||

* halt advancement if any metric is under the specified threshold

|

||||

* increment the failed checks counter

|

||||

* halt advancement if any metric is under the specified threshold

|

||||

* increment the failed checks counter

|

||||

* check if the number of failed checks reached the threshold

|

||||

* route all traffic to primary

|

||||

* scale to zero the canary deployment and mark it as failed

|

||||

* wait for the canary deployment to be updated \(revision bump\) and start over

|

||||

* increase canary traffic weight by 5% \(step weight\) till it reaches 50% \(max weight\)

|

||||

* halt advancement if the primary or canary deployment becomes unhealthy

|

||||

* halt advancement while canary deployment is being scaled up/down by HPA

|

||||

* halt advancement if any of the webhook calls are failing

|

||||

* halt advancement while canary request success rate is under the threshold

|

||||

* halt advancement while canary request duration P99 is over the threshold

|

||||

* route all traffic to primary

|

||||

* scale to zero the canary deployment and mark it as failed

|

||||

* wait for the canary deployment to be updated and start over

|

||||

* increase canary traffic weight by 5% (step weight) till it reaches 50% (max weight)

|

||||

* halt advancement while canary request success rate is under the threshold

|

||||

* halt advancement while canary request duration P99 is over the threshold

|

||||

* halt advancement if the primary or canary deployment becomes unhealthy

|

||||

* halt advancement while canary deployment is being scaled up/down by HPA

|

||||

* promote canary to primary

|

||||

* copy canary deployment spec template over primary

|

||||

* copy ConfigMaps and Secrets from canary to primary

|

||||

* copy canary deployment spec template over primary

|

||||

* wait for primary rolling update to finish

|

||||

* halt advancement if pods are unhealthy

|

||||

* halt advancement if pods are unhealthy

|

||||

* route all traffic to primary

|

||||

* scale to zero the canary deployment

|

||||

* mark the canary deployment as finished

|

||||

* wait for the canary deployment to be updated \(revision bump\) and start over

|

||||

|

||||

You can change the canary analysis _max weight_ and the _step weight_ percentage in Flagger's custom resource.

|

||||

* mark rollout as finished

|

||||

* wait for the canary deployment to be updated and start over

|

||||

|

||||

### Canary Analysis

|

||||

|

||||

|

||||

@@ -24,7 +24,7 @@ kubectl -n test apply -f ${REPO}/artifacts/loadtester/deployment.yaml

|

||||

kubectl -n test apply -f ${REPO}/artifacts/loadtester/service.yaml

|

||||

```

|

||||

|

||||

Create a canary custom resource \(replace example.com with your own domain\):

|

||||

Create a canary custom resource (replace example.com with your own domain):

|

||||

|

||||

```yaml

|

||||

apiVersion: flagger.app/v1alpha3

|

||||

@@ -146,6 +146,8 @@ Events:

|

||||

Normal Synced 5s flagger Promotion completed! Scaling down podinfo.test

|

||||

```

|

||||

|

||||

**Note** that if you apply new changes to the deployment during the canary analysis, Flagger will restart the analysis.

|

||||

|

||||

You can monitor all canaries with:

|

||||

|

||||

```bash

|

||||

@@ -181,7 +183,8 @@ Generate latency:

|

||||

watch curl http://podinfo-canary:9898/delay/1

|

||||

```

|

||||

|

||||

When the number of failed checks reaches the canary analysis threshold, the traffic is routed back to the primary, the canary is scaled to zero and the rollout is marked as failed.

|

||||

When the number of failed checks reaches the canary analysis threshold, the traffic is routed back to the primary,

|

||||

the canary is scaled to zero and the rollout is marked as failed.

|

||||
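The rollback rule described above can be sketched as a small state update in Go. The `rollout` type and `recordFailedCheck` method are hypothetical and only model the three effects named in the text: traffic goes back to the primary, the canary is scaled to zero, and the rollout is marked as failed.

```go
package main

import "fmt"

// rollout is a hypothetical, simplified view of a canary's progress.
type rollout struct {
	failedChecks   int
	canaryWeight   int // percentage of traffic routed to the canary
	canaryReplicas int
	failed         bool
}

// recordFailedCheck increments the failed checks counter. Once the counter
// reaches the threshold, all traffic returns to the primary (canary weight
// 0), the canary is scaled to zero, and the rollout is marked as failed.
func (r *rollout) recordFailedCheck(threshold int) bool {
	r.failedChecks++
	if r.failedChecks >= threshold {
		r.canaryWeight = 0
		r.canaryReplicas = 0
		r.failed = true
	}
	return r.failed
}

func main() {
	r := &rollout{canaryWeight: 15, canaryReplicas: 2}
	const threshold = 10 // matches `threshold: 10` in the example canary
	for i := 0; i < threshold; i++ {
		r.recordFailedCheck(threshold)
	}
	fmt.Printf("failed=%v weight=%d replicas=%d\n", r.failed, r.canaryWeight, r.canaryReplicas)
}
```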

|

||||

```text

|

||||

kubectl -n test describe canary/podinfo

|

||||

|

||||

@@ -93,6 +93,8 @@ type CanaryStatus struct {

|

||||

FailedChecks int `json:"failedChecks"`

|

||||

CanaryWeight int `json:"canaryWeight"`

|

||||

// +optional

|

||||

TrackedConfigs *map[string]string `json:"trackedConfigs,omitempty"`

|

||||

// +optional

|

||||

LastAppliedSpec string `json:"lastAppliedSpec,omitempty"`

|

||||

// +optional

|

||||

LastTransitionTime metav1.Time `json:"lastTransitionTime,omitempty"`

|

||||

|

||||

@@ -188,6 +188,17 @@ func (in *CanarySpec) DeepCopy() *CanarySpec {

|

||||

// DeepCopyInto is an autogenerated deepcopy function, copying the receiver, writing into out. in must be non-nil.

|

||||

func (in *CanaryStatus) DeepCopyInto(out *CanaryStatus) {

|

||||

*out = *in

|

||||

if in.TrackedConfigs != nil {

|

||||

in, out := &in.TrackedConfigs, &out.TrackedConfigs

|

||||

*out = new(map[string]string)

|

||||

if **in != nil {

|

||||

in, out := *in, *out

|

||||

*out = make(map[string]string, len(*in))

|

||||

for key, val := range *in {

|

||||

(*out)[key] = val

|

||||

}

|

||||

}

|

||||

}

|

||||

in.LastTransitionTime.DeepCopyInto(&out.LastTransitionTime)

|

||||

return

|

||||

}

|

||||
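The generated `DeepCopyInto` above has to copy the `TrackedConfigs` map entry by entry because `*out = *in` copies only the pointer, leaving both structs sharing one map. The Go sketch below shows the difference on a reduced stand-in type. `status` and `deepCopy` are hypothetical names for this illustration, not the generated code.

```go
package main

import "fmt"

// status is a reduced, hypothetical stand-in for CanaryStatus.
type status struct {
	FailedChecks   int
	TrackedConfigs *map[string]string
}

// deepCopy returns a copy whose TrackedConfigs map is independent of the
// original. A nil pointer and a nil map are both preserved as they are.
func (in *status) deepCopy() *status {
	out := *in // shallow copy: the map pointer is still shared here
	if in.TrackedConfigs != nil {
		var copied map[string]string
		if *in.TrackedConfigs != nil {
			copied = make(map[string]string, len(*in.TrackedConfigs))
			for key, val := range *in.TrackedConfigs {
				copied[key] = val
			}
		}
		out.TrackedConfigs = &copied
	}
	return &out
}

func main() {
	configs := map[string]string{"configmap/podinfo-config-env": "v1"}
	original := &status{TrackedConfigs: &configs}

	shallow := *original
	deep := original.deepCopy()

	// Mutating the original map is visible through the shallow copy only.
	(*original.TrackedConfigs)["configmap/podinfo-config-env"] = "v2"
	fmt.Println("shallow:", (*shallow.TrackedConfigs)["configmap/podinfo-config-env"])
	fmt.Println("deep:", (*deep.TrackedConfigs)["configmap/podinfo-config-env"])
}
```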

|

||||

@@ -74,6 +74,11 @@ func NewController(

|

||||

kubeClient: kubeClient,

|

||||

istioClient: istioClient,

|

||||

flaggerClient: flaggerClient,

|

||||

configTracker: ConfigTracker{

|

||||

logger: logger,

|

||||

kubeClient: kubeClient,

|

||||

flaggerClient: flaggerClient,

|

||||

},

|

||||

}

|

||||

|

||||

router := CanaryRouter{

|

||||

|

||||

523

pkg/controller/controller_test.go

Normal file

@@ -0,0 +1,523 @@

|

||||

package controller

|

||||

|

||||

import (

|

||||

istioclientset "github.com/knative/pkg/client/clientset/versioned"

|

||||

fakeIstio "github.com/knative/pkg/client/clientset/versioned/fake"

|

||||

"github.com/stefanprodan/flagger/pkg/apis/flagger/v1alpha3"

|

||||

clientset "github.com/stefanprodan/flagger/pkg/client/clientset/versioned"

|

||||

fakeFlagger "github.com/stefanprodan/flagger/pkg/client/clientset/versioned/fake"

|

||||

informers "github.com/stefanprodan/flagger/pkg/client/informers/externalversions"

|

||||

"github.com/stefanprodan/flagger/pkg/logging"

|

||||

"go.uber.org/zap"

|

||||

appsv1 "k8s.io/api/apps/v1"

|

||||

hpav1 "k8s.io/api/autoscaling/v1"

|

||||

hpav2 "k8s.io/api/autoscaling/v2beta1"

|

||||

corev1 "k8s.io/api/core/v1"

|

||||

metav1 "k8s.io/apimachinery/pkg/apis/meta/v1"

|

||||

"k8s.io/client-go/kubernetes"

|

||||

"k8s.io/client-go/kubernetes/fake"

|

||||

"k8s.io/client-go/tools/record"

|

||||

"k8s.io/client-go/util/workqueue"

|

||||

"sync"

|

||||

"time"

|

||||

)

|

||||

|

||||

var (

|

||||

alwaysReady = func() bool { return true }

|

||||

noResyncPeriodFunc = func() time.Duration { return 0 }

|

||||

)

|

||||

|

||||

type Mocks struct {

|

||||

canary *v1alpha3.Canary

|

||||

kubeClient kubernetes.Interface

|

||||

istioClient istioclientset.Interface

|

||||

flaggerClient clientset.Interface

|

||||

deployer CanaryDeployer

|

||||

router CanaryRouter

|

||||

observer CanaryObserver

|

||||

ctrl *Controller

|

||||

logger *zap.SugaredLogger

|

||||

}

|

||||

|

||||

func SetupMocks() Mocks {

|

||||

// init canary

|

||||

canary := newTestCanary()

|

||||

flaggerClient := fakeFlagger.NewSimpleClientset(canary)

|

||||

|

||||

// init kube clientset and register mock objects

|

||||

kubeClient := fake.NewSimpleClientset(

|

||||

newTestDeployment(),

|

||||

newTestHPA(),

|

||||

NewTestConfigMap(),

|

||||

NewTestConfigMapEnv(),

|

||||

NewTestConfigMapVol(),

|

||||

NewTestSecret(),

|

||||

NewTestSecretEnv(),

|

||||

NewTestSecretVol(),

|

||||

)

|

||||

|

||||

istioClient := fakeIstio.NewSimpleClientset()

|

||||

logger, _ := logging.NewLogger("debug")

|

||||

|

||||

// init controller helpers

|

||||

deployer := CanaryDeployer{

|

||||

flaggerClient: flaggerClient,

|

||||

kubeClient: kubeClient,

|

||||

logger: logger,

|

||||

configTracker: ConfigTracker{

|

||||

logger: logger,

|

||||

kubeClient: kubeClient,

|

||||

flaggerClient: flaggerClient,

|

||||

},

|

||||

}

|

||||

router := CanaryRouter{

|

||||

flaggerClient: flaggerClient,

|

||||

kubeClient: kubeClient,

|

||||

istioClient: istioClient,

|

||||

logger: logger,

|

||||

}

|

||||

observer := CanaryObserver{

|

||||

metricsServer: "fake",

|

||||

}

|

||||

|

||||

// init controller

|

||||

flaggerInformerFactory := informers.NewSharedInformerFactory(flaggerClient, noResyncPeriodFunc())

|

||||

flaggerInformer := flaggerInformerFactory.Flagger().V1alpha3().Canaries()

|

||||

|

||||

ctrl := &Controller{

|

||||

kubeClient: kubeClient,

|

||||

istioClient: istioClient,

|

||||

flaggerClient: flaggerClient,

|

||||

flaggerLister: flaggerInformer.Lister(),

|

||||

flaggerSynced: flaggerInformer.Informer().HasSynced,

|

||||

workqueue: workqueue.NewNamedRateLimitingQueue(workqueue.DefaultControllerRateLimiter(), controllerAgentName),

|

||||

eventRecorder: &record.FakeRecorder{},

|

||||

logger: logger,

|

||||

canaries: new(sync.Map),

|

||||

flaggerWindow: time.Second,

|

||||

deployer: deployer,

|

||||

router: router,

|

||||

observer: observer,

|

||||

recorder: NewCanaryRecorder(false),

|

||||

}

|

||||

ctrl.flaggerSynced = alwaysReady

|

||||

|

||||

return Mocks{

|

||||

canary: canary,

|

||||

observer: observer,

|

||||

router: router,

|

||||

deployer: deployer,

|

||||

logger: logger,

|

||||

flaggerClient: flaggerClient,

|

||||

istioClient: istioClient,

|

||||

kubeClient: kubeClient,

|

||||

ctrl: ctrl,

|

||||

}

|

||||

}

|

||||

|

||||

func NewTestConfigMap() *corev1.ConfigMap {

|

||||

return &corev1.ConfigMap{

|

||||

TypeMeta: metav1.TypeMeta{APIVersion: corev1.SchemeGroupVersion.String()},

|

||||

ObjectMeta: metav1.ObjectMeta{

|

||||

Namespace: "default",

|

||||

Name: "podinfo-config-env",

|

||||

},

|

||||

Data: map[string]string{

|

||||

"color": "red",

|

||||

},

|

||||

}

|

||||

}

|

||||

|

||||

func NewTestConfigMapV2() *corev1.ConfigMap {

|

||||

return &corev1.ConfigMap{

|

||||

TypeMeta: metav1.TypeMeta{APIVersion: corev1.SchemeGroupVersion.String()},

|

||||

ObjectMeta: metav1.ObjectMeta{

|

||||

Namespace: "default",

|

||||

Name: "podinfo-config-env",

|

||||

},

|

||||

Data: map[string]string{

|

||||

"color": "blue",

|

||||

"output": "console",

|

||||

},

|

||||

}

|

||||

}

|

||||

|

||||

func NewTestConfigMapEnv() *corev1.ConfigMap {

|

||||

return &corev1.ConfigMap{

|

||||

TypeMeta: metav1.TypeMeta{APIVersion: corev1.SchemeGroupVersion.String()},

|

||||

ObjectMeta: metav1.ObjectMeta{

|

||||

Namespace: "default",

|

||||

Name: "podinfo-config-all-env",

|

||||

},

|

||||

Data: map[string]string{

|

||||

"color": "red",

|

||||

},

|

||||

}

|

||||

}

|

||||

|

||||

func NewTestConfigMapVol() *corev1.ConfigMap {

|

||||

return &corev1.ConfigMap{

|

||||

TypeMeta: metav1.TypeMeta{APIVersion: corev1.SchemeGroupVersion.String()},

|

||||

ObjectMeta: metav1.ObjectMeta{

|

||||

Namespace: "default",

|

||||

Name: "podinfo-config-vol",

|

||||

},

|

||||

Data: map[string]string{

|

||||

"color": "red",

|

||||

},

|

||||

}

|

||||

}

|

||||

|

||||

func NewTestSecret() *corev1.Secret {

|

||||

return &corev1.Secret{

|

||||

TypeMeta: metav1.TypeMeta{APIVersion: corev1.SchemeGroupVersion.String()},

|

||||

ObjectMeta: metav1.ObjectMeta{

|

||||

Namespace: "default",

|

||||

Name: "podinfo-secret-env",

|

||||

},

|

||||

Type: corev1.SecretTypeOpaque,

|

||||

Data: map[string][]byte{

|

||||

"apiKey": []byte("test"),

|

||||

},

|

||||

}

|

||||

}

|

||||

|

||||

func NewTestSecretV2() *corev1.Secret {

|

||||

return &corev1.Secret{

|

||||

TypeMeta: metav1.TypeMeta{APIVersion: corev1.SchemeGroupVersion.String()},

|

||||

ObjectMeta: metav1.ObjectMeta{

|

||||

Namespace: "default",

|

||||

Name: "podinfo-secret-env",

|

||||

},

|

||||

Type: corev1.SecretTypeOpaque,

|

||||

Data: map[string][]byte{

|

||||

"apiKey": []byte("test2"),

|

||||

},

|

||||

}

|

||||

}

|

||||

|

||||

func NewTestSecretEnv() *corev1.Secret {

|

||||

return &corev1.Secret{

|

||||

TypeMeta: metav1.TypeMeta{APIVersion: corev1.SchemeGroupVersion.String()},

|

||||

ObjectMeta: metav1.ObjectMeta{

|

||||

Namespace: "default",

|

||||

Name: "podinfo-secret-all-env",

|

||||

},

|

||||

Type: corev1.SecretTypeOpaque,

|

||||

Data: map[string][]byte{

|

||||

"apiKey": []byte("test"),

|

||||

},

|

||||

}

|

||||

}

|

||||

|

||||

func NewTestSecretVol() *corev1.Secret {

|

||||

return &corev1.Secret{

|

||||

TypeMeta: metav1.TypeMeta{APIVersion: corev1.SchemeGroupVersion.String()},

|

||||

ObjectMeta: metav1.ObjectMeta{

|

||||

Namespace: "default",

|

||||

Name: "podinfo-secret-vol",

|

||||

},

|

||||

Type: corev1.SecretTypeOpaque,

|

||||

Data: map[string][]byte{

|

||||

"apiKey": []byte("test"),

|

||||

},

|

||||

}

|

||||

}

|

||||

|

||||

func newTestCanary() *v1alpha3.Canary {

|

||||

cd := &v1alpha3.Canary{

|

||||

TypeMeta: metav1.TypeMeta{APIVersion: v1alpha3.SchemeGroupVersion.String()},

|

||||

ObjectMeta: metav1.ObjectMeta{

|

||||

Namespace: "default",

|

||||

Name: "podinfo",

|

||||

},

|

||||

Spec: v1alpha3.CanarySpec{

|

||||

TargetRef: hpav1.CrossVersionObjectReference{

|

||||

Name: "podinfo",

|

||||

APIVersion: "apps/v1",

|

||||

Kind: "Deployment",

|

||||

},

|

||||

AutoscalerRef: &hpav1.CrossVersionObjectReference{

|

||||

Name: "podinfo",

|

||||

APIVersion: "autoscaling/v2beta1",

|

||||

Kind: "HorizontalPodAutoscaler",

|

||||

}, Service: v1alpha3.CanaryService{

|

||||

Port: 9898,

|

||||

}, CanaryAnalysis: v1alpha3.CanaryAnalysis{

|

||||

Threshold: 10,

|

||||

StepWeight: 10,

|

||||

MaxWeight: 50,

|

||||

Metrics: []v1alpha3.CanaryMetric{

|

||||

{

|

||||

Name: "istio_requests_total",

|

||||

Threshold: 99,

|

||||

Interval: "1m",

|

||||

},

|

||||

{

|

||||

Name: "istio_request_duration_seconds_bucket",

|

||||

Threshold: 500,

|

||||

Interval: "1m",

|

||||

},

|

||||

},

|

||||

},

|

||||

},

|

||||

}

|

||||

return cd

|

||||

}

|

||||

|

||||

func newTestDeployment() *appsv1.Deployment {

|

||||

d := &appsv1.Deployment{

|

||||

TypeMeta: metav1.TypeMeta{APIVersion: appsv1.SchemeGroupVersion.String()},

|

||||

ObjectMeta: metav1.ObjectMeta{

|

||||

Namespace: "default",

|

||||

Name: "podinfo",

|

||||

},

|

||||

Spec: appsv1.DeploymentSpec{

|

||||

Selector: &metav1.LabelSelector{

|

||||

MatchLabels: map[string]string{

|

||||

"app": "podinfo",

|

||||

},

|

||||

},

|

||||

Template: corev1.PodTemplateSpec{

|

||||

ObjectMeta: metav1.ObjectMeta{

|

||||

Labels: map[string]string{

|

||||

"app": "podinfo",

|

||||

},

|

||||

},

|

||||

Spec: corev1.PodSpec{

|

||||

Containers: []corev1.Container{

|

||||

{

|

||||

Name: "podinfo",

|

||||

Image: "quay.io/stefanprodan/podinfo:1.2.0",

|

||||

Command: []string{

|

||||

"./podinfo",

|

||||

"--port=9898",

|

||||

},

|

||||

Args: nil,

|

||||

WorkingDir: "",

|

||||

Ports: []corev1.ContainerPort{

|

||||

{

|

||||

Name: "http",

|

||||

ContainerPort: 9898,

|

||||

Protocol: corev1.ProtocolTCP,

|

||||

},

|

||||

},

|

||||

Env: []corev1.EnvVar{

|

||||

{

|

||||

Name: "PODINFO_UI_COLOR",

|

||||

ValueFrom: &corev1.EnvVarSource{

|

||||

ConfigMapKeyRef: &corev1.ConfigMapKeySelector{

|

||||

LocalObjectReference: corev1.LocalObjectReference{

|

||||

Name: "podinfo-config-env",

|

||||

},

|

||||

Key: "color",

|

||||

},

|

||||

},

|

||||

},

|

||||

{

|

||||

Name: "API_KEY",

|

||||

ValueFrom: &corev1.EnvVarSource{

|

||||

SecretKeyRef: &corev1.SecretKeySelector{

|

||||

LocalObjectReference: corev1.LocalObjectReference{

|

||||

Name: "podinfo-secret-env",

|

||||

},

|

||||

Key: "apiKey",

|

||||

},

|

||||

},

|

||||

},

|

||||

},

|

||||

EnvFrom: []corev1.EnvFromSource{

|

||||

{

|

||||

ConfigMapRef: &corev1.ConfigMapEnvSource{

|

||||

LocalObjectReference: corev1.LocalObjectReference{

|

||||

Name: "podinfo-config-all-env",

|

||||

},

|

||||

},

|

||||

},

|

||||

{

|

||||

SecretRef: &corev1.SecretEnvSource{

|

||||

LocalObjectReference: corev1.LocalObjectReference{

|

||||

Name: "podinfo-secret-all-env",

|

||||

},

|

||||

},

|

||||

},

|

||||

},

|

||||

VolumeMounts: []corev1.VolumeMount{

|

||||

{

|

||||

Name: "config",

|

||||

MountPath: "/etc/podinfo/config",

|

||||

ReadOnly: true,

|

||||

},

|

||||

{

|

||||

Name: "secret",

|

||||

MountPath: "/etc/podinfo/secret",

|

||||

ReadOnly: true,

|

||||

},

|

||||

},

|

||||

},

|

||||

},

|

||||

Volumes: []corev1.Volume{

|

||||

{

|

||||

Name: "config",

|

||||

VolumeSource: corev1.VolumeSource{

|

||||

ConfigMap: &corev1.ConfigMapVolumeSource{

|

||||

LocalObjectReference: corev1.LocalObjectReference{

|

||||

Name: "podinfo-config-vol",

|

||||

},

|

||||

},

|

||||

},

|

||||

},

|

||||

{

|

||||

Name: "secret",

|

||||

VolumeSource: corev1.VolumeSource{

|

||||

Secret: &corev1.SecretVolumeSource{

|

||||

SecretName: "podinfo-secret-vol",

|

||||

},

|

||||

},

|

||||

},

|

||||

},

|

||||

},

|

||||

},

|

||||

},

|

||||

}

|

||||

|

||||

return d

|

||||

}

|

||||

|

||||

func newTestDeploymentV2() *appsv1.Deployment {

|

||||

d := &appsv1.Deployment{

|

||||

TypeMeta: metav1.TypeMeta{APIVersion: appsv1.SchemeGroupVersion.String()},

|

||||

ObjectMeta: metav1.ObjectMeta{

|

||||

Namespace: "default",

|

||||

Name: "podinfo",

|

||||

},

|

||||

Spec: appsv1.DeploymentSpec{

|

||||

Selector: &metav1.LabelSelector{

|

||||

MatchLabels: map[string]string{

|

||||

"app": "podinfo",

|

||||

},

|

||||

},

|

||||

Template: corev1.PodTemplateSpec{

|

||||

ObjectMeta: metav1.ObjectMeta{

|

||||

Labels: map[string]string{

|

||||

"app": "podinfo",

|

||||

},

|

||||

},

|

||||

Spec: corev1.PodSpec{

|

||||

Containers: []corev1.Container{

|

||||

{

|

||||

Name: "podinfo",

|

||||

Image: "quay.io/stefanprodan/podinfo:1.2.1",

|

||||

Ports: []corev1.ContainerPort{

|

||||

{

|

||||

Name: "http",

|

||||

ContainerPort: 9898,

|

||||

Protocol: corev1.ProtocolTCP,

|

||||

},

|

||||

},

|

||||

Command: []string{

|

||||

"./podinfo",

|

||||

"--port=9898",

|

||||

},

|

||||

Env: []corev1.EnvVar{

|

||||

{

|

||||

Name: "PODINFO_UI_COLOR",

|

||||

ValueFrom: &corev1.EnvVarSource{

|

||||

ConfigMapKeyRef: &corev1.ConfigMapKeySelector{

|

||||

LocalObjectReference: corev1.LocalObjectReference{

|

||||

Name: "podinfo-config-env",

|

||||

},

|

||||

Key: "color",

|

||||

},

|

||||

},

|

||||

},

|

||||

{

|

||||

Name: "API_KEY",

|

||||

ValueFrom: &corev1.EnvVarSource{

|

||||

SecretKeyRef: &corev1.SecretKeySelector{

|

||||

LocalObjectReference: corev1.LocalObjectReference{

|

||||

Name: "podinfo-secret-env",

|

||||

},

|

||||

Key: "apiKey",

|

||||

},

|

||||

},

|

||||

},

|

||||

},

|

||||

EnvFrom: []corev1.EnvFromSource{

|

||||

{

|

||||

ConfigMapRef: &corev1.ConfigMapEnvSource{

|

||||

LocalObjectReference: corev1.LocalObjectReference{

|

||||

Name: "podinfo-config-all-env",

|

||||

},

|

||||

},

|

||||

},

|

||||

},

|

||||

VolumeMounts: []corev1.VolumeMount{

|

||||

{

|

||||

Name: "config",

|

||||

MountPath: "/etc/podinfo/config",

|

||||

ReadOnly: true,

|

||||

},

|

||||

{

|

||||

Name: "secret",

|

||||

MountPath: "/etc/podinfo/secret",

|

||||

ReadOnly: true,

|

||||

},

|

||||

},

|

||||

},

|

||||

},

|

||||

Volumes: []corev1.Volume{

|

||||

{

|

||||

Name: "config",

|

||||

VolumeSource: corev1.VolumeSource{

|

||||

ConfigMap: &corev1.ConfigMapVolumeSource{

|

||||

LocalObjectReference: corev1.LocalObjectReference{

|

||||

Name: "podinfo-config-vol",

|

||||

},

|

||||

},

|

||||

},

|

||||

},

|

||||

{

|

||||

Name: "secret",

|

||||

VolumeSource: corev1.VolumeSource{

|

||||

Secret: &corev1.SecretVolumeSource{

|

||||

SecretName: "podinfo-secret-vol",

|

||||

},

|

||||

},

|

||||

},

|

||||

},

|

||||

},

|

||||

},

|

||||

},

|

||||

}

|

||||

|

||||

return d

|

||||

}

|

||||

|

||||

func newTestHPA() *hpav2.HorizontalPodAutoscaler {

|

||||

h := &hpav2.HorizontalPodAutoscaler{

|

||||

TypeMeta: metav1.TypeMeta{APIVersion: hpav2.SchemeGroupVersion.String()},

|

||||

ObjectMeta: metav1.ObjectMeta{

|

||||

Namespace: "default",

|

||||

Name: "podinfo",

|

||||

},

|

||||

Spec: hpav2.HorizontalPodAutoscalerSpec{

|

||||

ScaleTargetRef: hpav2.CrossVersionObjectReference{

|

||||

Name: "podinfo",

|

||||

APIVersion: "apps/v1",

|

||||

Kind: "Deployment",

|

||||

},

|

||||

Metrics: []hpav2.MetricSpec{

|

||||

{

|

||||

Type: "Resource",

|

||||

Resource: &hpav2.ResourceMetricSource{

|

||||

Name: "cpu",

|

||||

TargetAverageUtilization: int32p(99),

|

||||

},

|

||||

},

|

||||

},

|

||||

},

|

||||

}

|

||||

|

||||

return h

|

||||

}

|

||||

@@ -1,9 +1,11 @@

|

||||

package controller

|

||||

|

||||

import (

|

||||

"crypto/rand"

|

||||

"encoding/base64"

|

||||

"encoding/json"

|

||||

"fmt"

|

||||

"io"

|

||||

"time"

|

||||

|

||||

"github.com/google/go-cmp/cmp"

|

||||

@@ -28,9 +30,10 @@ type CanaryDeployer struct {

|

||||

istioClient istioclientset.Interface

|

||||

flaggerClient clientset.Interface

|

||||

logger *zap.SugaredLogger

|

||||

configTracker ConfigTracker

|

||||

}

|

||||

|

||||

// Promote copies the pod spec from canary to primary

|

||||

// Promote copies the pod spec, secrets and config maps from canary to primary

|

||||

func (c *CanaryDeployer) Promote(cd *flaggerv1.Canary) error {

|

||||

targetName := cd.Spec.TargetRef.Name

|

||||

primaryName := fmt.Sprintf("%s-primary", targetName)

|

||||

@@ -51,12 +54,30 @@ func (c *CanaryDeployer) Promote(cd *flaggerv1.Canary) error {

|

||||

return fmt.Errorf("deployment %s.%s query error %v", primaryName, cd.Namespace, err)

|

||||

}

|

||||

|

||||

// promote secrets and config maps

|

||||

configRefs, err := c.configTracker.GetTargetConfigs(cd)

|

||||

if err != nil {

|

||||

return err

|

||||

}

|

||||

if err := c.configTracker.CreatePrimaryConfigs(cd, configRefs); err != nil {

|

||||

return err

|

||||

}

|

||||

|

||||

primaryCopy := primary.DeepCopy()

|

||||

primaryCopy.Spec.ProgressDeadlineSeconds = canary.Spec.ProgressDeadlineSeconds

|

||||

primaryCopy.Spec.MinReadySeconds = canary.Spec.MinReadySeconds

|

||||

primaryCopy.Spec.RevisionHistoryLimit = canary.Spec.RevisionHistoryLimit

|

||||

primaryCopy.Spec.Strategy = canary.Spec.Strategy

|

||||

primaryCopy.Spec.Template.Spec = canary.Spec.Template.Spec

|

||||

|

||||

// update spec with primary secrets and config maps

|

||||

primaryCopy.Spec.Template.Spec = c.configTracker.ApplyPrimaryConfigs(canary.Spec.Template.Spec, configRefs)

|

||||

|

||||

// update pod annotations to ensure a rolling update

|

||||

annotations, err := c.makeAnnotations(canary.Spec.Template.Annotations)

|

||||

if err != nil {

|

||||

return err

|

||||

}

|

||||

primaryCopy.Spec.Template.Annotations = annotations

|

||||

|

||||

_, err = c.kubeClient.AppsV1().Deployments(cd.Namespace).Update(primaryCopy)

|

||||

if err != nil {

|

||||

@@ -157,7 +178,22 @@ func (c *CanaryDeployer) ShouldAdvance(cd *flaggerv1.Canary) (bool, error) {

|

||||

if cd.Status.LastAppliedSpec == "" || cd.Status.Phase == flaggerv1.CanaryProgressing {

|

||||

return true, nil

|

||||

}

|

||||

return c.IsNewSpec(cd)

|

||||

|

||||

newDep, err := c.IsNewSpec(cd)

|

||||

if err != nil {

|

||||

return false, err

|

||||

}

|

||||

if newDep {

|

||||

return newDep, nil

|

||||

}

|

||||

|

||||

newCfg, err := c.configTracker.HasConfigChanged(cd)

|

||||

if err != nil {

|

||||

return false, err

|

||||

}

|

||||

|

||||

return newCfg, nil

|

||||

|

||||

}

|

||||

|

||||

// SetStatusFailedChecks updates the canary failed checks counter

|

||||

@@ -218,12 +254,18 @@ func (c *CanaryDeployer) SyncStatus(cd *flaggerv1.Canary, status flaggerv1.Canar

|

||||

return fmt.Errorf("deployment %s.%s marshal error %v", cd.Spec.TargetRef.Name, cd.Namespace, err)

|

||||

}

|

||||

|

||||

configs, err := c.configTracker.GetConfigRefs(cd)

|

||||

if err != nil {

|

||||

return fmt.Errorf("configs query error %v", err)

|

||||

}

|

||||

|

||||

cdCopy := cd.DeepCopy()

|

||||

cdCopy.Status.Phase = status.Phase

|

||||

cdCopy.Status.CanaryWeight = status.CanaryWeight

|

||||

cdCopy.Status.FailedChecks = status.FailedChecks

|

||||

cdCopy.Status.LastAppliedSpec = base64.StdEncoding.EncodeToString(specJson)

|

||||

cdCopy.Status.LastTransitionTime = metav1.Now()

|

||||

cdCopy.Status.TrackedConfigs = configs

|

||||

|

||||

cd, err = c.flaggerClient.FlaggerV1alpha3().Canaries(cd.Namespace).UpdateStatus(cdCopy)

|

||||

if err != nil {

|

||||

@@ -290,11 +332,25 @@ func (c *CanaryDeployer) createPrimaryDeployment(cd *flaggerv1.Canary) error {

|

||||

|

||||

primaryDep, err := c.kubeClient.AppsV1().Deployments(cd.Namespace).Get(primaryName, metav1.GetOptions{})

|

||||

if errors.IsNotFound(err) {

|

||||

// create primary secrets and config maps

|

||||

configRefs, err := c.configTracker.GetTargetConfigs(cd)

|

||||

if err != nil {

|

||||

return err

|

||||

}

|

||||

if err := c.configTracker.CreatePrimaryConfigs(cd, configRefs); err != nil {

|

||||

return err

|

||||

}

|

||||

annotations, err := c.makeAnnotations(canaryDep.Spec.Template.Annotations)

|

||||

if err != nil {

|

||||

return err

|

||||

}

|

||||

|

||||

// create primary deployment

|

||||

primaryDep = &appsv1.Deployment{

|

||||

ObjectMeta: metav1.ObjectMeta{

|

||||

Name: primaryName,

|

||||

Annotations: canaryDep.Annotations,

|

||||

Namespace: cd.Namespace,

|

||||

Name: primaryName,

|

||||

Labels: canaryDep.Labels,

|

||||

Namespace: cd.Namespace,

|

||||

OwnerReferences: []metav1.OwnerReference{

|

||||

*metav1.NewControllerRef(cd, schema.GroupVersionKind{

|

||||

Group: flaggerv1.SchemeGroupVersion.Group,

|

||||

@@ -317,9 +373,10 @@ func (c *CanaryDeployer) createPrimaryDeployment(cd *flaggerv1.Canary) error {

|

||||

Template: corev1.PodTemplateSpec{

|

||||

ObjectMeta: metav1.ObjectMeta{

|

||||

Labels: map[string]string{"app": primaryName},

|

||||

Annotations: canaryDep.Spec.Template.Annotations,

|

||||

Annotations: annotations,

|

||||

},

|

||||

Spec: canaryDep.Spec.Template.Spec,

|

||||

// update spec with the primary secrets and config maps

|

||||

Spec: c.configTracker.ApplyPrimaryConfigs(canaryDep.Spec.Template.Spec, configRefs),

|

||||

},

|

||||

},

|

||||

}

|

||||

@@ -353,6 +410,7 @@ func (c *CanaryDeployer) createPrimaryHpa(cd *flaggerv1.Canary) error {

|

||||

ObjectMeta: metav1.ObjectMeta{

|

||||

Name: primaryHpaName,

|

||||

Namespace: cd.Namespace,

|

||||

Labels: hpa.Labels,

|

||||

OwnerReferences: []metav1.OwnerReference{

|

||||

*metav1.NewControllerRef(cd, schema.GroupVersionKind{

|

||||

Group: flaggerv1.SchemeGroupVersion.Group,

|

||||

@@ -432,3 +490,26 @@ func (c *CanaryDeployer) getDeploymentCondition(

|

||||

}

|

||||

return nil

|

||||

}

|

||||

|

||||

// makeAnnotations returns a copy of the annotations map with a new unique ID
// stored under the flagger-id key, so that the pod template always changes
func (c *CanaryDeployer) makeAnnotations(annotations map[string]string) (map[string]string, error) {
	idKey := "flagger-id"
	res := make(map[string]string)
	// read 16 random bytes and shape them into a version 4 UUID
	uuid := make([]byte, 16)
	n, err := io.ReadFull(rand.Reader, uuid)
	if n != len(uuid) || err != nil {
		return res, err
	}
	// set the RFC 4122 variant bits
	uuid[8] = uuid[8]&^0xc0 | 0x80
	// set the version to 4 (random)
	uuid[6] = uuid[6]&^0xf0 | 0x40
	id := fmt.Sprintf("%x-%x-%x-%x-%x", uuid[0:4], uuid[4:6], uuid[6:8], uuid[8:10], uuid[10:])

	// copy the existing annotations, dropping any previous ID
	for k, v := range annotations {
		if k != idKey {
			res[k] = v
		}
	}
	res[idKey] = id

	return res, nil
}

|

||||

|

||||

@@ -4,200 +4,24 @@ import (

|

||||

"testing"

|

||||

|

||||

"github.com/stefanprodan/flagger/pkg/apis/flagger/v1alpha3"

|

||||

fakeFlagger "github.com/stefanprodan/flagger/pkg/client/clientset/versioned/fake"

|

||||

"github.com/stefanprodan/flagger/pkg/logging"

|

||||

appsv1 "k8s.io/api/apps/v1"

|

||||

hpav1 "k8s.io/api/autoscaling/v1"

|

||||

hpav2 "k8s.io/api/autoscaling/v2beta1"

|

||||

corev1 "k8s.io/api/core/v1"

|

||||

metav1 "k8s.io/apimachinery/pkg/apis/meta/v1"

|

||||

"k8s.io/client-go/kubernetes/fake"

|

||||

)

|

||||

|

||||

func newTestCanary() *v1alpha3.Canary {

|

||||

cd := &v1alpha3.Canary{

|

||||

TypeMeta: metav1.TypeMeta{APIVersion: v1alpha3.SchemeGroupVersion.String()},

|

||||

ObjectMeta: metav1.ObjectMeta{

|

||||

Namespace: "default",

|

||||

Name: "podinfo",

|

||||

},

|

||||

Spec: v1alpha3.CanarySpec{

|

||||

TargetRef: hpav1.CrossVersionObjectReference{

|

||||

Name: "podinfo",

|

||||

APIVersion: "apps/v1",

|

||||

Kind: "Deployment",

|

||||

},

|

||||

AutoscalerRef: &hpav1.CrossVersionObjectReference{

|

||||

Name: "podinfo",

|

||||

APIVersion: "autoscaling/v2beta1",

|

||||

Kind: "HorizontalPodAutoscaler",

|

||||

}, Service: v1alpha3.CanaryService{

|

||||

Port: 9898,

|

||||

}, CanaryAnalysis: v1alpha3.CanaryAnalysis{

|

||||

Threshold: 10,

|

||||

StepWeight: 10,

|

||||

MaxWeight: 50,

|

||||

Metrics: []v1alpha3.CanaryMetric{

|

||||

{

|

||||

Name: "istio_requests_total",

|

||||

Threshold: 99,

|

||||

Interval: "1m",

|

||||

},

|

||||

{

|

||||

Name: "istio_request_duration_seconds_bucket",

|

||||

Threshold: 500,

|

||||

Interval: "1m",

|

||||

},

|

||||

},

|

||||

},

|

||||

},

|

||||

}

|

||||

return cd

|

||||

}

|

||||

|

||||

func newTestDeployment() *appsv1.Deployment {

|

||||

d := &appsv1.Deployment{

|

||||

TypeMeta: metav1.TypeMeta{APIVersion: appsv1.SchemeGroupVersion.String()},

|

||||

ObjectMeta: metav1.ObjectMeta{

|

||||

Namespace: "default",

|

||||

Name: "podinfo",

|

||||

},

|

||||

Spec: appsv1.DeploymentSpec{

|

||||

Selector: &metav1.LabelSelector{

|

||||

MatchLabels: map[string]string{

|

||||

"app": "podinfo",

|

||||

},

|

||||

},

|

||||

Template: corev1.PodTemplateSpec{

|

||||

ObjectMeta: metav1.ObjectMeta{

|

||||

Labels: map[string]string{

|

||||

"app": "podinfo",

|

||||

},

|

||||

},

|

||||

Spec: corev1.PodSpec{

|

||||

Containers: []corev1.Container{

|

||||

{

|

||||

Name: "podinfo",

|

||||

Image: "quay.io/stefanprodan/podinfo:1.2.0",

|

||||

Ports: []corev1.ContainerPort{

|

||||

{

|

||||

Name: "http",

|

||||

ContainerPort: 9898,

|

||||

Protocol: corev1.ProtocolTCP,

|

||||

},

|

||||

},

|

||||

Command: []string{

|

||||

"./podinfo",

|

||||

"--port=9898",

|

||||

},

|

||||

},

|

||||

},

|

||||

},

|

||||

},

|

||||

},

|

||||

}

|

||||

|

||||

return d

|

||||

}

|

||||

|

||||

func newTestDeploymentUpdated() *appsv1.Deployment {

|

||||

d := &appsv1.Deployment{

|

||||

TypeMeta: metav1.TypeMeta{APIVersion: appsv1.SchemeGroupVersion.String()},

|

||||

ObjectMeta: metav1.ObjectMeta{

|

||||

Namespace: "default",

|

||||

Name: "podinfo",

|

||||

},

|

||||

Spec: appsv1.DeploymentSpec{

|

||||

Selector: &metav1.LabelSelector{

|

||||

MatchLabels: map[string]string{

|

||||

"app": "podinfo",

|

||||

},

|

||||

},

|

||||

Template: corev1.PodTemplateSpec{

|

||||

ObjectMeta: metav1.ObjectMeta{

|

||||

Labels: map[string]string{

|

||||

"app": "podinfo",

|

||||

},

|

||||

},

|

||||

Spec: corev1.PodSpec{

|

||||

Containers: []corev1.Container{

|

||||

{

|

||||

Name: "podinfo",

|

||||

Image: "quay.io/stefanprodan/podinfo:1.2.1",

|

||||

Ports: []corev1.ContainerPort{

|

||||

{

|

||||

Name: "http",

|

||||

ContainerPort: 9898,

|

||||

Protocol: corev1.ProtocolTCP,

|

||||

},

|

||||

},

|

||||

Command: []string{

|

||||

"./podinfo",

|

||||

"--port=9898",

|

||||

},

|

||||

},

|

||||

},

|

||||

},

|

||||

},

|

||||

},

|

||||

}

|

||||

|

||||

return d

|

||||

}

|

||||

|

||||

func newTestHPA() *hpav2.HorizontalPodAutoscaler {

|

||||

h := &hpav2.HorizontalPodAutoscaler{

|

||||

TypeMeta: metav1.TypeMeta{APIVersion: hpav2.SchemeGroupVersion.String()},

|

||||

ObjectMeta: metav1.ObjectMeta{

|

||||

Namespace: "default",

|

||||

Name: "podinfo",

|

||||

},

|

||||

Spec: hpav2.HorizontalPodAutoscalerSpec{

|

||||

ScaleTargetRef: hpav2.CrossVersionObjectReference{

|

||||

Name: "podinfo",

|

||||

APIVersion: "apps/v1",

|

||||

Kind: "Deployment",

|

||||

},

|

||||

Metrics: []hpav2.MetricSpec{

|

||||

{

|

||||

Type: "Resource",

|

||||

Resource: &hpav2.ResourceMetricSource{

|

||||

Name: "cpu",

|

||||

TargetAverageUtilization: int32p(99),

|

||||

},

|

||||

},

|

||||

},

|

||||

},

|

||||

}

|

||||

|

||||

return h

|

||||

}

|

||||

|

||||

func TestCanaryDeployer_Sync(t *testing.T) {

|

||||

canary := newTestCanary()

|

||||

mocks := SetupMocks()

|

||||

err := mocks.deployer.Sync(mocks.canary)

|

||||

if err != nil {

|

||||

t.Fatal(err.Error())

|

||||

}

|

||||

|

||||

depPrimary, err := mocks.kubeClient.AppsV1().Deployments("default").Get("podinfo-primary", metav1.GetOptions{})

|

||||

if err != nil {

|

||||

t.Fatal(err.Error())

|

||||

}

|

||||

|

||||

dep := newTestDeployment()

|

||||

hpa := newTestHPA()

|

||||

|

||||

flaggerClient := fakeFlagger.NewSimpleClientset(canary)

|

||||

|

||||

kubeClient := fake.NewSimpleClientset(dep, hpa)

|

||||

|

||||

logger, _ := logging.NewLogger("debug")

|

||||

deployer := &CanaryDeployer{

|

||||

flaggerClient: flaggerClient,

|

||||

kubeClient: kubeClient,

|

||||

logger: logger,

|

||||

}

|

||||

|

||||

err := deployer.Sync(canary)

|

||||

if err != nil {

|

||||

t.Fatal(err.Error())

|

||||

}

|

||||

|

||||

depPrimary, err := kubeClient.AppsV1().Deployments("default").Get("podinfo-primary", metav1.GetOptions{})

|

||||

if err != nil {

|

||||

t.Fatal(err.Error())

|

||||

}

|

||||

configMap := NewTestConfigMap()

|

||||

secret := NewTestSecret()

|

||||

|

||||

primaryImage := depPrimary.Spec.Template.Spec.Containers[0].Image

|

||||

sourceImage := dep.Spec.Template.Spec.Containers[0].Image

|

||||

@@ -205,7 +29,7 @@ func TestCanaryDeployer_Sync(t *testing.T) {

|

||||

t.Errorf("Got image %s wanted %s", primaryImage, sourceImage)

|

||||

}

|

||||

|

||||

hpaPrimary, err := kubeClient.AutoscalingV2beta1().HorizontalPodAutoscalers("default").Get("podinfo-primary", metav1.GetOptions{})

|

||||

hpaPrimary, err := mocks.kubeClient.AutoscalingV2beta1().HorizontalPodAutoscalers("default").Get("podinfo-primary", metav1.GetOptions{})

|

||||

if err != nil {

|

||||

t.Fatal(err.Error())

|

||||

}

|

||||

@@ -213,37 +37,76 @@ func TestCanaryDeployer_Sync(t *testing.T) {

|

||||

if hpaPrimary.Spec.ScaleTargetRef.Name != depPrimary.Name {

|

||||

t.Errorf("Got HPA target %s wanted %s", hpaPrimary.Spec.ScaleTargetRef.Name, depPrimary.Name)

|

||||

}

|

||||

|

||||

configPrimary, err := mocks.kubeClient.CoreV1().ConfigMaps("default").Get("podinfo-config-env-primary", metav1.GetOptions{})

|

||||

if err != nil {

|

||||

t.Fatal(err.Error())

|

||||

}

|

||||

|

||||

if configPrimary.Data["color"] != configMap.Data["color"] {

|

||||

t.Errorf("Got ConfigMap color %s wanted %s", configPrimary.Data["color"], configMap.Data["color"])

|

||||

}

|

||||

|

||||

configPrimaryEnv, err := mocks.kubeClient.CoreV1().ConfigMaps("default").Get("podinfo-config-all-env-primary", metav1.GetOptions{})

|

||||

if err != nil {

|

||||

t.Fatal(err.Error())

|

||||

}

|

||||

|

||||

if configPrimaryEnv.Data["color"] != configMap.Data["color"] {

|

||||

t.Errorf("Got ConfigMap color %s wanted %s", configPrimaryEnv.Data["color"], configMap.Data["color"])

|

||||

}

|

||||

|

||||

configPrimaryVol, err := mocks.kubeClient.CoreV1().ConfigMaps("default").Get("podinfo-config-vol-primary", metav1.GetOptions{})

|

||||

if err != nil {

|

||||

t.Fatal(err.Error())

|

||||

}

|

||||

|

||||

if configPrimaryVol.Data["color"] != configMap.Data["color"] {

|

||||

t.Errorf("Got ConfigMap color %s wanted %s", configPrimaryVol.Data["color"], configMap.Data["color"])

|

||||

}

|

||||

|

||||

secretPrimary, err := mocks.kubeClient.CoreV1().Secrets("default").Get("podinfo-secret-env-primary", metav1.GetOptions{})

|

||||

if err != nil {

|

||||

t.Fatal(err.Error())

|

||||

}

|

||||

|

||||

if string(secretPrimary.Data["apiKey"]) != string(secret.Data["apiKey"]) {

|

||||

t.Errorf("Got primary secret %s wanted %s", secretPrimary.Data["apiKey"], secret.Data["apiKey"])

|

||||

}

|

||||

|

||||

secretPrimaryEnv, err := mocks.kubeClient.CoreV1().Secrets("default").Get("podinfo-secret-all-env-primary", metav1.GetOptions{})

|

||||

if err != nil {

|

||||

t.Fatal(err.Error())

|

||||

}

|

||||

|

||||

if string(secretPrimaryEnv.Data["apiKey"]) != string(secret.Data["apiKey"]) {

|

||||

t.Errorf("Got primary secret %s wanted %s", secretPrimaryEnv.Data["apiKey"], secret.Data["apiKey"])

|

||||

}

|

||||

|

||||

secretPrimaryVol, err := mocks.kubeClient.CoreV1().Secrets("default").Get("podinfo-secret-vol-primary", metav1.GetOptions{})

|

||||

if err != nil {

|

||||

t.Fatal(err.Error())

|

||||

}

|

||||

|

||||

if string(secretPrimaryVol.Data["apiKey"]) != string(secret.Data["apiKey"]) {

|

||||

t.Errorf("Got primary secret %s wanted %s", secretPrimaryVol.Data["apiKey"], secret.Data["apiKey"])

|

||||

}

|

||||

}

|

||||

|

||||

func TestCanaryDeployer_IsNewSpec(t *testing.T) {

|

||||

canary := newTestCanary()

|

||||

dep := newTestDeployment()

|

||||

dep2 := newTestDeploymentUpdated()

|

||||

|

||||

hpa := newTestHPA()

|

||||

|

||||

flaggerClient := fakeFlagger.NewSimpleClientset(canary)

|

||||

|

||||

kubeClient := fake.NewSimpleClientset(dep, hpa)

|

||||

|

||||

logger, _ := logging.NewLogger("debug")

|

||||

deployer := &CanaryDeployer{

|

||||

flaggerClient: flaggerClient,

|

||||

kubeClient: kubeClient,

|

||||

logger: logger,

|

||||

}

|

||||

|

||||

err := deployer.Sync(canary)

|

||||

mocks := SetupMocks()

|

||||

err := mocks.deployer.Sync(mocks.canary)

|

||||

if err != nil {

|

||||

t.Fatal(err.Error())

|

||||

}

|

||||

|

||||

_, err = kubeClient.AppsV1().Deployments("default").Update(dep2)

|

||||

dep2 := newTestDeploymentV2()

|

||||

_, err = mocks.kubeClient.AppsV1().Deployments("default").Update(dep2)

|

||||

if err != nil {

|

||||

t.Fatal(err.Error())

|

||||

}

|

||||

|

||||

isNew, err := deployer.IsNewSpec(canary)

|

||||

isNew, err := mocks.deployer.IsNewSpec(mocks.canary)

|

||||

if err != nil {

|

||||

t.Fatal(err.Error())

|

||||

}

|

||||

@@ -254,39 +117,30 @@ func TestCanaryDeployer_IsNewSpec(t *testing.T) {

|

||||

}

|

||||

|

||||

func TestCanaryDeployer_Promote(t *testing.T) {

|

||||

canary := newTestCanary()

|

||||

dep := newTestDeployment()

|

||||

dep2 := newTestDeploymentUpdated()

|

||||

|

||||

hpa := newTestHPA()

|

||||

|

||||

flaggerClient := fakeFlagger.NewSimpleClientset(canary)

|

||||

|

||||

kubeClient := fake.NewSimpleClientset(dep, hpa)

|

||||

|

||||

logger, _ := logging.NewLogger("debug")

|

||||

deployer := &CanaryDeployer{

|

||||

flaggerClient: flaggerClient,

|

||||

kubeClient: kubeClient,

|

||||

logger: logger,

|

||||

}

|

||||

|

||||

err := deployer.Sync(canary)

|

||||

mocks := SetupMocks()

|

||||

err := mocks.deployer.Sync(mocks.canary)

|

||||

if err != nil {

|

||||

t.Fatal(err.Error())

|

||||

}

|

||||

|

||||

_, err = kubeClient.AppsV1().Deployments("default").Update(dep2)

|

||||

dep2 := newTestDeploymentV2()

|

||||

_, err = mocks.kubeClient.AppsV1().Deployments("default").Update(dep2)

|

||||

if err != nil {

|

||||

t.Fatal(err.Error())

|

||||

}

|

||||

|

||||

err = deployer.Promote(canary)

|

||||

config2 := NewTestConfigMapV2()

|

||||

_, err = mocks.kubeClient.CoreV1().ConfigMaps("default").Update(config2)

|

||||

if err != nil {

|

||||