Mirror of https://github.com/fluxcd/flagger.git, synced 2026-02-15 18:40:12 +00:00

Compare commits

54 Commits

| Author | SHA1 | Date | |
|---|---|---|---|
| | 456d914c35 | | |
| | 737507b0fe | | |
| | 4bcf82d295 | | |
| | e9cd7afc8a | | |
| | 0830abd51d | | |
| | 5b296e01b3 | | |
| | 3fd039afd1 | | |
| | 5904348ba5 | | |
| | 1a98e93723 | | |
| | c9685fbd13 | | |
| | dc347e273d | | |
| | 8170916897 | | |
| | 71cd4e0cb7 | | |
| | 0109788ccc | | |
| | 1649dea468 | | |
| | b8a7ea8534 | | |
| | afe4d59d5a | | |
| | 0f2697df23 | | |
| | 05664fa648 | | |
| | 3b2564f34b | | |
| | dd0cf2d588 | | |
| | 7c66f23c6a | | |
| | a9f034de1a | | |
| | 6ad2dca57a | | |
| | e8353c110b | | |
| | dbf26ddf53 | | |
| | acc72d207f | | |
| | a784f83464 | | |
| | 07d8355363 | | |
| | f7a439274e | | |
| | bd6d446cb8 | | |
| | 385d0e0549 | | |
| | 02236374d8 | | |
| | c46fe55ad0 | | |
| | 36a54fbf2a | | |
| | 60f6b05397 | | |
| | 6d8a7343b7 | | |
| | aff8b117d4 | | |
| | 1b3c3b22b3 | | |
| | 1d31b5ed90 | | |
| | 1ef310f00d | | |
| | acdd2c46d5 | | |
| | 9872e6bc16 | | |
| | 10c2bdec86 | | |
| | 4bf3b70048 | | |
| | ada446bbaa | | |
| | c4981ef4db | | |
| | d1b84cd31d | | |
| | 9232c8647a | | |
| | 23e8c7d616 | | |
| | 42607fbd64 | | |
| | 28781a5f02 | | |
| | 3589e11244 | | |
| | 5e880d3942 | | |

17

.github/main.workflow

vendored

Normal file


@@ -0,0 +1,17 @@
+workflow "Publish Helm charts" {
+  on = "push"
+  resolves = ["helm-push"]
+}
+
+action "helm-lint" {
+  uses = "stefanprodan/gh-actions/helm@master"
+  args = ["lint charts/*"]
+}
+
+action "helm-push" {
+  needs = ["helm-lint"]
+  uses = "stefanprodan/gh-actions/helm-gh-pages@master"
+  args = ["charts/*","https://flagger.app"]
+  secrets = ["GITHUB_TOKEN"]
+}
+

@@ -23,9 +23,10 @@ after_success:
   - if [ -z "$DOCKER_USER" ]; then
       echo "PR build, skipping image push";
     else
-      docker tag stefanprodan/flagger:latest quay.io/stefanprodan/flagger:${TRAVIS_COMMIT};
+      BRANCH_COMMIT=${TRAVIS_BRANCH}-$(echo ${TRAVIS_COMMIT} | head -c7);
+      docker tag stefanprodan/flagger:latest quay.io/stefanprodan/flagger:${BRANCH_COMMIT};
       echo $DOCKER_PASS | docker login -u=$DOCKER_USER --password-stdin quay.io;
-      docker push quay.io/stefanprodan/flagger:${TRAVIS_COMMIT};
+      docker push quay.io/stefanprodan/flagger:${BRANCH_COMMIT};
     fi
   - if [ -z "$TRAVIS_TAG" ]; then
       echo "Not a release, skipping image push";
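The hunk above derives an image tag from the branch name and the first seven characters of the commit SHA. A minimal Python sketch of that string logic follows; the function name and the sample values are hypothetical and only illustrate what `head -c7` does to the commit hash.

```python
def branch_commit_tag(branch: str, commit: str) -> str:
    """Build '<branch>-<first 7 chars of commit>', like the BRANCH_COMMIT shell variable."""
    # `head -c7` keeps the first seven bytes of the commit hash.
    return f"{branch}-{commit[:7]}"


# Sample values are made up for illustration.
print(branch_commit_tag("master", "0123456789abcdef"))
```

Tagging by branch and short SHA keeps image names readable while still pointing at a specific commit.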

44

Dockerfile.loadtester

Normal file


@@ -0,0 +1,44 @@
+FROM golang:1.11 AS hey-builder
+
+RUN mkdir -p /go/src/github.com/rakyll/hey/
+
+WORKDIR /go/src/github.com/rakyll/hey
+
+ADD https://github.com/rakyll/hey/archive/v0.1.1.tar.gz .
+
+RUN tar xzf v0.1.1.tar.gz --strip 1
+
+RUN go get ./...
+
+RUN CGO_ENABLED=0 GOOS=linux GOARCH=amd64 \
+    go install -ldflags '-w -extldflags "-static"' \
+    /go/src/github.com/rakyll/hey
+
+FROM golang:1.11 AS builder
+
+RUN mkdir -p /go/src/github.com/stefanprodan/flagger/
+
+WORKDIR /go/src/github.com/stefanprodan/flagger
+
+COPY . .
+
+RUN go test -race ./pkg/loadtester/
+
+RUN CGO_ENABLED=0 GOOS=linux go build -a -installsuffix cgo -o loadtester ./cmd/loadtester/*
+
+FROM alpine:3.8
+
+RUN addgroup -S app \
+    && adduser -S -g app app \
+    && apk --no-cache add ca-certificates curl
+
+WORKDIR /home/app
+
+COPY --from=hey-builder /go/bin/hey /usr/local/bin/hey
+COPY --from=builder /go/src/github.com/stefanprodan/flagger/loadtester .
+
+RUN chown -R app:app ./
+
+USER app
+
+ENTRYPOINT ["./loadtester"]

9

Makefile


@@ -3,6 +3,8 @@ VERSION?=$(shell grep 'VERSION' pkg/version/version.go | awk '{ print $$4 }' | t
 VERSION_MINOR:=$(shell grep 'VERSION' pkg/version/version.go | awk '{ print $$4 }' | tr -d '"' | rev | cut -d'.' -f2- | rev)
 PATCH:=$(shell grep 'VERSION' pkg/version/version.go | awk '{ print $$4 }' | tr -d '"' | awk -F. '{print $$NF}')
 SOURCE_DIRS = cmd pkg/apis pkg/controller pkg/server pkg/logging pkg/version
+LT_VERSION?=$(shell grep 'VERSION' cmd/loadtester/main.go | awk '{ print $$4 }' | tr -d '"' | head -n1)
+
 run:
 	go run cmd/flagger/* -kubeconfig=$$HOME/.kube/config -log-level=info \
 	-metrics-server=https://prometheus.iowa.weavedx.com \
@@ -29,7 +31,7 @@ test: test-fmt test-codegen
 	go test ./...

 helm-package:
-	cd charts/ && helm package flagger/ && helm package grafana/
+	cd charts/ && helm package flagger/ && helm package grafana/ && helm package loadtester/
 	mv charts/*.tgz docs/
 	helm repo index docs --url https://stefanprodan.github.io/flagger --merge ./docs/index.yaml

@@ -44,6 +46,7 @@ version-set:
 	sed -i '' "s/flagger:$$current/flagger:$$next/g" artifacts/flagger/deployment.yaml && \
 	sed -i '' "s/tag: $$current/tag: $$next/g" charts/flagger/values.yaml && \
 	sed -i '' "s/appVersion: $$current/appVersion: $$next/g" charts/flagger/Chart.yaml && \
+	sed -i '' "s/version: $$current/version: $$next/g" charts/flagger/Chart.yaml && \
 	echo "Version $$next set in code, deployment and charts"

 version-up:
@@ -77,3 +80,7 @@ reset-test:
 	kubectl delete -f ./artifacts/namespaces
 	kubectl apply -f ./artifacts/namespaces
 	kubectl apply -f ./artifacts/canaries
+
+loadtester-push:
+	docker build -t quay.io/stefanprodan/flagger-loadtester:$(LT_VERSION) . -f Dockerfile.loadtester
+	docker push quay.io/stefanprodan/flagger-loadtester:$(LT_VERSION)
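The `LT_VERSION` variable added in this Makefile chains `grep`, `awk '{ print $4 }'`, `tr -d '"'` and `head -n1`. The Python sketch below mimics that pipeline on a string. It assumes, hypothetically, that `cmd/loadtester/main.go` declares the version on a line shaped like `var VERSION = "0.1.0"`; the diff does not show that file, so treat the sample input as illustrative only.

```python
from typing import Optional


def extract_version(source: str) -> Optional[str]:
    """Return the 4th whitespace field (quotes removed) of the first line containing VERSION."""
    for line in source.splitlines():
        if "VERSION" not in line:          # grep 'VERSION'
            continue
        fields = line.split()              # awk splits on runs of whitespace
        fourth = fields[3] if len(fields) >= 4 else ""   # awk '{ print $4 }'
        return fourth.replace('"', "")     # tr -d '"', and head -n1 keeps the first match
    return None


# Hypothetical file content, not taken from the repository.
sample = 'package main\n\nvar VERSION = "0.1.0"\n'
print(extract_version(sample))
```

Because `head -n1` keeps only the first match, any earlier line that mentions `VERSION` with fewer than four fields would yield an empty string, which the sketch reproduces.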

65

README.md


@@ -8,8 +8,8 @@

 Flagger is a Kubernetes operator that automates the promotion of canary deployments
 using Istio routing for traffic shifting and Prometheus metrics for canary analysis.
-The canary analysis can be extended with webhooks for running integration tests, load tests or any other custom
-validation.
+The canary analysis can be extended with webhooks for running integration tests,
+load tests or any other custom validation.

 ### Install


@@ -28,7 +28,7 @@ helm upgrade -i flagger flagger/flagger \
     --set metricsServer=http://prometheus.istio-system:9090
 ```

-Flagger is compatible with Kubernetes >1.10.0 and Istio >1.0.0.
+Flagger is compatible with Kubernetes >1.11.0 and Istio >1.0.0.

 ### Usage


@@ -38,6 +38,9 @@ ClusterIP [services](https://kubernetes.io/docs/concepts/services-networking/ser
 Istio [virtual services](https://istio.io/docs/reference/config/istio.networking.v1alpha3/#VirtualService))
 to drive the canary analysis and promotion.

+Flagger keeps track of ConfigMaps and Secrets referenced by a Kubernetes Deployment and triggers a canary analysis if any of those objects change.
+When promoting a workload in production, both code (container images) and configuration (config maps and secrets) are being synchronised.
+


 Gated canary promotion stages:

@@ -48,28 +51,28 @@ Gated canary promotion stages:
     * halt advancement if a rolling update is underway
     * halt advancement if pods are unhealthy
 * increase canary traffic weight percentage from 0% to 5% (step weight)
+* call webhooks and check results
 * check canary HTTP request success rate and latency
     * halt advancement if any metric is under the specified threshold
     * increment the failed checks counter
 * check if the number of failed checks reached the threshold
     * route all traffic to primary
     * scale to zero the canary deployment and mark it as failed
-    * wait for the canary deployment to be updated (revision bump) and start over
+    * wait for the canary deployment to be updated and start over
 * increase canary traffic weight by 5% (step weight) till it reaches 50% (max weight)
     * halt advancement while canary request success rate is under the threshold
     * halt advancement while canary request duration P99 is over the threshold
     * halt advancement if the primary or canary deployment becomes unhealthy
     * halt advancement while canary deployment is being scaled up/down by HPA
 * promote canary to primary
+    * copy ConfigMaps and Secrets from canary to primary
     * copy canary deployment spec template over primary
 * wait for primary rolling update to finish
     * halt advancement if pods are unhealthy
 * route all traffic to primary
 * scale to zero the canary deployment
 * mark rollout as finished
-* wait for the canary deployment to be updated (revision bump) and start over
-
-You can change the canary analysis _max weight_ and the _step weight_ percentage in the Flagger's custom resource.
+* wait for the canary deployment to be updated and start over

 For a deployment named _podinfo_, a canary promotion can be defined using Flagger's custom resource:


@@ -127,12 +130,11 @@ spec:
       interval: 30s
     # external checks (optional)
     webhooks:
-      - name: integration-tests
-        url: http://podinfo.test:9898/echo
-        timeout: 1m
+      - name: load-test
+        url: http://flagger-loadtester.test/
+        timeout: 5s
         metadata:
-          test: "all"
-          token: "16688eb5e9f289f1991c"
+          cmd: "hey -z 1m -q 10 -c 2 http://podinfo.test:9898/"
 ```

 The canary analysis is using the following promql queries:

@@ -211,6 +213,13 @@ kubectl apply -f ${REPO}/artifacts/canaries/deployment.yaml
 kubectl apply -f ${REPO}/artifacts/canaries/hpa.yaml
 ```

+Deploy the load testing service to generate traffic during the canary analysis:
+
+```bash
+kubectl -n test apply -f ${REPO}/artifacts/loadtester/deployment.yaml
+kubectl -n test apply -f ${REPO}/artifacts/loadtester/service.yaml
+```
+
 Create a canary promotion custom resource (replace the Istio gateway and the internet domain with your own):

 ```bash

@@ -239,18 +248,22 @@ Trigger a canary deployment by updating the container image:

 ```bash
 kubectl -n test set image deployment/podinfo \
-podinfod=quay.io/stefanprodan/podinfo:1.2.1
+podinfod=quay.io/stefanprodan/podinfo:1.4.0
 ```

-Flagger detects that the deployment revision changed and starts a new rollout:
+**Note** that Flagger tracks changes in the deployment `PodSpec` but also in `ConfigMaps` and `Secrets`
+that are referenced in the pod's volumes and containers environment variables.
+
+Flagger detects that the deployment revision changed and starts a new canary analysis:

 ```
 kubectl -n test describe canary/podinfo

 Status:
-  Canary Revision: 19871136
-  Failed Checks: 0
-  State: finished
+  Canary Weight: 0
+  Failed Checks: 0
+  Last Transition Time: 2019-01-16T13:47:16Z
+  Phase: Succeeded
 Events:
   Type Reason Age From Message
   ---- ------ ---- ---- -------

@@ -272,6 +285,15 @@ Events:
   Normal Synced 5s flagger Promotion completed! Scaling down podinfo.test
 ```

+You can monitor all canaries with:
+
+```bash
+watch kubectl get canaries --all-namespaces
+
+NAMESPACE   NAME      STATUS        WEIGHT   LASTTRANSITIONTIME
+test        podinfo   Progressing   5        2019-01-16T14:05:07Z
+```
+
 During the canary analysis you can generate HTTP 500 errors and high latency to test if Flagger pauses the rollout.

 Create a tester pod and exec into it:

@@ -300,9 +322,10 @@ the canary is scaled to zero and the rollout is marked as failed.
 kubectl -n test describe canary/podinfo

 Status:
-  Canary Revision: 16695041
-  Failed Checks: 10
-  State: failed
+  Canary Weight: 0
+  Failed Checks: 10
+  Last Transition Time: 2019-01-16T13:47:16Z
+  Phase: Failed
 Events:
   Type Reason Age From Message
   ---- ------ ---- ---- -------

@@ -319,6 +342,8 @@ Events:
   Warning Synced 1m flagger Canary failed! Scaling down podinfo.test
 ```

+**Note** that if you apply new changes to the deployment during the canary analysis, Flagger will restart the analysis.
+
 ### Monitoring

 Flagger comes with a Grafana dashboard made for canary analysis.

@@ -51,9 +51,8 @@ spec:
       interval: 30s
     # external checks (optional)
     webhooks:
-      - name: integration-tests
-        url: https://httpbin.org/post
-        timeout: 1m
+      - name: load-test
+        url: http://flagger-loadtester.test/
+        timeout: 5s
         metadata:
-          test: "all"
-          token: "16688eb5e9f289f1991c"
+          cmd: "hey -z 1m -q 10 -c 2 http://podinfo.test:9898/"

58

artifacts/configs/canary.yaml

Normal file


@@ -0,0 +1,58 @@
+apiVersion: flagger.app/v1alpha3
+kind: Canary
+metadata:
+  name: podinfo
+  namespace: test
+spec:
+  # deployment reference
+  targetRef:
+    apiVersion: apps/v1
+    kind: Deployment
+    name: podinfo
+  # the maximum time in seconds for the canary deployment
+  # to make progress before it is rollback (default 600s)
+  progressDeadlineSeconds: 60
+  # HPA reference (optional)
+  autoscalerRef:
+    apiVersion: autoscaling/v2beta1
+    kind: HorizontalPodAutoscaler
+    name: podinfo
+  service:
+    # container port
+    port: 9898
+    # Istio gateways (optional)
+    gateways:
+    - public-gateway.istio-system.svc.cluster.local
+    # Istio virtual service host names (optional)
+    hosts:
+    - app.iowa.weavedx.com
+  canaryAnalysis:
+    # schedule interval (default 60s)
+    interval: 10s
+    # max number of failed metric checks before rollback
+    threshold: 10
+    # max traffic percentage routed to canary
+    # percentage (0-100)
+    maxWeight: 50
+    # canary increment step
+    # percentage (0-100)
+    stepWeight: 5
+    # Istio Prometheus checks
+    metrics:
+    - name: istio_requests_total
+      # minimum req success rate (non 5xx responses)
+      # percentage (0-100)
+      threshold: 99
+      interval: 1m
+    - name: istio_request_duration_seconds_bucket
+      # maximum req duration P99
+      # milliseconds
+      threshold: 500
+      interval: 30s
+    # external checks (optional)
+    webhooks:
+      - name: load-test
+        url: http://flagger-loadtester.test/
+        timeout: 5s
+        metadata:
+          cmd: "hey -z 1m -q 10 -c 2 http://podinfo.test:9898/"
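To make the `canaryAnalysis` numbers in this manifest concrete, the sketch below computes the sequence of canary traffic weights and two rough time bounds from `interval`, `stepWeight`, `maxWeight` and `threshold`. It assumes one weight increase per successful interval and one failed check per interval, which is a simplification of what the controller does; the function names are hypothetical and not part of Flagger.

```python
from typing import List


def parse_seconds(duration: str) -> int:
    """Convert a simple duration such as '10s' or '1m' to seconds."""
    units = {"s": 1, "m": 60, "h": 3600}
    return int(duration[:-1]) * units[duration[-1]]


def canary_weights(step_weight: int, max_weight: int) -> List[int]:
    """Traffic percentages the canary would pass through, in order."""
    return list(range(step_weight, max_weight + 1, step_weight))


def min_promotion_seconds(interval: str, step_weight: int, max_weight: int) -> int:
    """Lower bound on analysis time if every check passes (assumption: one step per interval)."""
    return parse_seconds(interval) * len(canary_weights(step_weight, max_weight))


def rollback_seconds(interval: str, threshold: int) -> int:
    """Time until rollback if every check fails (assumption: one failed check per interval)."""
    return parse_seconds(interval) * threshold


# Values from the manifest above: interval 10s, stepWeight 5, maxWeight 50, threshold 10.
print(canary_weights(5, 50))
print(min_promotion_seconds("10s", 5, 50), rollback_seconds("10s", 10))
```

With the values in this file the canary would step through ten weights, so both bounds come out at 100 seconds under these assumptions. Unhealthy pods, HPA scaling, or slow webhooks would lengthen the real analysis.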

16

artifacts/configs/configs.yaml

Normal file


@@ -0,0 +1,16 @@
+apiVersion: v1
+kind: ConfigMap
+metadata:
+  name: podinfo-config-env
+  namespace: test
+data:
+  color: blue
+---
+apiVersion: v1
+kind: ConfigMap
+metadata:
+  name: podinfo-config-vol
+  namespace: test
+data:
+  output: console
+  textmode: "true"

89

artifacts/configs/deployment.yaml

Normal file


@@ -0,0 +1,89 @@
+apiVersion: apps/v1
+kind: Deployment
+metadata:
+  name: podinfo
+  namespace: test
+  labels:
+    app: podinfo
+spec:
+  minReadySeconds: 5
+  revisionHistoryLimit: 5
+  progressDeadlineSeconds: 60
+  strategy:
+    rollingUpdate:
+      maxUnavailable: 0
+    type: RollingUpdate
+  selector:
+    matchLabels:
+      app: podinfo
+  template:
+    metadata:
+      annotations:
+        prometheus.io/scrape: "true"
+      labels:
+        app: podinfo
+    spec:
+      containers:
+      - name: podinfod
+        image: quay.io/stefanprodan/podinfo:1.3.0
+        imagePullPolicy: IfNotPresent
+        ports:
+        - containerPort: 9898
+          name: http
+          protocol: TCP
+        command:
+        - ./podinfo
+        - --port=9898
+        - --level=info
+        - --random-delay=false
+        - --random-error=false
+        env:
+        - name: PODINFO_UI_COLOR
+          valueFrom:
+            configMapKeyRef:
+              name: podinfo-config-env
+              key: color
+        - name: SECRET_USER
+          valueFrom:
+            secretKeyRef:
+              name: podinfo-secret-env
+              key: user
+        livenessProbe:
+          exec:
+            command:
+            - podcli
+            - check
+            - http
+            - localhost:9898/healthz
+          initialDelaySeconds: 5
+          timeoutSeconds: 5
+        readinessProbe:
+          exec:
+            command:
+            - podcli
+            - check
+            - http
+            - localhost:9898/readyz
+          initialDelaySeconds: 5
+          timeoutSeconds: 5
+        resources:
+          limits:
+            cpu: 2000m
+            memory: 512Mi
+          requests:
+            cpu: 100m
+            memory: 64Mi
+        volumeMounts:
+        - name: configs
+          mountPath: /etc/podinfo/configs
+          readOnly: true
+        - name: secrets
+          mountPath: /etc/podinfo/secrets
+          readOnly: true
+      volumes:
+      - name: configs
+        configMap:
+          name: podinfo-config-vol
+      - name: secrets
+        secret:
+          secretName: podinfo-secret-vol

19

artifacts/configs/hpa.yaml

Normal file


@@ -0,0 +1,19 @@
+apiVersion: autoscaling/v2beta1
+kind: HorizontalPodAutoscaler
+metadata:
+  name: podinfo
+  namespace: test
+spec:
+  scaleTargetRef:
+    apiVersion: apps/v1
+    kind: Deployment
+    name: podinfo
+  minReplicas: 1
+  maxReplicas: 4
+  metrics:
+  - type: Resource
+    resource:
+      name: cpu
+      # scale up if usage is above
+      # 99% of the requested CPU (100m)
+      targetAverageUtilization: 99
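As a rough illustration of what `targetAverageUtilization: 99` with `minReplicas: 1` and `maxReplicas: 4` implies, the sketch below applies the core ratio from the Kubernetes autoscaling documentation, `ceil(currentReplicas * currentUtilization / targetUtilization)`, and clamps the result. It deliberately ignores the autoscaler's tolerance band, readiness handling and stabilization behaviour, and the function name is hypothetical.

```python
import math


def desired_replicas(current_replicas: int, current_utilization: float,
                     target_utilization: float, min_replicas: int, max_replicas: int) -> int:
    """Simplified HPA replica calculation, clamped to the configured bounds."""
    raw = math.ceil(current_replicas * current_utilization / target_utilization)
    return max(min_replicas, min(max_replicas, raw))


# One pod using 200% of its CPU request, with the bounds from the manifest above.
print(desired_replicas(1, 200, 99, 1, 4))
```

Because the CPU request in the podinfo deployment is small (100m), a load test can push utilization past the target quickly, which is presumably why the canary stages account for HPA scaling.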

16

artifacts/configs/secrets.yaml

Normal file


@@ -0,0 +1,16 @@
+apiVersion: v1
+kind: Secret
+metadata:
+  name: podinfo-secret-env
+  namespace: test
+data:
+  password: cGFzc3dvcmQ=
+  user: YWRtaW4=
+---
+apiVersion: v1
+kind: Secret
+metadata:
+  name: podinfo-secret-vol
+  namespace: test
+data:
+  key: cGFzc3dvcmQ=
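Values under `data:` in a Kubernetes Secret are base64-encoded, not encrypted. The short Python sketch below decodes the values from the first Secret in this file using only the standard library; the helper name is hypothetical.

```python
import base64
from typing import Dict


def decode_secret_data(data: Dict[str, str]) -> Dict[str, str]:
    """Decode every base64 value of a Secret's `data` map into text."""
    return {key: base64.b64decode(value).decode("utf-8") for key, value in data.items()}


# Values copied from podinfo-secret-env above.
print(decode_secret_data({"password": "cGFzc3dvcmQ=", "user": "YWRtaW4="}))
```

These are demo credentials for the test namespace; anything sensitive should not be committed in this form.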

@@ -19,7 +19,21 @@ spec:
     plural: canaries
     singular: canary
     kind: Canary
+    categories:
+    - all
   scope: Namespaced
+  subresources:
+    status: {}
+  additionalPrinterColumns:
+  - name: Status
+    type: string
+    JSONPath: .status.phase
+  - name: Weight
+    type: string
+    JSONPath: .status.canaryWeight
+  - name: LastTransitionTime
+    type: string
+    JSONPath: .status.lastTransitionTime
   validation:
     openAPIV3Schema:
       properties:

@@ -22,7 +22,7 @@ spec:
       serviceAccountName: flagger
       containers:
       - name: flagger
-        image: quay.io/stefanprodan/flagger:0.3.0
+        image: quay.io/stefanprodan/flagger:0.5.0
         imagePullPolicy: Always
         ports:
         - name: http

60

artifacts/loadtester/deployment.yaml

Normal file


@@ -0,0 +1,60 @@
+apiVersion: apps/v1
+kind: Deployment
+metadata:
+  name: flagger-loadtester
+  labels:
+    app: flagger-loadtester
+spec:
+  selector:
+    matchLabels:
+      app: flagger-loadtester
+  template:
+    metadata:
+      labels:
+        app: flagger-loadtester
+      annotations:
+        prometheus.io/scrape: "true"
+    spec:
+      containers:
+        - name: loadtester
+          image: quay.io/stefanprodan/flagger-loadtester:0.1.0
+          imagePullPolicy: IfNotPresent
+          ports:
+            - name: http
+              containerPort: 8080
+          command:
+            - ./loadtester
+            - -port=8080
+            - -log-level=info
+            - -timeout=1h
+            - -log-cmd-output=true
+          livenessProbe:
+            exec:
+              command:
+              - wget
+              - --quiet
+              - --tries=1
+              - --timeout=4
+              - --spider
+              - http://localhost:8080/healthz
+            timeoutSeconds: 5
+          readinessProbe:
+            exec:
+              command:
+              - wget
+              - --quiet
+              - --tries=1
+              - --timeout=4
+              - --spider
+              - http://localhost:8080/healthz
+            timeoutSeconds: 5
+          resources:
+            limits:
+              memory: "512Mi"
+              cpu: "1000m"
+            requests:
+              memory: "32Mi"
+              cpu: "10m"
+          securityContext:
+            readOnlyRootFilesystem: true
+            runAsUser: 10001

15

artifacts/loadtester/service.yaml

Normal file


@@ -0,0 +1,15 @@
+apiVersion: v1
+kind: Service
+metadata:
+  name: flagger-loadtester
+  labels:
+    app: flagger-loadtester
+spec:
+  type: ClusterIP
+  selector:
+    app: flagger-loadtester
+  ports:
+    - name: http
+      port: 80
+      protocol: TCP
+      targetPort: http

@@ -1,8 +1,8 @@
 apiVersion: v1
 name: flagger
-version: 0.3.0
-appVersion: 0.3.0
-kubeVersion: ">=1.9.0-0"
+version: 0.5.0
+appVersion: 0.5.0
+kubeVersion: ">=1.11.0-0"
 engine: gotpl
 description: Flagger is a Kubernetes operator that automates the promotion of canary deployments using Istio routing for traffic shifting and Prometheus metrics for canary analysis.
 home: https://docs.flagger.app

@@ -8,7 +8,7 @@ Based on the KPIs analysis a canary is promoted or aborted and the analysis resu

 ## Prerequisites

-* Kubernetes >= 1.9
+* Kubernetes >= 1.11
 * Istio >= 1.0
 * Prometheus >= 2.6


@@ -20,7 +20,21 @@ spec:
     plural: canaries
     singular: canary
     kind: Canary
+    categories:
+    - all
   scope: Namespaced
+  subresources:
+    status: {}
+  additionalPrinterColumns:
+  - name: Status
+    type: string
+    JSONPath: .status.phase
+  - name: Weight
+    type: string
+    JSONPath: .status.canaryWeight
+  - name: LastTransitionTime
+    type: string
+    JSONPath: .status.lastTransitionTime
   validation:
     openAPIV3Schema:
       properties:

@@ -2,7 +2,7 @@

 image:
   repository: quay.io/stefanprodan/flagger
-  tag: 0.3.0
+  tag: 0.5.0
   pullPolicy: IfNotPresent

 metricsServer: "http://prometheus.istio-system.svc.cluster.local:9090"

@@ -6,7 +6,7 @@ Grafana dashboards for monitoring progressive deployments powered by Istio, Prom

 ## Prerequisites

-* Kubernetes >= 1.9
+* Kubernetes >= 1.11
 * Istio >= 1.0
 * Prometheus >= 2.6


@@ -75,5 +75,5 @@ helm install flagger/grafana --name flagger-grafana -f values.yaml

|

||||

```

|

||||

|

||||

> **Tip**: You can use the default [values.yaml](values.yaml)

|

||||

```

|

||||

|

||||

|

||||

|

||||

22

charts/loadtester/.helmignore

Normal file


@@ -0,0 +1,22 @@
+# Patterns to ignore when building packages.
+# This supports shell glob matching, relative path matching, and
+# negation (prefixed with !). Only one pattern per line.
+.DS_Store
+# Common VCS dirs
+.git/
+.gitignore
+.bzr/
+.bzrignore
+.hg/
+.hgignore
+.svn/
+# Common backup files
+*.swp
+*.bak
+*.tmp
+*~
+# Various IDEs
+.project
+.idea/
+*.tmproj
+.vscode/

20

charts/loadtester/Chart.yaml

Normal file


@@ -0,0 +1,20 @@
+apiVersion: v1
+name: loadtester
+version: 0.1.0
+appVersion: 0.1.0
+kubeVersion: ">=1.11.0-0"
+engine: gotpl
+description: Flagger's load testing services based on rakyll/hey that generates traffic during canary analysis when configured as a webhook.
+home: https://docs.flagger.app
+icon: https://raw.githubusercontent.com/stefanprodan/flagger/master/docs/logo/flagger-icon.png
+sources:
+- https://github.com/stefanprodan/flagger
+maintainers:
+- name: stefanprodan
+  url: https://github.com/stefanprodan
+  email: stefanprodan@users.noreply.github.com
+keywords:
+- canary
+- istio
+- gitops
+- load testing

78

charts/loadtester/README.md

Normal file


@@ -0,0 +1,78 @@
+# Flagger load testing service
+
+[Flagger's](https://github.com/stefanprodan/flagger) load testing service is based on
+[rakyll/hey](https://github.com/rakyll/hey)
+and can be used to generates traffic during canary analysis when configured as a webhook.
+
+## Prerequisites
+
+* Kubernetes >= 1.11
+* Istio >= 1.0
+
+## Installing the Chart
+
+Add Flagger Helm repository:
+
+```console
+helm repo add flagger https://flagger.app
+```
+
+To install the chart with the release name `flagger-loadtester`:
+
+```console
+helm upgrade -i flagger-loadtester flagger/loadtester
+```
+
+The command deploys Grafana on the Kubernetes cluster in the default namespace.
+
+> **Tip**: Note that the namespace where you deploy the load tester should have the Istio sidecar injection enabled
+
+The [configuration](#configuration) section lists the parameters that can be configured during installation.
+
+## Uninstalling the Chart
+
+To uninstall/delete the `flagger-loadtester` deployment:
+
+```console
+helm delete --purge flagger-loadtester
+```
+
+The command removes all the Kubernetes components associated with the chart and deletes the release.
+
+## Configuration
+
+The following tables lists the configurable parameters of the load tester chart and their default values.
+
+Parameter | Description | Default
+--- | --- | ---
+`image.repository` | Image repository | `quay.io/stefanprodan/flagger-loadtester`
+`image.pullPolicy` | Image pull policy | `IfNotPresent`
+`image.tag` | Image tag | `<VERSION>`
+`replicaCount` | desired number of pods | `1`
+`resources.requests.cpu` | CPU requests | `10m`
+`resources.requests.memory` | memory requests | `64Mi`
+`tolerations` | List of node taints to tolerate | `[]`
+`affinity` | node/pod affinities | `node`
+`nodeSelector` | node labels for pod assignment | `{}`
+`service.type` | type of service | `ClusterIP`
+`service.port` | ClusterIP port | `80`
+`cmd.logOutput` | Log the command output to stderr | `true`
+`cmd.timeout` | Command execution timeout | `1h`
+`logLevel` | Log level can be debug, info, warning, error or panic | `info`
+
+Specify each parameter using the `--set key=value[,key=value]` argument to `helm install`. For example,
+
+```console
+helm install flagger/loadtester --name flagger-loadtester \
+  --set cmd.logOutput=false
+```
+
+Alternatively, a YAML file that specifies the values for the above parameters can be provided while installing the chart. For example,
+
+```console
+helm install flagger/loadtester --name flagger-loadtester -f values.yaml
+```
+
+> **Tip**: You can use the default [values.yaml](values.yaml)
+
+

1

charts/loadtester/templates/NOTES.txt

Normal file


@@ -0,0 +1 @@
+Flagger's load testing service is available at http://{{ include "loadtester.fullname" . }}.{{ .Release.Namespace }}/

32

charts/loadtester/templates/_helpers.tpl

Normal file


@@ -0,0 +1,32 @@
+{{/* vim: set filetype=mustache: */}}
+{{/*
+Expand the name of the chart.
+*/}}
+{{- define "loadtester.name" -}}
+{{- default .Chart.Name .Values.nameOverride | trunc 63 | trimSuffix "-" -}}
+{{- end -}}
+
+{{/*
+Create a default fully qualified app name.
+We truncate at 63 chars because some Kubernetes name fields are limited to this (by the DNS naming spec).
+If release name contains chart name it will be used as a full name.
+*/}}
+{{- define "loadtester.fullname" -}}
+{{- if .Values.fullnameOverride -}}
+{{- .Values.fullnameOverride | trunc 63 | trimSuffix "-" -}}
+{{- else -}}
+{{- $name := default .Chart.Name .Values.nameOverride -}}
+{{- if contains $name .Release.Name -}}
+{{- .Release.Name | trunc 63 | trimSuffix "-" -}}
+{{- else -}}
+{{- printf "%s-%s" .Release.Name $name | trunc 63 | trimSuffix "-" -}}
+{{- end -}}
+{{- end -}}
+{{- end -}}
+
+{{/*
+Create chart name and version as used by the chart label.
+*/}}
+{{- define "loadtester.chart" -}}
+{{- printf "%s-%s" .Chart.Name .Chart.Version | replace "+" "_" | trunc 63 | trimSuffix "-" -}}
+{{- end -}}

66

charts/loadtester/templates/deployment.yaml

Normal file


@@ -0,0 +1,66 @@

|

||||

apiVersion: apps/v1

|

||||

kind: Deployment

|

||||

metadata:

|

||||

name: {{ include "loadtester.fullname" . }}

|

||||

labels:

|

||||

app.kubernetes.io/name: {{ include "loadtester.name" . }}

|

||||

helm.sh/chart: {{ include "loadtester.chart" . }}

|

||||

app.kubernetes.io/instance: {{ .Release.Name }}

|

||||

app.kubernetes.io/managed-by: {{ .Release.Service }}

|

||||

spec:

|

||||

replicas: {{ .Values.replicaCount }}

|

||||

selector:

|

||||

matchLabels:

|

||||

app: {{ include "loadtester.name" . }}

|

||||

template:

|

||||

metadata:

|

||||

labels:

|

||||

app: {{ include "loadtester.name" . }}

|

||||

spec:

|

||||

containers:

|

||||

- name: {{ .Chart.Name }}

|

||||

image: "{{ .Values.image.repository }}:{{ .Values.image.tag }}"

|

||||

imagePullPolicy: {{ .Values.image.pullPolicy }}

|

||||

ports:

|

||||

- name: http

|

||||

containerPort: 8080

|

||||

command:

|

||||

- ./loadtester

|

||||

- -port=8080

|

||||

- -log-level={{ .Values.logLevel }}

|

||||

- -timeout={{ .Values.cmd.timeout }}

|

||||

- -log-cmd-output={{ .Values.cmd.logOutput }}

|

||||

livenessProbe:

|

||||

exec:

|

||||

command:

|

||||

- wget

|

||||

- --quiet

|

||||

- --tries=1

|

||||

- --timeout=4

|

||||

- --spider

|

||||

- http://localhost:8080/healthz

|

||||

timeoutSeconds: 5

|

||||

readinessProbe:

|

||||

exec:

|

||||

command:

|

||||

- wget

|

||||

- --quiet

|

||||

- --tries=1

|

||||

- --timeout=4

|

||||

- --spider

|

||||

- http://localhost:8080/healthz

|

||||

timeoutSeconds: 5

|

||||

resources:

|

||||

{{- toYaml .Values.resources | nindent 12 }}

|

||||

{{- with .Values.nodeSelector }}

|

||||

nodeSelector:

|

||||

{{- toYaml . | nindent 8 }}

|

||||

{{- end }}

|

||||

{{- with .Values.affinity }}

|

||||

affinity:

|

||||

{{- toYaml . | nindent 8 }}

|

||||

{{- end }}

|

||||

{{- with .Values.tolerations }}

|

||||

tolerations:

|

||||

{{- toYaml . | nindent 8 }}

|

||||

{{- end }}

|

||||

18

charts/loadtester/templates/service.yaml

Normal file


@@ -0,0 +1,18 @@

|

||||

apiVersion: v1

|

||||

kind: Service

|

||||

metadata:

|

||||

name: {{ include "loadtester.fullname" . }}

|

||||

labels:

|

||||

app.kubernetes.io/name: {{ include "loadtester.name" . }}

|

||||

helm.sh/chart: {{ include "loadtester.chart" . }}

|

||||

app.kubernetes.io/instance: {{ .Release.Name }}

|

||||

app.kubernetes.io/managed-by: {{ .Release.Service }}

|

||||

spec:

|

||||

type: {{ .Values.service.type }}

|

||||

ports:

|

||||

- port: {{ .Values.service.port }}

|

||||

targetPort: http

|

||||

protocol: TCP

|

||||

name: http

|

||||

selector:

|

||||

app: {{ include "loadtester.name" . }}

|

||||

29

charts/loadtester/values.yaml

Normal file


@@ -0,0 +1,29 @@

|

||||

replicaCount: 1

|

||||

|

||||

image:

|

||||

repository: quay.io/stefanprodan/flagger-loadtester

|

||||

tag: 0.1.0

|

||||

pullPolicy: IfNotPresent

|

||||

|

||||

logLevel: info

|

||||

cmd:

|

||||

logOutput: true

|

||||

timeout: 1h

|

||||

|

||||

nameOverride: ""

|

||||

fullnameOverride: ""

|

||||

|

||||

service:

|

||||

type: ClusterIP

|

||||

port: 80

|

||||

|

||||

resources:

|

||||

requests:

|

||||

cpu: 10m

|

||||

memory: 64Mi

|

||||

|

||||

nodeSelector: {}

|

||||

|

||||

tolerations: []

|

||||

|

||||

affinity: {}

|

||||

44

cmd/loadtester/main.go

Normal file


@@ -0,0 +1,44 @@

|

||||

package main

import (
	"flag"
	"github.com/knative/pkg/signals"
	"github.com/stefanprodan/flagger/pkg/loadtester"
	"github.com/stefanprodan/flagger/pkg/logging"
	"log"
	"time"
)

var VERSION = "0.1.0"
var (
	logLevel     string
	port         string
	timeout      time.Duration
	logCmdOutput bool
)

func init() {
	flag.StringVar(&logLevel, "log-level", "debug", "Log level can be: debug, info, warning, error.")
	flag.StringVar(&port, "port", "9090", "Port to listen on.")
	flag.DurationVar(&timeout, "timeout", time.Hour, "Command exec timeout.")
	flag.BoolVar(&logCmdOutput, "log-cmd-output", true, "Log command output to stderr")
}

func main() {
	flag.Parse()

	logger, err := logging.NewLogger(logLevel)
	if err != nil {
		log.Fatalf("Error creating logger: %v", err)
	}
	defer logger.Sync()

	stopCh := signals.SetupSignalHandler()

	taskRunner := loadtester.NewTaskRunner(logger, timeout, logCmdOutput)

	go taskRunner.Start(100*time.Millisecond, stopCh)

	logger.Infof("Starting load tester v%s API on port %s", VERSION, port)
	loadtester.ListenAndServe(port, time.Minute, logger, taskRunner, stopCh)
}

|

||||

@@ -1 +0,0 @@

|

||||

flagger.app

|

||||

@@ -1,11 +0,0 @@

|

||||

# Flagger

|

||||

|

||||

Flagger is a Kubernetes operator that automates the promotion of canary deployments using Istio routing for traffic

|

||||

shifting and Prometheus metrics for canary analysis.

|

||||

|

||||

Flagger implements a control loop that gradually shifts traffic to the canary while measuring key performance

|

||||

indicators like HTTP requests success rate, requests average duration and pods health. Based on the KPIs analysis

|

||||

a canary is promoted or aborted and the analysis result is published to Slack.

|

||||

|

||||

### For the install instructions and usage examples please see [docs.flagger.app](https://docs.flagger.app)

|

||||

|

||||

@@ -1,55 +0,0 @@

|

||||

title: Flagger - Istio Progressive Delivery Kubernetes Operator

|

||||

|

||||

remote_theme: errordeveloper/simple-project-homepage

|

||||

repository: stefanprodan/flagger

|

||||

by_weaveworks: true

|

||||

|

||||

url: "https://flagger.app"

|

||||

baseurl: "/"

|

||||

|

||||

twitter:

|

||||

username: "stefanprodan"

|

||||

author:

|

||||

twitter: "stefanprodan"

|

||||

|

||||

# Set default og:image

|

||||

defaults:

|

||||

- scope: {path: ""}

|

||||

values: {image: "diagrams/flagger-overview.png"}

|

||||

|

||||

# See: https://material.io/guidelines/style/color.html

|

||||

# Use color-name-value, like pink-200 or deep-purple-100

|

||||

brand_color: "amber-400"

|

||||

|

||||

# How article URLs are structured.

|

||||

# See: https://jekyllrb.com/docs/permalinks/

|

||||

permalink: posts/:title/

|

||||

|

||||

# "UA-NNNNNNNN-N"

|

||||

google_analytics: ""

|

||||

|

||||

# Language. For example, if you write in Japanese, use "ja"

|

||||

lang: "en"

|

||||

|

||||

# How many posts are visible on the home page without clicking "View More"

|

||||

num_posts_visible_initially: 5

|

||||

|

||||

# Date format: See http://strftime.net/

|

||||

date_format: "%b %-d, %Y"

|

||||

|

||||

plugins:

|

||||

- jekyll-feed

|

||||

- jekyll-readme-index

|

||||

- jekyll-seo-tag

|

||||

- jekyll-sitemap

|

||||

- jemoji

|

||||

# # required for local builds with starefossen/github-pages

|

||||

# - jekyll-github-metadata

|

||||

# - jekyll-mentions

|

||||

# - jekyll-redirect-from

|

||||

# - jekyll-remote-theme

|

||||

|

||||

exclude:

|

||||

- CNAME

|

||||

- gitbook

|

||||

|

||||

BIN

docs/diagrams/flagger-load-testing.png

Normal file


Binary file not shown.

|

After Width: | Height: | Size: 159 KiB |

Binary file not shown.


@@ -4,13 +4,20 @@ description: Flagger is an Istio progressive delivery Kubernetes operator

|

||||

|

||||

# Introduction

|

||||

|

||||

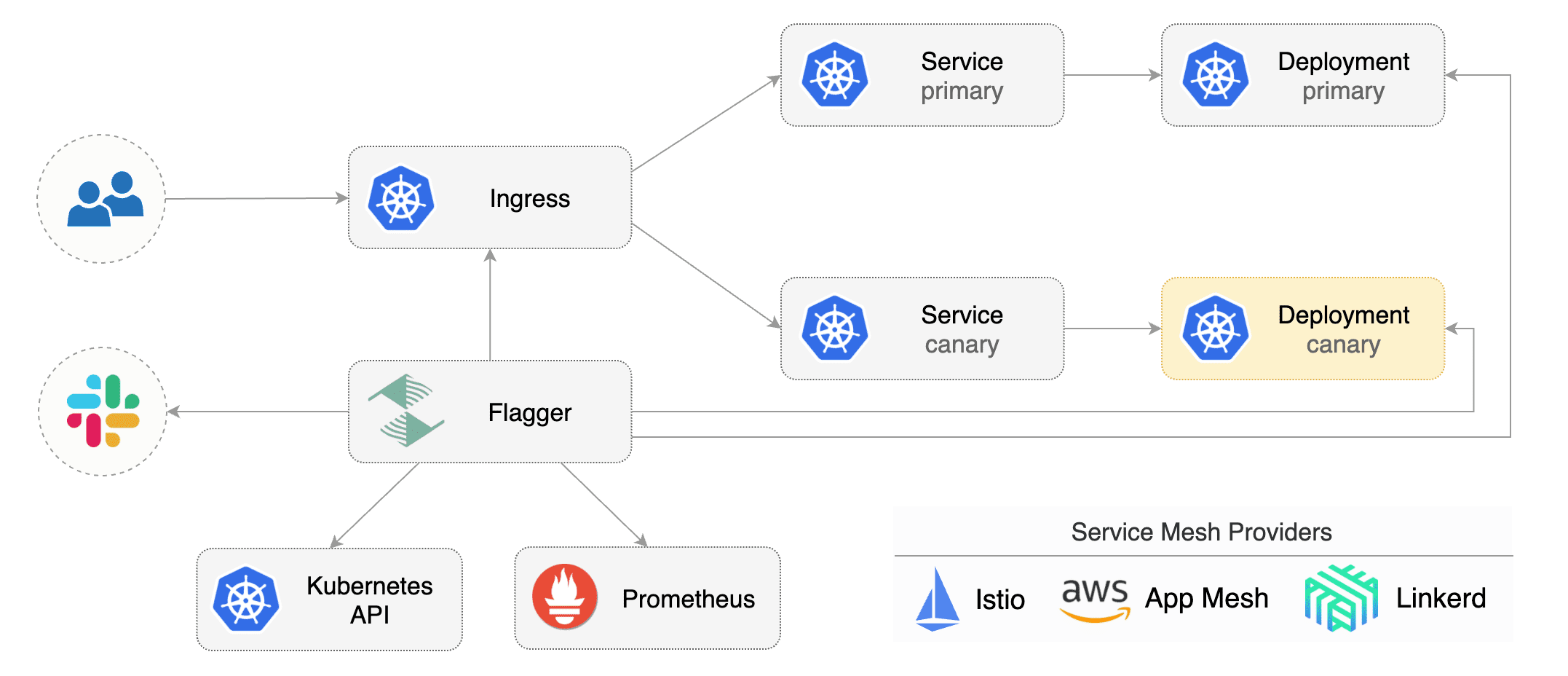

[Flagger](https://github.com/stefanprodan/flagger) is a **Kubernetes** operator that automates the promotion of canary deployments using **Istio** routing for traffic shifting and **Prometheus** metrics for canary analysis.

|

||||

[Flagger](https://github.com/stefanprodan/flagger) is a **Kubernetes** operator that automates the promotion of canary

|

||||

deployments using **Istio** routing for traffic shifting and **Prometheus** metrics for canary analysis.

|

||||

The canary analysis can be extended with webhooks for running integration tests,

|

||||

load tests or any other custom validation.

|

||||

|

||||

Flagger implements a control loop that gradually shifts traffic to the canary while measuring key performance indicators like HTTP requests success rate, requests average duration and pods health. Based on the **KPIs** analysis a canary is promoted or aborted and the analysis result is published to **Slack**.

|

||||

Flagger implements a control loop that gradually shifts traffic to the canary while measuring key performance

|

||||

indicators like HTTP requests success rate, requests average duration and pods health.

|

||||

Based on the **KPIs** analysis a canary is promoted or aborted and the analysis result is published to **Slack**.

|

||||

|

||||

|

||||

|

||||

Flagger can be configured with Kubernetes custom resources \(canaries.flagger.app kind\) and is compatible with any CI/CD solutions made for Kubernetes. Since Flagger is declarative and reacts to Kubernetes events, it can be used in **GitOps** pipelines together with Weave Flux or JenkinsX.

|

||||

Flagger can be configured with Kubernetes custom resources \(canaries.flagger.app kind\) and is compatible with

|

||||

any CI/CD solutions made for Kubernetes. Since Flagger is declarative and reacts to Kubernetes events,

|

||||

it can be used in **GitOps** pipelines together with Weave Flux or JenkinsX.

|

||||

|

||||

This project is sponsored by [Weaveworks](https://www.weave.works/)

|

||||

|

||||

|

||||

@@ -1,6 +1,8 @@

|

||||

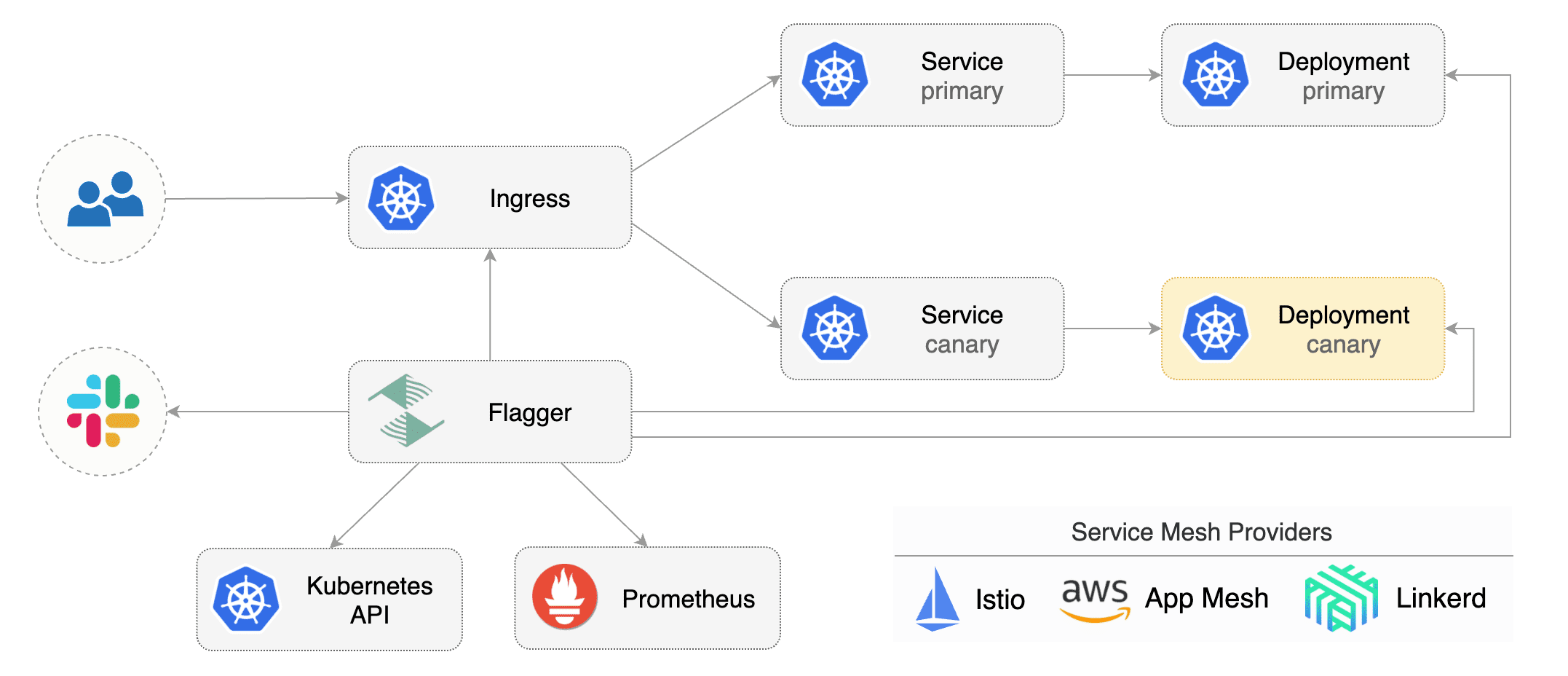

# How it works

|

||||

|

||||

[Flagger](https://github.com/stefanprodan/flagger) takes a Kubernetes deployment and optionally a horizontal pod autoscaler \(HPA\) and creates a series of objects \(Kubernetes deployments, ClusterIP services and Istio virtual services\) to drive the canary analysis and promotion.

|

||||

[Flagger](https://github.com/stefanprodan/flagger) takes a Kubernetes deployment and optionally

|

||||

a horizontal pod autoscaler \(HPA\) and creates a series of objects

|

||||

\(Kubernetes deployments, ClusterIP services and Istio virtual services\) to drive the canary analysis and promotion.

|

||||

|

||||

|

||||

|

||||

@@ -95,37 +97,42 @@ the Istio Virtual Service. The container port from the target deployment should

|

||||

|

||||

|

||||

|

||||

A canary deployment is triggered by changes in any of the following objects:

|

||||

|

||||

* Deployment PodSpec (container image, command, ports, env, resources, etc)

|

||||

* ConfigMaps mounted as volumes or mapped to environment variables

|

||||

* Secrets mounted as volumes or mapped to environment variables

|

||||

|

||||

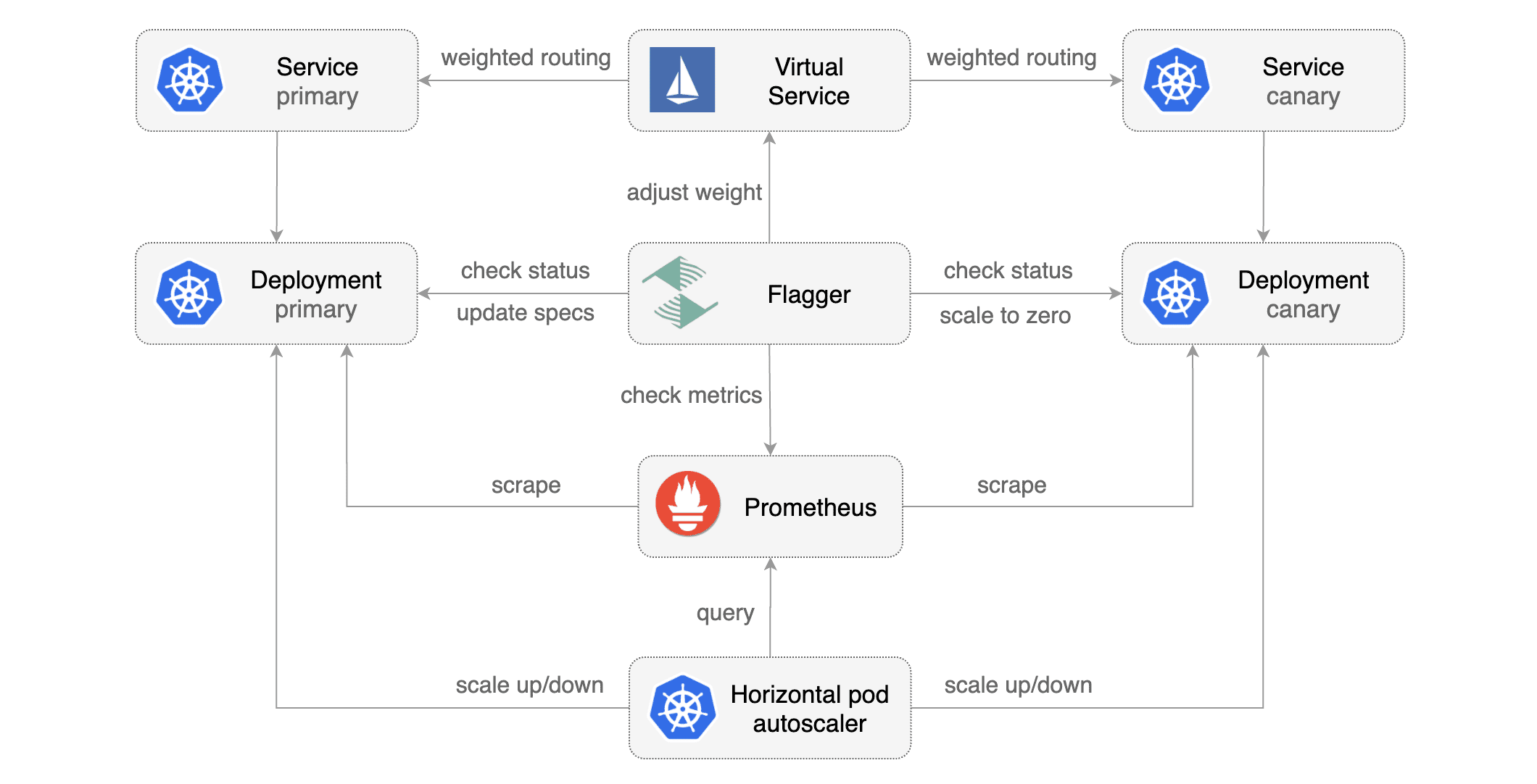

Gated canary promotion stages:

|

||||

|

||||

* scan for canary deployments

|

||||

* creates the primary deployment if needed

|

||||

* check Istio virtual service routes are mapped to primary and canary ClusterIP services

|

||||

* check primary and canary deployments status

|

||||

* halt advancement if a rolling update is underway

|

||||

* halt advancement if pods are unhealthy

|

||||

* increase canary traffic weight percentage from 0% to 5% \(step weight\)

|

||||

* halt advancement if a rolling update is underway

|

||||

* halt advancement if pods are unhealthy

|

||||

* increase canary traffic weight percentage from 0% to 5% (step weight)

|

||||

* call webhooks and check results

|

||||

* check canary HTTP request success rate and latency

|

||||

* halt advancement if any metric is under the specified threshold

|

||||

* increment the failed checks counter

|

||||

* halt advancement if any metric is under the specified threshold

|

||||

* increment the failed checks counter

|

||||

* check if the number of failed checks reached the threshold

|

||||

* route all traffic to primary

|

||||

* scale to zero the canary deployment and mark it as failed

|

||||

* wait for the canary deployment to be updated \(revision bump\) and start over

|

||||

* increase canary traffic weight by 5% \(step weight\) till it reaches 50% \(max weight\)

|

||||

* halt advancement while canary request success rate is under the threshold

|

||||

* halt advancement while canary request duration P99 is over the threshold

|

||||

* halt advancement if the primary or canary deployment becomes unhealthy

|

||||

* halt advancement while canary deployment is being scaled up/down by HPA

|

||||

* route all traffic to primary

|

||||

* scale to zero the canary deployment and mark it as failed

|

||||

* wait for the canary deployment to be updated and start over

|

||||

* increase canary traffic weight by 5% (step weight) till it reaches 50% (max weight)

|

||||

* halt advancement while canary request success rate is under the threshold

|

||||

* halt advancement while canary request duration P99 is over the threshold

|

||||

* halt advancement if the primary or canary deployment becomes unhealthy

|

||||

* halt advancement while canary deployment is being scaled up/down by HPA

|

||||

* promote canary to primary

|

||||

* copy canary deployment spec template over primary

|

||||

* copy ConfigMaps and Secrets from canary to primary

|

||||

* copy canary deployment spec template over primary

|

||||

* wait for primary rolling update to finish

|

||||

* halt advancement if pods are unhealthy

|

||||

* halt advancement if pods are unhealthy

|

||||

* route all traffic to primary

|

||||

* scale to zero the canary deployment

|

||||

* mark the canary deployment as finished

|

||||

* wait for the canary deployment to be updated \(revision bump\) and start over

|

||||

|

||||

You can change the canary analysis _max weight_ and the _step weight_ percentage in the Flagger's custom resource.

|

||||

* mark rollout as finished

|

||||

* wait for the canary deployment to be updated and start over
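The traffic-shifting arithmetic in the stages above can be sketched in Go. This is a minimal illustration, not Flagger's implementation: `weightSchedule` and `shouldRollback` are hypothetical names, and the values 5 and 50 are the step weight and max weight quoted in the list.

```go
package main

import "fmt"

// weightSchedule lists the canary traffic weights visited when the weight
// grows by step until it reaches max (hypothetical helper).
func weightSchedule(step, max int) []int {
	var weights []int
	if step <= 0 {
		return weights
	}
	for w := step; w <= max; w += step {
		weights = append(weights, w)
	}
	return weights
}

// shouldRollback reports whether the failed checks counter has reached the
// threshold, at which point all traffic is routed back to the primary.
func shouldRollback(failedChecks, threshold int) bool {
	return failedChecks >= threshold
}

func main() {
	fmt.Println(weightSchedule(5, 50)) // [5 10 15 20 25 30 35 40 45 50]
	fmt.Println(shouldRollback(10, 10)) // true
}
```

With a step weight of 5 and a max weight of 50, a healthy canary passes through ten weight increases before promotion starts.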

|

||||

|

||||

### Canary Analysis

|

||||

|

||||

@@ -281,4 +288,78 @@ Response status codes:

|

||||

|

||||

On a non-2xx response Flagger will include the response body (if any) in the failed checks log and Kubernetes events.

|

||||

|

||||

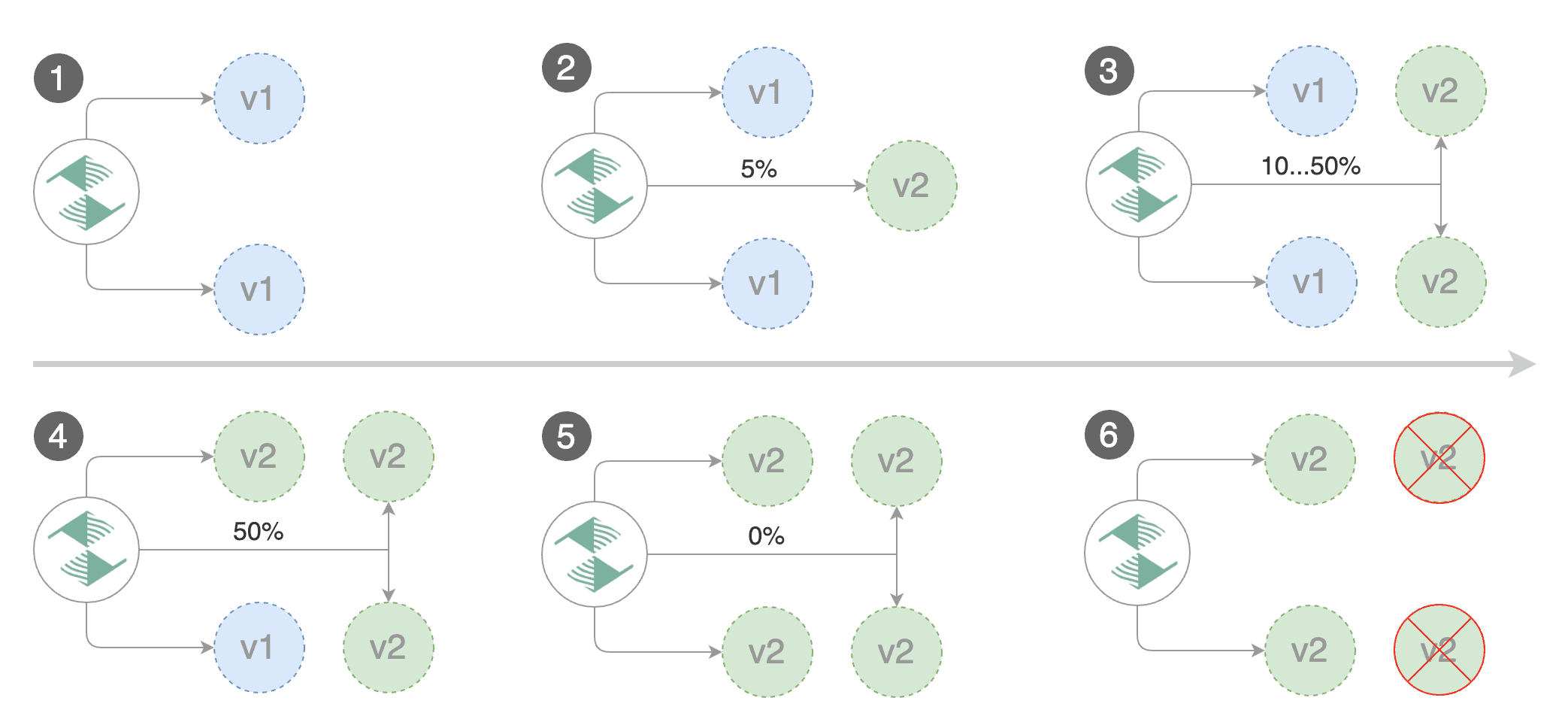

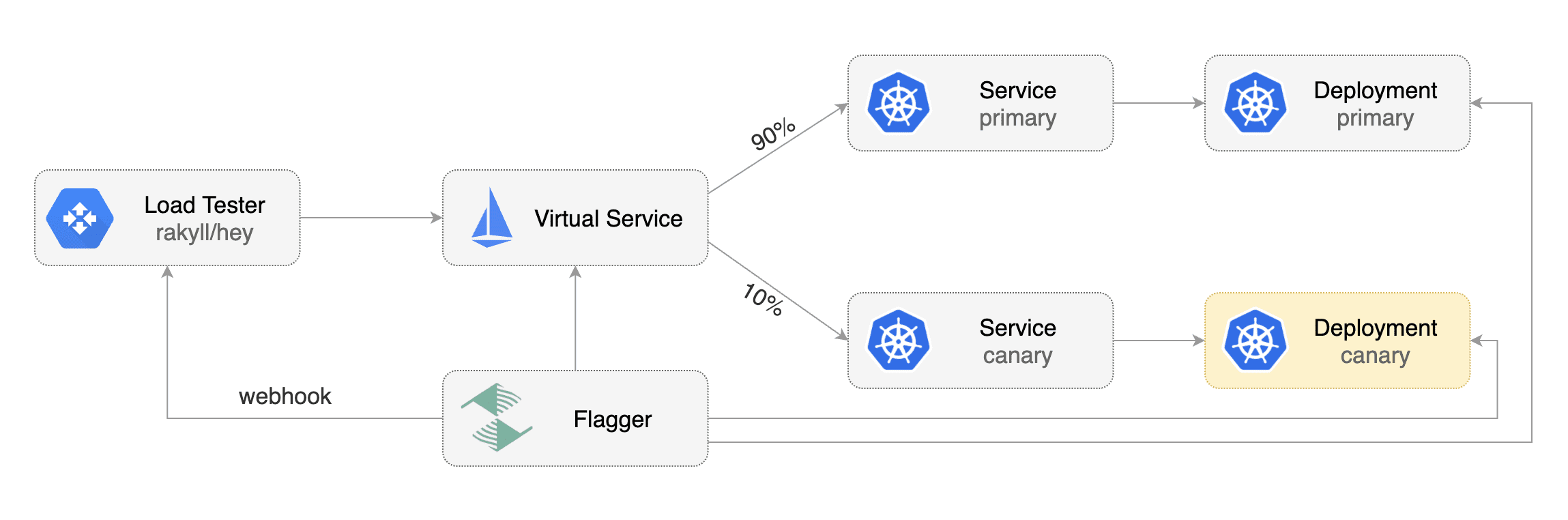

### Load Testing

For workloads that do not receive constant traffic, Flagger can be configured with a webhook
that, when called, starts a load test for the target workload.
If the target workload doesn't receive any traffic during the canary analysis,
Flagger metric checks will fail with "no values found for metric istio_requests_total".

Flagger comes with a load testing service based on [rakyll/hey](https://github.com/rakyll/hey)
that generates traffic during analysis when configured as a webhook.

First you need to deploy the load test runner in a namespace with Istio sidecar injection enabled:

```bash
export REPO=https://raw.githubusercontent.com/stefanprodan/flagger/master

kubectl -n test apply -f ${REPO}/artifacts/loadtester/deployment.yaml
kubectl -n test apply -f ${REPO}/artifacts/loadtester/service.yaml
```

Or by using Helm:

```bash
helm repo add flagger https://flagger.app

helm upgrade -i flagger-loadtester flagger/loadtester \
--namespace=test \
--set cmd.logOutput=true \
--set cmd.timeout=1h
```

When deployed the load tester API will be available at `http://flagger-loadtester.test/`.

Now you can add webhooks to the canary analysis spec:

```yaml
webhooks:
  - name: load-test-get
    url: http://flagger-loadtester.test/
    timeout: 5s
    metadata:
      cmd: "hey -z 1m -q 10 -c 2 http://podinfo.test:9898/"
  - name: load-test-post
    url: http://flagger-loadtester.test/
    timeout: 5s
    metadata:
      cmd: "hey -z 1m -q 10 -c 2 -m POST -d '{test: 2}' http://podinfo.test:9898/echo"
```

When the canary analysis starts, Flagger will call the webhooks and the load tester will run the `hey` commands
in the background, if they are not already running. This will ensure that during the
analysis, the `podinfo.test` virtual service will receive a steady stream of GET and POST requests.

|

||||
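The "run in the background, if not already running" behaviour can be sketched in Go as a small registry keyed by the command string. This is an assumption-laden illustration rather than the load tester's actual code: the `runner` type, `newRunner` and `start` are hypothetical names, and the command is replaced by a stand-in function so the example runs without `hey` installed.

```go
package main

import (
	"fmt"
	"sync"
)

// runner tracks which commands are currently in flight (hypothetical type).
type runner struct {
	mu      sync.Mutex
	running map[string]bool
}

func newRunner() *runner {
	return &runner{running: make(map[string]bool)}
}

// start launches run(cmd) in a goroutine unless the same command is already
// in flight. It returns false when the call was skipped as a duplicate.
func (r *runner) start(cmd string, run func(string)) bool {
	r.mu.Lock()
	if r.running[cmd] {
		r.mu.Unlock()
		return false
	}
	r.running[cmd] = true
	r.mu.Unlock()

	go func() {
		// Free the slot once the command finishes so it can be started again.
		defer func() {
			r.mu.Lock()
			delete(r.running, cmd)
			r.mu.Unlock()
		}()
		run(cmd)
	}()
	return true
}

func main() {
	r := newRunner()
	release := make(chan struct{})
	done := make(chan struct{})
	cmd := "hey -z 1m -q 10 -c 2 http://podinfo.test:9898/"

	// Stand-in for executing the command: block until released.
	work := func(string) { <-release; close(done) }

	fmt.Println(r.start(cmd, work)) // true: first webhook call starts the test
	fmt.Println(r.start(cmd, work)) // false: repeat call while it is running
	close(release)
	<-done
}
```

Keying on the full command string means the GET and POST webhooks above would run side by side, while repeated calls for the same command during one test window are ignored.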

|

||||

If your workload is exposed outside the mesh with the Istio Gateway and TLS, you can point `hey` to the
public URL and use HTTP2.

```yaml
webhooks:
  - name: load-test-get
    url: http://flagger-loadtester.test/
    timeout: 5s
    metadata:
      cmd: "hey -z 1m -q 10 -c 2 -h2 https://podinfo.example.com/"
```

The load tester can run arbitrary commands as long as the binary is present in the container image.
For example, if you want to replace `hey` with another CLI, you can create your own Docker image:

```dockerfile
FROM quay.io/stefanprodan/flagger-loadtester:<VER>

RUN curl -Lo /usr/local/bin/my-cli https://github.com/user/repo/releases/download/ver/my-cli \
    && chmod +x /usr/local/bin/my-cli
```

|

||||

|

||||

@@ -6,7 +6,7 @@ If you are new to Istio you can follow this GKE guide

|

||||

|

||||

**Prerequisites**

|

||||

|

||||

* Kubernetes >= 1.9

|

||||

* Kubernetes >= 1.11

|

||||

* Istio >= 1.0

|

||||

* Prometheus >= 2.6

|

||||

|

||||

|

||||

@@ -17,7 +17,14 @@ kubectl apply -f ${REPO}/artifacts/canaries/deployment.yaml

|

||||

kubectl apply -f ${REPO}/artifacts/canaries/hpa.yaml

|

||||

```

|

||||

|

||||

Create a canary custom resource \(replace example.com with your own domain\):

|

||||

Deploy the load testing service to generate traffic during the canary analysis:

|

||||

|

||||

```bash

|

||||

kubectl -n test apply -f ${REPO}/artifacts/loadtester/deployment.yaml

|

||||

kubectl -n test apply -f ${REPO}/artifacts/loadtester/service.yaml

|

||||

```

|

||||

|

||||

Create a canary custom resource (replace example.com with your own domain):

|

||||

|

||||

```yaml

|

||||

apiVersion: flagger.app/v1alpha3

|

||||

@@ -70,6 +77,13 @@ spec:

|

||||

# milliseconds

|

||||

threshold: 500

|

||||

interval: 30s

|

||||

# generate traffic during analysis

|

||||

webhooks:

|

||||

- name: load-test

|

||||

url: http://flagger-loadtester.test/

|

||||

timeout: 5s

|

||||

metadata:

|

||||

cmd: "hey -z 1m -q 10 -c 2 http://podinfo.test:9898/"

|

||||

```

|

||||

|

||||

Save the above resource as podinfo-canary.yaml and then apply it:

|

||||

@@ -99,7 +113,7 @@ Trigger a canary deployment by updating the container image:

|

||||

|

||||

```bash

|

||||

kubectl -n test set image deployment/podinfo \

|

||||

podinfod=quay.io/stefanprodan/podinfo:1.2.1

|

||||

podinfod=quay.io/stefanprodan/podinfo:1.4.0

|

||||

```

|

||||

|

||||

Flagger detects that the deployment revision changed and starts a new rollout:

|

||||

@@ -108,9 +122,9 @@ Flagger detects that the deployment revision changed and starts a new rollout:

|

||||

kubectl -n test describe canary/podinfo

|

||||

|

||||

Status:

|

||||

Canary Revision: 19871136

|

||||

Failed Checks: 0

|

||||

State: finished

|

||||

Canary Weight: 0

|

||||

Failed Checks: 0

|

||||

Phase: Succeeded

|

||||

Events:

|

||||

Type Reason Age From Message

|

||||

---- ------ ---- ---- -------

|

||||

@@ -132,6 +146,19 @@ Events:

|

||||

Normal Synced 5s flagger Promotion completed! Scaling down podinfo.test

|

||||

```

|

||||

|

||||

**Note** that if you apply new changes to the deployment during the canary analysis, Flagger will restart the analysis.

|

||||

|

||||

You can monitor all canaries with:

|

||||

|

||||

```bash

|

||||

watch kubectl get canaries --all-namespaces

|

||||

|

||||

NAMESPACE NAME STATUS WEIGHT LASTTRANSITIONTIME

|

||||

test podinfo Progressing 15 2019-01-16T14:05:07Z

|

||||

prod frontend Succeeded 0 2019-01-15T16:15:07Z

|

||||

prod backend Failed 0 2019-01-14T17:05:07Z

|

||||

```

|

||||

|

||||

During the canary analysis you can generate HTTP 500 errors and high latency to test if Flagger pauses the rollout.

|

||||

|

||||

Create a tester pod and exec into it:

|

||||

@@ -156,15 +183,16 @@ Generate latency:

|

||||

watch curl http://podinfo-canary:9898/delay/1

|

||||

```

|

||||

|

||||

When the number of failed checks reaches the canary analysis threshold, the traffic is routed back to the primary, the canary is scaled to zero and the rollout is marked as failed.

|

||||

When the number of failed checks reaches the canary analysis threshold, the traffic is routed back to the primary,

|

||||

the canary is scaled to zero and the rollout is marked as failed.

|

||||

|

||||

```text

|

||||

kubectl -n test describe canary/podinfo

|

||||

|

||||

Status:

|

||||

Canary Revision: 16695041

|

||||

Failed Checks: 10

|

||||

State: failed

|

||||

Canary Weight: 0

|

||||

Failed Checks: 10

|

||||

Phase: Failed

|

||||

Events:

|

||||

Type Reason Age From Message

|

||||

---- ------ ---- ---- -------

|

||||

@@ -181,5 +209,3 @@ Events:

|

||||

Warning Synced 1m flagger Canary failed! Scaling down podinfo.test

|

||||

```

|

||||

|

||||

####

|

||||

|

||||

|

||||

Binary file not shown.

121

docs/index.yaml


@@ -1,121 +0,0 @@

|

||||

apiVersion: v1

|

||||

entries:

|

||||

flagger:

|

||||

- apiVersion: v1

|

||||

appVersion: 0.3.0

|

||||

created: 2019-01-11T20:08:47.476526+02:00

|

||||

description: Flagger is a Kubernetes operator that automates the promotion of

|

||||

canary deployments using Istio routing for traffic shifting and Prometheus metrics

|

||||

for canary analysis.

|

||||

digest: 8baa478cc802f4e6b7593934483359b8f70ec34413ca3b8de3a692e347a9bda4

|

||||

engine: gotpl

|

||||

home: https://docs.flagger.app

|

||||

icon: https://raw.githubusercontent.com/stefanprodan/flagger/master/docs/logo/flagger-icon.png

|

||||

keywords:

|

||||

- canary

|

||||

- istio

|

||||

- gitops

|

||||

kubeVersion: '>=1.9.0-0'

|

||||

maintainers:

|

||||

- email: stefanprodan@users.noreply.github.com

|

||||

name: stefanprodan

|

||||

url: https://github.com/stefanprodan

|