Mirror of https://github.com/fluxcd/flagger.git, synced 2026-02-15 10:30:01 +00:00

Compare commits

21 Commits

| SHA1 |
|---|
| c46fe55ad0 |
| 36a54fbf2a |
| 60f6b05397 |
| 6d8a7343b7 |
| aff8b117d4 |
| 1b3c3b22b3 |
| 1d31b5ed90 |
| 1ef310f00d |
| acdd2c46d5 |
| 9872e6bc16 |
| 10c2bdec86 |
| 4bf3b70048 |
| ada446bbaa |
| c4981ef4db |
| d1b84cd31d |
| 9232c8647a |
| 23e8c7d616 |
| 42607fbd64 |
| 28781a5f02 |
| 3589e11244 |
| 5e880d3942 |

31

README.md


@@ -8,8 +8,8 @@

|

||||

|

||||

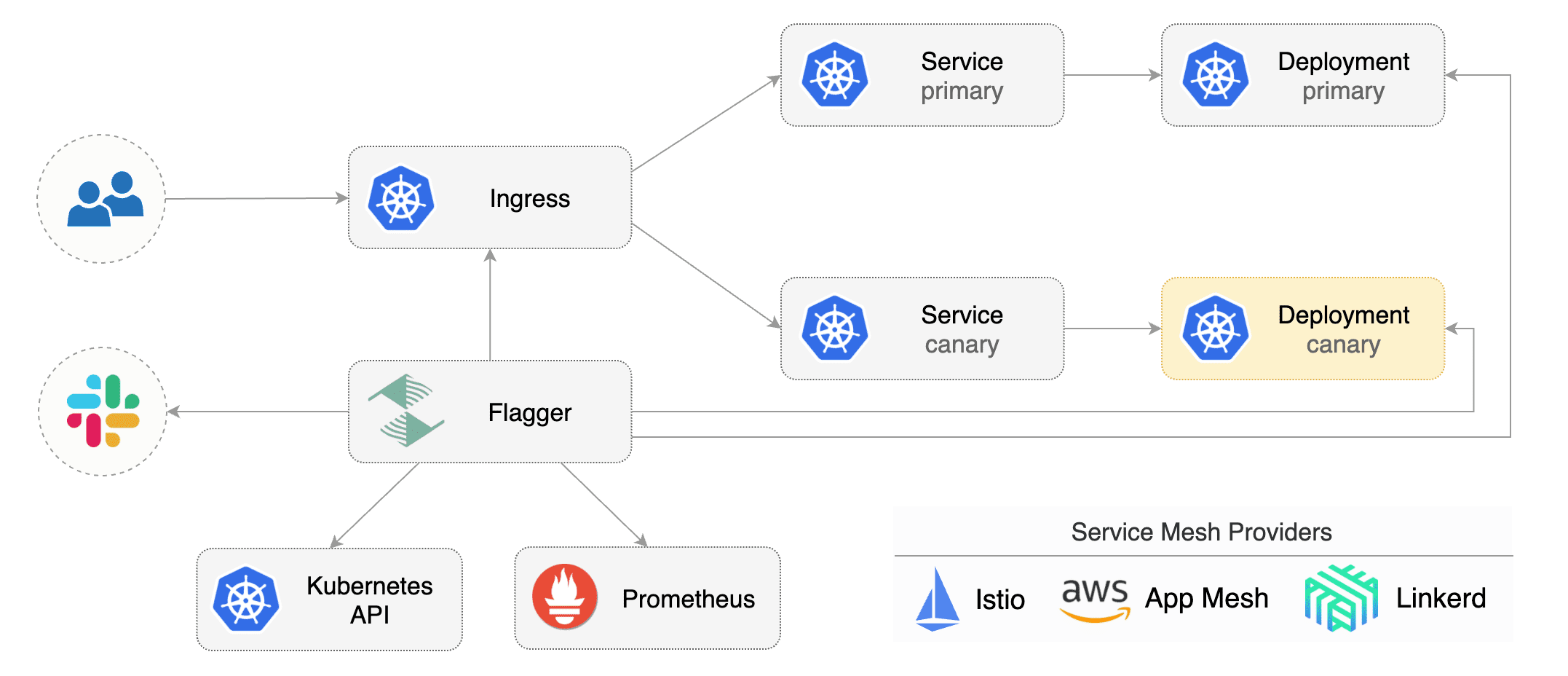

Flagger is a Kubernetes operator that automates the promotion of canary deployments

|

||||

using Istio routing for traffic shifting and Prometheus metrics for canary analysis.

|

||||

The canary analysis can be extended with webhooks for running integration tests, load tests or any other custom

|

||||

validation.

|

||||

The canary analysis can be extended with webhooks for running integration tests,

|

||||

load tests or any other custom validation.

|

||||

|

||||

### Install

|

||||

|

||||

@@ -28,7 +28,7 @@ helm upgrade -i flagger flagger/flagger \
     --set metricsServer=http://prometheus.istio-system:9090
 ```

-Flagger is compatible with Kubernetes >1.10.0 and Istio >1.0.0.
+Flagger is compatible with Kubernetes >1.11.0 and Istio >1.0.0.

 ### Usage

@@ -242,15 +242,16 @@ kubectl -n test set image deployment/podinfo \
 podinfod=quay.io/stefanprodan/podinfo:1.2.1
 ```

-Flagger detects that the deployment revision changed and starts a new rollout:
+Flagger detects that the deployment revision changed and starts a new canary analysis:

 ```
 kubectl -n test describe canary/podinfo

 Status:
-  Canary Revision: 19871136
-  Failed Checks: 0
-  State: finished
+  Canary Weight: 0
+  Failed Checks: 0
+  Last Transition Time: 2019-01-16T13:47:16Z
+  Phase: Succeeded
 Events:
   Type     Reason  Age   From     Message
   ----     ------  ----  ----     -------

@@ -272,6 +273,15 @@ Events:
   Normal   Synced  5s    flagger  Promotion completed! Scaling down podinfo.test
 ```

+You can monitor all canaries with:
+
+```bash
+watch kubectl get canaries --all-namespaces
+
+NAMESPACE   NAME      STATUS        WEIGHT   LASTTRANSITIONTIME
+test        podinfo   Progressing   5        2019-01-16T14:05:07Z
+```
+
 During the canary analysis you can generate HTTP 500 errors and high latency to test if Flagger pauses the rollout.

 Create a tester pod and exec into it:

@@ -300,9 +310,10 @@ the canary is scaled to zero and the rollout is marked as failed.
 kubectl -n test describe canary/podinfo

 Status:
-  Canary Revision: 16695041
-  Failed Checks: 10
-  State: failed
+  Canary Weight: 0
+  Failed Checks: 10
+  Last Transition Time: 2019-01-16T13:47:16Z
+  Phase: Failed
 Events:
   Type     Reason  Age   From     Message
   ----     ------  ----  ----     -------

@@ -19,7 +19,21 @@ spec:
     plural: canaries
     singular: canary
     kind: Canary
+    categories:
+    - all
   scope: Namespaced
+  subresources:
+    status: {}
+  additionalPrinterColumns:
+  - name: Status
+    type: string
+    JSONPath: .status.phase
+  - name: Weight
+    type: string
+    JSONPath: .status.canaryWeight
+  - name: LastTransitionTime
+    type: string
+    JSONPath: .status.lastTransitionTime
   validation:
     openAPIV3Schema:
       properties:

@@ -22,7 +22,7 @@ spec:
       serviceAccountName: flagger
       containers:
         - name: flagger
-          image: quay.io/stefanprodan/flagger:0.3.0
+          image: quay.io/stefanprodan/flagger:0.4.0
           imagePullPolicy: Always
           ports:
             - name: http

@@ -1,8 +1,8 @@
 apiVersion: v1
 name: flagger
-version: 0.3.0
-appVersion: 0.3.0
-kubeVersion: ">=1.9.0-0"
+version: 0.4.0
+appVersion: 0.4.0
+kubeVersion: ">=1.11.0-0"
 engine: gotpl
 description: Flagger is a Kubernetes operator that automates the promotion of canary deployments using Istio routing for traffic shifting and Prometheus metrics for canary analysis.
 home: https://docs.flagger.app

@@ -8,7 +8,7 @@ Based on the KPIs analysis a canary is promoted or aborted and the analysis resu

 ## Prerequisites

-* Kubernetes >= 1.9
+* Kubernetes >= 1.11
 * Istio >= 1.0
 * Prometheus >= 2.6

@@ -20,7 +20,21 @@ spec:
     plural: canaries
     singular: canary
     kind: Canary
+    categories:
+    - all
   scope: Namespaced
+  subresources:
+    status: {}
+  additionalPrinterColumns:
+  - name: Status
+    type: string
+    JSONPath: .status.phase
+  - name: Weight
+    type: string
+    JSONPath: .status.canaryWeight
+  - name: LastTransitionTime
+    type: string
+    JSONPath: .status.lastTransitionTime
   validation:
     openAPIV3Schema:
       properties:

@@ -2,7 +2,7 @@

 image:
   repository: quay.io/stefanprodan/flagger
-  tag: 0.3.0
+  tag: 0.4.0
   pullPolicy: IfNotPresent

 metricsServer: "http://prometheus.istio-system.svc.cluster.local:9090"

BIN

docs/flagger-0.4.0.tgz

Normal file


Binary file not shown.

@@ -4,13 +4,20 @@ description: Flagger is an Istio progressive delivery Kubernetes operator

 # Introduction

-[Flagger](https://github.com/stefanprodan/flagger) is a **Kubernetes** operator that automates the promotion of canary deployments using **Istio** routing for traffic shifting and **Prometheus** metrics for canary analysis.
+[Flagger](https://github.com/stefanprodan/flagger) is a **Kubernetes** operator that automates the promotion of canary
+deployments using **Istio** routing for traffic shifting and **Prometheus** metrics for canary analysis.
+The canary analysis can be extended with webhooks for running integration tests,
+load tests or any other custom validation.

-Flagger implements a control loop that gradually shifts traffic to the canary while measuring key performance indicators like HTTP requests success rate, requests average duration and pods health. Based on the **KPIs** analysis a canary is promoted or aborted and the analysis result is published to **Slack**.
+Flagger implements a control loop that gradually shifts traffic to the canary while measuring key performance
+indicators like HTTP requests success rate, requests average duration and pods health.
+Based on the **KPIs** analysis a canary is promoted or aborted and the analysis result is published to **Slack**.



-Flagger can be configured with Kubernetes custom resources \(canaries.flagger.app kind\) and is compatible with any CI/CD solutions made for Kubernetes. Since Flagger is declarative and reacts to Kubernetes events, it can be used in **GitOps** pipelines together with Weave Flux or JenkinsX.
+Flagger can be configured with Kubernetes custom resources \(canaries.flagger.app kind\) and is compatible with
+any CI/CD solutions made for Kubernetes. Since Flagger is declarative and reacts to Kubernetes events,
+it can be used in **GitOps** pipelines together with Weave Flux or JenkinsX.

 This project is sponsored by [Weaveworks](https://www.weave.works/)

@@ -6,7 +6,7 @@ If you are new to Istio you can follow this GKE guide

 **Prerequisites**

-* Kubernetes >= 1.9
+* Kubernetes >= 1.11
 * Istio >= 1.0
 * Prometheus >= 2.6

@@ -108,9 +108,9 @@ Flagger detects that the deployment revision changed and starts a new rollout:
 kubectl -n test describe canary/podinfo

 Status:
-  Canary Revision: 19871136
-  Failed Checks: 0
-  State: finished
+  Canary Weight: 0
+  Failed Checks: 0
+  Phase: Succeeded
 Events:
   Type     Reason  Age   From     Message
   ----     ------  ----  ----     -------

@@ -132,6 +132,17 @@ Events:
   Normal   Synced  5s    flagger  Promotion completed! Scaling down podinfo.test
 ```

+You can monitor all canaries with:
+
+```bash
+watch kubectl get canaries --all-namespaces
+
+NAMESPACE   NAME       STATUS        WEIGHT   LASTTRANSITIONTIME
+test        podinfo    Progressing   15       2019-01-16T14:05:07Z
+prod        frontend   Succeeded     0        2019-01-15T16:15:07Z
+prod        backend    Failed        0        2019-01-14T17:05:07Z
+```
+
 During the canary analysis you can generate HTTP 500 errors and high latency to test if Flagger pauses the rollout.

 Create a tester pod and exec into it:

@@ -162,9 +173,9 @@ When the number of failed checks reaches the canary analysis threshold, the traf
 kubectl -n test describe canary/podinfo

 Status:
-  Canary Revision: 16695041
-  Failed Checks: 10
-  State: failed
+  Canary Weight: 0
+  Failed Checks: 10
+  Phase: Failed
 Events:
   Type     Reason  Age   From     Message
   ----     ------  ----  ----     -------

@@ -181,5 +192,3 @@ Events:
   Warning  Synced  1m    flagger  Canary failed! Scaling down podinfo.test
 ```

-####
-

Binary file not shown.

@@ -1,9 +1,34 @@
 apiVersion: v1
 entries:
   flagger:
+  - apiVersion: v1
+    appVersion: 0.4.0
+    created: 2019-01-18T12:49:18.099861+02:00
+    description: Flagger is a Kubernetes operator that automates the promotion of
+      canary deployments using Istio routing for traffic shifting and Prometheus metrics
+      for canary analysis.
+    digest: fe06de1c68c6cc414440ef681cde67ae02c771de9b1e4d2d264c38a7a9c37b3d
+    engine: gotpl
+    home: https://docs.flagger.app
+    icon: https://raw.githubusercontent.com/stefanprodan/flagger/master/docs/logo/flagger-icon.png
+    keywords:
+    - canary
+    - istio
+    - gitops
+    kubeVersion: '>=1.11.0-0'
+    maintainers:
+    - email: stefanprodan@users.noreply.github.com
+      name: stefanprodan
+      url: https://github.com/stefanprodan
+    name: flagger
+    sources:
+    - https://github.com/stefanprodan/flagger
+    urls:
+    - https://stefanprodan.github.io/flagger/flagger-0.4.0.tgz
+    version: 0.4.0
   - apiVersion: v1
     appVersion: 0.3.0
-    created: 2019-01-11T20:08:47.476526+02:00
+    created: 2019-01-18T12:49:18.099501+02:00
     description: Flagger is a Kubernetes operator that automates the promotion of
       canary deployments using Istio routing for traffic shifting and Prometheus metrics
       for canary analysis.

@@ -28,7 +53,7 @@ entries:
     version: 0.3.0
   - apiVersion: v1
     appVersion: 0.2.0
-    created: 2019-01-11T20:08:47.476127+02:00
+    created: 2019-01-18T12:49:18.099162+02:00
     description: Flagger is a Kubernetes operator that automates the promotion of
       canary deployments using Istio routing for traffic shifting and Prometheus metrics
       for canary analysis.

@@ -53,7 +78,7 @@ entries:
     version: 0.2.0
   - apiVersion: v1
     appVersion: 0.1.2
-    created: 2019-01-11T20:08:47.475257+02:00
+    created: 2019-01-18T12:49:18.098811+02:00
     description: Flagger is a Kubernetes operator that automates the promotion of
       canary deployments using Istio routing for traffic shifting and Prometheus metrics
       for canary analysis.

@@ -78,7 +103,7 @@ entries:
     version: 0.1.2
   - apiVersion: v1
     appVersion: 0.1.1
-    created: 2019-01-11T20:08:47.474547+02:00
+    created: 2019-01-18T12:49:18.098439+02:00
     description: Flagger is a Kubernetes operator that automates the promotion of
       canary deployments using Istio routing for traffic shifting and Prometheus metrics
       for canary analysis.

@@ -90,7 +115,7 @@ entries:
     version: 0.1.1
   - apiVersion: v1
     appVersion: 0.1.0
-    created: 2019-01-11T20:08:47.473757+02:00
+    created: 2019-01-18T12:49:18.098153+02:00
     description: Flagger is a Kubernetes operator that automates the promotion of
       canary deployments using Istio routing for traffic shifting and Prometheus metrics
       for canary analysis.

@@ -103,9 +128,9 @@ entries:
   grafana:
   - apiVersion: v1
     appVersion: 5.4.2
-    created: 2019-01-11T20:08:47.477041+02:00
+    created: 2019-01-18T12:49:18.100331+02:00
     description: Grafana dashboards for monitoring Flagger canary deployments
-    digest: 1c929348357ea747405308125d9c7969cf743de5ab9e8adff6fa83943593b2f0
+    digest: 97257d1742aca506f8703922d67863c459c1b43177870bc6050d453d19a683c0
     home: https://flagger.app
     icon: https://raw.githubusercontent.com/stefanprodan/flagger/master/docs/logo/flagger-icon.png
     maintainers:

@@ -118,4 +143,4 @@ entries:
     urls:
     - https://stefanprodan.github.io/flagger/grafana-0.1.0.tgz
     version: 0.1.0
-generated: 2019-01-11T20:08:47.472865+02:00
+generated: 2019-01-18T12:49:18.097682+02:00

@@ -56,7 +56,7 @@ type CanarySpec struct {
 	CanaryAnalysis CanaryAnalysis `json:"canaryAnalysis"`

 	// the maximum time in seconds for a canary deployment to make progress
-	// before it is considered to be failed. Defaults to 60s.
+	// before it is considered to be failed. Defaults to ten minutes.
 	ProgressDeadlineSeconds *int32 `json:"progressDeadlineSeconds,omitempty"`
 }

@@ -70,21 +70,30 @@ type CanaryList struct {
 	Items []Canary `json:"items"`
 }

-// CanaryState used for status state op
-type CanaryState string
+// CanaryPhase is a label for the condition of a canary at the current time
+type CanaryPhase string

 const (
-	CanaryRunning CanaryState = "running"
-	CanaryFinished CanaryState = "finished"
-	CanaryFailed CanaryState = "failed"
-	CanaryInitialized CanaryState = "initialized"
+	// CanaryInitialized means the primary deployment, hpa and ClusterIP services
+	// have been created along with the Istio virtual service
+	CanaryInitialized CanaryPhase = "Initialized"
+	// CanaryProgressing means the canary analysis is underway
+	CanaryProgressing CanaryPhase = "Progressing"
+	// CanarySucceeded means the canary analysis has been successful
+	// and the canary deployment has been promoted
+	CanarySucceeded CanaryPhase = "Succeeded"
+	// CanaryFailed means the canary analysis failed
+	// and the canary deployment has been scaled to zero
+	CanaryFailed CanaryPhase = "Failed"
 )

 // CanaryStatus is used for state persistence (read-only)
 type CanaryStatus struct {
-	State CanaryState `json:"state"`
-	CanaryRevision string `json:"canaryRevision"`
-	FailedChecks int `json:"failedChecks"`
+	Phase CanaryPhase `json:"phase"`
+	FailedChecks int `json:"failedChecks"`
+	CanaryWeight int `json:"canaryWeight"`
+	// +optional
+	LastAppliedSpec string `json:"lastAppliedSpec,omitempty"`
 	// +optional
 	LastTransitionTime metav1.Time `json:"lastTransitionTime,omitempty"`
 }
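The phase constants above replace the earlier free-form state strings. A minimal sketch of how a caller might reason about them follows. The helpers `isTerminal` and `weightFor` are hypothetical and not part of Flagger; only the four phase values, and the rule that the weight is reset for every phase other than `Progressing`, are taken from this diff.

```go
package main

import "fmt"

// CanaryPhase mirrors the type introduced in the hunk above.
type CanaryPhase string

const (
	CanaryInitialized CanaryPhase = "Initialized"
	CanaryProgressing CanaryPhase = "Progressing"
	CanarySucceeded   CanaryPhase = "Succeeded"
	CanaryFailed      CanaryPhase = "Failed"
)

// isTerminal reports whether an analysis run has ended.
// Hypothetical helper, used here only for illustration.
func isTerminal(p CanaryPhase) bool {
	return p == CanarySucceeded || p == CanaryFailed
}

// weightFor keeps the current traffic weight only while the canary is
// progressing; any other phase reports a weight of zero.
func weightFor(p CanaryPhase, current int) int {
	if p != CanaryProgressing {
		return 0
	}
	return current
}

func main() {
	for _, p := range []CanaryPhase{CanaryInitialized, CanaryProgressing, CanarySucceeded, CanaryFailed} {
		fmt.Printf("%s terminal=%v weight=%d\n", p, isTerminal(p), weightFor(p, 15))
	}
}
```

This matches the `kubectl describe` samples elsewhere in the diff, where both `Succeeded` and `Failed` canaries report `Canary Weight: 0`.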

@@ -139,6 +148,7 @@ func (c *Canary) GetProgressDeadlineSeconds() int {
 	return ProgressDeadlineSeconds
 }

+// GetAnalysisInterval returns the canary analysis interval (default 60s)
 func (c *Canary) GetAnalysisInterval() time.Duration {
 	if c.Spec.CanaryAnalysis.Interval == "" {
 		return AnalysisInterval

@@ -248,17 +248,17 @@ func checkCustomResourceType(obj interface{}, logger *zap.SugaredLogger) (flagge
 }

 func (c *Controller) recordEventInfof(r *flaggerv1.Canary, template string, args ...interface{}) {
-	c.logger.Infof(template, args...)
+	c.logger.With("canary", fmt.Sprintf("%s.%s", r.Name, r.Namespace)).Infof(template, args...)
 	c.eventRecorder.Event(r, corev1.EventTypeNormal, "Synced", fmt.Sprintf(template, args...))
 }

 func (c *Controller) recordEventErrorf(r *flaggerv1.Canary, template string, args ...interface{}) {
-	c.logger.Errorf(template, args...)
+	c.logger.With("canary", fmt.Sprintf("%s.%s", r.Name, r.Namespace)).Errorf(template, args...)
 	c.eventRecorder.Event(r, corev1.EventTypeWarning, "Synced", fmt.Sprintf(template, args...))
 }

 func (c *Controller) recordEventWarningf(r *flaggerv1.Canary, template string, args ...interface{}) {
-	c.logger.Infof(template, args...)
+	c.logger.With("canary", fmt.Sprintf("%s.%s", r.Name, r.Namespace)).Infof(template, args...)
 	c.eventRecorder.Event(r, corev1.EventTypeWarning, "Synced", fmt.Sprintf(template, args...))
 }

@@ -32,15 +32,17 @@ type CanaryDeployer struct {

 // Promote copies the pod spec from canary to primary
 func (c *CanaryDeployer) Promote(cd *flaggerv1.Canary) error {
-	canary, err := c.kubeClient.AppsV1().Deployments(cd.Namespace).Get(cd.Spec.TargetRef.Name, metav1.GetOptions{})
+	targetName := cd.Spec.TargetRef.Name
+	primaryName := fmt.Sprintf("%s-primary", targetName)
+
+	canary, err := c.kubeClient.AppsV1().Deployments(cd.Namespace).Get(targetName, metav1.GetOptions{})
 	if err != nil {
 		if errors.IsNotFound(err) {
-			return fmt.Errorf("deployment %s.%s not found", cd.Spec.TargetRef.Name, cd.Namespace)
+			return fmt.Errorf("deployment %s.%s not found", targetName, cd.Namespace)
 		}
-		return fmt.Errorf("deployment %s.%s query error %v", cd.Spec.TargetRef.Name, cd.Namespace, err)
+		return fmt.Errorf("deployment %s.%s query error %v", targetName, cd.Namespace, err)
 	}

-	primaryName := fmt.Sprintf("%s-primary", cd.Spec.TargetRef.Name)
 	primary, err := c.kubeClient.AppsV1().Deployments(cd.Namespace).Get(primaryName, metav1.GetOptions{})
 	if err != nil {
 		if errors.IsNotFound(err) {

@@ -49,15 +51,17 @@ func (c *CanaryDeployer) Promote(cd *flaggerv1.Canary) error {
 		return fmt.Errorf("deployment %s.%s query error %v", primaryName, cd.Namespace, err)
 	}

-	primary.Spec.ProgressDeadlineSeconds = canary.Spec.ProgressDeadlineSeconds
-	primary.Spec.MinReadySeconds = canary.Spec.MinReadySeconds
-	primary.Spec.RevisionHistoryLimit = canary.Spec.RevisionHistoryLimit
-	primary.Spec.Strategy = canary.Spec.Strategy
-	primary.Spec.Template.Spec = canary.Spec.Template.Spec
-	_, err = c.kubeClient.AppsV1().Deployments(primary.Namespace).Update(primary)
-	if err != nil {
-		return fmt.Errorf("updating template spec %s.%s failed: %v", primary.GetName(), primary.Namespace, err)
+	primaryCopy := primary.DeepCopy()
+	primaryCopy.Spec.ProgressDeadlineSeconds = canary.Spec.ProgressDeadlineSeconds
+	primaryCopy.Spec.MinReadySeconds = canary.Spec.MinReadySeconds
+	primaryCopy.Spec.RevisionHistoryLimit = canary.Spec.RevisionHistoryLimit
+	primaryCopy.Spec.Strategy = canary.Spec.Strategy
+	primaryCopy.Spec.Template.Spec = canary.Spec.Template.Spec
+
+	_, err = c.kubeClient.AppsV1().Deployments(cd.Namespace).Update(primaryCopy)
+	if err != nil {
+		return fmt.Errorf("updating deployment %s.%s template spec failed: %v",
+			primaryCopy.GetName(), primaryCopy.Namespace, err)
 	}

 	return nil
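The rewritten `Promote` mutates a deep copy rather than the object returned by the client. The diff does not state the motivation; a common reason is to avoid changing an object that other code may still hold a reference to, for example through a shared cache. The toy sketch below illustrates the effect with a hypothetical `deployment` type and a hand-written `DeepCopy`; the real Kubernetes types generate this method.

```go
package main

import "fmt"

// deployment is a stand-in for appsv1.Deployment (illustrative only).
type deployment struct {
	Name     string
	Labels   map[string]string
	Replicas *int32
}

// DeepCopy duplicates the map and the pointed-to value so that the copy
// shares no mutable state with the original.
func (d *deployment) DeepCopy() *deployment {
	out := &deployment{Name: d.Name}
	if d.Labels != nil {
		out.Labels = make(map[string]string, len(d.Labels))
		for k, v := range d.Labels {
			out.Labels[k] = v
		}
	}
	if d.Replicas != nil {
		r := *d.Replicas
		out.Replicas = &r
	}
	return out
}

func main() {
	two := int32(2)
	primary := &deployment{Name: "podinfo-primary", Labels: map[string]string{"app": "podinfo"}, Replicas: &two}

	primaryCopy := primary.DeepCopy()
	*primaryCopy.Replicas = 0
	primaryCopy.Labels["app"] = "changed"

	// The original object is untouched by edits to the copy.
	fmt.Println(*primary.Replicas, primary.Labels["app"])
}
```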

@@ -78,15 +82,11 @@ func (c *CanaryDeployer) IsPrimaryReady(cd *flaggerv1.Canary) (bool, error) {

 	retriable, err := c.isDeploymentReady(primary, cd.GetProgressDeadlineSeconds())
 	if err != nil {
-		if retriable {
-			return retriable, fmt.Errorf("Halt %s.%s advancement %s", cd.Name, cd.Namespace, err.Error())
-		} else {
-			return retriable, err
-		}
+		return retriable, fmt.Errorf("Halt advancement %s.%s %s", primaryName, cd.Namespace, err.Error())
 	}

 	if primary.Spec.Replicas == int32p(0) {
-		return true, fmt.Errorf("halt %s.%s advancement primary deployment is scaled to zero",
+		return true, fmt.Errorf("Halt %s.%s advancement primary deployment is scaled to zero",
 			cd.Name, cd.Namespace)
 	}
 	return true, nil
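One detail in the hunk above is worth a second look: `primary.Spec.Replicas == int32p(0)` compares two pointers. The definition of `int32p` is not part of this diff; assuming it returns the address of a fresh copy of its argument, the comparison tests addresses rather than values and would not detect a deployment scaled to zero. The sketch below shows the difference, with `replicasIsZero` as a hypothetical value-based alternative.

```go
package main

import "fmt"

// int32p returns a pointer to a copy of i (assumed shape of the helper).
func int32p(i int32) *int32 { return &i }

// replicasIsZero dereferences before comparing. A nil pointer is treated
// as "not zero", since an unset replica count is not a scale-to-zero.
func replicasIsZero(r *int32) bool {
	return r != nil && *r == 0
}

func main() {
	replicas := int32p(0)

	// Pointer identity: two separate allocations never compare equal.
	fmt.Println(replicas == int32p(0))

	// Value comparison: reports the actual replica count.
	fmt.Println(replicasIsZero(replicas))
}
```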

@@ -96,18 +96,19 @@ func (c *CanaryDeployer) IsPrimaryReady(cd *flaggerv1.Canary) (bool, error) {
 // the deployment is in the middle of a rolling update or if the pods are unhealthy
 // it will return a non retriable error if the rolling update is stuck
 func (c *CanaryDeployer) IsCanaryReady(cd *flaggerv1.Canary) (bool, error) {
-	canary, err := c.kubeClient.AppsV1().Deployments(cd.Namespace).Get(cd.Spec.TargetRef.Name, metav1.GetOptions{})
+	targetName := cd.Spec.TargetRef.Name
+	canary, err := c.kubeClient.AppsV1().Deployments(cd.Namespace).Get(targetName, metav1.GetOptions{})
 	if err != nil {
 		if errors.IsNotFound(err) {
-			return true, fmt.Errorf("deployment %s.%s not found", cd.Spec.TargetRef.Name, cd.Namespace)
+			return true, fmt.Errorf("deployment %s.%s not found", targetName, cd.Namespace)
 		}
-		return true, fmt.Errorf("deployment %s.%s query error %v", cd.Spec.TargetRef.Name, cd.Namespace, err)
+		return true, fmt.Errorf("deployment %s.%s query error %v", targetName, cd.Namespace, err)
 	}

 	retriable, err := c.isDeploymentReady(canary, cd.GetProgressDeadlineSeconds())
 	if err != nil {
 		if retriable {
-			return retriable, fmt.Errorf("Halt %s.%s advancement %s", cd.Name, cd.Namespace, err.Error())
+			return retriable, fmt.Errorf("Halt advancement %s.%s %s", targetName, cd.Namespace, err.Error())
 		} else {
 			return retriable, fmt.Errorf("deployment does not have minimum availability for more than %vs",
 				cd.GetProgressDeadlineSeconds())

@@ -119,22 +120,23 @@ func (c *CanaryDeployer) IsCanaryReady(cd *flaggerv1.Canary) (bool, error) {

 // IsNewSpec returns true if the canary deployment pod spec has changed
 func (c *CanaryDeployer) IsNewSpec(cd *flaggerv1.Canary) (bool, error) {
-	canary, err := c.kubeClient.AppsV1().Deployments(cd.Namespace).Get(cd.Spec.TargetRef.Name, metav1.GetOptions{})
+	targetName := cd.Spec.TargetRef.Name
+	canary, err := c.kubeClient.AppsV1().Deployments(cd.Namespace).Get(targetName, metav1.GetOptions{})
 	if err != nil {
 		if errors.IsNotFound(err) {
-			return false, fmt.Errorf("deployment %s.%s not found", cd.Spec.TargetRef.Name, cd.Namespace)
+			return false, fmt.Errorf("deployment %s.%s not found", targetName, cd.Namespace)
 		}
-		return false, fmt.Errorf("deployment %s.%s query error %v", cd.Spec.TargetRef.Name, cd.Namespace, err)
+		return false, fmt.Errorf("deployment %s.%s query error %v", targetName, cd.Namespace, err)
 	}

-	if cd.Status.CanaryRevision == "" {
+	if cd.Status.LastAppliedSpec == "" {
 		return true, nil
 	}

 	newSpec := &canary.Spec.Template.Spec
-	oldSpecJson, err := base64.StdEncoding.DecodeString(cd.Status.CanaryRevision)
+	oldSpecJson, err := base64.StdEncoding.DecodeString(cd.Status.LastAppliedSpec)
 	if err != nil {
-		return false, err
+		return false, fmt.Errorf("%s.%s decode error %v", cd.Name, cd.Namespace, err)
 	}
 	oldSpec := &corev1.PodSpec{}
 	err = json.Unmarshal(oldSpecJson, oldSpec)
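`IsNewSpec` decodes the base64 string stored in the canary status, unmarshals it into a pod spec, and compares it with the live one. The sketch below reproduces that round trip using only the standard library. `podSpec` is a stand-in for `corev1.PodSpec`, `encodeSpec` and `isNewSpec` are illustrative names, and the use of `reflect.DeepEqual` is an assumption because the comparison itself falls outside the hunk.

```go
package main

import (
	"encoding/base64"
	"encoding/json"
	"fmt"
	"reflect"
)

// podSpec is a stand-in for corev1.PodSpec (illustrative only).
type podSpec struct {
	Image string `json:"image"`
}

// encodeSpec serialises a spec the way SyncStatus stores it:
// JSON first, then standard base64.
func encodeSpec(s podSpec) (string, error) {
	b, err := json.Marshal(s)
	if err != nil {
		return "", err
	}
	return base64.StdEncoding.EncodeToString(b), nil
}

// isNewSpec reports whether current differs from the last applied spec.
// An empty lastApplied means nothing was recorded yet, so it counts as new.
func isNewSpec(lastApplied string, current podSpec) (bool, error) {
	if lastApplied == "" {
		return true, nil
	}
	raw, err := base64.StdEncoding.DecodeString(lastApplied)
	if err != nil {
		return false, fmt.Errorf("decode error %v", err)
	}
	var old podSpec
	if err := json.Unmarshal(raw, &old); err != nil {
		return false, fmt.Errorf("unmarshal error %v", err)
	}
	return !reflect.DeepEqual(old, current), nil
}

func main() {
	applied, _ := encodeSpec(podSpec{Image: "podinfo:1.2.0"})
	changed, _ := isNewSpec(applied, podSpec{Image: "podinfo:1.2.1"})
	same, _ := isNewSpec(applied, podSpec{Image: "podinfo:1.2.0"})
	fmt.Println(changed, same)
}
```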

@@ -150,31 +152,60 @@ func (c *CanaryDeployer) IsNewSpec(cd *flaggerv1.Canary) (bool, error) {
 	return false, nil
 }

-// SetFailedChecks updates the canary failed checks counter
-func (c *CanaryDeployer) SetFailedChecks(cd *flaggerv1.Canary, val int) error {
-	cd.Status.FailedChecks = val
-	cd.Status.LastTransitionTime = metav1.Now()
-	cd, err := c.flaggerClient.FlaggerV1alpha3().Canaries(cd.Namespace).Update(cd)
+// ShouldAdvance determines if the canary analysis can proceed
+func (c *CanaryDeployer) ShouldAdvance(cd *flaggerv1.Canary) (bool, error) {
+	if cd.Status.LastAppliedSpec == "" || cd.Status.Phase == flaggerv1.CanaryProgressing {
+		return true, nil
+	}
+	return c.IsNewSpec(cd)
+}
+
+// SetStatusFailedChecks updates the canary failed checks counter
+func (c *CanaryDeployer) SetStatusFailedChecks(cd *flaggerv1.Canary, val int) error {
+	cdCopy := cd.DeepCopy()
+	cdCopy.Status.FailedChecks = val
+	cdCopy.Status.LastTransitionTime = metav1.Now()
+
+	cd, err := c.flaggerClient.FlaggerV1alpha3().Canaries(cd.Namespace).UpdateStatus(cdCopy)
 	if err != nil {
-		return fmt.Errorf("deployment %s.%s update error %v", cd.Spec.TargetRef.Name, cd.Namespace, err)
+		return fmt.Errorf("canary %s.%s status update error %v", cdCopy.Name, cdCopy.Namespace, err)
 	}
 	return nil
 }

-// SetState updates the canary status state
-func (c *CanaryDeployer) SetState(cd *flaggerv1.Canary, state flaggerv1.CanaryState) error {
-	cd.Status.State = state
-	cd.Status.LastTransitionTime = metav1.Now()
-	cd, err := c.flaggerClient.FlaggerV1alpha3().Canaries(cd.Namespace).Update(cd)
+// SetStatusWeight updates the canary status weight value
+func (c *CanaryDeployer) SetStatusWeight(cd *flaggerv1.Canary, val int) error {
+	cdCopy := cd.DeepCopy()
+	cdCopy.Status.CanaryWeight = val
+	cdCopy.Status.LastTransitionTime = metav1.Now()
+
+	cd, err := c.flaggerClient.FlaggerV1alpha3().Canaries(cd.Namespace).UpdateStatus(cdCopy)
 	if err != nil {
-		return fmt.Errorf("deployment %s.%s update error %v", cd.Spec.TargetRef.Name, cd.Namespace, err)
+		return fmt.Errorf("canary %s.%s status update error %v", cdCopy.Name, cdCopy.Namespace, err)
 	}
 	return nil
 }

+// SetStatusPhase updates the canary status phase
+func (c *CanaryDeployer) SetStatusPhase(cd *flaggerv1.Canary, phase flaggerv1.CanaryPhase) error {
+	cdCopy := cd.DeepCopy()
+	cdCopy.Status.Phase = phase
+	cdCopy.Status.LastTransitionTime = metav1.Now()
+
+	if phase != flaggerv1.CanaryProgressing {
+		cdCopy.Status.CanaryWeight = 0
+	}
+
+	cd, err := c.flaggerClient.FlaggerV1alpha3().Canaries(cd.Namespace).UpdateStatus(cdCopy)
+	if err != nil {
+		return fmt.Errorf("canary %s.%s status update error %v", cdCopy.Name, cdCopy.Namespace, err)
+	}
+	return nil
+}
+
 // SyncStatus encodes the canary pod spec and updates the canary status
 func (c *CanaryDeployer) SyncStatus(cd *flaggerv1.Canary, status flaggerv1.CanaryStatus) error {
-	canary, err := c.kubeClient.AppsV1().Deployments(cd.Namespace).Get(cd.Spec.TargetRef.Name, metav1.GetOptions{})
+	dep, err := c.kubeClient.AppsV1().Deployments(cd.Namespace).Get(cd.Spec.TargetRef.Name, metav1.GetOptions{})
 	if err != nil {
 		if errors.IsNotFound(err) {
 			return fmt.Errorf("deployment %s.%s not found", cd.Spec.TargetRef.Name, cd.Namespace)

@@ -182,36 +213,42 @@ func (c *CanaryDeployer) SyncStatus(cd *flaggerv1.Canary, status flaggerv1.Canar
 		return fmt.Errorf("deployment %s.%s query error %v", cd.Spec.TargetRef.Name, cd.Namespace, err)
 	}

-	specJson, err := json.Marshal(canary.Spec.Template.Spec)
+	specJson, err := json.Marshal(dep.Spec.Template.Spec)
 	if err != nil {
 		return fmt.Errorf("deployment %s.%s marshal error %v", cd.Spec.TargetRef.Name, cd.Namespace, err)
 	}

-	specEnc := base64.StdEncoding.EncodeToString(specJson)
-	cd.Status.State = status.State
-	cd.Status.FailedChecks = status.FailedChecks
-	cd.Status.CanaryRevision = specEnc
-	cd.Status.LastTransitionTime = metav1.Now()
-	_, err = c.flaggerClient.FlaggerV1alpha3().Canaries(cd.Namespace).Update(cd)
+	cdCopy := cd.DeepCopy()
+	cdCopy.Status.Phase = status.Phase
+	cdCopy.Status.CanaryWeight = status.CanaryWeight
+	cdCopy.Status.FailedChecks = status.FailedChecks
+	cdCopy.Status.LastAppliedSpec = base64.StdEncoding.EncodeToString(specJson)
+	cdCopy.Status.LastTransitionTime = metav1.Now()
+
+	cd, err = c.flaggerClient.FlaggerV1alpha3().Canaries(cd.Namespace).UpdateStatus(cdCopy)
 	if err != nil {
-		return fmt.Errorf("deployment %s.%s update error %v", cd.Spec.TargetRef.Name, cd.Namespace, err)
+		return fmt.Errorf("canary %s.%s status update error %v", cdCopy.Name, cdCopy.Namespace, err)
 	}
 	return nil
 }

 // Scale sets the canary deployment replicas
 func (c *CanaryDeployer) Scale(cd *flaggerv1.Canary, replicas int32) error {
-	canary, err := c.kubeClient.AppsV1().Deployments(cd.Namespace).Get(cd.Spec.TargetRef.Name, metav1.GetOptions{})
+	targetName := cd.Spec.TargetRef.Name
+	dep, err := c.kubeClient.AppsV1().Deployments(cd.Namespace).Get(targetName, metav1.GetOptions{})
 	if err != nil {
 		if errors.IsNotFound(err) {
-			return fmt.Errorf("deployment %s.%s not found", cd.Spec.TargetRef.Name, cd.Namespace)
+			return fmt.Errorf("deployment %s.%s not found", targetName, cd.Namespace)
 		}
-		return fmt.Errorf("deployment %s.%s query error %v", cd.Spec.TargetRef.Name, cd.Namespace, err)
+		return fmt.Errorf("deployment %s.%s query error %v", targetName, cd.Namespace, err)
 	}
-	canary.Spec.Replicas = int32p(replicas)
-	canary, err = c.kubeClient.AppsV1().Deployments(canary.Namespace).Update(canary)
+
+	depCopy := dep.DeepCopy()
+	depCopy.Spec.Replicas = int32p(replicas)
+
+	_, err = c.kubeClient.AppsV1().Deployments(dep.Namespace).Update(depCopy)
 	if err != nil {
-		return fmt.Errorf("scaling %s.%s to %v failed: %v", canary.GetName(), canary.Namespace, replicas, err)
+		return fmt.Errorf("scaling %s.%s to %v failed: %v", depCopy.GetName(), depCopy.Namespace, replicas, err)
 	}
 	return nil
 }

@@ -224,8 +261,8 @@ func (c *CanaryDeployer) Sync(cd *flaggerv1.Canary) error {
return fmt.Errorf("creating deployment %s.%s failed: %v", primaryName, cd.Namespace, err)
}

if cd.Status.State == "" {
c.logger.Infof("Scaling down %s.%s", cd.Spec.TargetRef.Name, cd.Namespace)
if cd.Status.Phase == "" {
c.logger.With("canary", fmt.Sprintf("%s.%s", cd.Name, cd.Namespace)).Infof("Scaling down %s.%s", cd.Spec.TargetRef.Name, cd.Namespace)
if err := c.Scale(cd, 0); err != nil {
return err
}

@@ -240,13 +277,13 @@ func (c *CanaryDeployer) Sync(cd *flaggerv1.Canary) error {
}

func (c *CanaryDeployer) createPrimaryDeployment(cd *flaggerv1.Canary) error {
canaryName := cd.Spec.TargetRef.Name
targetName := cd.Spec.TargetRef.Name
primaryName := fmt.Sprintf("%s-primary", cd.Spec.TargetRef.Name)

canaryDep, err := c.kubeClient.AppsV1().Deployments(cd.Namespace).Get(canaryName, metav1.GetOptions{})
canaryDep, err := c.kubeClient.AppsV1().Deployments(cd.Namespace).Get(targetName, metav1.GetOptions{})
if err != nil {
if errors.IsNotFound(err) {
return fmt.Errorf("deployment %s.%s not found, retrying", canaryName, cd.Namespace)
return fmt.Errorf("deployment %s.%s not found, retrying", targetName, cd.Namespace)
}
return err
}

@@ -292,7 +329,7 @@ func (c *CanaryDeployer) createPrimaryDeployment(cd *flaggerv1.Canary) error {
return err
}

c.logger.Infof("Deployment %s.%s created", primaryDep.GetName(), cd.Namespace)
c.logger.With("canary", fmt.Sprintf("%s.%s", cd.Name, cd.Namespace)).Infof("Deployment %s.%s created", primaryDep.GetName(), cd.Namespace)
}

return nil

@@ -340,7 +377,7 @@ func (c *CanaryDeployer) createPrimaryHpa(cd *flaggerv1.Canary) error {
if err != nil {
return err
}
c.logger.Infof("HorizontalPodAutoscaler %s.%s created", primaryHpa.GetName(), cd.Namespace)
c.logger.With("canary", fmt.Sprintf("%s.%s", cd.Name, cd.Namespace)).Infof("HorizontalPodAutoscaler %s.%s created", primaryHpa.GetName(), cd.Namespace)
}

return nil

@@ -351,7 +351,7 @@ func TestCanaryDeployer_SetFailedChecks(t *testing.T) {
t.Fatal(err.Error())
}

err = deployer.SetFailedChecks(canary, 1)
err = deployer.SetStatusFailedChecks(canary, 1)
if err != nil {
t.Fatal(err.Error())
}

@@ -387,7 +387,7 @@ func TestCanaryDeployer_SetState(t *testing.T) {
t.Fatal(err.Error())
}

err = deployer.SetState(canary, v1alpha3.CanaryRunning)
err = deployer.SetStatusPhase(canary, v1alpha3.CanaryProgressing)
if err != nil {
t.Fatal(err.Error())
}

@@ -397,8 +397,8 @@ func TestCanaryDeployer_SetState(t *testing.T) {
t.Fatal(err.Error())
}

if res.Status.State != v1alpha3.CanaryRunning {
t.Errorf("Got %v wanted %v", res.Status.State, v1alpha3.CanaryRunning)
if res.Status.Phase != v1alpha3.CanaryProgressing {
t.Errorf("Got %v wanted %v", res.Status.Phase, v1alpha3.CanaryProgressing)
}
}

@@ -424,7 +424,7 @@ func TestCanaryDeployer_SyncStatus(t *testing.T) {
}

status := v1alpha3.CanaryStatus{
State: v1alpha3.CanaryRunning,
Phase: v1alpha3.CanaryProgressing,
FailedChecks: 2,
}
err = deployer.SyncStatus(canary, status)

@@ -437,8 +437,8 @@ func TestCanaryDeployer_SyncStatus(t *testing.T) {
t.Fatal(err.Error())
}

if res.Status.State != status.State {
t.Errorf("Got state %v wanted %v", res.Status.State, status.State)
if res.Status.Phase != status.Phase {
t.Errorf("Got state %v wanted %v", res.Status.Phase, status.Phase)
}

if res.Status.FailedChecks != status.FailedChecks {

@@ -6,7 +6,8 @@ import "time"
type CanaryJob struct {
Name string
Namespace string
function func(name string, namespace string)
SkipTests bool
function func(name string, namespace string, skipTests bool)
done chan bool
ticker *time.Ticker
}

@@ -15,11 +16,11 @@ type CanaryJob struct {
func (j CanaryJob) Start() {
go func() {
// run the infra bootstrap on job creation
j.function(j.Name, j.Namespace)
j.function(j.Name, j.Namespace, j.SkipTests)
for {
select {
case <-j.ticker.C:
j.function(j.Name, j.Namespace)
j.function(j.Name, j.Namespace, j.SkipTests)
case <-j.done:
return
}

@@ -72,8 +72,8 @@ func (cr *CanaryRecorder) SetTotal(namespace string, total int) {
// SetStatus sets the last known canary analysis status
func (cr *CanaryRecorder) SetStatus(cd *flaggerv1.Canary) {
status := 1
switch cd.Status.State {
case flaggerv1.CanaryRunning:
switch cd.Status.Phase {
case flaggerv1.CanaryProgressing:
status = 0
case flaggerv1.CanaryFailed:
status = 2

@@ -42,13 +42,13 @@ func (c *CanaryRouter) Sync(cd *flaggerv1.Canary) error {
}

func (c *CanaryRouter) createServices(cd *flaggerv1.Canary) error {
canaryName := cd.Spec.TargetRef.Name
primaryName := fmt.Sprintf("%s-primary", canaryName)
canaryService, err := c.kubeClient.CoreV1().Services(cd.Namespace).Get(canaryName, metav1.GetOptions{})
targetName := cd.Spec.TargetRef.Name
primaryName := fmt.Sprintf("%s-primary", targetName)
canaryService, err := c.kubeClient.CoreV1().Services(cd.Namespace).Get(targetName, metav1.GetOptions{})
if errors.IsNotFound(err) {
canaryService = &corev1.Service{
ObjectMeta: metav1.ObjectMeta{
Name: canaryName,
Name: targetName,
Namespace: cd.Namespace,
OwnerReferences: []metav1.OwnerReference{
*metav1.NewControllerRef(cd, schema.GroupVersionKind{

@@ -60,7 +60,7 @@ func (c *CanaryRouter) createServices(cd *flaggerv1.Canary) error {
},
Spec: corev1.ServiceSpec{
Type: corev1.ServiceTypeClusterIP,
Selector: map[string]string{"app": canaryName},
Selector: map[string]string{"app": targetName},
Ports: []corev1.ServicePort{
{
Name: "http",

@@ -79,7 +79,7 @@ func (c *CanaryRouter) createServices(cd *flaggerv1.Canary) error {
if err != nil {
return err
}
c.logger.Infof("Service %s.%s created", canaryService.GetName(), cd.Namespace)
c.logger.With("canary", fmt.Sprintf("%s.%s", cd.Name, cd.Namespace)).Infof("Service %s.%s created", canaryService.GetName(), cd.Namespace)
}

canaryTestServiceName := fmt.Sprintf("%s-canary", cd.Spec.TargetRef.Name)

@@ -99,7 +99,7 @@ func (c *CanaryRouter) createServices(cd *flaggerv1.Canary) error {
},
Spec: corev1.ServiceSpec{
Type: corev1.ServiceTypeClusterIP,
Selector: map[string]string{"app": canaryName},
Selector: map[string]string{"app": targetName},
Ports: []corev1.ServicePort{
{
Name: "http",

@@ -118,7 +118,7 @@ func (c *CanaryRouter) createServices(cd *flaggerv1.Canary) error {
if err != nil {
return err
}
c.logger.Infof("Service %s.%s created", canaryTestService.GetName(), cd.Namespace)
c.logger.With("canary", fmt.Sprintf("%s.%s", cd.Name, cd.Namespace)).Infof("Service %s.%s created", canaryTestService.GetName(), cd.Namespace)
}

primaryService, err := c.kubeClient.CoreV1().Services(cd.Namespace).Get(primaryName, metav1.GetOptions{})

@@ -157,23 +157,23 @@ func (c *CanaryRouter) createServices(cd *flaggerv1.Canary) error {
return err
}

c.logger.Infof("Service %s.%s created", primaryService.GetName(), cd.Namespace)
c.logger.With("canary", fmt.Sprintf("%s.%s", cd.Name, cd.Namespace)).Infof("Service %s.%s created", primaryService.GetName(), cd.Namespace)
}

return nil
}

func (c *CanaryRouter) createVirtualService(cd *flaggerv1.Canary) error {
canaryName := cd.Name
primaryName := fmt.Sprintf("%s-primary", canaryName)
hosts := append(cd.Spec.Service.Hosts, canaryName)
targetName := cd.Spec.TargetRef.Name
primaryName := fmt.Sprintf("%s-primary", targetName)
hosts := append(cd.Spec.Service.Hosts, targetName)
gateways := append(cd.Spec.Service.Gateways, "mesh")
virtualService, err := c.istioClient.NetworkingV1alpha3().VirtualServices(cd.Namespace).Get(canaryName, metav1.GetOptions{})
virtualService, err := c.istioClient.NetworkingV1alpha3().VirtualServices(cd.Namespace).Get(targetName, metav1.GetOptions{})
if errors.IsNotFound(err) {
c.logger.Debugf("VirtualService %s.%s not found", canaryName, cd.Namespace)
c.logger.Debugf("VirtualService %s.%s not found", targetName, cd.Namespace)
virtualService = &istiov1alpha3.VirtualService{
ObjectMeta: metav1.ObjectMeta{
Name: cd.Name,
Name: targetName,
Namespace: cd.Namespace,
OwnerReferences: []metav1.OwnerReference{
*metav1.NewControllerRef(cd, schema.GroupVersionKind{

@@ -200,7 +200,7 @@ func (c *CanaryRouter) createVirtualService(cd *flaggerv1.Canary) error {
},
{
Destination: istiov1alpha3.Destination{
Host: canaryName,
Host: targetName,
Port: istiov1alpha3.PortSelector{
Number: uint32(cd.Spec.Service.Port),
},

@@ -216,9 +216,9 @@ func (c *CanaryRouter) createVirtualService(cd *flaggerv1.Canary) error {
c.logger.Debugf("Creating VirtualService %s.%s", virtualService.GetName(), cd.Namespace)
_, err = c.istioClient.NetworkingV1alpha3().VirtualServices(cd.Namespace).Create(virtualService)
if err != nil {
return fmt.Errorf("VirtualService %s.%s create error %v", cd.Name, cd.Namespace, err)
return fmt.Errorf("VirtualService %s.%s create error %v", targetName, cd.Namespace, err)
}
c.logger.Infof("VirtualService %s.%s created", virtualService.GetName(), cd.Namespace)
c.logger.With("canary", fmt.Sprintf("%s.%s", cd.Name, cd.Namespace)).Infof("VirtualService %s.%s created", virtualService.GetName(), cd.Namespace)
}

return nil

@@ -230,23 +230,24 @@ func (c *CanaryRouter) GetRoutes(cd *flaggerv1.Canary) (
canary istiov1alpha3.DestinationWeight,
err error,
) {
targetName := cd.Spec.TargetRef.Name
vs := &istiov1alpha3.VirtualService{}
vs, err = c.istioClient.NetworkingV1alpha3().VirtualServices(cd.Namespace).Get(cd.Name, v1.GetOptions{})
vs, err = c.istioClient.NetworkingV1alpha3().VirtualServices(cd.Namespace).Get(targetName, v1.GetOptions{})
if err != nil {
if errors.IsNotFound(err) {
err = fmt.Errorf("VirtualService %s.%s not found", cd.Name, cd.Namespace)
err = fmt.Errorf("VirtualService %s.%s not found", targetName, cd.Namespace)
return
}
err = fmt.Errorf("VirtualService %s.%s query error %v", cd.Name, cd.Namespace, err)
err = fmt.Errorf("VirtualService %s.%s query error %v", targetName, cd.Namespace, err)
return
}

for _, http := range vs.Spec.Http {
for _, route := range http.Route {
if route.Destination.Host == fmt.Sprintf("%s-primary", cd.Spec.TargetRef.Name) {
if route.Destination.Host == fmt.Sprintf("%s-primary", targetName) {
primary = route
}
if route.Destination.Host == cd.Spec.TargetRef.Name {
if route.Destination.Host == targetName {
canary = route
}
}

@@ -254,7 +255,7 @@ func (c *CanaryRouter) GetRoutes(cd *flaggerv1.Canary) (

if primary.Weight == 0 && canary.Weight == 0 {
err = fmt.Errorf("VirtualService %s.%s does not contain routes for %s and %s",
cd.Name, cd.Namespace, fmt.Sprintf("%s-primary", cd.Spec.TargetRef.Name), cd.Spec.TargetRef.Name)
targetName, cd.Namespace, fmt.Sprintf("%s-primary", targetName), targetName)
}

return

@@ -266,23 +267,26 @@ func (c *CanaryRouter) SetRoutes(
primary istiov1alpha3.DestinationWeight,
canary istiov1alpha3.DestinationWeight,
) error {
vs, err := c.istioClient.NetworkingV1alpha3().VirtualServices(cd.Namespace).Get(cd.Name, v1.GetOptions{})
targetName := cd.Spec.TargetRef.Name
vs, err := c.istioClient.NetworkingV1alpha3().VirtualServices(cd.Namespace).Get(targetName, v1.GetOptions{})
if err != nil {
if errors.IsNotFound(err) {
return fmt.Errorf("VirtualService %s.%s not found", cd.Name, cd.Namespace)
return fmt.Errorf("VirtualService %s.%s not found", targetName, cd.Namespace)

}
return fmt.Errorf("VirtualService %s.%s query error %v", cd.Name, cd.Namespace, err)
return fmt.Errorf("VirtualService %s.%s query error %v", targetName, cd.Namespace, err)
}
vs.Spec.Http = []istiov1alpha3.HTTPRoute{

vsCopy := vs.DeepCopy()
vsCopy.Spec.Http = []istiov1alpha3.HTTPRoute{
{
Route: []istiov1alpha3.DestinationWeight{primary, canary},
},
}

vs, err = c.istioClient.NetworkingV1alpha3().VirtualServices(cd.Namespace).Update(vs)
vs, err = c.istioClient.NetworkingV1alpha3().VirtualServices(cd.Namespace).Update(vsCopy)
if err != nil {
return fmt.Errorf("VirtualService %s.%s update failed: %v", cd.Name, cd.Namespace, err)
return fmt.Errorf("VirtualService %s.%s update failed: %v", targetName, cd.Namespace, err)

}
return nil

@@ -12,7 +12,7 @@ import (
// for new canaries new jobs are created and started
// for the removed canaries the jobs are stopped and deleted
func (c *Controller) scheduleCanaries() {
current := make(map[string]bool)
current := make(map[string]string)
stats := make(map[string]int)

c.canaries.Range(func(key interface{}, value interface{}) bool {

@@ -20,7 +20,7 @@ func (c *Controller) scheduleCanaries() {

// format: <name>.<namespace>
name := key.(string)
current[name] = true
current[name] = fmt.Sprintf("%s.%s", canary.Spec.TargetRef.Name, canary.Namespace)

// schedule new jobs
if _, exists := c.jobs[name]; !exists {

@@ -54,18 +54,27 @@ func (c *Controller) scheduleCanaries() {
}
}

// check if multiple canaries have the same target
for canaryName, targetName := range current {
for name, target := range current {
if name != canaryName && target == targetName {
c.logger.With("canary", canaryName).Errorf("Bad things will happen! Found more than one canary with the same target %s", targetName)
}
}
}

// set total canaries per namespace metric
for k, v := range stats {
c.recorder.SetTotal(k, v)
}
}

func (c *Controller) advanceCanary(name string, namespace string) {
func (c *Controller) advanceCanary(name string, namespace string, skipLivenessChecks bool) {
begin := time.Now()
// check if the canary exists
cd, err := c.flaggerClient.FlaggerV1alpha3().Canaries(namespace).Get(name, v1.GetOptions{})
if err != nil {
c.logger.Errorf("Canary %s.%s not found", name, namespace)
c.logger.With("canary", fmt.Sprintf("%s.%s", name, namespace)).Errorf("Canary %s.%s not found", name, namespace)
return
}

@@ -81,6 +90,13 @@ func (c *Controller) advanceCanary(name string, namespace string) {
return
}

if ok, err := c.deployer.ShouldAdvance(cd); !ok {
if err != nil {
c.recordEventWarningf(cd, "%v", err)
}
return
}

// set max weight default value to 100%
maxWeight := 100
if cd.Spec.CanaryAnalysis.MaxWeight > 0 {

@@ -88,9 +104,11 @@ func (c *Controller) advanceCanary(name string, namespace string) {
}

// check primary deployment status
if _, err := c.deployer.IsPrimaryReady(cd); err != nil {
c.recordEventWarningf(cd, "%v", err)
return
if !skipLivenessChecks {
if _, err := c.deployer.IsPrimaryReady(cd); err != nil {
c.recordEventWarningf(cd, "%v", err)
return
}
}

// check if virtual service exists

@@ -104,7 +122,33 @@ func (c *Controller) advanceCanary(name string, namespace string) {
c.recorder.SetWeight(cd, primaryRoute.Weight, canaryRoute.Weight)

// check if canary analysis should start (canary revision has changes) or continue
if ok := c.checkCanaryStatus(cd, c.deployer); !ok {
if ok := c.checkCanaryStatus(cd); !ok {
return
}

// check if canary revision changed during analysis
if restart := c.hasCanaryRevisionChanged(cd); restart {
c.recordEventInfof(cd, "New revision detected! Restarting analysis for %s.%s",
cd.Spec.TargetRef.Name, cd.Namespace)

// route all traffic back to primary
primaryRoute.Weight = 100
canaryRoute.Weight = 0
if err := c.router.SetRoutes(cd, primaryRoute, canaryRoute); err != nil {
c.recordEventWarningf(cd, "%v", err)
return
}

// reset status
status := flaggerv1.CanaryStatus{
Phase: flaggerv1.CanaryProgressing,
CanaryWeight: 0,
FailedChecks: 0,
}
if err := c.deployer.SyncStatus(cd, status); err != nil {
c.recordEventWarningf(cd, "%v", err)
return
}
return
}

@@ -113,14 +157,17 @@ func (c *Controller) advanceCanary(name string, namespace string) {
}()

// check canary deployment status
retriable, err := c.deployer.IsCanaryReady(cd)
if err != nil && retriable {
c.recordEventWarningf(cd, "%v", err)
return
var retriable = true
if !skipLivenessChecks {
retriable, err = c.deployer.IsCanaryReady(cd)
if err != nil && retriable {
c.recordEventWarningf(cd, "%v", err)
return
}
}

// check if the number of failed checks reached the threshold
if cd.Status.State == flaggerv1.CanaryRunning &&
if cd.Status.Phase == flaggerv1.CanaryProgressing &&
(!retriable || cd.Status.FailedChecks >= cd.Spec.CanaryAnalysis.Threshold) {

if cd.Status.FailedChecks >= cd.Spec.CanaryAnalysis.Threshold {

@@ -156,8 +203,8 @@ func (c *Controller) advanceCanary(name string, namespace string) {
}

// mark canary as failed
if err := c.deployer.SyncStatus(cd, flaggerv1.CanaryStatus{State: flaggerv1.CanaryFailed}); err != nil {
c.logger.Errorf("%v", err)
if err := c.deployer.SyncStatus(cd, flaggerv1.CanaryStatus{Phase: flaggerv1.CanaryFailed, CanaryWeight: 0}); err != nil {
c.logger.With("canary", fmt.Sprintf("%s.%s", cd.Name, cd.Namespace)).Errorf("%v", err)
return
}

@@ -168,10 +215,10 @@ func (c *Controller) advanceCanary(name string, namespace string) {
// check if the canary success rate is above the threshold
// skip check if no traffic is routed to canary
if canaryRoute.Weight == 0 {
c.recordEventInfof(cd, "Starting canary deployment for %s.%s", cd.Name, cd.Namespace)
c.recordEventInfof(cd, "Starting canary analysis for %s.%s", cd.Spec.TargetRef.Name, cd.Namespace)
} else {
if ok := c.analyseCanary(cd); !ok {
if err := c.deployer.SetFailedChecks(cd, cd.Status.FailedChecks+1); err != nil {
if err := c.deployer.SetStatusFailedChecks(cd, cd.Status.FailedChecks+1); err != nil {
c.recordEventWarningf(cd, "%v", err)
return
}

@@ -195,6 +242,12 @@ func (c *Controller) advanceCanary(name string, namespace string) {
return
}

// update weight status
if err := c.deployer.SetStatusWeight(cd, canaryRoute.Weight); err != nil {
c.recordEventWarningf(cd, "%v", err)
return
}

c.recorder.SetWeight(cd, primaryRoute.Weight, canaryRoute.Weight)
c.recordEventInfof(cd, "Advance %s.%s canary weight %v", cd.Name, cd.Namespace, canaryRoute.Weight)

@@ -226,8 +279,8 @@ func (c *Controller) advanceCanary(name string, namespace string) {
return
}

// update status
if err := c.deployer.SetState(cd, flaggerv1.CanaryFinished); err != nil {
// update status phase
if err := c.deployer.SetStatusPhase(cd, flaggerv1.CanarySucceeded); err != nil {
c.recordEventWarningf(cd, "%v", err)
return
}

@@ -237,15 +290,15 @@ func (c *Controller) advanceCanary(name string, namespace string) {
}
}

func (c *Controller) checkCanaryStatus(cd *flaggerv1.Canary, deployer CanaryDeployer) bool {
func (c *Controller) checkCanaryStatus(cd *flaggerv1.Canary) bool {
c.recorder.SetStatus(cd)
if cd.Status.State == "running" {
if cd.Status.Phase == flaggerv1.CanaryProgressing {
return true
}

if cd.Status.State == "" {
if err := deployer.SyncStatus(cd, flaggerv1.CanaryStatus{State: flaggerv1.CanaryInitialized}); err != nil {
c.logger.Errorf("%v", err)
if cd.Status.Phase == "" {
if err := c.deployer.SyncStatus(cd, flaggerv1.CanaryStatus{Phase: flaggerv1.CanaryInitialized}); err != nil {
c.logger.With("canary", fmt.Sprintf("%s.%s", cd.Name, cd.Namespace)).Errorf("%v", err)
return false
}
c.recorder.SetStatus(cd)

@@ -255,16 +308,16 @@ func (c *Controller) checkCanaryStatus(cd *flaggerv1.Canary, deployer CanaryDepl
return false
}

if diff, err := deployer.IsNewSpec(cd); diff {
if diff, err := c.deployer.IsNewSpec(cd); diff {
c.recordEventInfof(cd, "New revision detected! Scaling up %s.%s", cd.Spec.TargetRef.Name, cd.Namespace)
c.sendNotification(cd, "New revision detected, starting canary analysis.",
true, false)
if err = deployer.Scale(cd, 1); err != nil {
if err = c.deployer.Scale(cd, 1); err != nil {
c.recordEventErrorf(cd, "%v", err)
return false
}
if err := deployer.SyncStatus(cd, flaggerv1.CanaryStatus{State: flaggerv1.CanaryRunning}); err != nil {
c.logger.Errorf("%v", err)
if err := c.deployer.SyncStatus(cd, flaggerv1.CanaryStatus{Phase: flaggerv1.CanaryProgressing}); err != nil {
c.logger.With("canary", fmt.Sprintf("%s.%s", cd.Name, cd.Namespace)).Errorf("%v", err)
return false
}
c.recorder.SetStatus(cd)

@@ -273,6 +326,15 @@ func (c *Controller) checkCanaryStatus(cd *flaggerv1.Canary, deployer CanaryDepl
return false
}

func (c *Controller) hasCanaryRevisionChanged(cd *flaggerv1.Canary) bool {
if cd.Status.Phase == flaggerv1.CanaryProgressing {
if diff, _ := c.deployer.IsNewSpec(cd); diff {
return true
}
}
return false
}

func (c *Controller) analyseCanary(r *flaggerv1.Canary) bool {
// run metrics checks
for _, metric := range r.Spec.CanaryAnalysis.Metrics {

@@ -1,12 +1,16 @@
package controller

import (
"go.uber.org/zap"
"k8s.io/client-go/kubernetes"
"sync"
"testing"
"time"

istioclientset "github.com/knative/pkg/client/clientset/versioned"
fakeIstio "github.com/knative/pkg/client/clientset/versioned/fake"
"github.com/stefanprodan/flagger/pkg/apis/flagger/v1alpha3"
clientset "github.com/stefanprodan/flagger/pkg/client/clientset/versioned"
fakeFlagger "github.com/stefanprodan/flagger/pkg/client/clientset/versioned/fake"
informers "github.com/stefanprodan/flagger/pkg/client/informers/externalversions"
"github.com/stefanprodan/flagger/pkg/logging"

@@ -21,6 +25,39 @@ var (
noResyncPeriodFunc = func() time.Duration { return 0 }
)

func newTestController(
kubeClient kubernetes.Interface,
istioClient istioclientset.Interface,
flaggerClient clientset.Interface,
logger *zap.SugaredLogger,