mirror of

https://github.com/fluxcd/flagger.git

synced 2026-02-15 10:30:01 +00:00

Compare commits

41 Commits

| Author | SHA1 | Date |
|---|---|---|
| | c46fe55ad0 | |
| | 36a54fbf2a | |
| | 60f6b05397 | |
| | 6d8a7343b7 | |
| | aff8b117d4 | |
| | 1b3c3b22b3 | |
| | 1d31b5ed90 | |
| | 1ef310f00d | |
| | acdd2c46d5 | |
| | 9872e6bc16 | |
| | 10c2bdec86 | |
| | 4bf3b70048 | |
| | ada446bbaa | |
| | c4981ef4db | |
| | d1b84cd31d | |
| | 9232c8647a | |
| | 23e8c7d616 | |
| | 42607fbd64 | |
| | 28781a5f02 | |
| | 3589e11244 | |
| | 5e880d3942 | |
| | f7e675144d | |
| | 3bff2c339b | |
| | b035c1e7fb | |
| | 7ae0d49e80 | |
| | 07f66e849d | |
| | 06c29051eb | |
| | 83118faeb3 | |
| | aa2c28c733 | |
| | 10185407f6 | |
| | c1bde57c17 | |
| | 882b4b2d23 | |
| | cac585157f | |
| | cc2860a49f | |
| | bec96356ec | |
| | b5c648ea54 | |
| | e6e3e500be | |
| | 537e8fdaf7 | |
| | 322c83bdad | |
| | 41f0ba0247 | |
| | b67b49fde6 | |

40

README.md


@@ -8,8 +8,8 @@

|

||||

|

||||

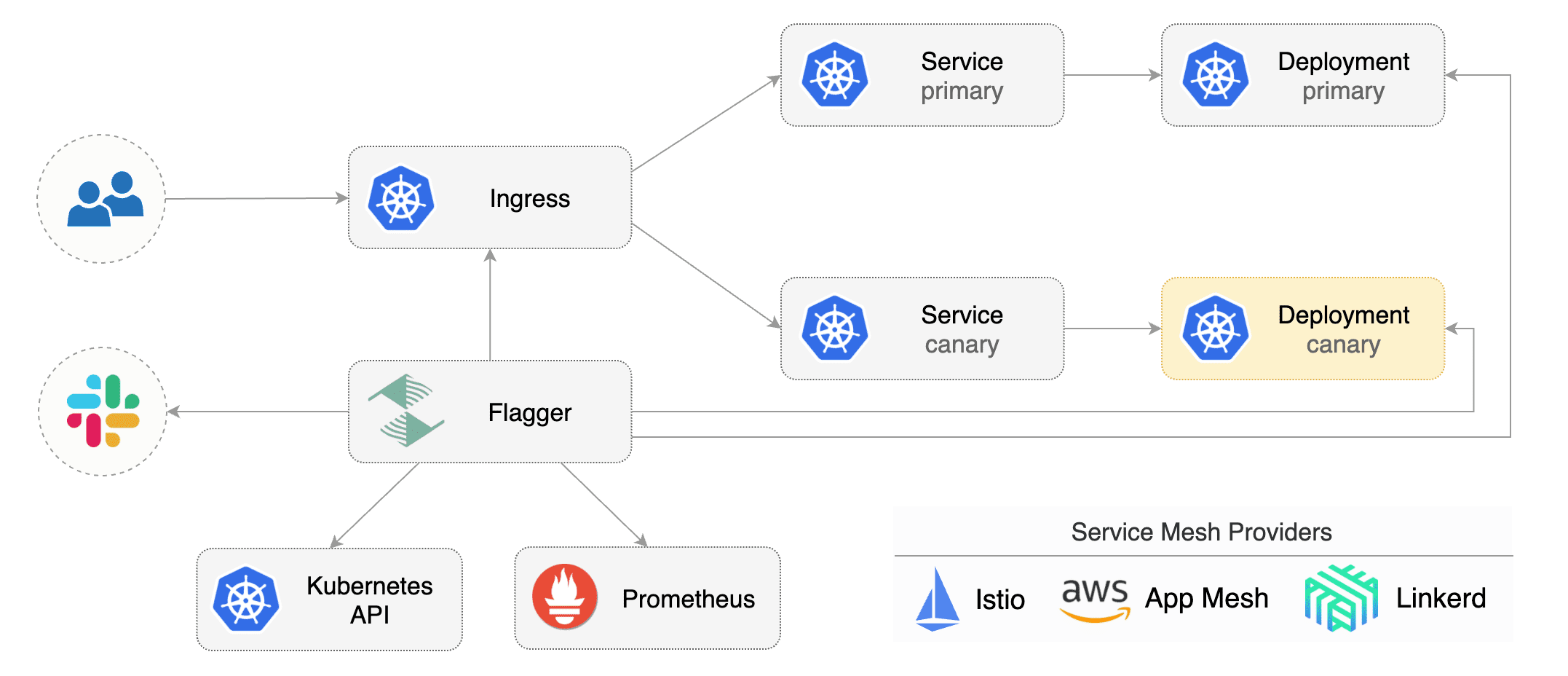

Flagger is a Kubernetes operator that automates the promotion of canary deployments

|

||||

using Istio routing for traffic shifting and Prometheus metrics for canary analysis.

|

||||

The canary analysis can be extended with webhooks for running integration tests, load tests or any other custom

|

||||

validation.

|

||||

The canary analysis can be extended with webhooks for running integration tests,

|

||||

load tests or any other custom validation.

|

||||

|

||||

### Install

|

||||

|

||||

@@ -25,11 +25,10 @@ helm repo add flagger https://flagger.app

|

||||

# install or upgrade

|

||||

helm upgrade -i flagger flagger/flagger \

|

||||

--namespace=istio-system \

|

||||

--set metricsServer=http://prometheus.istio-system:9090 \

|

||||

--set controlLoopInterval=1m

|

||||

--set metricsServer=http://prometheus.istio-system:9090

|

||||

```

|

||||

|

||||

Flagger is compatible with Kubernetes >1.10.0 and Istio >1.0.0.

|

||||

Flagger is compatible with Kubernetes >1.11.0 and Istio >1.0.0.

|

||||

|

||||

### Usage

|

||||

|

||||

@@ -75,7 +74,7 @@ You can change the canary analysis _max weight_ and the _step weight_ percentage

|

||||

For a deployment named _podinfo_, a canary promotion can be defined using Flagger's custom resource:

|

||||

|

||||

```yaml

|

||||

apiVersion: flagger.app/v1alpha2

|

||||

apiVersion: flagger.app/v1alpha3

|

||||

kind: Canary

|

||||

metadata:

|

||||

name: podinfo

|

||||

@@ -102,8 +101,10 @@ spec:

|

||||

- public-gateway.istio-system.svc.cluster.local

|

||||

# Istio virtual service host names (optional)

|

||||

hosts:

|

||||

- app.iowa.weavedx.com

|

||||

- podinfo.example.com

|

||||

canaryAnalysis:

|

||||

# schedule interval (default 60s)

|

||||

interval: 1m

|

||||

# max number of failed metric checks before rollback

|

||||

threshold: 10

|

||||

# max traffic percentage routed to canary

|

||||

@@ -241,15 +242,16 @@ kubectl -n test set image deployment/podinfo \

|

||||

podinfod=quay.io/stefanprodan/podinfo:1.2.1

|

||||

```

|

||||

|

||||

Flagger detects that the deployment revision changed and starts a new rollout:

|

||||

Flagger detects that the deployment revision changed and starts a new canary analysis:

|

||||

|

||||

```

|

||||

kubectl -n test describe canary/podinfo

|

||||

|

||||

Status:

|

||||

Canary Revision: 19871136

|

||||

Failed Checks: 0

|

||||

State: finished

|

||||

Canary Weight: 0

|

||||

Failed Checks: 0

|

||||

Last Transition Time: 2019-01-16T13:47:16Z

|

||||

Phase: Succeeded

|

||||

Events:

|

||||

Type Reason Age From Message

|

||||

---- ------ ---- ---- -------

|

||||

@@ -271,6 +273,15 @@ Events:

|

||||

Normal Synced 5s flagger Promotion completed! Scaling down podinfo.test

|

||||

```

|

||||

|

||||

You can monitor all canaries with:

|

||||

|

||||

```bash

|

||||

watch kubectl get canaries --all-namespaces

|

||||

|

||||

NAMESPACE NAME STATUS WEIGHT LASTTRANSITIONTIME

|

||||

test podinfo Progressing 5 2019-01-16T14:05:07Z

|

||||

```

|

||||

|

||||

During the canary analysis you can generate HTTP 500 errors and high latency to test if Flagger pauses the rollout.

|

||||

|

||||

Create a tester pod and exec into it:

|

||||

@@ -299,9 +310,10 @@ the canary is scaled to zero and the rollout is marked as failed.

|

||||

kubectl -n test describe canary/podinfo

|

||||

|

||||

Status:

|

||||

Canary Revision: 16695041

|

||||

Failed Checks: 10

|

||||

State: failed

|

||||

Canary Weight: 0

|

||||

Failed Checks: 10

|

||||

Last Transition Time: 2019-01-16T13:47:16Z

|

||||

Phase: Failed

|

||||

Events:

|

||||

Type Reason Age From Message

|

||||

---- ------ ---- ---- -------

|

||||

|

||||

@@ -1,4 +1,4 @@

|

||||

apiVersion: flagger.app/v1alpha2

|

||||

apiVersion: flagger.app/v1alpha3

|

||||

kind: Canary

|

||||

metadata:

|

||||

name: podinfo

|

||||

@@ -27,6 +27,8 @@ spec:

|

||||

hosts:

|

||||

- app.iowa.weavedx.com

|

||||

canaryAnalysis:

|

||||

# schedule interval (default 60s)

|

||||

interval: 10s

|

||||

# max number of failed metric checks before rollback

|

||||

threshold: 10

|

||||

# max traffic percentage routed to canary

|

||||

@@ -50,7 +52,7 @@ spec:

|

||||

# external checks (optional)

|

||||

webhooks:

|

||||

- name: integration-tests

|

||||

url: http://podinfo.test:9898/echo

|

||||

url: https://httpbin.org/post

|

||||

timeout: 1m

|

||||

metadata:

|

||||

test: "all"

|

||||

|

||||

@@ -4,11 +4,14 @@ metadata:

|

||||

name: canaries.flagger.app

|

||||

spec:

|

||||

group: flagger.app

|

||||

version: v1alpha2

|

||||

version: v1alpha3

|

||||

versions:

|

||||

- name: v1alpha2

|

||||

- name: v1alpha3

|

||||

served: true

|

||||

storage: true

|

||||

- name: v1alpha2

|

||||

served: true

|

||||

storage: false

|

||||

- name: v1alpha1

|

||||

served: true

|

||||

storage: false

|

||||

@@ -16,7 +19,21 @@ spec:

|

||||

plural: canaries

|

||||

singular: canary

|

||||

kind: Canary

|

||||

categories:

|

||||

- all

|

||||

scope: Namespaced

|

||||

subresources:

|

||||

status: {}

|

||||

additionalPrinterColumns:

|

||||

- name: Status

|

||||

type: string

|

||||

JSONPath: .status.phase

|

||||

- name: Weight

|

||||

type: string

|

||||

JSONPath: .status.canaryWeight

|

||||

- name: LastTransitionTime

|

||||

type: string

|

||||

JSONPath: .status.lastTransitionTime

|

||||

validation:

|

||||

openAPIV3Schema:

|

||||

properties:

|

||||

@@ -39,7 +56,9 @@ spec:

|

||||

name:

|

||||

type: string

|

||||

autoscalerRef:

|

||||

type: object

|

||||

anyOf:

|

||||

- type: string

|

||||

- type: object

|

||||

required: ['apiVersion', 'kind', 'name']

|

||||

properties:

|

||||

apiVersion:

|

||||

@@ -56,6 +75,9 @@ spec:

|

||||

type: number

|

||||

canaryAnalysis:

|

||||

properties:

|

||||

interval:

|

||||

type: string

|

||||

pattern: "^[0-9]+(m|s)"

|

||||

threshold:

|

||||

type: number

|

||||

maxWeight:

|

||||

@@ -73,7 +95,7 @@ spec:

|

||||

type: string

|

||||

interval:

|

||||

type: string

|

||||

pattern: "^[0-9]+(m)"

|

||||

pattern: "^[0-9]+(m|s)"

|

||||

threshold:

|

||||

type: number

|

||||

webhooks:

|

||||

@@ -90,4 +112,4 @@ spec:

|

||||

format: url

|

||||

timeout:

|

||||

type: string

|

||||

pattern: "^[0-9]+(s)"

|

||||

pattern: "^[0-9]+(m|s)"
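The `pattern` values in this hunk are anchored only at the start, so when read as an ordinary unanchored regular expression they accept any string that begins with digits followed by `m` or `s`. The Go sketch below illustrates that behaviour and one possible stricter check. `validInterval` is a hypothetical helper written for this illustration, not part of Flagger, and pairing the pattern with `time.ParseDuration` is an assumption about what a caller might want.

```go
package main

import (
	"fmt"
	"regexp"
	"time"
)

// intervalPattern is the validation pattern shown in the CRD hunk.
var intervalPattern = regexp.MustCompile(`^[0-9]+(m|s)`)

// validInterval is a hypothetical helper: it requires the CRD pattern
// to match and the whole string to parse as a Go duration.
func validInterval(s string) bool {
	if !intervalPattern.MatchString(s) {
		return false
	}
	_, err := time.ParseDuration(s)
	return err == nil
}

func main() {
	// "10sec" passes the pattern (prefix match) but is not a valid duration.
	for _, s := range []string{"10s", "1m", "30", "1h", "10sec"} {
		fmt.Printf("%-6s pattern=%-5v strict=%v\n",
			s, intervalPattern.MatchString(s), validInterval(s))
	}
}
```

If stricter validation is wanted in the schema itself, appending `$` to the pattern would be one option; whether that suits the project is left open here.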

|

||||

|

||||

@@ -22,7 +22,7 @@ spec:

|

||||

serviceAccountName: flagger

|

||||

containers:

|

||||

- name: flagger

|

||||

image: quay.io/stefanprodan/flagger:0.2.0

|

||||

image: quay.io/stefanprodan/flagger:0.4.0

|

||||

imagePullPolicy: Always

|

||||

ports:

|

||||

- name: http

|

||||

|

||||

@@ -1,11 +1,11 @@

|

||||

apiVersion: v1

|

||||

name: flagger

|

||||

version: 0.2.0

|

||||

appVersion: 0.2.0

|

||||

kubeVersion: ">=1.9.0-0"

|

||||

version: 0.4.0

|

||||

appVersion: 0.4.0

|

||||

kubeVersion: ">=1.11.0-0"

|

||||

engine: gotpl

|

||||

description: Flagger is a Kubernetes operator that automates the promotion of canary deployments using Istio routing for traffic shifting and Prometheus metrics for canary analysis.

|

||||

home: https://flagger.app

|

||||

home: https://docs.flagger.app

|

||||

icon: https://raw.githubusercontent.com/stefanprodan/flagger/master/docs/logo/flagger-icon.png

|

||||

sources:

|

||||

- https://github.com/stefanprodan/flagger

|

||||

|

||||

@@ -1,14 +1,14 @@

|

||||

# Flagger

|

||||

|

||||

[Flagger](https://flagger.app) is a Kubernetes operator that automates the promotion of canary deployments

|

||||

using Istio routing for traffic shifting and Prometheus metrics for canary analysis.

|

||||

[Flagger](https://github.com/stefanprodan/flagger) is a Kubernetes operator that automates the promotion of

|

||||

canary deployments using Istio routing for traffic shifting and Prometheus metrics for canary analysis.

|

||||

Flagger implements a control loop that gradually shifts traffic to the canary while measuring key performance indicators

|

||||

like HTTP requests success rate, requests average duration and pods health.

|

||||

Based on the KPIs analysis a canary is promoted or aborted and the analysis result is published to Slack.

|

||||

|

||||

## Prerequisites

|

||||

|

||||

* Kubernetes >= 1.9

|

||||

* Kubernetes >= 1.11

|

||||

* Istio >= 1.0

|

||||

* Prometheus >= 2.6

|

||||

|

||||

@@ -48,7 +48,6 @@ Parameter | Description | Default

|

||||

`image.repository` | image repository | `quay.io/stefanprodan/flagger`

|

||||

`image.tag` | image tag | `<VERSION>`

|

||||

`image.pullPolicy` | image pull policy | `IfNotPresent`

|

||||

`controlLoopInterval` | wait interval between checks | `10s`

|

||||

`metricsServer` | Prometheus URL | `http://prometheus.istio-system:9090`

|

||||

`slack.url` | Slack incoming webhook | None

|

||||

`slack.channel` | Slack channel | None

|

||||

@@ -68,7 +67,8 @@ Specify each parameter using the `--set key=value[,key=value]` argument to `helm

|

||||

```console

|

||||

$ helm upgrade -i flagger flagger/flagger \

|

||||

--namespace istio-system \

|

||||

--set controlLoopInterval=1m

|

||||

--set slack.url=https://hooks.slack.com/services/YOUR/SLACK/WEBHOOK \

|

||||

--set slack.channel=general

|

||||

```

|

||||

|

||||

Alternatively, a YAML file that specifies the values for the above parameters can be provided while installing the chart. For example,

|

||||

@@ -80,5 +80,5 @@ $ helm upgrade -i flagger flagger/flagger \

|

||||

```

|

||||

|

||||

> **Tip**: You can use the default [values.yaml](values.yaml)

|

||||

```

|

||||

|

||||

|

||||

|

||||

@@ -5,11 +5,14 @@ metadata:

|

||||

name: canaries.flagger.app

|

||||

spec:

|

||||

group: flagger.app

|

||||

version: v1alpha2

|

||||

version: v1alpha3

|

||||

versions:

|

||||

- name: v1alpha2

|

||||

- name: v1alpha3

|

||||

served: true

|

||||

storage: true

|

||||

- name: v1alpha2

|

||||

served: true

|

||||

storage: false

|

||||

- name: v1alpha1

|

||||

served: true

|

||||

storage: false

|

||||

@@ -17,7 +20,21 @@ spec:

|

||||

plural: canaries

|

||||

singular: canary

|

||||

kind: Canary

|

||||

categories:

|

||||

- all

|

||||

scope: Namespaced

|

||||

subresources:

|

||||

status: {}

|

||||

additionalPrinterColumns:

|

||||

- name: Status

|

||||

type: string

|

||||

JSONPath: .status.phase

|

||||

- name: Weight

|

||||

type: string

|

||||

JSONPath: .status.canaryWeight

|

||||

- name: LastTransitionTime

|

||||

type: string

|

||||

JSONPath: .status.lastTransitionTime

|

||||

validation:

|

||||

openAPIV3Schema:

|

||||

properties:

|

||||

@@ -40,7 +57,9 @@ spec:

|

||||

name:

|

||||

type: string

|

||||

autoscalerRef:

|

||||

type: object

|

||||

anyOf:

|

||||

- type: string

|

||||

- type: object

|

||||

required: ['apiVersion', 'kind', 'name']

|

||||

properties:

|

||||

apiVersion:

|

||||

@@ -57,6 +76,9 @@ spec:

|

||||

type: number

|

||||

canaryAnalysis:

|

||||

properties:

|

||||

interval:

|

||||

type: string

|

||||

pattern: "^[0-9]+(m|s)"

|

||||

threshold:

|

||||

type: number

|

||||

maxWeight:

|

||||

@@ -74,7 +96,7 @@ spec:

|

||||

type: string

|

||||

interval:

|

||||

type: string

|

||||

pattern: "^[0-9]+(m)"

|

||||

pattern: "^[0-9]+(m|s)"

|

||||

threshold:

|

||||

type: number

|

||||

webhooks:

|

||||

@@ -91,6 +113,5 @@ spec:

|

||||

format: url

|

||||

timeout:

|

||||

type: string

|

||||

pattern: "^[0-9]+(s)"

|

||||

|

||||

pattern: "^[0-9]+(m|s)"

|

||||

{{- end }}

|

||||

|

||||

@@ -35,7 +35,6 @@ spec:

|

||||

command:

|

||||

- ./flagger

|

||||

- -log-level=info

|

||||

- -control-loop-interval={{ .Values.controlLoopInterval }}

|

||||

- -metrics-server={{ .Values.metricsServer }}

|

||||

{{- if .Values.slack.url }}

|

||||

- -slack-url={{ .Values.slack.url }}

|

||||

|

||||

@@ -2,10 +2,9 @@

|

||||

|

||||

image:

|

||||

repository: quay.io/stefanprodan/flagger

|

||||

tag: 0.2.0

|

||||

tag: 0.4.0

|

||||

pullPolicy: IfNotPresent

|

||||

|

||||

controlLoopInterval: "10s"

|

||||

metricsServer: "http://prometheus.istio-system.svc.cluster.local:9090"

|

||||

|

||||

slack:

|

||||

|

||||

@@ -37,7 +37,7 @@ func init() {

|

||||

flag.StringVar(&kubeconfig, "kubeconfig", "", "Path to a kubeconfig. Only required if out-of-cluster.")

|

||||

flag.StringVar(&masterURL, "master", "", "The address of the Kubernetes API server. Overrides any value in kubeconfig. Only required if out-of-cluster.")

|

||||

flag.StringVar(&metricsServer, "metrics-server", "http://prometheus:9090", "Prometheus URL")

|

||||

flag.DurationVar(&controlLoopInterval, "control-loop-interval", 10*time.Second, "wait interval between rollouts")

|

||||

flag.DurationVar(&controlLoopInterval, "control-loop-interval", 10*time.Second, "Kubernetes API sync interval")

|

||||

flag.StringVar(&logLevel, "log-level", "debug", "Log level can be: debug, info, warning, error.")

|

||||

flag.StringVar(&port, "port", "8080", "Port to listen on.")

|

||||

flag.StringVar(&slackURL, "slack-url", "", "Slack hook URL.")

|

||||

@@ -77,7 +77,7 @@ func main() {

|

||||

}

|

||||

|

||||

flaggerInformerFactory := informers.NewSharedInformerFactory(flaggerClient, time.Second*30)

|

||||

canaryInformer := flaggerInformerFactory.Flagger().V1alpha2().Canaries()

|

||||

canaryInformer := flaggerInformerFactory.Flagger().V1alpha3().Canaries()

|

||||

|

||||

logger.Infof("Starting flagger version %s revision %s", version.VERSION, version.REVISION)

|

||||

|

||||

|

||||

BIN

docs/flagger-0.3.0.tgz

Normal file


Binary file not shown.

BIN

docs/flagger-0.4.0.tgz

Normal file


Binary file not shown.

@@ -4,13 +4,20 @@ description: Flagger is an Istio progressive delivery Kubernetes operator

|

||||

|

||||

# Introduction

|

||||

|

||||

[Flagger](https://github.com/stefanprodan/flagger) is a **Kubernetes** operator that automates the promotion of canary deployments using **Istio** routing for traffic shifting and **Prometheus** metrics for canary analysis.

|

||||

[Flagger](https://github.com/stefanprodan/flagger) is a **Kubernetes** operator that automates the promotion of canary

|

||||

deployments using **Istio** routing for traffic shifting and **Prometheus** metrics for canary analysis.

|

||||

The canary analysis can be extended with webhooks for running integration tests,

|

||||

load tests or any other custom validation.

|

||||

|

||||

Flagger implements a control loop that gradually shifts traffic to the canary while measuring key performance indicators like HTTP requests success rate, requests average duration and pods health. Based on the **KPIs** analysis a canary is promoted or aborted and the analysis result is published to **Slack**.

|

||||

Flagger implements a control loop that gradually shifts traffic to the canary while measuring key performance

|

||||

indicators like HTTP requests success rate, requests average duration and pods health.

|

||||

Based on the **KPIs** analysis a canary is promoted or aborted and the analysis result is published to **Slack**.

|

||||

|

||||

|

||||

|

||||

Flagger can be configured with Kubernetes custom resources \(canaries.flagger.app kind\) and is compatible with any CI/CD solutions made for Kubernetes. Since Flagger is declarative and reacts to Kubernetes events, it can be used in **GitOps** pipelines together with Weave Flux or JenkinsX.

|

||||

Flagger can be configured with Kubernetes custom resources \(canaries.flagger.app kind\) and is compatible with

|

||||

any CI/CD solutions made for Kubernetes. Since Flagger is declarative and reacts to Kubernetes events,

|

||||

it can be used in **GitOps** pipelines together with Weave Flux or JenkinsX.

|

||||

|

||||

This project is sponsored by [Weaveworks](https://www.weave.works/)

|

||||

|

||||

|

||||

@@ -5,8 +5,8 @@

|

||||

|

||||

## Install

|

||||

|

||||

* [Install Flagger](install/installing-flagger.md)

|

||||

* [Install Grafana](install/installing-grafana.md)

|

||||

* [Install Flagger](install/install-flagger.md)

|

||||

* [Install Grafana](install/install-grafana.md)

|

||||

* [Install Istio](install/install-istio.md)

|

||||

|

||||

## Usage

|

||||

|

||||

@@ -2,14 +2,14 @@

|

||||

|

||||

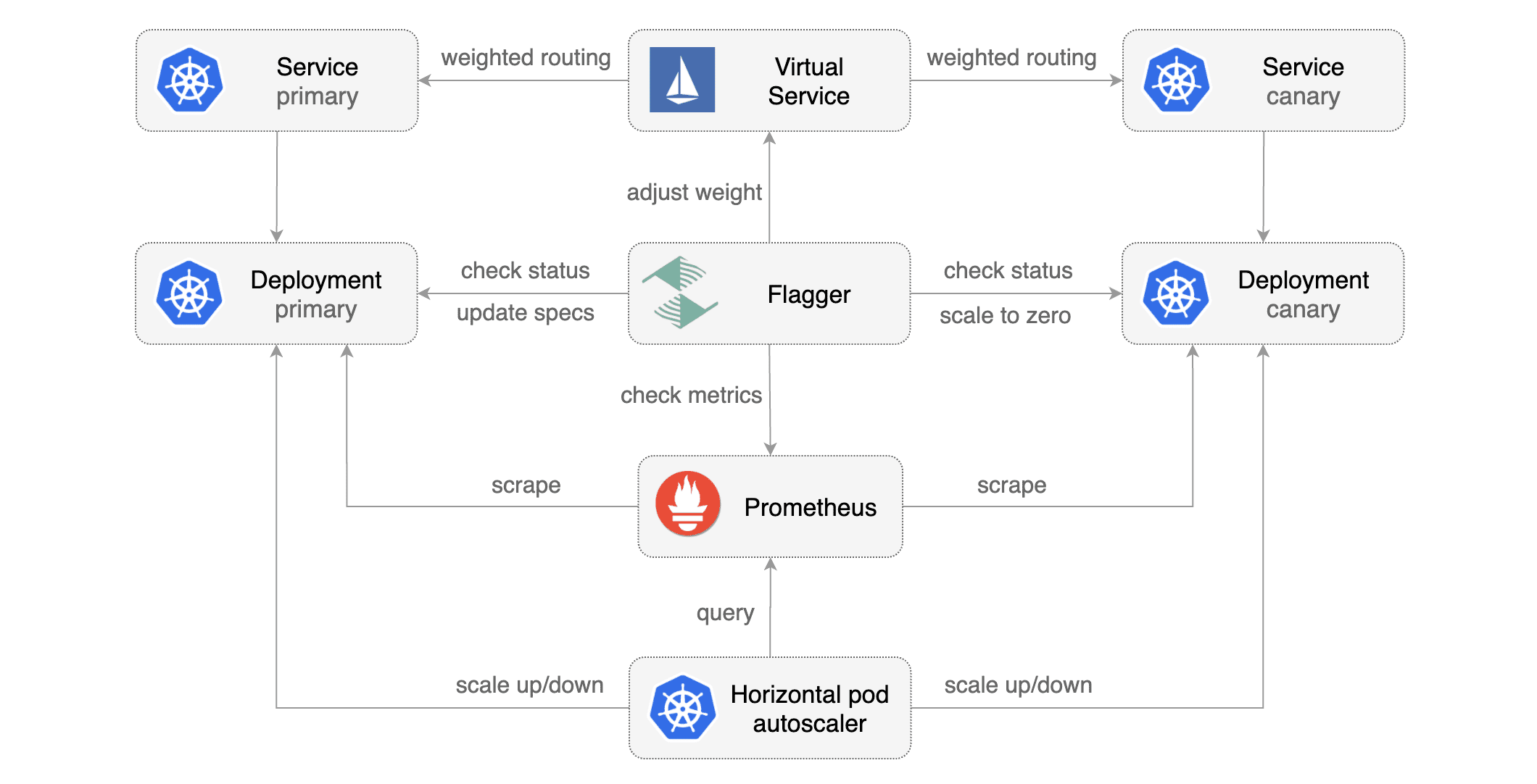

[Flagger](https://github.com/stefanprodan/flagger) takes a Kubernetes deployment and optionally a horizontal pod autoscaler \(HPA\) and creates a series of objects \(Kubernetes deployments, ClusterIP services and Istio virtual services\) to drive the canary analysis and promotion.

|

||||

|

||||

|

||||

|

||||

|

||||

### Canary Custom Resource

|

||||

|

||||

For a deployment named _podinfo_, a canary promotion can be defined using Flagger's custom resource:

|

||||

|

||||

```yaml

|

||||

apiVersion: flagger.app/v1alpha2

|

||||

apiVersion: flagger.app/v1alpha3

|

||||

kind: Canary

|

||||

metadata:

|

||||

name: podinfo

|

||||

@@ -36,8 +36,10 @@ spec:

|

||||

- public-gateway.istio-system.svc.cluster.local

|

||||

# Istio virtual service host names (optional)

|

||||

hosts:

|

||||

- app.iowa.weavedx.com

|

||||

- podinfo.example.com

|

||||

canaryAnalysis:

|

||||

# schedule interval (default 60s)

|

||||

interval: 1m

|

||||

# max number of failed metric checks before rollback

|

||||

threshold: 10

|

||||

# max traffic percentage routed to canary

|

||||

@@ -63,14 +65,35 @@ spec:

|

||||

- name: integration-tests

|

||||

url: http://podinfo.test:9898/echo

|

||||

timeout: 1m

|

||||

# key-value pairs (optional)

|

||||

metadata:

|

||||

test: "all"

|

||||

token: "16688eb5e9f289f1991c"

|

||||

```

|

||||

|

||||

**Note** that the target deployment must have a single label selector in the format `app: <DEPLOYMENT-NAME>`:

|

||||

|

||||

```yaml

|

||||

apiVersion: apps/v1

|

||||

kind: Deployment

|

||||

metadata:

|

||||

name: podinfo

|

||||

spec:

|

||||

selector:

|

||||

matchLabels:

|

||||

app: podinfo

|

||||

template:

|

||||

metadata:

|

||||

labels:

|

||||

app: podinfo

|

||||

```

|

||||

|

||||

The target deployment should expose a TCP port that will be used by Flagger to create the ClusterIP Service and

|

||||

the Istio Virtual Service. The container port from the target deployment should match the `service.port` value.

|

||||

|

||||

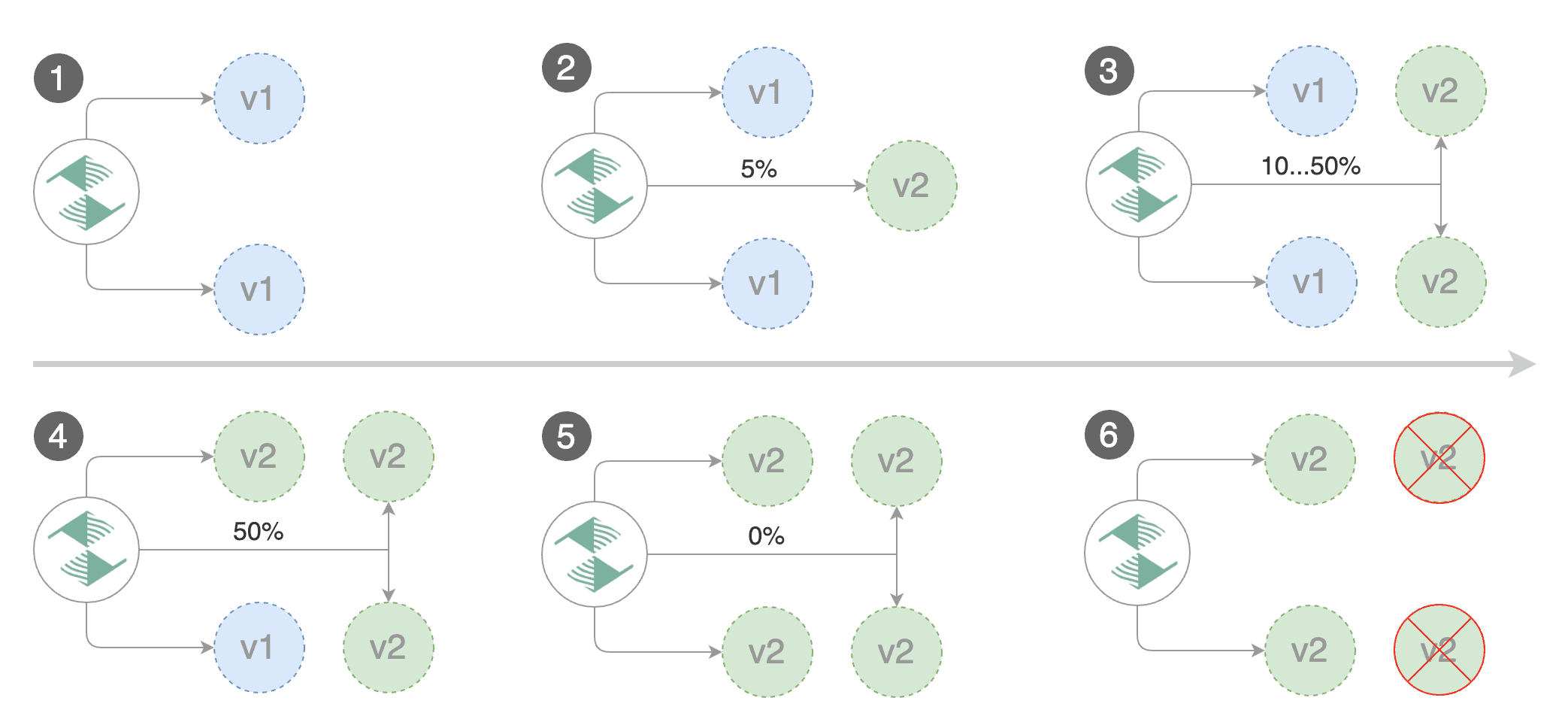

### Canary Deployment

|

||||


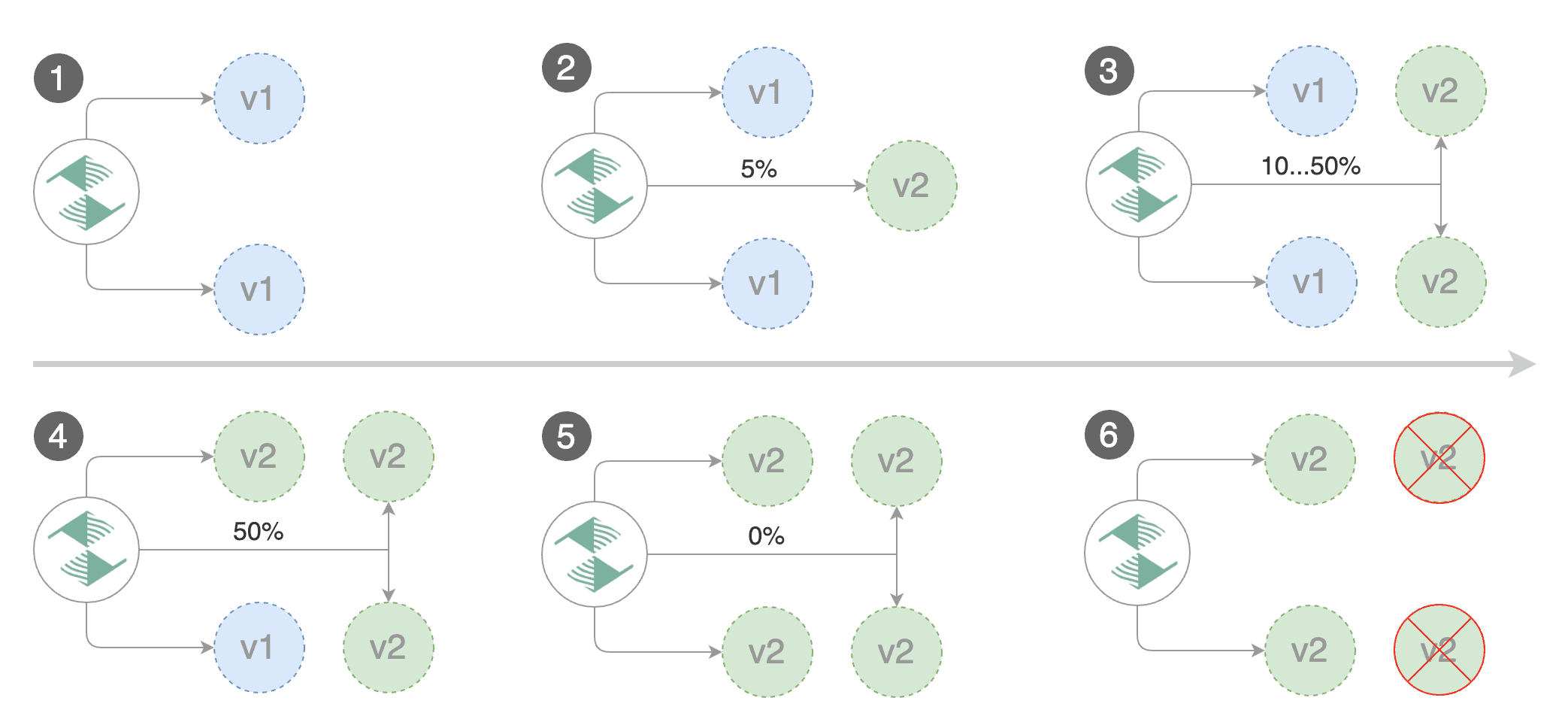

Gated canary promotion stages:

|

||||

|

||||

@@ -99,17 +122,21 @@ Gated canary promotion stages:

|

||||

* halt advancement if pods are unhealthy

|

||||

* route all traffic to primary

|

||||

* scale to zero the canary deployment

|

||||

* mark rollout as finished

|

||||

* mark the canary deployment as finished

|

||||

* wait for the canary deployment to be updated \(revision bump\) and start over

|

||||

|

||||

You can change the canary analysis _max weight_ and the _step weight_ percentage in Flagger's custom resource.

|

||||

|

||||

### Canary Analysis

|

||||

|

||||

The canary analysis runs periodically until it reaches the maximum traffic weight or the failed checks threshold.

|

||||

|

||||

Spec:

|

||||

|

||||

```yaml

|

||||

canaryAnalysis:

|

||||

# schedule interval (default 60s)

|

||||

interval: 1m

|

||||

# max number of failed metric checks before rollback

|

||||

threshold: 10

|

||||

# max traffic percentage routed to canary

|

||||

@@ -117,19 +144,20 @@ Spec:

|

||||

maxWeight: 50

|

||||

# canary increment step

|

||||

# percentage (0-100)

|

||||

stepWeight: 5

|

||||

stepWeight: 2

|

||||

```

|

||||

|

||||

The above analysis, if it succeeds, will run for 25 minutes while validating the HTTP metrics and webhooks every minute.

|

||||

You can determine the minimum time that it takes to validate and promote a canary deployment using this formula:

|

||||

|

||||

```

|

||||

controlLoopInterval * (maxWeight / stepWeight)

|

||||

interval * (maxWeight / stepWeight)

|

||||

```

|

||||

|

||||

And the time it takes for a canary to be rolled back:

|

||||

And the time it takes for a canary to be rolled back when the metrics or webhook checks are failing:

|

||||

|

||||

```

|

||||

controlLoopInterval * threshold

|

||||

interval * threshold

|

||||

```

|

||||

|

||||

### HTTP Metrics

|

||||

@@ -205,10 +233,12 @@ histogram_quantile(0.99,

|

||||

)

|

||||

```

|

||||

|

||||

> **Note** that the metric interval should be lower than or equal to the control loop interval.

|

||||

|

||||

### Webhooks

|

||||

|

||||

The canary analysis can be extended with webhooks.

|

||||

Flagger would call a URL (HTTP POST) and determine from the response status code (HTTP 2xx) if the canary is failing or not.

|

||||

Flagger will call each webhook URL and determine from the response status code (HTTP 2xx) if the canary is failing or not.

|

||||

|

||||

Spec:

|

||||

|

||||

@@ -217,13 +247,21 @@ Spec:

|

||||

webhooks:

|

||||

- name: integration-tests

|

||||

url: http://podinfo.test:9898/echo

|

||||

timeout: 1m

|

||||

timeout: 30s

|

||||

metadata:

|

||||

test: "all"

|

||||

token: "16688eb5e9f289f1991c"

|

||||

- name: load-tests

|

||||

url: http://podinfo.test:9898/echo

|

||||

timeout: 30s

|

||||

metadata:

|

||||

key1: "val1"

|

||||

key2: "val2"

|

||||

```

|

||||

|

||||

Webhook payload:

|

||||

> **Note** that the sum of all webhook timeouts should be lower than the control loop interval.

|

||||

|

||||

Webhook payload (HTTP POST):

|

||||

|

||||

```json

|

||||

{

|

||||

|

||||

@@ -1,10 +1,12 @@

|

||||

# Install Flagger

|

||||

|

||||

Before installing Flagger make sure you have [Istio](https://istio.io) running with Prometheus enabled. If you are new to Istio you can follow this GKE guide [Istio service mesh walk-through](https://docs.flagger.app/install/install-istio).

|

||||

Before installing Flagger make sure you have [Istio](https://istio.io) running with Prometheus enabled.

|

||||

If you are new to Istio you can follow this GKE guide

|

||||

[Istio service mesh walk-through](https://docs.flagger.app/install/install-istio).

|

||||

|

||||

**Prerequisites**

|

||||

|

||||

* Kubernetes >= 1.9

|

||||

* Kubernetes >= 1.11

|

||||

* Istio >= 1.0

|

||||

* Prometheus >= 2.6

|

||||

|

||||

@@ -21,8 +23,7 @@ Deploy Flagger in the _**istio-system**_ namespace:

|

||||

```bash

|

||||

helm upgrade -i flagger flagger/flagger \

|

||||

--namespace=istio-system \

|

||||

--set metricsServer=http://prometheus.istio-system:9090 \

|

||||

--set controlLoopInterval=1m

|

||||

--set metricsServer=http://prometheus.istio-system:9090

|

||||

```

|

||||

|

||||

Enable **Slack** notifications:

|

||||

@@ -61,11 +62,12 @@ helm delete --purge flagger

|

||||

|

||||

The command removes all the Kubernetes components associated with the chart and deletes the release.

|

||||

|

||||

{% hint style="info" %}

|

||||

On uninstall the Flagger CRD will not be removed. Deleting the CRD will make Kubernetes remove all the objects owned by the CRD like Istio virtual services, Kubernetes deployments and ClusterIP services.

|

||||

{% endhint %}

|

||||

> **Note** that on uninstall the Canary CRD will not be removed.

|

||||

Deleting the CRD will make Kubernetes remove all the objects owned by Flagger like Istio virtual services,

|

||||

Kubernetes deployments and ClusterIP services.

|

||||

|

||||

If you want to remove all the objects created by Flagger you have to delete the canary CRD with kubectl:

|

||||

|

||||

If you want to remove all the objects created by Flagger you have to delete the Canary CRD with kubectl:

|

||||

|

||||

```text

|

||||

kubectl delete crd canaries.flagger.app

|

||||

@@ -1,12 +1,14 @@

|

||||

# Install Istio

|

||||

|

||||

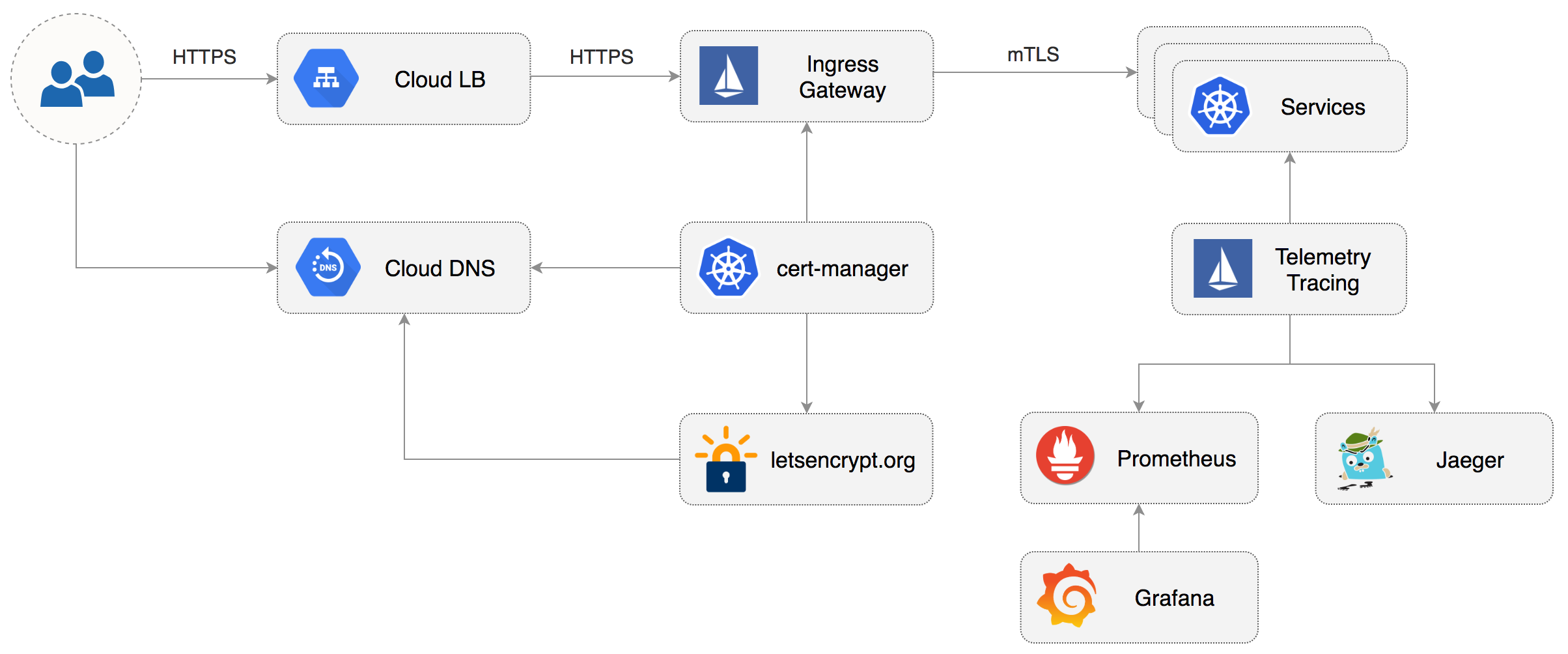

This guide walks you through setting up Istio with Jaeger, Prometheus, Grafana and Let’s Encrypt TLS for ingress gateway on Google Kubernetes Engine.

|

||||

This guide walks you through setting up Istio with Jaeger, Prometheus, Grafana and

|

||||

Let’s Encrypt TLS for ingress gateway on Google Kubernetes Engine.

|

||||

|

||||

|

||||

|

||||

### Prerequisites

|

||||

|

||||

You will be creating a cluster on Google’s Kubernetes Engine \(GKE\), if you don’t have an account you can sign up [here](https://cloud.google.com/free/) for free credits.

|

||||

You will be creating a cluster on Google’s Kubernetes Engine \(GKE\),

|

||||

if you don’t have an account you can sign up [here](https://cloud.google.com/free/) for free credits.

|

||||

|

||||

Log in to GCP, create a project and enable billing for it.

|

||||

|

||||

@@ -64,7 +66,9 @@ gcloud container clusters create istio \

|

||||

--scopes=gke-default,compute-rw,storage-rw

|

||||

```

|

||||

|

||||

The above command will create a default node pool consisting of `n1-highcpu-4` \(vCPU: 4, RAM 3.60GB, DISK: 30GB\) preemptible VMs. Preemptible VMs are up to 80% cheaper than regular instances and are terminated and replaced after a maximum of 24 hours.

|

||||

The above command will create a default node pool consisting of `n1-highcpu-4` \(vCPU: 4, RAM 3.60GB, DISK: 30GB\)

|

||||

preemptible VMs. Preemptible VMs are up to 80% cheaper than regular instances and are terminated and replaced

|

||||

after a maximum of 24 hours.

|

||||

|

||||

Set up credentials for `kubectl`:

|

||||

|

||||

@@ -330,10 +334,11 @@ kubectl create secret generic cert-manager-credentials \

|

||||

--namespace=istio-system

|

||||

```

|

||||

|

||||

Create a letsencrypt issuer for CloudDNS \(replace `email@example.com` with a valid email address and `my-gcp-project` with your project ID\):

|

||||

Create a letsencrypt issuer for CloudDNS \(replace `email@example.com` with a valid email address and

|

||||

`my-gcp-project` with your project ID\):

|

||||

|

||||

```yaml

|

||||

apiVersion: v1alpha2

|

||||

apiVersion: certmanager.k8s.io/v1alpha1

|

||||

kind: Issuer

|

||||

metadata:

|

||||

name: letsencrypt-prod

|

||||

@@ -363,7 +368,7 @@ kubectl apply -f ./letsencrypt-issuer.yaml

|

||||

Create a wildcard certificate \(replace `example.com` with your domain\):

|

||||

|

||||

```yaml

|

||||

apiVersion: v1alpha2

|

||||

apiVersion: certmanager.k8s.io/v1alpha1

|

||||

kind: Certificate

|

||||

metadata:

|

||||

name: istio-gateway

|

||||

@@ -403,7 +408,10 @@ Recreate Istio ingress gateway pods:

|

||||

kubectl -n istio-system delete pods -l istio=ingressgateway

|

||||

```

|

||||

|

||||

Note that Istio gateway doesn't reload the certificates from the TLS secret on cert-manager renewal. Since the GKE cluster is made out of preemptible VMs the gateway pods will be replaced once every 24h. If you're not using preemptible nodes then you need to manually kill the gateway pods every two months before the certificate expires.

|

||||

Note that Istio gateway doesn't reload the certificates from the TLS secret on cert-manager renewal.

|

||||

Since the GKE cluster is made out of preemptible VMs the gateway pods will be replaced once every 24h,

|

||||

if you're not using preemptible nodes then you need to manually kill the gateway pods every two months

|

||||

before the certificate expires.

|

||||

|

||||

### Expose services outside the service mesh

|

||||

|

||||

|

||||

@@ -12,11 +12,13 @@ helm upgrade -i flagger flagger/flagger \

|

||||

--set slack.user=flagger

|

||||

```

|

||||

|

||||

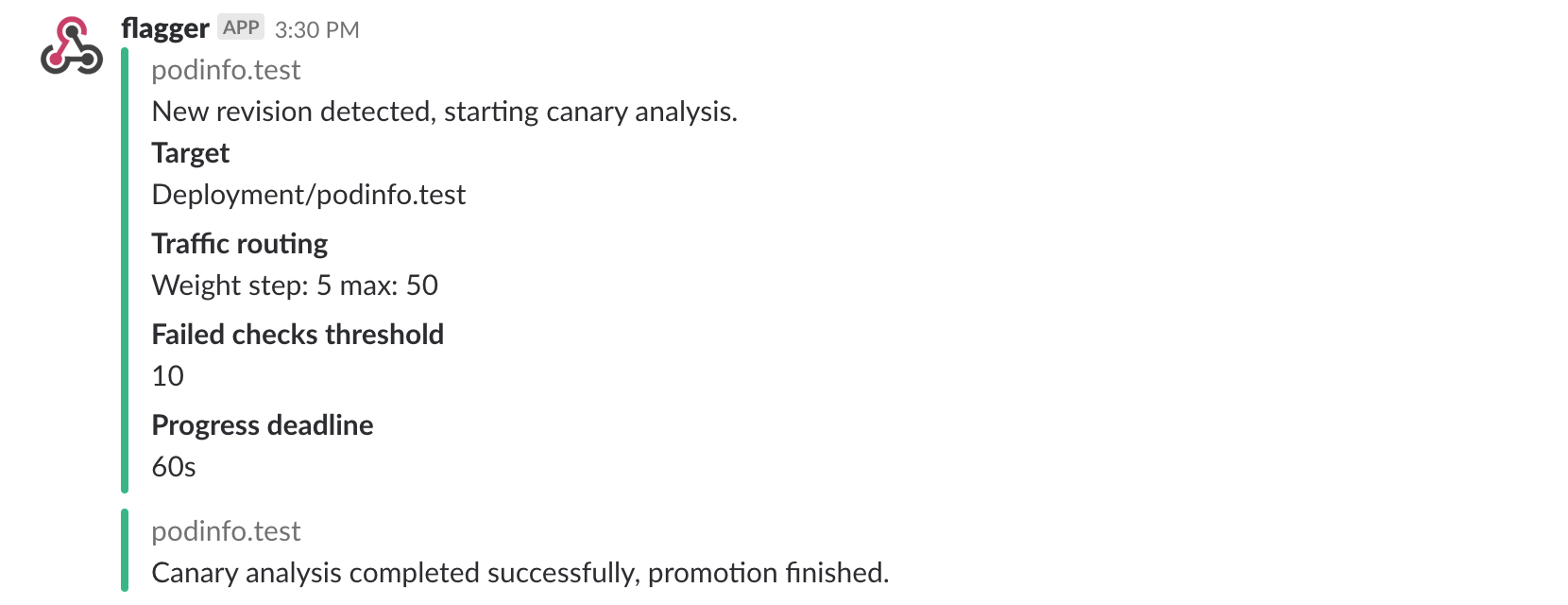

Once configured with a Slack incoming **webhook**, Flagger will post messages when a canary deployment has been initialised, when a new revision has been detected and if the canary analysis failed or succeeded.

|

||||

Once configured with a Slack incoming **webhook**, Flagger will post messages when a canary deployment

|

||||

has been initialised, when a new revision has been detected and if the canary analysis failed or succeeded.

|

||||

|

||||

|

||||

|

||||

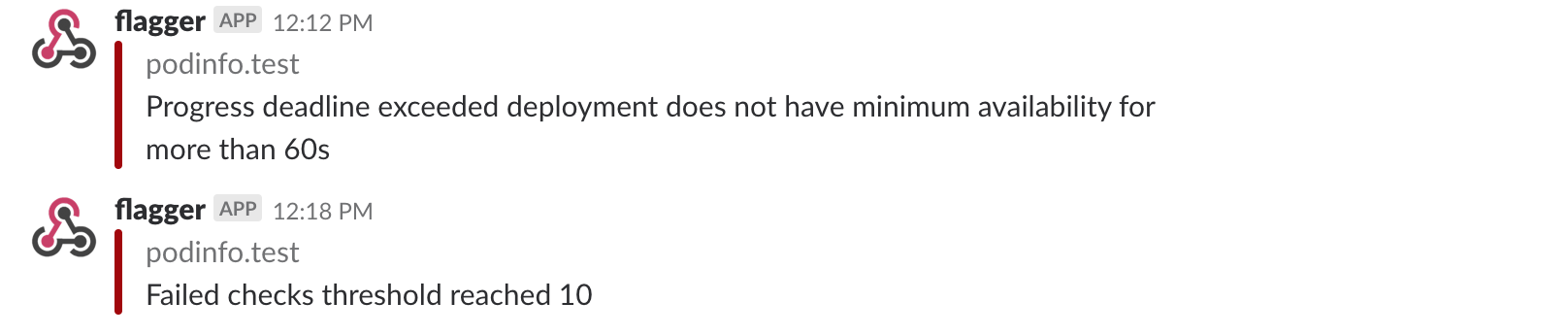

A canary deployment will be rolled back if the progress deadline is exceeded or if the analysis reaches the maximum number of failed checks:

|

||||

A canary deployment will be rolled back if the progress deadline exceeded or if the analysis reached the

|

||||

maximum number of failed checks:

|

||||


@@ -44,7 +44,8 @@ Promotion completed! podinfo.test

|

||||

|

||||

### Metrics

|

||||

|

||||

Flagger exposes Prometheus metrics that can be used to determine the canary analysis status and the destination weight values:

|

||||

Flagger exposes Prometheus metrics that can be used to determine the canary analysis status and

|

||||

the destination weight values:

|

||||

|

||||

```bash

|

||||

# Canaries total gauge

|

||||

@@ -65,5 +66,4 @@ flagger_canary_duration_seconds_sum{name="podinfo",namespace="test"} 17.3561329

|

||||

flagger_canary_duration_seconds_count{name="podinfo",namespace="test"} 6

|

||||

```

|

||||

|

||||

####

|

||||

|

||||

|

||||

@@ -20,7 +20,7 @@ kubectl apply -f ${REPO}/artifacts/canaries/hpa.yaml

|

||||

Create a canary custom resource \(replace example.com with your own domain\):

|

||||

|

||||

```yaml

|

||||

apiVersion: v1alpha2

|

||||

apiVersion: flagger.app/v1alpha3

|

||||

kind: Canary

|

||||

metadata:

|

||||

name: podinfo

|

||||

@@ -49,6 +49,8 @@ spec:

|

||||

hosts:

|

||||

- app.example.com

|

||||

canaryAnalysis:

|

||||

# schedule interval (default 60s)

|

||||

interval: 1m

|

||||

# max number of failed metric checks before rollback

|

||||

threshold: 5

|

||||

# max traffic percentage routed to canary

|

||||

@@ -106,9 +108,9 @@ Flagger detects that the deployment revision changed and starts a new rollout:

|

||||

kubectl -n test describe canary/podinfo

|

||||

|

||||

Status:

|

||||

Canary Revision: 19871136

|

||||

Failed Checks: 0

|

||||

State: finished

|

||||

Canary Weight: 0

|

||||

Failed Checks: 0

|

||||

Phase: Succeeded

|

||||

Events:

|

||||

Type Reason Age From Message

|

||||

---- ------ ---- ---- -------

|

||||

@@ -130,6 +132,17 @@ Events:
Normal Synced 5s flagger Promotion completed! Scaling down podinfo.test
```

You can monitor all canaries with:

```bash
watch kubectl get canaries --all-namespaces

NAMESPACE   NAME       STATUS        WEIGHT   LASTTRANSITIONTIME
test        podinfo    Progressing   15       2019-01-16T14:05:07Z
prod        frontend   Succeeded     0        2019-01-15T16:15:07Z
prod        backend    Failed        0        2019-01-14T17:05:07Z
```

During the canary analysis you can generate HTTP 500 errors and high latency to test if Flagger pauses the rollout.

Create a tester pod and exec into it:

|

||||

@@ -160,9 +173,9 @@ When the number of failed checks reaches the canary analysis threshold, the traf

|

||||

kubectl -n test describe canary/podinfo

|

||||

|

||||

Status:

|

||||

Canary Revision: 16695041

|

||||

Failed Checks: 10

|

||||

State: failed

|

||||

Canary Weight: 0

|

||||

Failed Checks: 10

|

||||

Phase: Failed

|

||||

Events:

|

||||

Type Reason Age From Message

|

||||

---- ------ ---- ---- -------

|

||||

@@ -179,5 +192,3 @@ Events:

|

||||

Warning Synced 1m flagger Canary failed! Scaling down podinfo.test

|

||||

```

|

||||

|

||||

####

|

||||

|

||||

|

||||

Binary file not shown.

@@ -1,9 +1,59 @@

|

||||

apiVersion: v1

|

||||

entries:

|

||||

flagger:

|

||||

- apiVersion: v1

|

||||

appVersion: 0.4.0

|

||||

created: 2019-01-18T12:49:18.099861+02:00

|

||||

description: Flagger is a Kubernetes operator that automates the promotion of

|

||||

canary deployments using Istio routing for traffic shifting and Prometheus metrics

|

||||

for canary analysis.

|

||||

digest: fe06de1c68c6cc414440ef681cde67ae02c771de9b1e4d2d264c38a7a9c37b3d

|

||||

engine: gotpl

|

||||

home: https://docs.flagger.app

|

||||

icon: https://raw.githubusercontent.com/stefanprodan/flagger/master/docs/logo/flagger-icon.png

|

||||

keywords:

|

||||

- canary

|

||||

- istio

|

||||

- gitops

|

||||

kubeVersion: '>=1.11.0-0'

|

||||

maintainers:

|

||||

- email: stefanprodan@users.noreply.github.com

|

||||

name: stefanprodan

|

||||

url: https://github.com/stefanprodan

|

||||

name: flagger

|

||||

sources:

|

||||

- https://github.com/stefanprodan/flagger

|

||||

urls:

|

||||

- https://stefanprodan.github.io/flagger/flagger-0.4.0.tgz

|

||||

version: 0.4.0

|

||||

- apiVersion: v1

|

||||

appVersion: 0.3.0

|

||||

created: 2019-01-18T12:49:18.099501+02:00

|

||||

description: Flagger is a Kubernetes operator that automates the promotion of

|

||||

canary deployments using Istio routing for traffic shifting and Prometheus metrics

|

||||

for canary analysis.

|

||||

digest: 8baa478cc802f4e6b7593934483359b8f70ec34413ca3b8de3a692e347a9bda4

|

||||

engine: gotpl

|

||||

home: https://docs.flagger.app

|

||||

icon: https://raw.githubusercontent.com/stefanprodan/flagger/master/docs/logo/flagger-icon.png

|

||||

keywords:

|

||||

- canary

|

||||

- istio

|

||||

- gitops

|

||||

kubeVersion: '>=1.9.0-0'

|

||||

maintainers:

|

||||

- email: stefanprodan@users.noreply.github.com

|

||||

name: stefanprodan

|

||||

url: https://github.com/stefanprodan

|

||||

name: flagger

|

||||

sources:

|

||||

- https://github.com/stefanprodan/flagger

|

||||

urls:

|

||||

- https://stefanprodan.github.io/flagger/flagger-0.3.0.tgz

|

||||

version: 0.3.0

|

||||

- apiVersion: v1

|

||||

appVersion: 0.2.0

|

||||

created: 2019-01-04T13:38:42.239798+02:00

|

||||

created: 2019-01-18T12:49:18.099162+02:00

|

||||

description: Flagger is a Kubernetes operator that automates the promotion of

|

||||

canary deployments using Istio routing for traffic shifting and Prometheus metrics

|

||||

for canary analysis.

|

||||

@@ -28,7 +78,7 @@ entries:

|

||||

version: 0.2.0

|

||||

- apiVersion: v1

|

||||

appVersion: 0.1.2

|

||||

created: 2019-01-04T13:38:42.239389+02:00

|

||||

created: 2019-01-18T12:49:18.098811+02:00

|

||||

description: Flagger is a Kubernetes operator that automates the promotion of

|

||||

canary deployments using Istio routing for traffic shifting and Prometheus metrics

|

||||

for canary analysis.

|

||||

@@ -53,7 +103,7 @@ entries:

|

||||

version: 0.1.2

|

||||

- apiVersion: v1

|

||||

appVersion: 0.1.1

|

||||

created: 2019-01-04T13:38:42.238504+02:00

|

||||

created: 2019-01-18T12:49:18.098439+02:00

|

||||

description: Flagger is a Kubernetes operator that automates the promotion of

|

||||

canary deployments using Istio routing for traffic shifting and Prometheus metrics

|

||||

for canary analysis.

|

||||

@@ -65,7 +115,7 @@ entries:

|

||||

version: 0.1.1

|

||||

- apiVersion: v1

|

||||

appVersion: 0.1.0

|

||||

created: 2019-01-04T13:38:42.237702+02:00

|

||||

created: 2019-01-18T12:49:18.098153+02:00

|

||||

description: Flagger is a Kubernetes operator that automates the promotion of

|

||||

canary deployments using Istio routing for traffic shifting and Prometheus metrics

|

||||

for canary analysis.

|

||||

@@ -78,9 +128,9 @@ entries:

|

||||

grafana:

|

||||

- apiVersion: v1

|

||||

appVersion: 5.4.2

|

||||

created: 2019-01-04T13:38:42.24034+02:00

|

||||

created: 2019-01-18T12:49:18.100331+02:00

|

||||

description: Grafana dashboards for monitoring Flagger canary deployments

|

||||

digest: f94c0c2eaf7a7db7ef070575d280c37f93922c0e11ebdf203482c9f43603a1c9

|

||||

digest: 97257d1742aca506f8703922d67863c459c1b43177870bc6050d453d19a683c0

|

||||

home: https://flagger.app

|

||||

icon: https://raw.githubusercontent.com/stefanprodan/flagger/master/docs/logo/flagger-icon.png

|

||||

maintainers:

|

||||

@@ -93,4 +143,4 @@ entries:

|

||||

urls:

|

||||

- https://stefanprodan.github.io/flagger/grafana-0.1.0.tgz

|

||||

version: 0.1.0

|

||||

generated: 2019-01-04T13:38:42.236727+02:00

|

||||

generated: 2019-01-18T12:49:18.097682+02:00

|

||||

|

||||

@@ -23,6 +23,6 @@ CODEGEN_PKG=${CODEGEN_PKG:-$(cd ${SCRIPT_ROOT}; ls -d -1 ./vendor/k8s.io/code-ge

|

||||

|

||||

${CODEGEN_PKG}/generate-groups.sh "deepcopy,client,informer,lister" \

|

||||

github.com/stefanprodan/flagger/pkg/client github.com/stefanprodan/flagger/pkg/apis \

|

||||

flagger:v1alpha2 \

|

||||

flagger:v1alpha3 \

|

||||

--go-header-file ${SCRIPT_ROOT}/hack/boilerplate.go.txt

|

||||

|

||||

|

||||

@@ -16,6 +16,6 @@ limitations under the License.

|

||||

|

||||

// +k8s:deepcopy-gen=package

|

||||

|

||||

// Package v1alpha2 is the v1alpha2 version of the API.

|

||||

// Package v1alpha3 is the v1alpha3 version of the API.

|

||||

// +groupName=flagger.app

|

||||

package v1alpha2

|

||||

package v1alpha3

|

||||

@@ -14,7 +14,7 @@ See the License for the specific language governing permissions and

|

||||

limitations under the License.

|

||||

*/

|

||||

|

||||

package v1alpha2

|

||||

package v1alpha3

|

||||

|

||||

import (

|

||||

metav1 "k8s.io/apimachinery/pkg/apis/meta/v1"

|

||||

@@ -25,7 +25,7 @@ import (

|

||||

)

|

||||

|

||||

// SchemeGroupVersion is group version used to register these objects

|

||||

var SchemeGroupVersion = schema.GroupVersion{Group: rollout.GroupName, Version: "v1alpha2"}

|

||||

var SchemeGroupVersion = schema.GroupVersion{Group: rollout.GroupName, Version: "v1alpha3"}

|

||||

|

||||

// Kind takes an unqualified kind and returns back a Group qualified GroupKind

|

||||

func Kind(kind string) schema.GroupKind {

|

||||

@@ -14,16 +14,18 @@ See the License for the specific language governing permissions and

|

||||

limitations under the License.

|

||||

*/

|

||||

|

||||

package v1alpha2

|

||||

package v1alpha3

|

||||

|

||||

import (

|

||||

hpav1 "k8s.io/api/autoscaling/v1"

|

||||

metav1 "k8s.io/apimachinery/pkg/apis/meta/v1"

|

||||

"time"

|

||||

)

|

||||

|

||||

const (

|

||||

CanaryKind = "Canary"

|

||||

ProgressDeadlineSeconds = 600

|

||||

AnalysisInterval = 60 * time.Second

|

||||

)

|

||||

|

||||

// +genclient

|

||||

@@ -44,7 +46,8 @@ type CanarySpec struct {

|

||||

TargetRef hpav1.CrossVersionObjectReference `json:"targetRef"`

|

||||

|

||||

// reference to autoscaling resource

|

||||

AutoscalerRef hpav1.CrossVersionObjectReference `json:"autoscalerRef"`

|

||||

// +optional

|

||||

AutoscalerRef *hpav1.CrossVersionObjectReference `json:"autoscalerRef,omitempty"`

|

||||

|

||||

// virtual service spec

|

||||

Service CanaryService `json:"service"`

|

||||

@@ -53,7 +56,7 @@ type CanarySpec struct {

|

||||

CanaryAnalysis CanaryAnalysis `json:"canaryAnalysis"`

|

||||

|

||||

// the maximum time in seconds for a canary deployment to make progress

|

||||

// before it is considered to be failed. Defaults to 60s.

|

||||

// before it is considered to be failed. Defaults to ten minutes.

|

||||

ProgressDeadlineSeconds *int32 `json:"progressDeadlineSeconds,omitempty"`

|

||||

}

|

||||

|

||||

@@ -67,21 +70,30 @@ type CanaryList struct {

|

||||

Items []Canary `json:"items"`

|

||||

}

|

||||

|

||||

// CanaryState used for status state op

|

||||

type CanaryState string

|

||||

// CanaryPhase is a label for the condition of a canary at the current time

|

||||

type CanaryPhase string

|

||||

|

||||

const (

|

||||

CanaryRunning CanaryState = "running"

|

||||

CanaryFinished CanaryState = "finished"

|

||||

CanaryFailed CanaryState = "failed"

|

||||

CanaryInitialized CanaryState = "initialized"

|

||||

// CanaryInitialized means the primary deployment, hpa and ClusterIP services

|

||||

// have been created along with the Istio virtual service

|

||||

CanaryInitialized CanaryPhase = "Initialized"

|

||||

// CanaryProgressing means the canary analysis is underway

|

||||

CanaryProgressing CanaryPhase = "Progressing"

|

||||

// CanarySucceeded means the canary analysis has been successful

|

||||

// and the canary deployment has been promoted

|

||||

CanarySucceeded CanaryPhase = "Succeeded"

|

||||

// CanaryFailed means the canary analysis failed

|

||||

// and the canary deployment has been scaled to zero

|

||||

CanaryFailed CanaryPhase = "Failed"

|

||||

)

|

||||

|

||||

// CanaryStatus is used for state persistence (read-only)

|

||||

type CanaryStatus struct {

|

||||

State CanaryState `json:"state"`

|

||||

CanaryRevision string `json:"canaryRevision"`

|

||||

FailedChecks int `json:"failedChecks"`

|

||||

Phase CanaryPhase `json:"phase"`

|

||||

FailedChecks int `json:"failedChecks"`

|

||||

CanaryWeight int `json:"canaryWeight"`

|

||||

// +optional

|

||||

LastAppliedSpec string `json:"lastAppliedSpec,omitempty"`

|

||||

// +optional

|

||||

LastTransitionTime metav1.Time `json:"lastTransitionTime,omitempty"`

|

||||

}

|

||||
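This hunk replaces the lowercase `CanaryState` values with capitalized `CanaryPhase` values. A sketch of how a consumer might translate the old strings follows. The `phaseFromLegacyState` helper is hypothetical and not part of the diff. The `finished` to `Succeeded` and `failed` to `Failed` pairs match the README status output changed earlier in this diff, while `running` to `Progressing` is an assumption.

```go
package main

import "fmt"

// CanaryPhase and its values are copied from the v1alpha3 side of the hunk.
type CanaryPhase string

const (
	CanaryInitialized CanaryPhase = "Initialized"
	CanaryProgressing CanaryPhase = "Progressing"
	CanarySucceeded   CanaryPhase = "Succeeded"
	CanaryFailed      CanaryPhase = "Failed"
)

// phaseFromLegacyState is a hypothetical helper that maps a v1alpha2 state
// string to a v1alpha3 phase. The second result is false for unknown input.
func phaseFromLegacyState(state string) (CanaryPhase, bool) {
	switch state {
	case "initialized":
		return CanaryInitialized, true
	case "running":
		return CanaryProgressing, true // assumption: not shown in the diff
	case "finished":
		return CanarySucceeded, true
	case "failed":
		return CanaryFailed, true
	}
	return "", false
}

func main() {
	for _, s := range []string{"initialized", "running", "finished", "failed", "paused"} {
		phase, ok := phaseFromLegacyState(s)
		fmt.Printf("%q -> %q (known: %t)\n", s, phase, ok)
	}
}
```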

@@ -96,6 +108,7 @@ type CanaryService struct {

// CanaryAnalysis is used to describe how the analysis should be done
type CanaryAnalysis struct {
	Interval   string `json:"interval"`
	Threshold  int    `json:"threshold"`
	MaxWeight  int    `json:"maxWeight"`
	StepWeight int    `json:"stepWeight"`

|

||||

@@ -134,3 +147,17 @@ func (c *Canary) GetProgressDeadlineSeconds() int {

	return ProgressDeadlineSeconds
}

// GetAnalysisInterval returns the canary analysis interval (default 60s)
func (c *Canary) GetAnalysisInterval() time.Duration {
	if c.Spec.CanaryAnalysis.Interval == "" {
		return AnalysisInterval
	}

	interval, err := time.ParseDuration(c.Spec.CanaryAnalysis.Interval)
	if err != nil {
		return AnalysisInterval
	}

	return interval
}

|

||||

@@ -18,9 +18,10 @@ limitations under the License.

|

||||

|

||||

// Code generated by deepcopy-gen. DO NOT EDIT.

|

||||

|

||||

package v1alpha2

|

||||

package v1alpha3

|

||||

|

||||

import (

|

||||

v1 "k8s.io/api/autoscaling/v1"

|

||||

runtime "k8s.io/apimachinery/pkg/runtime"

|

||||

)

|

||||

|

||||

@@ -159,7 +160,11 @@ func (in *CanaryService) DeepCopy() *CanaryService {

|

||||

func (in *CanarySpec) DeepCopyInto(out *CanarySpec) {

|

||||

*out = *in

|

||||

out.TargetRef = in.TargetRef

|

||||

out.AutoscalerRef = in.AutoscalerRef

|

||||

if in.AutoscalerRef != nil {

|

||||

in, out := &in.AutoscalerRef, &out.AutoscalerRef

|

||||

*out = new(v1.CrossVersionObjectReference)

|

||||

**out = **in

|

||||

}

|

||||

in.Service.DeepCopyInto(&out.Service)

|

||||

in.CanaryAnalysis.DeepCopyInto(&out.CanaryAnalysis)

|

||||

if in.ProgressDeadlineSeconds != nil {

|

||||
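The regenerated `DeepCopyInto` allocates a new `CrossVersionObjectReference` because `AutoscalerRef` is now a pointer. The sketch below shows the aliasing that a plain struct assignment would leave behind. The `objectRef` and `canarySpec` types and the `deepCopy` method are simplified stand-ins written for this example, not the generated code.

```go
package main

import "fmt"

// objectRef is a simplified stand-in for the referenced autoscaler object.
type objectRef struct {
	Kind string
	Name string
}

// canarySpec keeps only the pointer field that the hunk is about.
type canarySpec struct {
	AutoscalerRef *objectRef
}

// deepCopy copies the struct and, when the pointer is set, the value it
// points to, so the copy no longer shares memory with the original.
func (in canarySpec) deepCopy() canarySpec {
	out := in // copies the pointer itself, not the pointee
	if in.AutoscalerRef != nil {
		ref := *in.AutoscalerRef
		out.AutoscalerRef = &ref
	}
	return out
}

func main() {
	orig := canarySpec{AutoscalerRef: &objectRef{Kind: "HorizontalPodAutoscaler", Name: "podinfo"}}

	shallow := orig        // shares the same objectRef as orig
	deep := orig.deepCopy() // owns its own objectRef

	orig.AutoscalerRef.Name = "changed"

	fmt.Println("shallow:", shallow.AutoscalerRef.Name)
	fmt.Println("deep:   ", deep.AutoscalerRef.Name)
}
```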

@@ -19,7 +19,7 @@ limitations under the License.

|

||||

package versioned

|

||||

|

||||

import (

|

||||

flaggerv1alpha2 "github.com/stefanprodan/flagger/pkg/client/clientset/versioned/typed/flagger/v1alpha2"

|

||||

flaggerv1alpha3 "github.com/stefanprodan/flagger/pkg/client/clientset/versioned/typed/flagger/v1alpha3"

|

||||

discovery "k8s.io/client-go/discovery"

|

||||

rest "k8s.io/client-go/rest"

|

||||

flowcontrol "k8s.io/client-go/util/flowcontrol"

|

||||

@@ -27,27 +27,27 @@ import (

|

||||

|

||||

type Interface interface {

|

||||

Discovery() discovery.DiscoveryInterface

|

||||

FlaggerV1alpha2() flaggerv1alpha2.FlaggerV1alpha2Interface

|

||||

FlaggerV1alpha3() flaggerv1alpha3.FlaggerV1alpha3Interface

|

||||

// Deprecated: please explicitly pick a version if possible.

|

||||

Flagger() flaggerv1alpha2.FlaggerV1alpha2Interface

|

||||

Flagger() flaggerv1alpha3.FlaggerV1alpha3Interface

|

||||

}

|

||||

|

||||

// Clientset contains the clients for groups. Each group has exactly one

|

||||

// version included in a Clientset.

|

||||

type Clientset struct {

|

||||

*discovery.DiscoveryClient

|

||||

flaggerV1alpha2 *flaggerv1alpha2.FlaggerV1alpha2Client

|

||||

flaggerV1alpha3 *flaggerv1alpha3.FlaggerV1alpha3Client

|

||||

}

|

||||

|

||||

// FlaggerV1alpha2 retrieves the FlaggerV1alpha2Client

|

||||

func (c *Clientset) FlaggerV1alpha2() flaggerv1alpha2.FlaggerV1alpha2Interface {

|

||||

return c.flaggerV1alpha2

|

||||

// FlaggerV1alpha3 retrieves the FlaggerV1alpha3Client

|

||||

func (c *Clientset) FlaggerV1alpha3() flaggerv1alpha3.FlaggerV1alpha3Interface {

|

||||

return c.flaggerV1alpha3

|

||||

}

|

||||

|

||||

// Deprecated: Flagger retrieves the default version of FlaggerClient.

|

||||

// Please explicitly pick a version.

|

||||

func (c *Clientset) Flagger() flaggerv1alpha2.FlaggerV1alpha2Interface {

|

||||

return c.flaggerV1alpha2

|

||||

func (c *Clientset) Flagger() flaggerv1alpha3.FlaggerV1alpha3Interface {

|

||||

return c.flaggerV1alpha3

|

||||

}

|

||||

|

||||

// Discovery retrieves the DiscoveryClient

|

||||

@@ -66,7 +66,7 @@ func NewForConfig(c *rest.Config) (*Clientset, error) {

|

||||

}

|

||||

var cs Clientset

|

||||

var err error

|

||||

cs.flaggerV1alpha2, err = flaggerv1alpha2.NewForConfig(&configShallowCopy)

|

||||

cs.flaggerV1alpha3, err = flaggerv1alpha3.NewForConfig(&configShallowCopy)

|

||||

if err != nil {

|

||||

return nil, err

|

||||

}

|

||||

@@ -82,7 +82,7 @@ func NewForConfig(c *rest.Config) (*Clientset, error) {

|

||||

// panics if there is an error in the config.

|

||||

func NewForConfigOrDie(c *rest.Config) *Clientset {

|

||||

var cs Clientset

|

||||

cs.flaggerV1alpha2 = flaggerv1alpha2.NewForConfigOrDie(c)

|

||||

cs.flaggerV1alpha3 = flaggerv1alpha3.NewForConfigOrDie(c)

|

||||

|

||||

cs.DiscoveryClient = discovery.NewDiscoveryClientForConfigOrDie(c)

|

||||

return &cs

|

||||

@@ -91,7 +91,7 @@ func NewForConfigOrDie(c *rest.Config) *Clientset {

|

||||

// New creates a new Clientset for the given RESTClient.

|

||||

func New(c rest.Interface) *Clientset {

|

||||

var cs Clientset

|

||||

cs.flaggerV1alpha2 = flaggerv1alpha2.New(c)

|

||||

cs.flaggerV1alpha3 = flaggerv1alpha3.New(c)

|

||||

|

||||

cs.DiscoveryClient = discovery.NewDiscoveryClient(c)

|

||||

return &cs

|

||||

|

||||

@@ -20,8 +20,8 @@ package fake

|

||||

|

||||

import (

|

||||

clientset "github.com/stefanprodan/flagger/pkg/client/clientset/versioned"

|

||||

flaggerv1alpha2 "github.com/stefanprodan/flagger/pkg/client/clientset/versioned/typed/flagger/v1alpha2"

|

||||

fakeflaggerv1alpha2 "github.com/stefanprodan/flagger/pkg/client/clientset/versioned/typed/flagger/v1alpha2/fake"

|

||||

flaggerv1alpha3 "github.com/stefanprodan/flagger/pkg/client/clientset/versioned/typed/flagger/v1alpha3"

|

||||

fakeflaggerv1alpha3 "github.com/stefanprodan/flagger/pkg/client/clientset/versioned/typed/flagger/v1alpha3/fake"

|

||||

"k8s.io/apimachinery/pkg/runtime"

|

||||

"k8s.io/apimachinery/pkg/watch"

|

||||

"k8s.io/client-go/discovery"

|

||||

@@ -71,12 +71,12 @@ func (c *Clientset) Discovery() discovery.DiscoveryInterface {

|

||||

|

||||

var _ clientset.Interface = &Clientset{}

|

||||

|

||||

// FlaggerV1alpha2 retrieves the FlaggerV1alpha2Client

|

||||

func (c *Clientset) FlaggerV1alpha2() flaggerv1alpha2.FlaggerV1alpha2Interface {

|

||||

return &fakeflaggerv1alpha2.FakeFlaggerV1alpha2{Fake: &c.Fake}

|

||||

// FlaggerV1alpha3 retrieves the FlaggerV1alpha3Client

|

||||

func (c *Clientset) FlaggerV1alpha3() flaggerv1alpha3.FlaggerV1alpha3Interface {

|

||||

return &fakeflaggerv1alpha3.FakeFlaggerV1alpha3{Fake: &c.Fake}

|

||||

}

|

||||

|

||||

// Flagger retrieves the FlaggerV1alpha2Client

|

||||

func (c *Clientset) Flagger() flaggerv1alpha2.FlaggerV1alpha2Interface {

|

||||

return &fakeflaggerv1alpha2.FakeFlaggerV1alpha2{Fake: &c.Fake}

|

||||

// Flagger retrieves the FlaggerV1alpha3Client

|

||||

func (c *Clientset) Flagger() flaggerv1alpha3.FlaggerV1alpha3Interface {

|

||||

return &fakeflaggerv1alpha3.FakeFlaggerV1alpha3{Fake: &c.Fake}

|

||||

}

|

||||

|

||||

@@ -19,7 +19,7 @@ limitations under the License.

|

||||

package fake

|

||||

|

||||

import (

|

||||

flaggerv1alpha2 "github.com/stefanprodan/flagger/pkg/apis/flagger/v1alpha2"

|

||||

flaggerv1alpha3 "github.com/stefanprodan/flagger/pkg/apis/flagger/v1alpha3"

|

||||

v1 "k8s.io/apimachinery/pkg/apis/meta/v1"

|

||||

runtime "k8s.io/apimachinery/pkg/runtime"

|

||||

schema "k8s.io/apimachinery/pkg/runtime/schema"

|

||||

@@ -50,5 +50,5 @@ func init() {

|

||||

// After this, RawExtensions in Kubernetes types will serialize kube-aggregator types

|

||||

// correctly.

|

||||

func AddToScheme(scheme *runtime.Scheme) {

|

||||

flaggerv1alpha2.AddToScheme(scheme)

|

||||

flaggerv1alpha3.AddToScheme(scheme)

|

||||

}

|

||||

|

||||

@@ -19,7 +19,7 @@ limitations under the License.

|

||||

package scheme

|

||||

|

||||

import (

|

||||

flaggerv1alpha2 "github.com/stefanprodan/flagger/pkg/apis/flagger/v1alpha2"

|

||||

flaggerv1alpha3 "github.com/stefanprodan/flagger/pkg/apis/flagger/v1alpha3"

|

||||

v1 "k8s.io/apimachinery/pkg/apis/meta/v1"

|

||||

runtime "k8s.io/apimachinery/pkg/runtime"

|

||||

schema "k8s.io/apimachinery/pkg/runtime/schema"

|

||||

@@ -50,5 +50,5 @@ func init() {

|

||||

// After this, RawExtensions in Kubernetes types will serialize kube-aggregator types

|

||||

// correctly.

|

||||

func AddToScheme(scheme *runtime.Scheme) {

|

||||

flaggerv1alpha2.AddToScheme(scheme)

|

||||

flaggerv1alpha3.AddToScheme(scheme)

|

||||

}

|

||||

|

||||

@@ -16,10 +16,10 @@ limitations under the License.

|

||||

|

||||

// Code generated by client-gen. DO NOT EDIT.

|

||||

|

||||

package v1alpha2

|

||||

package v1alpha3

|

||||

|

||||

import (

|

||||

v1alpha2 "github.com/stefanprodan/flagger/pkg/apis/flagger/v1alpha2"

|

||||

v1alpha3 "github.com/stefanprodan/flagger/pkg/apis/flagger/v1alpha3"

|

||||

scheme "github.com/stefanprodan/flagger/pkg/client/clientset/versioned/scheme"

|

||||

v1 "k8s.io/apimachinery/pkg/apis/meta/v1"

|

||||

types "k8s.io/apimachinery/pkg/types"

|

||||

@@ -35,15 +35,15 @@ type CanariesGetter interface {

|

||||

|

||||

// CanaryInterface has methods to work with Canary resources.

|

||||

type CanaryInterface interface {

|

||||

Create(*v1alpha2.Canary) (*v1alpha2.Canary, error)

|

||||

Update(*v1alpha2.Canary) (*v1alpha2.Canary, error)

|

||||

UpdateStatus(*v1alpha2.Canary) (*v1alpha2.Canary, error)

|

||||

Create(*v1alpha3.Canary) (*v1alpha3.Canary, error)

|

||||

Update(*v1alpha3.Canary) (*v1alpha3.Canary, error)

|

||||

UpdateStatus(*v1alpha3.Canary) (*v1alpha3.Canary, error)

|

||||

Delete(name string, options *v1.DeleteOptions) error

|

||||

DeleteCollection(options *v1.DeleteOptions, listOptions v1.ListOptions) error

|

||||

Get(name string, options v1.GetOptions) (*v1alpha2.Canary, error)

|

||||

List(opts v1.ListOptions) (*v1alpha2.CanaryList, error)

|

||||

Get(name string, options v1.GetOptions) (*v1alpha3.Canary, error)

|

||||

List(opts v1.ListOptions) (*v1alpha3.CanaryList, error)

|

||||

Watch(opts v1.ListOptions) (watch.Interface, error)

|

||||

Patch(name string, pt types.PatchType, data []byte, subresources ...string) (result *v1alpha2.Canary, err error)

|

||||

Patch(name string, pt types.PatchType, data []byte, subresources ...string) (result *v1alpha3.Canary, err error)

|

||||

CanaryExpansion

|

||||

}

|

||||

|

||||

@@ -54,7 +54,7 @@ type canaries struct {

|

||||

}

|

||||

|

||||

// newCanaries returns a Canaries

|

||||

func newCanaries(c *FlaggerV1alpha2Client, namespace string) *canaries {

|

||||

func newCanaries(c *FlaggerV1alpha3Client, namespace string) *canaries {

|

||||

return &canaries{

|

||||

client: c.RESTClient(),

|

||||

ns: namespace,

|

||||

@@ -62,8 +62,8 @@ func newCanaries(c *FlaggerV1alpha2Client, namespace string) *canaries {

|

||||

}

|

||||

|

||||

// Get takes name of the canary, and returns the corresponding canary object, and an error if there is any.

|

||||

func (c *canaries) Get(name string, options v1.GetOptions) (result *v1alpha2.Canary, err error) {

|

||||

result = &v1alpha2.Canary{}

|

||||

func (c *canaries) Get(name string, options v1.GetOptions) (result *v1alpha3.Canary, err error) {

|

||||

result = &v1alpha3.Canary{}

|

||||

err = c.client.Get().

|

||||

Namespace(c.ns).

|

||||

Resource("canaries").

|

||||

@@ -75,8 +75,8 @@ func (c *canaries) Get(name string, options v1.GetOptions) (result *v1alpha2.Can

|

||||

}

|

||||

|

||||

// List takes label and field selectors, and returns the list of Canaries that match those selectors.

|

||||

func (c *canaries) List(opts v1.ListOptions) (result *v1alpha2.CanaryList, err error) {

|

||||

result = &v1alpha2.CanaryList{}

|

||||

func (c *canaries) List(opts v1.ListOptions) (result *v1alpha3.CanaryList, err error) {

|

||||

result = &v1alpha3.CanaryList{}

|

||||

err = c.client.Get().

|

||||

Namespace(c.ns).

|

||||

Resource("canaries").

|

||||

@@ -97,8 +97,8 @@ func (c *canaries) Watch(opts v1.ListOptions) (watch.Interface, error) {

|

||||

}

|

||||

|

||||

// Create takes the representation of a canary and creates it. Returns the server's representation of the canary, and an error, if there is any.

|

||||

func (c *canaries) Create(canary *v1alpha2.Canary) (result *v1alpha2.Canary, err error) {

|

||||

result = &v1alpha2.Canary{}

|

||||

func (c *canaries) Create(canary *v1alpha3.Canary) (result *v1alpha3.Canary, err error) {

|

||||

result = &v1alpha3.Canary{}

|

||||

err = c.client.Post().

|

||||

Namespace(c.ns).

|

||||

Resource("canaries").

|

||||

@@ -109,8 +109,8 @@ func (c *canaries) Create(canary *v1alpha2.Canary) (result *v1alpha2.Canary, err

|

||||

}

|

||||

|

||||

// Update takes the representation of a canary and updates it. Returns the server's representation of the canary, and an error, if there is any.

|

||||

func (c *canaries) Update(canary *v1alpha2.Canary) (result *v1alpha2.Canary, err error) {

|

||||

result = &v1alpha2.Canary{}

|

||||

func (c *canaries) Update(canary *v1alpha3.Canary) (result *v1alpha3.Canary, err error) {

|

||||

result = &v1alpha3.Canary{}

|

||||

err = c.client.Put().

|

||||

Namespace(c.ns).

|

||||

Resource("canaries").

|

||||

@@ -124,8 +124,8 @@ func (c *canaries) Update(canary *v1alpha2.Canary) (result *v1alpha2.Canary, err

|

||||

// UpdateStatus was generated because the type contains a Status member.

|

||||

// Add a +genclient:noStatus comment above the type to avoid generating UpdateStatus().

|

||||

|

||||

func (c *canaries) UpdateStatus(canary *v1alpha2.Canary) (result *v1alpha2.Canary, err error) {

|

||||

result = &v1alpha2.Canary{}

|

||||

func (c *canaries) UpdateStatus(canary *v1alpha3.Canary) (result *v1alpha3.Canary, err error) {

|

||||

result = &v1alpha3.Canary{}

|

||||

err = c.client.Put().

|

||||

Namespace(c.ns).

|

||||

Resource("canaries").

|

||||

@@ -160,8 +160,8 @@ func (c *canaries) DeleteCollection(options *v1.DeleteOptions, listOptions v1.Li

|

||||

}

|

||||

|

||||

// Patch applies the patch and returns the patched canary.

|

||||

func (c *canaries) Patch(name string, pt types.PatchType, data []byte, subresources ...string) (result *v1alpha2.Canary, err error) {

|

||||

result = &v1alpha2.Canary{}

|

||||

func (c *canaries) Patch(name string, pt types.PatchType, data []byte, subresources ...string) (result *v1alpha3.Canary, err error) {

|

||||

result = &v1alpha3.Canary{}

|

||||

err = c.client.Patch(pt).

|

||||

Namespace(c.ns).

|

||||

Resource("canaries").

|

||||