mirror of

https://github.com/fluxcd/flagger.git

synced 2026-02-15 18:40:12 +00:00

Compare commits

94 Commits

| Author | SHA1 | Date | |
|---|---|---|---|
| | e7fc72e6b5 | | |
| | 4203232b05 | | |
| | a06aa05201 | | |
| | 8e582e9b73 | | |
| | 0e9fe8a446 | | |
| | 27b4bcc648 | | |
| | 614b7c74c4 | | |
| | 5901129ec6 | | |
| | ded14345b4 | | |
| | dd272c6870 | | |
| | b31c7c6230 | | |
| | b0297213c3 | | |
| | d0fba2d111 | | |
| | 9924cc2152 | | |
| | 008a74f86c | | |
| | 4ca110292f | | |
| | 55b4c19670 | | |
| | 8349dd1cda | | |
| | 402fb66b2a | | |
| | f991274b97 | | |
| | 0d94a49b6a | | |
| | 7c14225442 | | |
| | 2af0a050bc | | |
| | 582f8d6abd | | |
| | eeea3123ac | | |
| | 51fe43e169 | | |
| | 6e6b127092 | | |
| | c9bacdfe05 | | |
| | f56a69770c | | |
| | 0196124c9f | | |
| | 63756d9d5f | | |
| | 8e346960ac | | |
| | 1b485b3459 | | |
| | ee05108279 | | |
| | dfaa039c9c | | |
| | 46579d2ee6 | | |
| | f372523fb8 | | |
| | 5e434df6ea | | |
| | d6c5bdd241 | | |
| | cdcd97244c | | |
| | 60c4bba263 | | |
| | 2b73bc5e38 | | |
| | 03652dc631 | | |
| | 00155aff37 | | |
| | 206c3e6d7a | | |
| | 8345fea812 | | |
| | c11dba1e05 | | |
| | 7d4c3c5814 | | |
| | 9b36794c9d | | |
| | 1f34c656e9 | | |
| | 9982dc9c83 | | |
| | 780f3d2ab9 | | |
| | 1cb09890fb | | |
| | faae6a7c3b | | |
| | d4250f3248 | | |
| | a8ee477b62 | | |
| | 673b6102a7 | | |
| | 316de42a2c | | |
| | dfb4b35e6c | | |
| | 61ab596d1b | | |
| | 3345692751 | | |
| | dff9287c75 | | |
| | b5fb7cdae5 | | |
| | 2e79817437 | | |
| | 5f439adc36 | | |
| | 45df96ff3c | | |
| | 98ee150364 | | |
| | d328a2146a | | |
| | 4513f2e8be | | |
| | 095fef1de6 | | |
| | 754f02a30f | | |
| | 01a4e7f6a8 | | |
| | 6bba84422d | | |
| | 26190d0c6a | | |
| | 2d9098e43c | | |
| | 7581b396b2 | | |
| | 67a6366906 | | |
| | 5605fab740 | | |
| | b76d0001ed | | |
| | 625eed0840 | | |
| | 37f9151de3 | | |
| | 20af98e4dc | | |
| | 76800d0ed0 | | |
| | 3103bde7f7 | | |
| | 298d8c2d65 | | |
| | 5cdacf81e3 | | |
| | 2141d88ce1 | | |
| | e8a2d4be2e | | |
| | 9a9baadf0e | | |
| | a21e53fa31 | | |
| | 61f8aea7d8 | | |
| | e384b03d49 | | |
| | 655df36913 | | |
| | 2e079ba7a1 | | |

@@ -10,6 +10,9 @@ jobs:

|

||||

- restore_cache:

|

||||

keys:

|

||||

- go-mod-v3-{{ checksum "go.sum" }}

|

||||

- run:

|

||||

name: Run go mod download

|

||||

command: go mod download

|

||||

- run:

|

||||

name: Run go fmt

|

||||

command: make test-fmt

|

||||

@@ -100,17 +103,6 @@ jobs:

|

||||

- run: test/e2e-smi-istio.sh

|

||||

- run: test/e2e-tests.sh canary

|

||||

|

||||

e2e-supergloo-testing:

|

||||

machine: true

|

||||

steps:

|

||||

- checkout

|

||||

- attach_workspace:

|

||||

at: /tmp/bin

|

||||

- run: test/container-build.sh

|

||||

- run: test/e2e-kind.sh 0.2.1

|

||||

- run: test/e2e-supergloo.sh

|

||||

- run: test/e2e-tests.sh canary

|

||||

|

||||

e2e-gloo-testing:

|

||||

machine: true

|

||||

steps:

|

||||

@@ -203,9 +195,6 @@ workflows:

|

||||

- e2e-kubernetes-testing:

|

||||

requires:

|

||||

- build-binary

|

||||

# - e2e-supergloo-testing:

|

||||

# requires:

|

||||

# - build-binary

|

||||

- e2e-gloo-testing:

|

||||

requires:

|

||||

- build-binary

|

||||

@@ -220,7 +209,6 @@ workflows:

|

||||

- build-binary

|

||||

- e2e-istio-testing

|

||||

- e2e-kubernetes-testing

|

||||

#- e2e-supergloo-testing

|

||||

- e2e-gloo-testing

|

||||

- e2e-nginx-testing

|

||||

- e2e-linkerd-testing

|

||||

|

||||

65

CHANGELOG.md


@@ -2,6 +2,71 @@


All notable changes to this project are documented in this file.

## 0.20.2 (2019-11-07)

Adds support for exposing canaries outside the cluster using App Mesh Gateway annotations

#### Improvements

- Expose canaries on public domains with App Mesh Gateway [#358](https://github.com/weaveworks/flagger/pull/358)

#### Fixes

- Use the specified replicas when scaling up the canary [#363](https://github.com/weaveworks/flagger/pull/363)

## 0.20.1 (2019-11-03)

Fixes promql execution and updates the load testing tools

#### Improvements

- Update load tester Helm tools [#8349dd1](https://github.com/weaveworks/flagger/commit/8349dd1cda59a741c7bed9a0f67c0fc0fbff4635)
- e2e testing: update providers [#346](https://github.com/weaveworks/flagger/pull/346)

#### Fixes

- Fix Prometheus query escape [#353](https://github.com/weaveworks/flagger/pull/353)
- Updating hey release link [#350](https://github.com/weaveworks/flagger/pull/350)

## 0.20.0 (2019-10-21)

Adds support for [A/B Testing](https://docs.flagger.app/usage/progressive-delivery#traffic-mirroring) and retry policies when using App Mesh

#### Features

- Implement App Mesh A/B testing based on HTTP headers match conditions [#340](https://github.com/weaveworks/flagger/pull/340)
- Implement App Mesh HTTP retry policy [#338](https://github.com/weaveworks/flagger/pull/338)
- Implement metrics server override [#342](https://github.com/weaveworks/flagger/pull/342)

#### Improvements

- Add the app/name label to services and primary deployment [#333](https://github.com/weaveworks/flagger/pull/333)
- Allow setting Slack and Teams URLs with env vars [#334](https://github.com/weaveworks/flagger/pull/334)
- Refactor Gloo integration [#344](https://github.com/weaveworks/flagger/pull/344)

#### Fixes

- Generate unique names for App Mesh virtual routers and routes [#336](https://github.com/weaveworks/flagger/pull/336)

## 0.19.0 (2019-10-08)

Adds support for canary and blue/green [traffic mirroring](https://docs.flagger.app/usage/progressive-delivery#traffic-mirroring)

#### Features

- Add traffic mirroring for Istio service mesh [#311](https://github.com/weaveworks/flagger/pull/311)
- Implement canary service target port [#327](https://github.com/weaveworks/flagger/pull/327)

#### Improvements

- Allow gRPC protocol for App Mesh [#325](https://github.com/weaveworks/flagger/pull/325)
- Enforce blue/green when using Kubernetes networking [#326](https://github.com/weaveworks/flagger/pull/326)

#### Fixes

- Fix port discovery diff [#324](https://github.com/weaveworks/flagger/pull/324)
- Helm chart: Enable Prometheus scraping of Flagger metrics [#2141d88](https://github.com/weaveworks/flagger/commit/2141d88ce1cc6be220dab34171c215a334ecde24)

## 0.18.6 (2019-10-03)

Adds support for App Mesh conformance tests and latency metric checks

@@ -6,19 +6,22 @@ RUN addgroup -S app \

|

||||

|

||||

WORKDIR /home/app

|

||||

|

||||

RUN curl -sSLo hey "https://storage.googleapis.com/jblabs/dist/hey_linux_v0.1.2" && \

|

||||

RUN curl -sSLo hey "https://storage.googleapis.com/hey-release/hey_linux_amd64" && \

|

||||

chmod +x hey && mv hey /usr/local/bin/hey

|

||||

|

||||

RUN curl -sSL "https://get.helm.sh/helm-v2.14.3-linux-amd64.tar.gz" | tar xvz && \

|

||||

# verify hey works

|

||||

RUN hey -n 1 -c 1 https://flagger.app > /dev/null && echo $? | grep 0

|

||||

|

||||

RUN curl -sSL "https://get.helm.sh/helm-v2.15.1-linux-amd64.tar.gz" | tar xvz && \

|

||||

chmod +x linux-amd64/helm && mv linux-amd64/helm /usr/local/bin/helm && \

|

||||

chmod +x linux-amd64/tiller && mv linux-amd64/tiller /usr/local/bin/tiller && \

|

||||

rm -rf linux-amd64

|

||||

|

||||

RUN curl -sSL "https://get.helm.sh/helm-v3.0.0-beta.3-linux-amd64.tar.gz" | tar xvz && \

|

||||

RUN curl -sSL "https://get.helm.sh/helm-v3.0.0-rc.2-linux-amd64.tar.gz" | tar xvz && \

|

||||

chmod +x linux-amd64/helm && mv linux-amd64/helm /usr/local/bin/helmv3 && \

|

||||

rm -rf linux-amd64

|

||||

|

||||

RUN GRPC_HEALTH_PROBE_VERSION=v0.3.0 && \

|

||||

RUN GRPC_HEALTH_PROBE_VERSION=v0.3.1 && \

|

||||

wget -qO /usr/local/bin/grpc_health_probe https://github.com/grpc-ecosystem/grpc-health-probe/releases/download/${GRPC_HEALTH_PROBE_VERSION}/grpc_health_probe-linux-amd64 && \

|

||||

chmod +x /usr/local/bin/grpc_health_probe

|

||||

|

||||

@@ -35,7 +38,7 @@ RUN chown -R app:app ./

|

||||

|

||||

USER app

|

||||

|

||||

RUN curl -sSL "https://github.com/rimusz/helm-tiller/archive/v0.8.3.tar.gz" | tar xvz && \

|

||||

helm init --client-only && helm plugin install helm-tiller-0.8.3 && helm plugin list

|

||||

RUN curl -sSL "https://github.com/rimusz/helm-tiller/archive/v0.9.3.tar.gz" | tar xvz && \

|

||||

helm init --client-only && helm plugin install helm-tiller-0.9.3 && helm plugin list

|

||||

|

||||

ENTRYPOINT ["./loadtester"]

|

||||

|

||||

40

README.md


@@ -39,7 +39,6 @@ Flagger documentation can be found at [docs.flagger.app](https://docs.flagger.ap

|

||||

* [FAQ](https://docs.flagger.app/faq)

|

||||

* Usage

|

||||

* [Istio canary deployments](https://docs.flagger.app/usage/progressive-delivery)

|

||||

* [Istio A/B testing](https://docs.flagger.app/usage/ab-testing)

|

||||

* [Linkerd canary deployments](https://docs.flagger.app/usage/linkerd-progressive-delivery)

|

||||

* [App Mesh canary deployments](https://docs.flagger.app/usage/appmesh-progressive-delivery)

|

||||

* [NGINX ingress controller canary deployments](https://docs.flagger.app/usage/nginx-progressive-delivery)

|

||||

@@ -70,7 +69,6 @@ metadata:

|

||||

spec:

|

||||

# service mesh provider (optional)

|

||||

# can be: kubernetes, istio, linkerd, appmesh, nginx, gloo, supergloo

|

||||

# use the kubernetes provider for Blue/Green style deployments

|

||||

provider: istio

|

||||

# deployment reference

|

||||

targetRef:

|

||||

@@ -86,14 +84,12 @@ spec:

|

||||

kind: HorizontalPodAutoscaler

|

||||

name: podinfo

|

||||

service:

|

||||

# container port

|

||||

# ClusterIP port number

|

||||

port: 9898

|

||||

# Istio gateways (optional)

|

||||

gateways:

|

||||

- public-gateway.istio-system.svc.cluster.local

|

||||

# Istio virtual service host names (optional)

|

||||

hosts:

|

||||

- podinfo.example.com

|

||||

# container port name or number (optional)

|

||||

targetPort: 9898

|

||||

# port name can be http or grpc (default http)

|

||||

portName: http

|

||||

# HTTP match conditions (optional)

|

||||

match:

|

||||

- uri:

|

||||

@@ -101,10 +97,6 @@ spec:

|

||||

# HTTP rewrite (optional)

|

||||

rewrite:

|

||||

uri: /

|

||||

# cross-origin resource sharing policy (optional)

|

||||

corsPolicy:

|

||||

allowOrigin:

|

||||

- example.com

|

||||

# request timeout (optional)

|

||||

timeout: 5s

|

||||

# promote the canary without analysing it (default false)

|

||||

@@ -144,7 +136,7 @@ spec:

|

||||

topic="podinfo"

|

||||

}[1m]

|

||||

)

|

||||

# external checks (optional)

|

||||

# testing (optional)

|

||||

webhooks:

|

||||

- name: load-test

|

||||

url: http://flagger-loadtester.test/

|

||||

@@ -157,15 +149,17 @@ For more details on how the canary analysis and promotion works please [read the

|

||||

|

||||

## Features

|

||||

|

||||

| Feature | Istio | Linkerd | App Mesh | NGINX | Gloo |

|

||||

| -------------------------------------------- | ------------------ | ------------------ |------------------ |------------------ |------------------ |

|

||||

| Canary deployments (weighted traffic) | :heavy_check_mark: | :heavy_check_mark: | :heavy_check_mark: | :heavy_check_mark: | :heavy_check_mark: |

|

||||

| A/B testing (headers and cookies filters) | :heavy_check_mark: | :heavy_minus_sign: | :heavy_minus_sign: | :heavy_check_mark: | :heavy_minus_sign: |

|

||||

| Webhooks (acceptance/load testing) | :heavy_check_mark: | :heavy_check_mark: | :heavy_check_mark: | :heavy_check_mark: | :heavy_check_mark: |

|

||||

| Request success rate check (L7 metric) | :heavy_check_mark: | :heavy_check_mark: | :heavy_check_mark: | :heavy_check_mark: | :heavy_check_mark: |

|

||||

| Request duration check (L7 metric) | :heavy_check_mark: | :heavy_check_mark: | :heavy_check_mark: | :heavy_check_mark: | :heavy_check_mark: |

|

||||

| Custom promql checks | :heavy_check_mark: | :heavy_check_mark: | :heavy_check_mark: | :heavy_check_mark: | :heavy_check_mark: |

|

||||

| Traffic policy, CORS, retries and timeouts | :heavy_check_mark: | :heavy_minus_sign: | :heavy_minus_sign: | :heavy_minus_sign: | :heavy_minus_sign: |

|

||||

| Feature | Istio | Linkerd | App Mesh | NGINX | Gloo | Kubernetes CNI |

|

||||

| -------------------------------------------- | ------------------ | ------------------ |------------------ |------------------ |------------------ |------------------ |

|

||||

| Canary deployments (weighted traffic) | :heavy_check_mark: | :heavy_check_mark: | :heavy_check_mark: | :heavy_check_mark: | :heavy_check_mark: | :heavy_minus_sign: |

|

||||

| A/B testing (headers and cookies routing) | :heavy_check_mark: | :heavy_minus_sign: | :heavy_check_mark: | :heavy_check_mark: | :heavy_minus_sign: | :heavy_minus_sign: |

|

||||

| Blue/Green deployments (traffic switch) | :heavy_check_mark: | :heavy_check_mark: | :heavy_check_mark: | :heavy_check_mark: | :heavy_check_mark: | :heavy_check_mark: |

|

||||

| Webhooks (acceptance/load testing) | :heavy_check_mark: | :heavy_check_mark: | :heavy_check_mark: | :heavy_check_mark: | :heavy_check_mark: | :heavy_check_mark: |

|

||||

| Manual gating (approve/pause/resume) | :heavy_check_mark: | :heavy_check_mark: | :heavy_check_mark: | :heavy_check_mark: | :heavy_check_mark: | :heavy_check_mark: |

|

||||

| Request success rate check (L7 metric) | :heavy_check_mark: | :heavy_check_mark: | :heavy_check_mark: | :heavy_check_mark: | :heavy_check_mark: | :heavy_minus_sign: |

|

||||

| Request duration check (L7 metric) | :heavy_check_mark: | :heavy_check_mark: | :heavy_check_mark: | :heavy_check_mark: | :heavy_check_mark: | :heavy_minus_sign: |

|

||||

| Custom promql checks | :heavy_check_mark: | :heavy_check_mark: | :heavy_check_mark: | :heavy_check_mark: | :heavy_check_mark: | :heavy_check_mark: |

|

||||

| Traffic policy, CORS, retries and timeouts | :heavy_check_mark: | :heavy_minus_sign: | :heavy_minus_sign: | :heavy_minus_sign: | :heavy_minus_sign: | :heavy_minus_sign: |

|

||||

|

||||

## Roadmap

|

||||

|

||||

|

||||

@@ -1,67 +0,0 @@

|

||||

apiVersion: apps/v1

|

||||

kind: Deployment

|

||||

metadata:

|

||||

name: abtest

|

||||

namespace: test

|

||||

labels:

|

||||

app: abtest

|

||||

spec:

|

||||

minReadySeconds: 5

|

||||

revisionHistoryLimit: 5

|

||||

progressDeadlineSeconds: 60

|

||||

strategy:

|

||||

rollingUpdate:

|

||||

maxUnavailable: 0

|

||||

type: RollingUpdate

|

||||

selector:

|

||||

matchLabels:

|

||||

app: abtest

|

||||

template:

|

||||

metadata:

|

||||

annotations:

|

||||

prometheus.io/scrape: "true"

|

||||

labels:

|

||||

app: abtest

|

||||

spec:

|

||||

containers:

|

||||

- name: podinfod

|

||||

image: quay.io/stefanprodan/podinfo:1.7.0

|

||||

imagePullPolicy: IfNotPresent

|

||||

ports:

|

||||

- containerPort: 9898

|

||||

name: http

|

||||

protocol: TCP

|

||||

command:

|

||||

- ./podinfo

|

||||

- --port=9898

|

||||

- --level=info

|

||||

- --random-delay=false

|

||||

- --random-error=false

|

||||

env:

|

||||

- name: PODINFO_UI_COLOR

|

||||

value: blue

|

||||

livenessProbe:

|

||||

exec:

|

||||

command:

|

||||

- podcli

|

||||

- check

|

||||

- http

|

||||

- localhost:9898/healthz

|

||||

initialDelaySeconds: 5

|

||||

timeoutSeconds: 5

|

||||

readinessProbe:

|

||||

exec:

|

||||

command:

|

||||

- podcli

|

||||

- check

|

||||

- http

|

||||

- localhost:9898/readyz

|

||||

initialDelaySeconds: 5

|

||||

timeoutSeconds: 5

|

||||

resources:

|

||||

limits:

|

||||

cpu: 2000m

|

||||

memory: 512Mi

|

||||

requests:

|

||||

cpu: 100m

|

||||

memory: 64Mi

|

||||

@@ -1,19 +0,0 @@

|

||||

apiVersion: autoscaling/v2beta1

|

||||

kind: HorizontalPodAutoscaler

|

||||

metadata:

|

||||

name: abtest

|

||||

namespace: test

|

||||

spec:

|

||||

scaleTargetRef:

|

||||

apiVersion: apps/v1

|

||||

kind: Deployment

|

||||

name: abtest

|

||||

minReplicas: 2

|

||||

maxReplicas: 4

|

||||

metrics:

|

||||

- type: Resource

|

||||

resource:

|

||||

name: cpu

|

||||

# scale up if usage is above

|

||||

# 99% of the requested CPU (100m)

|

||||

targetAverageUtilization: 99

|

||||

@@ -20,8 +20,16 @@ spec:

|

||||

service:

|

||||

# container port

|

||||

port: 9898

|

||||

# container port name (optional)

|

||||

# can be http or grpc

|

||||

portName: http

|

||||

# App Mesh reference

|

||||

meshName: global

|

||||

# App Mesh retry policy (optional)

|

||||

retries:

|

||||

attempts: 3

|

||||

perTryTimeout: 1s

|

||||

retryOn: "gateway-error,client-error,stream-error"

|

||||

# define the canary analysis timing and KPIs

|

||||

canaryAnalysis:

|

||||

# schedule interval (default 60s)

|

||||

|

||||

@@ -1,14 +1,14 @@

|

||||

apiVersion: flagger.app/v1alpha3

|

||||

kind: Canary

|

||||

metadata:

|

||||

name: abtest

|

||||

name: podinfo

|

||||

namespace: test

|

||||

spec:

|

||||

# deployment reference

|

||||

targetRef:

|

||||

apiVersion: apps/v1

|

||||

kind: Deployment

|

||||

name: abtest

|

||||

name: podinfo

|

||||

# the maximum time in seconds for the canary deployment

|

||||

# to make progress before it is rolled back (default 600s)

|

||||

progressDeadlineSeconds: 60

|

||||

@@ -16,7 +16,7 @@ spec:

|

||||

autoscalerRef:

|

||||

apiVersion: autoscaling/v2beta1

|

||||

kind: HorizontalPodAutoscaler

|

||||

name: abtest

|

||||

name: podinfo

|

||||

service:

|

||||

# container port

|

||||

port: 9898

|

||||

@@ -26,7 +26,12 @@ spec:

|

||||

- mesh

|

||||

# Istio virtual service host names (optional)

|

||||

hosts:

|

||||

- abtest.istio.weavedx.com

|

||||

- app.example.com

|

||||

# Istio traffic policy (optional)

|

||||

trafficPolicy:

|

||||

tls:

|

||||

# use ISTIO_MUTUAL when mTLS is enabled

|

||||

mode: DISABLE

|

||||

canaryAnalysis:

|

||||

# schedule interval (default 60s)

|

||||

interval: 10s

|

||||

@@ -36,12 +41,12 @@ spec:

|

||||

iterations: 10

|

||||

# canary match condition

|

||||

match:

|

||||

- headers:

|

||||

user-agent:

|

||||

regex: "^(?!.*Chrome)(?=.*\bSafari\b).*$"

|

||||

- headers:

|

||||

cookie:

|

||||

regex: "^(.*?;)?(type=insider)(;.*)?$"

|

||||

- headers:

|

||||

user-agent:

|

||||

regex: "(?=.*Safari)(?!.*Chrome).*$"

|

||||

metrics:

|

||||

- name: request-success-rate

|

||||

# minimum req success rate (non 5xx responses)

|

||||
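The header match conditions in this hunk can be checked offline. The following is a minimal sketch, not Flagger or Istio code: it takes the cookie pattern and the second user-agent pattern shown above and tests them against sample header values. The helper name `routes_to_canary`, the sample headers, the whole-value matching, and the OR between the two rules are assumptions made for illustration; Python's `re` engine stands in for the proxy's regex engine, which may behave differently on edge cases.

```python
import re

# Patterns copied from the match conditions in the hunk above.
COOKIE_PATTERN = re.compile(r"^(.*?;)?(type=insider)(;.*)?$")
USER_AGENT_PATTERN = re.compile(r"(?=.*Safari)(?!.*Chrome).*$")


def routes_to_canary(headers):
    """Hypothetical helper: True if either header rule matches.

    Assumes each pattern is applied to the whole header value and that
    the two list entries are alternatives (OR), not a conjunction.
    """
    cookie = headers.get("cookie", "")
    user_agent = headers.get("user-agent", "")
    cookie_hit = COOKIE_PATTERN.fullmatch(cookie) is not None
    agent_hit = USER_AGENT_PATTERN.fullmatch(user_agent) is not None
    return cookie_hit or agent_hit


if __name__ == "__main__":
    samples = [
        {"cookie": "type=insider"},
        {"cookie": "session=abc;type=insider;lang=en"},
        {"user-agent": "Mozilla/5.0 Version/13.0 Safari/605.1.15"},
        {"user-agent": "Mozilla/5.0 Chrome/78.0 Safari/537.36"},
    ]
    for sample in samples:
        print(sample, routes_to_canary(sample))
```

Note that the cookie pattern expects `type=insider` to be delimited by bare semicolons, so a value such as `session=abc; type=insider` (with a space after the semicolon) would not match under these assumptions.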

@@ -37,7 +37,7 @@ spec:

|

||||

- mesh

|

||||

# Istio virtual service host names (optional)

|

||||

hosts:

|

||||

- app.istio.weavedx.com

|

||||

- app.example.com

|

||||

# Istio traffic policy (optional)

|

||||

trafficPolicy:

|

||||

tls:

|

||||

|

||||

@@ -20,12 +20,13 @@ spec:

|

||||

metadata:

|

||||

annotations:

|

||||

prometheus.io/scrape: "true"

|

||||

prometheus.io/port: "9898"

|

||||

labels:

|

||||

app: podinfo

|

||||

spec:

|

||||

containers:

|

||||

- name: podinfod

|

||||

image: stefanprodan/podinfo:2.0.0

|

||||

image: stefanprodan/podinfo:3.1.0

|

||||

imagePullPolicy: IfNotPresent

|

||||

ports:

|

||||

- containerPort: 9898

|

||||

|

||||

@@ -41,6 +41,10 @@ spec:

|

||||

type: string

|

||||

JSONPath: .spec.canaryAnalysis.interval

|

||||

priority: 1

|

||||

- name: Mirror

|

||||

type: boolean

|

||||

JSONPath: .spec.canaryAnalysis.mirror

|

||||

priority: 1

|

||||

- name: StepWeight

|

||||

type: string

|

||||

JSONPath: .spec.canaryAnalysis.stepWeight

|

||||

@@ -64,6 +68,9 @@ spec:

|

||||

provider:

|

||||

description: Traffic management provider

|

||||

type: string

|

||||

metricsServer:

|

||||

description: Prometheus URL

|

||||

type: string

|

||||

progressDeadlineSeconds:

|

||||

description: Deployment progress deadline

|

||||

type: number

|

||||

@@ -114,6 +121,11 @@ spec:

|

||||

portName:

|

||||

description: Container port name

|

||||

type: string

|

||||

targetPort:

|

||||

description: Container target port name

|

||||

anyOf:

|

||||

- type: string

|

||||

- type: number

|

||||

portDiscovery:

|

||||

description: Enable port discovery

|

||||

type: boolean

|

||||

@@ -183,6 +195,9 @@ spec:

|

||||

stepWeight:

|

||||

description: Canary incremental traffic percentage step

|

||||

type: number

|

||||

mirror:

|

||||

description: Mirror traffic to canary before shifting

|

||||

type: boolean

|

||||

match:

|

||||

description: A/B testing match conditions

|

||||

anyOf:

|

||||

|

||||

@@ -22,7 +22,7 @@ spec:

|

||||

serviceAccountName: flagger

|

||||

containers:

|

||||

- name: flagger

|

||||

image: weaveworks/flagger:0.18.6

|

||||

image: weaveworks/flagger:0.20.2

|

||||

imagePullPolicy: IfNotPresent

|

||||

ports:

|

||||

- name: http

|

||||

|

||||

@@ -4,6 +4,7 @@ metadata:

|

||||

name: podinfo

|

||||

namespace: test

|

||||

spec:

|

||||

provider: gloo

|

||||

targetRef:

|

||||

apiVersion: apps/v1

|

||||

kind: Deployment

|

||||

@@ -28,9 +29,24 @@ spec:

|

||||

threshold: 500

|

||||

interval: 30s

|

||||

webhooks:

|

||||

- name: acceptance-test

|

||||

type: pre-rollout

|

||||

url: http://flagger-loadtester.test/

|

||||

timeout: 10s

|

||||

metadata:

|

||||

type: bash

|

||||

cmd: "curl -sd 'test' http://podinfo-canary:9898/token | grep token"

|

||||

- name: gloo-acceptance-test

|

||||

type: pre-rollout

|

||||

url: http://flagger-loadtester.test/

|

||||

timeout: 10s

|

||||

metadata:

|

||||

type: bash

|

||||

cmd: "curl -sd 'test' -H 'Host: app.example.com' http://gateway-proxy-v2.gloo-system/token | grep token"

|

||||

- name: load-test

|

||||

url: http://flagger-loadtester.test/

|

||||

timeout: 5s

|

||||

metadata:

|

||||

type: cmd

|

||||

cmd: "hey -z 1m -q 10 -c 2 http://gloo.example.com/"

|

||||

cmd: "hey -z 2m -q 5 -c 2 -host app.example.com http://gateway-proxy-v2.gloo-system"

|

||||

logCmdOutput: "true"

|

||||

|

||||
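The two `hey` commands in the load-test webhook above differ in duration and rate. As a rough worked example, the sketch below estimates the request rate and total request count each one would generate. It assumes that `-z` is the run duration, `-q` is a per-worker rate limit in queries per second, and `-c` is the number of concurrent workers, and that the target keeps up with the requested rate; the function name `approx_load` is hypothetical.

```python
def approx_load(duration_seconds, qps_per_worker, workers):
    """Return (requests per second, total requests) under the stated assumptions."""
    rate = qps_per_worker * workers
    return rate, rate * duration_seconds


# hey -z 1m -q 10 -c 2 ...
first = approx_load(duration_seconds=60, qps_per_worker=10, workers=2)
# hey -z 2m -q 5 -c 2 ...
second = approx_load(duration_seconds=120, qps_per_worker=5, workers=2)

if __name__ == "__main__":
    print("1m at -q 10 -c 2:", first)
    print("2m at -q 5 -c 2:", second)
```

Under these assumptions both commands send about the same number of requests in total, with the second spreading them over twice the time at half the rate.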

@@ -1,67 +0,0 @@

|

||||

apiVersion: apps/v1

|

||||

kind: Deployment

|

||||

metadata:

|

||||

name: podinfo

|

||||

namespace: test

|

||||

labels:

|

||||

app: podinfo

|

||||

spec:

|

||||

minReadySeconds: 5

|

||||

revisionHistoryLimit: 5

|

||||

progressDeadlineSeconds: 60

|

||||

strategy:

|

||||

rollingUpdate:

|

||||

maxUnavailable: 0

|

||||

type: RollingUpdate

|

||||

selector:

|

||||

matchLabels:

|

||||

app: podinfo

|

||||

template:

|

||||

metadata:

|

||||

annotations:

|

||||

prometheus.io/scrape: "true"

|

||||

labels:

|

||||

app: podinfo

|

||||

spec:

|

||||

containers:

|

||||

- name: podinfod

|

||||

image: quay.io/stefanprodan/podinfo:1.7.0

|

||||

imagePullPolicy: IfNotPresent

|

||||

ports:

|

||||

- containerPort: 9898

|

||||

name: http

|

||||

protocol: TCP

|

||||

command:

|

||||

- ./podinfo

|

||||

- --port=9898

|

||||

- --level=info

|

||||

- --random-delay=false

|

||||

- --random-error=false

|

||||

env:

|

||||

- name: PODINFO_UI_COLOR

|

||||

value: blue

|

||||

livenessProbe:

|

||||

exec:

|

||||

command:

|

||||

- podcli

|

||||

- check

|

||||

- http

|

||||

- localhost:9898/healthz

|

||||

initialDelaySeconds: 5

|

||||

timeoutSeconds: 5

|

||||

readinessProbe:

|

||||

exec:

|

||||

command:

|

||||

- podcli

|

||||

- check

|

||||

- http

|

||||

- localhost:9898/readyz

|

||||

initialDelaySeconds: 5

|

||||

timeoutSeconds: 5

|

||||

resources:

|

||||

limits:

|

||||

cpu: 2000m

|

||||

memory: 512Mi

|

||||

requests:

|

||||

cpu: 100m

|

||||

memory: 64Mi

|

||||

@@ -1,19 +0,0 @@

|

||||

apiVersion: autoscaling/v2beta1

|

||||

kind: HorizontalPodAutoscaler

|

||||

metadata:

|

||||

name: podinfo

|

||||

namespace: test

|

||||

spec:

|

||||

scaleTargetRef:

|

||||

apiVersion: apps/v1

|

||||

kind: Deployment

|

||||

name: podinfo

|

||||

minReplicas: 1

|

||||

maxReplicas: 4

|

||||

metrics:

|

||||

- type: Resource

|

||||

resource:

|

||||

name: cpu

|

||||

# scale up if usage is above

|

||||

# 99% of the requested CPU (100m)

|

||||

targetAverageUtilization: 99

|

||||

@@ -7,11 +7,11 @@ spec:

|

||||

virtualHost:

|

||||

domains:

|

||||

- '*'

|

||||

name: podinfo.default

|

||||

name: podinfo

|

||||

routes:

|

||||

- matcher:

|

||||

prefix: /

|

||||

routeAction:

|

||||

upstreamGroup:

|

||||

name: podinfo

|

||||

namespace: gloo

|

||||

namespace: test

|

||||

|

||||

@@ -17,7 +17,7 @@ spec:

|

||||

spec:

|

||||

containers:

|

||||

- name: loadtester

|

||||

image: weaveworks/flagger-loadtester:0.8.0

|

||||

image: weaveworks/flagger-loadtester:0.11.0

|

||||

imagePullPolicy: IfNotPresent

|

||||

ports:

|

||||

- name: http

|

||||

|

||||

@@ -23,8 +23,10 @@ spec:

|

||||

# to make progress before it is rolled back (default 600s)

|

||||

progressDeadlineSeconds: 60

|

||||

service:

|

||||

# container port

|

||||

port: 9898

|

||||

# ClusterIP port number

|

||||

port: 80

|

||||

# container port number or name

|

||||

targetPort: 9898

|

||||

canaryAnalysis:

|

||||

# schedule interval (default 60s)

|

||||

interval: 10s

|

||||

|

||||

@@ -1,69 +0,0 @@

|

||||

apiVersion: apps/v1

|

||||

kind: Deployment

|

||||

metadata:

|

||||

name: podinfo

|

||||

namespace: test

|

||||

labels:

|

||||

app: podinfo

|

||||

spec:

|

||||

replicas: 1

|

||||

strategy:

|

||||

rollingUpdate:

|

||||

maxUnavailable: 0

|

||||

type: RollingUpdate

|

||||

selector:

|

||||

matchLabels:

|

||||

app: podinfo

|

||||

template:

|

||||

metadata:

|

||||

annotations:

|

||||

prometheus.io/scrape: "true"

|

||||

labels:

|

||||

app: podinfo

|

||||

spec:

|

||||

containers:

|

||||

- name: podinfod

|

||||

image: quay.io/stefanprodan/podinfo:1.7.0

|

||||

imagePullPolicy: IfNotPresent

|

||||

ports:

|

||||

- containerPort: 9898

|

||||

name: http

|

||||

protocol: TCP

|

||||

command:

|

||||

- ./podinfo

|

||||

- --port=9898

|

||||

- --level=info

|

||||

- --random-delay=false

|

||||

- --random-error=false

|

||||

env:

|

||||

- name: PODINFO_UI_COLOR

|

||||

value: green

|

||||

livenessProbe:

|

||||

exec:

|

||||

command:

|

||||

- podcli

|

||||

- check

|

||||

- http

|

||||

- localhost:9898/healthz

|

||||

failureThreshold: 3

|

||||

periodSeconds: 10

|

||||

successThreshold: 1

|

||||

timeoutSeconds: 2

|

||||

readinessProbe:

|

||||

exec:

|

||||

command:

|

||||

- podcli

|

||||

- check

|

||||

- http

|

||||

- localhost:9898/readyz

|

||||

failureThreshold: 3

|

||||

periodSeconds: 3

|

||||

successThreshold: 1

|

||||

timeoutSeconds: 2

|

||||

resources:

|

||||

limits:

|

||||

cpu: 1000m

|

||||

memory: 256Mi

|

||||

requests:

|

||||

cpu: 100m

|

||||

memory: 16Mi

|

||||

@@ -1,19 +0,0 @@

|

||||

apiVersion: autoscaling/v2beta1

|

||||

kind: HorizontalPodAutoscaler

|

||||

metadata:

|

||||

name: podinfo

|

||||

namespace: test

|

||||

spec:

|

||||

scaleTargetRef:

|

||||

apiVersion: apps/v1

|

||||

kind: Deployment

|

||||

name: podinfo

|

||||

minReplicas: 2

|

||||

maxReplicas: 4

|

||||

metrics:

|

||||

- type: Resource

|

||||

resource:

|

||||

name: cpu

|

||||

# scale up if usage is above

|

||||

# 99% of the requested CPU (100m)

|

||||

targetAverageUtilization: 99

|

||||

@@ -1,131 +0,0 @@

|

||||

apiVersion: apiextensions.k8s.io/v1beta1

|

||||

kind: CustomResourceDefinition

|

||||

metadata:

|

||||

name: trafficsplits.split.smi-spec.io

|

||||

spec:

|

||||

additionalPrinterColumns:

|

||||

- JSONPath: .spec.service

|

||||

description: The service

|

||||

name: Service

|

||||

type: string

|

||||

group: split.smi-spec.io

|

||||

names:

|

||||

kind: TrafficSplit

|

||||

listKind: TrafficSplitList

|

||||

plural: trafficsplits

|

||||

singular: trafficsplit

|

||||

scope: Namespaced

|

||||

subresources:

|

||||

status: {}

|

||||

version: v1alpha1

|

||||

versions:

|

||||

- name: v1alpha1

|

||||

served: true

|

||||

storage: true

|

||||

---

|

||||

apiVersion: v1

|

||||

kind: ServiceAccount

|

||||

metadata:

|

||||

name: smi-adapter-istio

|

||||

namespace: istio-system

|

||||

---

|

||||

apiVersion: rbac.authorization.k8s.io/v1

|

||||

kind: ClusterRole

|

||||

metadata:

|

||||

name: smi-adapter-istio

|

||||

rules:

|

||||

- apiGroups:

|

||||

- ""

|

||||

resources:

|

||||

- pods

|

||||

- services

|

||||

- endpoints

|

||||

- persistentvolumeclaims

|

||||

- events

|

||||

- configmaps

|

||||

- secrets

|

||||

verbs:

|

||||

- '*'

|

||||

- apiGroups:

|

||||

- apps

|

||||

resources:

|

||||

- deployments

|

||||

- daemonsets

|

||||

- replicasets

|

||||

- statefulsets

|

||||

verbs:

|

||||

- '*'

|

||||

- apiGroups:

|

||||

- monitoring.coreos.com

|

||||

resources:

|

||||

- servicemonitors

|

||||

verbs:

|

||||

- get

|

||||

- create

|

||||

- apiGroups:

|

||||

- apps

|

||||

resourceNames:

|

||||

- smi-adapter-istio

|

||||

resources:

|

||||

- deployments/finalizers

|

||||

verbs:

|

||||

- update

|

||||

- apiGroups:

|

||||

- split.smi-spec.io

|

||||

resources:

|

||||

- '*'

|

||||

verbs:

|

||||

- '*'

|

||||

- apiGroups:

|

||||

- networking.istio.io

|

||||

resources:

|

||||

- '*'

|

||||

verbs:

|

||||

- '*'

|

||||

---

|

||||

kind: ClusterRoleBinding

|

||||

apiVersion: rbac.authorization.k8s.io/v1

|

||||

metadata:

|

||||

name: smi-adapter-istio

|

||||

subjects:

|

||||

- kind: ServiceAccount

|

||||

name: smi-adapter-istio

|

||||

namespace: istio-system

|

||||

roleRef:

|

||||

kind: ClusterRole

|

||||

name: smi-adapter-istio

|

||||

apiGroup: rbac.authorization.k8s.io

|

||||

---

|

||||

apiVersion: apps/v1

|

||||

kind: Deployment

|

||||

metadata:

|

||||

name: smi-adapter-istio

|

||||

namespace: istio-system

|

||||

spec:

|

||||

replicas: 1

|

||||

selector:

|

||||

matchLabels:

|

||||

name: smi-adapter-istio

|

||||

template:

|

||||

metadata:

|

||||

labels:

|

||||

name: smi-adapter-istio

|

||||

annotations:

|

||||

sidecar.istio.io/inject: "false"

|

||||

spec:

|

||||

serviceAccountName: smi-adapter-istio

|

||||

containers:

|

||||

- name: smi-adapter-istio

|

||||

image: docker.io/stefanprodan/smi-adapter-istio:0.0.2-beta.1

|

||||

command:

|

||||

- smi-adapter-istio

|

||||

imagePullPolicy: Always

|

||||

env:

|

||||

- name: WATCH_NAMESPACE

|

||||

value: ""

|

||||

- name: POD_NAME

|

||||

valueFrom:

|

||||

fieldRef:

|

||||

fieldPath: metadata.name

|

||||

- name: OPERATOR_NAME

|

||||

value: "smi-adapter-istio"

|

||||

@@ -1,7 +1,7 @@

|

||||

 apiVersion: v1
 name: flagger
-version: 0.18.6
-appVersion: 0.18.6
+version: 0.20.2
+appVersion: 0.20.2
 kubeVersion: ">=1.11.0-0"
 engine: gotpl
 description: Flagger is a Kubernetes operator that automates the promotion of canary deployments using Istio, Linkerd, App Mesh, Gloo or NGINX routing for traffic shifting and Prometheus metrics for canary analysis.

|

||||

|

||||

@@ -68,6 +68,7 @@ Parameter | Description | Default

|

||||

`image.pullPolicy` | image pull policy | `IfNotPresent`

|

||||

`prometheus.install` | if `true`, installs Prometheus configured to scrape all pods in the cluster, including the App Mesh sidecar | `false`

|

||||

`metricsServer` | Prometheus URL, used when `prometheus.install` is `false` | `http://prometheus.istio-system:9090`

|

||||

`selectorLabels` | list of labels that Flagger uses to create pod selectors | `app,name,app.kubernetes.io/name`

|

||||

`slack.url` | Slack incoming webhook | None

|

||||

`slack.channel` | Slack channel | None

|

||||

`slack.user` | Slack username | `flagger`

|

||||

|

||||

@@ -42,6 +42,10 @@ spec:

|

||||

type: string

|

||||

JSONPath: .spec.canaryAnalysis.interval

|

||||

priority: 1

|

||||

- name: Mirror

|

||||

type: boolean

|

||||

JSONPath: .spec.canaryAnalysis.mirror

|

||||

priority: 1

|

||||

- name: StepWeight

|

||||

type: string

|

||||

JSONPath: .spec.canaryAnalysis.stepWeight

|

||||

@@ -65,6 +69,9 @@ spec:

|

||||

provider:

|

||||

description: Traffic management provider

|

||||

type: string

|

||||

metricsServer:

|

||||

description: Prometheus URL

|

||||

type: string

|

||||

progressDeadlineSeconds:

|

||||

description: Deployment progress deadline

|

||||

type: number

|

||||

@@ -115,6 +122,11 @@ spec:

|

||||

portName:

|

||||

description: Container port name

|

||||

type: string

|

||||

targetPort:

|

||||

description: Container target port name

|

||||

anyOf:

|

||||

- type: string

|

||||

- type: number

|

||||

portDiscovery:

|

||||

description: Enable port discovery

|

||||

type: boolean

|

||||

@@ -184,6 +196,9 @@ spec:

|

||||

stepWeight:

|

||||

description: Canary incremental traffic percentage step

|

||||

type: number

|

||||

mirror:

|

||||

description: Mirror traffic to canary before shifting

|

||||

type: boolean

|

||||

match:

|

||||

description: A/B testing match conditions

|

||||

anyOf:

|

||||

|

||||

@@ -61,6 +61,9 @@ spec:

|

||||

{{- else }}

|

||||

- -metrics-server={{ .Values.metricsServer }}

|

||||

{{- end }}

|

||||

{{- if .Values.selectorLabels }}

|

||||

- -selector-labels={{ .Values.selectorLabels }}

|

||||

{{- end }}

|

||||

{{- if .Values.namespace }}

|

||||

- -namespace={{ .Values.namespace }}

|

||||

{{- end }}

|

||||

@@ -99,6 +102,10 @@ spec:

|

||||

- --spider

|

||||

- http://localhost:8080/healthz

|

||||

timeoutSeconds: 5

|

||||

{{- if .Values.env }}

|

||||

env:

|

||||

{{ toYaml .Values.env | indent 12 }}

|

||||

{{- end }}

|

||||

resources:

|

||||

{{ toYaml .Values.resources | indent 12 }}

|

||||

{{- with .Values.nodeSelector }}

|

||||

|

||||

@@ -133,38 +133,22 @@ data:

|

||||

scheme: https

|

||||

tls_config:

|

||||

ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt

|

||||

insecure_skip_verify: true

|

||||

bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token

|

||||

relabel_configs:

|

||||

- source_labels: [__meta_kubernetes_service_name, __meta_kubernetes_endpoint_port_name]

|

||||

action: keep

|

||||

regex: kubernetes;https

|

||||

|

||||

# Scrape config for nodes

|

||||

- job_name: 'kubernetes-nodes'

|

||||

scheme: https

|

||||

tls_config:

|

||||

ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt

|

||||

bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token

|

||||

kubernetes_sd_configs:

|

||||

- role: node

|

||||

relabel_configs:

|

||||

- action: labelmap

|

||||

regex: __meta_kubernetes_node_label_(.+)

|

||||

- target_label: __address__

|

||||

replacement: kubernetes.default.svc:443

|

||||

- source_labels: [__meta_kubernetes_node_name]

|

||||

regex: (.+)

|

||||

target_label: __metrics_path__

|

||||

replacement: /api/v1/nodes/${1}/proxy/metrics

|

||||

|

||||

# scrape config for cAdvisor

|

||||

- job_name: 'kubernetes-cadvisor'

|

||||

scheme: https

|

||||

tls_config:

|

||||

ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt

|

||||

insecure_skip_verify: true

|

||||

bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token

|

||||

kubernetes_sd_configs:

|

||||

- role: node

|

||||

- role: node

|

||||

relabel_configs:

|

||||

- action: labelmap

|

||||

regex: __meta_kubernetes_node_label_(.+)

|

||||

@@ -174,6 +158,14 @@ data:

|

||||

regex: (.+)

|

||||

target_label: __metrics_path__

|

||||

replacement: /api/v1/nodes/${1}/proxy/metrics/cadvisor

|

||||

# exclude high cardinality metrics

|

||||

metric_relabel_configs:

|

||||

- source_labels: [__name__]

|

||||

regex: (container|machine)_(cpu|memory|network|fs)_(.+)

|

||||

action: keep

|

||||

- source_labels: [__name__]

|

||||

regex: container_memory_failures_total

|

||||

action: drop

|

||||

|

||||

# scrape config for pods

|

||||

- job_name: kubernetes-pods

|

||||

|

||||

@@ -2,20 +2,26 @@

|

||||

|

||||

image:

|

||||

repository: weaveworks/flagger

|

||||

tag: 0.18.6

|

||||

tag: 0.20.2

|

||||

pullPolicy: IfNotPresent

|

||||

pullSecret:

|

||||

|

||||

podAnnotations: {}

|

||||

podAnnotations:

|

||||

prometheus.io/scrape: "true"

|

||||

prometheus.io/port: "8080"

|

||||

|

||||

metricsServer: "http://prometheus:9090"

|

||||

|

||||

# accepted values are istio, appmesh, nginx or supergloo:mesh.namespace (defaults to istio)

|

||||

# accepted values are kubernetes, istio, linkerd, appmesh, nginx, gloo or supergloo:mesh.namespace (defaults to istio)

|

||||

meshProvider: ""

|

||||

|

||||

# single namespace restriction

|

||||

namespace: ""

|

||||

|

||||

# list of pod labels that Flagger uses to create pod selectors

|

||||

# defaults to: app,name,app.kubernetes.io/name

|

||||

selectorLabels: ""

|

||||

|

||||

slack:

|

||||

user: flagger

|

||||

channel:

|

||||

@@ -26,6 +32,19 @@ msteams:

|

||||

# MS Teams incoming webhook URL

|

||||

url:

|

||||

|

||||

#env:

|

||||

#- name: SLACK_URL

|

||||

# valueFrom:

|

||||

# secretKeyRef:

|

||||

# name: slack

|

||||

# key: url

|

||||

#- name: MSTEAMS_URL

|

||||

# valueFrom:

|

||||

# secretKeyRef:

|

||||

# name: msteams

|

||||

# key: url

|

||||

env: []

|

||||

|

||||

leaderElection:

|

||||

enabled: false

|

||||

replicaCount: 1

|

||||

|

||||

@@ -1,7 +1,7 @@

|

||||

apiVersion: v1

|

||||

name: loadtester

|

||||

version: 0.8.0

|

||||

appVersion: 0.8.0

|

||||

version: 0.11.0

|

||||

appVersion: 0.11.0

|

||||

kubeVersion: ">=1.11.0-0"

|

||||

engine: gotpl

|

||||

description: Flagger's load testing services based on rakyll/hey and bojand/ghz that generates traffic during canary analysis when configured as a webhook.

|

||||

|

||||

@@ -2,10 +2,12 @@ replicaCount: 1

|

||||

|

||||

image:

|

||||

repository: weaveworks/flagger-loadtester

|

||||

tag: 0.8.0

|

||||

tag: 0.11.0

|

||||

pullPolicy: IfNotPresent

|

||||

|

||||

podAnnotations: {}

|

||||

podAnnotations:

|

||||

prometheus.io/scrape: "true"

|

||||

prometheus.io/port: "8080"

|

||||

|

||||

logLevel: info

|

||||

cmd:

|

||||

|

||||

@@ -286,10 +286,10 @@ func startLeaderElection(ctx context.Context, run func(), ns string, kubeClient

|

||||

|

||||

 func initNotifier(logger *zap.SugaredLogger) (client notifier.Interface) {
 	provider := "slack"
-	notifierURL := slackURL
-	if msteamsURL != "" {
+	notifierURL := fromEnv("SLACK_URL", slackURL)
+	if msteamsURL != "" || os.Getenv("MSTEAMS_URL") != "" {
 		provider = "msteams"
-		notifierURL = msteamsURL
+		notifierURL = fromEnv("MSTEAMS_URL", msteamsURL)
 	}
 	notifierFactory := notifier.NewFactory(notifierURL, slackUser, slackChannel)

|

||||

|

||||

@@ -304,3 +304,10 @@ func initNotifier(logger *zap.SugaredLogger) (client notifier.Interface) {

|

||||

}

|

||||

return

|

||||

}

|

||||

|

||||

+func fromEnv(envVar string, defaultVal string) string {
+	if os.Getenv(envVar) != "" {
+		return os.Getenv(envVar)
+	}
+	return defaultVal
+}
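The precedence introduced by this change (an environment variable wins over the command-line flag, and MS Teams is selected whenever either source supplies its URL) can be exercised in isolation. The following is a minimal sketch, not Flagger's actual code: `resolveNotifier` and the injected `getenv` parameter are hypothetical names added here so the logic can run without touching the process environment, and the URLs are placeholders.

```go
package main

import "fmt"

// fromEnv follows the helper in the diff, except that the lookup function is
// injected (an assumption made for testability) instead of calling os.Getenv.
func fromEnv(getenv func(string) string, envVar, defaultVal string) string {
	if v := getenv(envVar); v != "" {
		return v
	}
	return defaultVal
}

// resolveNotifier is a hypothetical helper that reproduces the selection
// logic of initNotifier: Slack is the default provider, and MS Teams takes
// over when its URL is set through either the flag or the environment.
func resolveNotifier(getenv func(string) string, slackURL, msteamsURL string) (provider, url string) {
	provider = "slack"
	url = fromEnv(getenv, "SLACK_URL", slackURL)
	if msteamsURL != "" || getenv("MSTEAMS_URL") != "" {
		provider = "msteams"
		url = fromEnv(getenv, "MSTEAMS_URL", msteamsURL)
	}
	return provider, url
}

func main() {
	// Placeholder environment: only SLACK_URL is set, no flags are passed.
	env := map[string]string{"SLACK_URL": "https://hooks.example.com/slack"}
	getenv := func(k string) string { return env[k] }

	provider, url := resolveNotifier(getenv, "", "")
	fmt.Println(provider, url) // prints "slack https://hooks.example.com/slack"
}
```

In a real program `os.Getenv` could be passed as the `getenv` argument; injecting it only keeps the example free of global state.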

|

||||

|

||||

@@ -10,7 +10,7 @@ import (

|

||||

"time"

|

||||

)

|

||||

|

||||

-var VERSION = "0.8.0"
+var VERSION = "0.11.0"

|

||||

var (

|

||||

logLevel string

|

||||

port string

|

||||

|

||||

Binary file not shown.

|

Before Width: | Height: | Size: 158 KiB After Width: | Height: | Size: 30 KiB |

BIN

docs/diagrams/flagger-canary-traffic-mirroring.png

Normal file

Binary file not shown.

|

After Width: | Height: | Size: 39 KiB |

@@ -7,10 +7,12 @@

|

||||

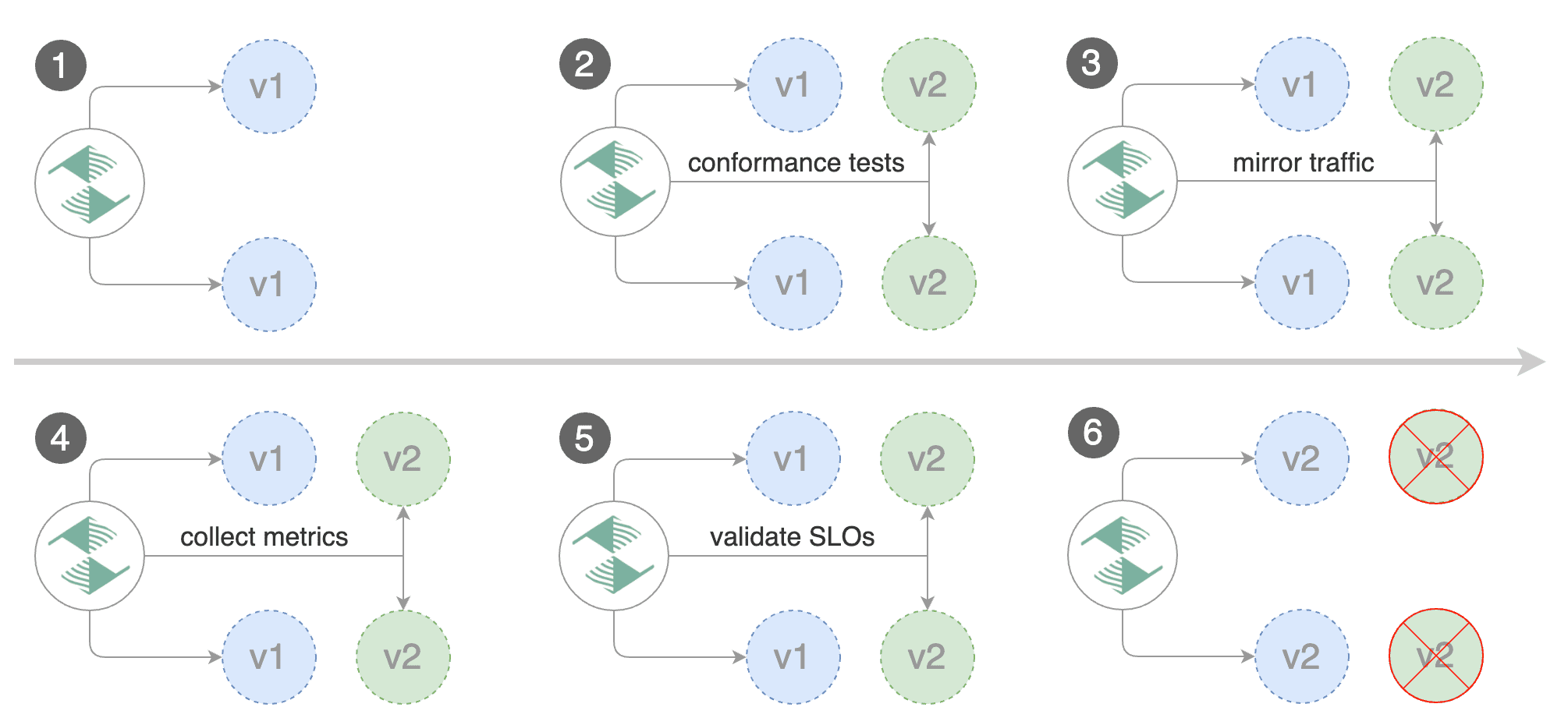

 Flagger can run automated application analysis, promotion and rollback for the following deployment strategies:
 * Canary (progressive traffic shifting)
     * Istio, Linkerd, App Mesh, NGINX, Gloo
+* Canary (traffic mirroring)
+    * Istio
 * A/B Testing (HTTP headers and cookies traffic routing)
-    * Istio, NGINX
+    * Istio, App Mesh, NGINX
 * Blue/Green (traffic switch)
-    * Kubernetes CNI
+    * Kubernetes CNI, Istio, Linkerd, App Mesh, NGINX, Gloo
 
 For Canary deployments and A/B testing you'll need a Layer 7 traffic management solution like a service mesh or an ingress controller.
 For Blue/Green deployments no service mesh or ingress controller is required.

|

||||

@@ -41,6 +43,21 @@ Istio example:

|

||||

regex: "^(.*?;)?(canary=always)(;.*)?$"

|

||||

```

|

||||

|

||||

App Mesh example:

|

||||

|

||||

```yaml
  canaryAnalysis:
    interval: 1m
    threshold: 10
    iterations: 2
    match:
      - headers:
          user-agent:
            regex: ".*Chrome.*"
```

|

||||

|

||||

Note that App Mesh supports a single condition.

|

||||

|

||||

NGINX example:

|

||||

|

||||

```yaml

|

||||

@@ -102,6 +119,42 @@ The above configuration will run an analysis for five minutes.

|

||||

Flagger starts the load test for the canary service (green version) and checks the Prometheus metrics every 30 seconds.

|

||||

If the analysis result is positive, Flagger will promote the canary (green version) to primary (blue version).

|

||||

|

||||

**When can I use traffic mirroring?**

Traffic mirroring is a pre-stage in a Canary (progressive traffic shifting) or
Blue/Green deployment strategy. Traffic mirroring copies each incoming
request, sending one request to the primary and one to the canary service.
The response from the primary is sent back to the user. The response from the canary
is discarded. Metrics are collected on both requests so that the deployment will
only proceed if the canary metrics are healthy.
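The request flow described above can be modelled in a few lines. This is a minimal sketch under simplifying assumptions, not how Istio or Flagger implement mirroring: `Backend`, `Metrics` and `mirror` are hypothetical names, a backend is reduced to a function returning an HTTP status code, and the canary is called sequentially, whereas a real proxy would not be expected to make the caller wait for the mirrored request.

```go
package main

import "fmt"

// Backend is a hypothetical stand-in for a service: it receives a request
// path and returns an HTTP status code.
type Backend func(path string) int

// Metrics counts requests and server errors (5xx) for one backend.
type Metrics struct {
	Requests int
	Errors   int
}

// record updates the counters for a single response status.
func (m *Metrics) record(status int) {
	m.Requests++
	if status >= 500 {
		m.Errors++
	}
}

// mirror sends the same request to both backends and records metrics for
// both. Only the primary's status is returned to the caller; the canary's
// response is discarded after it has been counted.
func mirror(primary, canary Backend, pm, cm *Metrics, path string) int {
	status := primary(path)
	pm.record(status)
	cm.record(canary(path))
	return status
}

func main() {
	primary := func(string) int { return 200 }
	canary := func(string) int { return 500 } // a failing canary

	var pm, cm Metrics
	got := mirror(primary, canary, &pm, &cm, "/api/info")

	// The user still sees the primary's response, while the canary's
	// failure shows up only in its metrics.
	fmt.Println(got, pm.Errors, cm.Errors) // prints "200 0 1"
}
```

The point of the sketch is the separation of concerns: user-visible behaviour depends only on the primary, while the promotion decision can be based on the canary's counters.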

|

||||

|

||||

Mirroring is supported by Istio only.

In Istio, mirrored requests have `-shadow` appended to the `Host` (HTTP) or
`Authority` (HTTP/2) header; for example requests to `podinfo.test` that are
mirrored will be reported in telemetry with a destination host `podinfo.test-shadow`.
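When post-processing telemetry, the `-shadow` suffix can be used to tell mirrored requests apart from regular ones. The helper below is a hypothetical sketch (`splitShadowHost` is not part of Flagger or Istio) and assumes a plain host name without a port.

```go
package main

import (
	"fmt"
	"strings"
)

// shadowSuffix is the suffix that, per the text above, is appended to the
// Host/Authority header of mirrored requests.
const shadowSuffix = "-shadow"

// splitShadowHost reports whether a destination host from telemetry looks
// like a mirrored request and returns the host with the suffix removed.
// Assumption: the host carries no port.
func splitShadowHost(host string) (original string, mirrored bool) {
	if strings.HasSuffix(host, shadowSuffix) {
		return strings.TrimSuffix(host, shadowSuffix), true
	}
	return host, false
}

func main() {
	for _, h := range []string{"podinfo.test", "podinfo.test-shadow"} {
		orig, mirrored := splitShadowHost(h)
		fmt.Printf("%s -> host=%s mirrored=%t\n", h, orig, mirrored)
	}
}
```

A service whose real name happens to end in `-shadow` would be misclassified by this check, so it is only suitable where such names are known not to occur.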

|

||||

|

||||

Mirroring must only be used for requests that are **idempotent** or capable of
being processed twice (once by the primary and once by the canary). Reads are
idempotent. Before using mirroring on requests that may be writes, you should
consider what will happen if a write is duplicated and handled by the primary
and canary.

|

||||

|

||||

To use mirroring, set `spec.canaryAnalysis.mirror` to `true`. Example for
traffic shifting:

|

||||

|

||||

```yaml
apiVersion: flagger.app/v1alpha3
kind: Canary
spec:
  provider: istio
  canaryAnalysis:
    mirror: true
    interval: 30s
    stepWeight: 20
    maxWeight: 50
```

|

||||

|

||||

### Kubernetes services

|

||||

|

||||

**How is an application exposed inside the cluster?**

|

||||

@@ -120,8 +173,10 @@ spec:

|

||||

kind: Deployment

|

||||

name: podinfo

|

||||

service:

|

||||

# container port (required)

|

||||

# ClusterIP port number (required)

|

||||

port: 9898

|

||||

# container port name or number

|

||||

targetPort: http

|

||||

# port name can be http or grpc (default http)

|

||||

portName: http

|

||||

```

|

||||

@@ -291,6 +346,195 @@ spec:

|

||||

topologyKey: kubernetes.io/hostname

|

||||

```

|

||||

|

||||

### Istio routing

|

||||

|

||||

**How does Flagger interact with Istio?**

|

||||

|

||||

Flagger creates an Istio Virtual Service and Destination Rules based on the Canary service spec.
The service configuration lets you expose an app inside or outside the mesh.
You can also define traffic policies, HTTP match conditions, URI rewrite rules, CORS policies, timeouts and retries.

The following spec exposes the `frontend` workload inside the mesh on `frontend.test.svc.cluster.local:9898`
and outside the mesh on `frontend.example.com`. You'll have to specify an Istio ingress gateway for external hosts.

|

||||

|

||||

```yaml
apiVersion: flagger.app/v1alpha3
kind: Canary
metadata:
  name: frontend
  namespace: test
spec:
  service:
    # container port
    port: 9898
    # service port name (optional, will default to "http")
    portName: http-frontend
    # Istio gateways (optional)
    gateways:
    - public-gateway.istio-system.svc.cluster.local
    - mesh
    # Istio virtual service host names (optional)
    hosts:
    - frontend.example.com
    # Istio traffic policy
    trafficPolicy:
      tls:
        # use ISTIO_MUTUAL when mTLS is enabled
        mode: DISABLE
    # HTTP match conditions (optional)
    match:
      - uri:
          prefix: /
    # HTTP rewrite (optional)
    rewrite:
      uri: /
    # Istio retry policy (optional)
    retries:
      attempts: 3
      perTryTimeout: 1s
      retryOn: "gateway-error,connect-failure,refused-stream"
    # Add headers (optional)
    headers:
      request:
        add:
          x-some-header: "value"
    # cross-origin resource sharing policy (optional)
    corsPolicy:
      allowOrigin:
        - example.com
      allowMethods:
        - GET
      allowCredentials: false
      allowHeaders:
        - x-some-header
      maxAge: 24h
```

|

||||

|

||||

For the above spec Flagger will generate the following virtual service:

|

||||

|

||||

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: frontend
  namespace: test
  ownerReferences:
    - apiVersion: flagger.app/v1alpha3
      blockOwnerDeletion: true
      controller: true
      kind: Canary
      name: frontend
      uid: 3a4a40dd-3875-11e9-8e1d-42010a9c0fd1
spec:
  gateways:
    - public-gateway.istio-system.svc.cluster.local
    - mesh
  hosts:
    - frontend.example.com
    - frontend
  http:
  - appendHeaders:
      x-some-header: "value"
    corsPolicy:
      allowHeaders:
      - x-some-header
      allowMethods:
      - GET
      allowOrigin:
      - example.com
      maxAge: 24h
    match:
    - uri:
        prefix: /
    rewrite:
      uri: /
    route:
    - destination:
        host: frontend-primary
      weight: 100
    - destination:
        host: frontend-canary
      weight: 0
    retries:
      attempts: 3
      perTryTimeout: 1s
      retryOn: "gateway-error,connect-failure,refused-stream"
```

|

||||

|

||||

For each destination in the virtual service a rule is generated:

|

||||

|

||||

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: DestinationRule
metadata:
  name: frontend-primary
  namespace: test
spec:
  host: frontend-primary
  trafficPolicy:
    tls:
      mode: DISABLE
---
apiVersion: networking.istio.io/v1alpha3
kind: DestinationRule
metadata:
  name: frontend-canary
  namespace: test
spec:
  host: frontend-canary
  trafficPolicy:
    tls:
      mode: DISABLE
```

|

||||

|

||||

Flagger keeps the virtual service and destination rules in sync with the canary service spec.
Any direct modification to the virtual service spec will be overwritten.

To expose a workload inside the mesh on `http://backend.test.svc.cluster.local:9898`,
the service spec can contain only the container port and the traffic policy:

|

||||

|

||||

```yaml
apiVersion: flagger.app/v1alpha3
kind: Canary
metadata:
  name: backend
  namespace: test
spec:
  service:
    port: 9898
    trafficPolicy:
      tls:
        mode: DISABLE
```

|

||||

|

||||

Based on the above spec, Flagger will create several ClusterIP services like:

|

||||

|

||||

```yaml
apiVersion: v1
kind: Service
metadata:
  name: backend-primary
  ownerReferences:
  - apiVersion: flagger.app/v1alpha3
    blockOwnerDeletion: true
    controller: true
    kind: Canary
    name: backend
    uid: 2ca1a9c7-2ef6-11e9-bd01-42010a9c0145
spec:
  type: ClusterIP
  ports:
  - name: http
    port: 9898
    protocol: TCP
    targetPort: 9898
  selector:
    app: backend-primary
```

|

||||

|

||||

Flagger works for user-facing apps exposed outside the cluster via an ingress gateway
and for backend HTTP APIs that are accessible only from inside the mesh.

|

||||

|

||||

### Istio Ingress Gateway

|

||||

|

||||

**How can I expose multiple canaries on the same external domain?**

|

||||

|

||||

@@ -4,8 +4,6 @@

|

||||

a horizontal pod autoscaler \(HPA\) and creates a series of objects

|

||||

\(Kubernetes deployments, ClusterIP services, virtual service, traffic split or ingress\) to drive the canary analysis and promotion.

|

||||

|

||||

|

||||

|

||||

### Canary Custom Resource

|

||||

|

||||

For a deployment named _podinfo_, a canary promotion can be defined using Flagger's custom resource:

|

||||

@@ -19,8 +17,7 @@ metadata:

|

||||

spec:

|

||||

# service mesh provider (optional)

|

||||

# can be: kubernetes, istio, linkerd, appmesh, nginx, gloo, supergloo

|

||||

# use the kubernetes provider for Blue/Green style deployments

|

||||

provider: istio

|

||||

provider: linkerd

|

||||

# deployment reference

|

||||

targetRef:

|

||||

apiVersion: apps/v1

|

||||

@@ -35,16 +32,15 @@ spec:

|

||||

kind: HorizontalPodAutoscaler

|

||||

name: podinfo

|

||||

service:

|

||||

# container port

|

||||

# ClusterIP port number

|

||||

port: 9898

|

||||

# service port name (optional, will default to "http")

|

||||

portName: http-podinfo

|

||||

# Istio gateways (optional)

|

||||

gateways:

|

||||

- public-gateway.istio-system.svc.cluster.local

|

||||

# Istio virtual service host names (optional)

|

||||

hosts:

|

||||

- podinfo.example.com

|

||||

# ClusterIP port name can be http or grpc (default http)

|

||||

portName: http

|

||||

# container port number or name (optional)

|

||||

targetPort: 9898

|

||||

# add all the other container ports

|

||||

# to the ClusterIP services (default false)

|

||||

portDiscovery: false

|

||||

# promote the canary without analysing it (default false)

|

||||

skipAnalysis: false

|

||||

# define the canary analysis timing and KPIs

|

||||

@@ -71,15 +67,13 @@ spec:

|

||||

# milliseconds

|

||||

threshold: 500

|

||||

interval: 30s

|

||||

# external checks (optional)

|

||||

# testing (optional)

|

||||

webhooks:

|

||||

- name: integration-tests

|

||||

url: http://podinfo.test:9898/echo

|

||||

timeout: 1m

|

||||

# key-value pairs (optional)

|

||||

- name: load-test

|

||||

url: http://flagger-loadtester.test/

|

||||

timeout: 5s

|

||||