Mirror of https://github.com/fluxcd/flagger.git, synced 2026-02-16 19:09:55 +00:00

Compare commits

77 Commits

| Author | SHA1 | Date | |
|---|---|---|---|
| | 1902884b56 | | |
| | 98d2805267 | | |
| | 24a74d3589 | | |
| | 15463456ec | | |
| | 752eceed4b | | |
| | eadce34d6f | | |
| | 11ccf34bbc | | |
| | e308678ed5 | | |
| | cbe72f0aa2 | | |
| | bc84e1c154 | | |
| | 344bd45a0e | | |
| | 72014f736f | | |
| | 0a2949b6ad | | |
| | 2ff695ecfe | | |
| | 8d0b54e059 | | |
| | 121a65fad0 | | |
| | ecaa203091 | | |
| | 6d0e3c6468 | | |
| | c933476fff | | |
| | 1335210cf5 | | |
| | 9d12794600 | | |
| | d57fc7d03e | | |
| | 1f9f6fb55a | | |
| | 948df55de3 | | |
| | 8914f26754 | | |
| | 79b3370892 | | |
| | a233b99f0b | | |
| | 0d94c01678 | | |
| | 00151e92fe | | |
| | f7db0210ea | | |
| | cf3ba35fb9 | | |
| | 177dc824e3 | | |
| | 5f544b90d6 | | |
| | 921ac00383 | | |
| | 7df7218978 | | |
| | e4c6903a01 | | |
| | 027342dc72 | | |
| | e17a747785 | | |
| | e477b37bd0 | | |
| | ad25068375 | | |
| | c92230c109 | | |
| | 9e082d9ee3 | | |
| | cfd610ac55 | | |
| | 82067f13bf | | |
| | 242d79e49d | | |
| | 4f01ecde5a | | |
| | 61141c7479 | | |
| | 62429ff710 | | |
| | 82a1f45cc1 | | |
| | 1a95fc2a9c | | |
| | 13816eeafa | | |
| | 5279f73c17 | | |
| | d196bb2856 | | |
| | 3f8f634a1b | | |
| | 5ba27c898e | | |
| | 57f1b63fa1 | | |
| | d69e203479 | | |
| | 4d7fae39a8 | | |
| | 2dc554c92a | | |
| | 21c394ef7f | | |
| | 2173bfc1a0 | | |
| | a19d016e14 | | |
| | 8f1b5df9e2 | | |
| | 2d6b8ecfdf | | |
| | 8093612011 | | |
| | 39dc761e32 | | |
| | 0c68983c62 | | |
| | 37ebbf14f9 | | |
| | 156488c8d5 | | |
| | 68d1f583cc | | |
| | aa24d6ff7e | | |
| | 58c2c19f1e | | |
| | 2a91149211 | | |
| | 868482c240 | | |
| | e5612bca50 | | |
| | d21fb1afe8 | | |
| | 89d0a533e2 | | |

@@ -1,6 +1,6 @@
 version: 2.1
 jobs:
-  e2e-testing:
+  e2e-istio-testing:
     machine: true
     steps:
       - checkout
@@ -9,14 +9,46 @@ jobs:
       - run: test/e2e-build.sh
       - run: test/e2e-tests.sh
 
+  e2e-supergloo-testing:
+    machine: true
+    steps:
+      - checkout
+      - run: test/e2e-kind.sh
+      - run: test/e2e-supergloo.sh
+      - run: test/e2e-build.sh supergloo:test.supergloo-system
+      - run: test/e2e-tests.sh canary
+
+  e2e-nginx-testing:
+    machine: true
+    steps:
+      - checkout
+      - run: test/e2e-kind.sh
+      - run: test/e2e-nginx.sh
+      - run: test/e2e-nginx-build.sh
+      - run: test/e2e-nginx-tests.sh
+
 workflows:
   version: 2
   build-and-test:
     jobs:
-      - e2e-testing:
+      - e2e-istio-testing:
           filters:
             branches:
               ignore:
                 - /gh-pages.*/
                 - /docs-.*/
                 - /release-.*/
+      - e2e-supergloo-testing:
+          filters:
+            branches:
+              ignore:
+                - /gh-pages.*/
+                - /docs-.*/
+                - /release-.*/
+      - e2e-nginx-testing:
+          filters:
+            branches:
+              ignore:
+                - /gh-pages.*/
+                - /docs-.*/
+                - /release-.*/

41

CHANGELOG.md

@@ -2,6 +2,47 @@
 
 All notable changes to this project are documented in this file.
 
+## 0.13.2 (2019-04-11)
+
+Fixes for Jenkins X deployments (prevent the jx GC from removing the primary instance)
+
+#### Fixes
+
+- Do not copy labels from canary to primary deployment [#178](https://github.com/weaveworks/flagger/pull/178)
+
+#### Improvements
+
+- Add NGINX ingress controller e2e and unit tests [#176](https://github.com/weaveworks/flagger/pull/176)
+
+## 0.13.1 (2019-04-09)
+
+Fixes for custom metrics checks and NGINX Prometheus queries
+
+#### Fixes
+
+- Fix promql queries for custom checks and NGINX [#174](https://github.com/weaveworks/flagger/pull/174)
+
+## 0.13.0 (2019-04-08)
+
+Adds support for [NGINX](https://docs.flagger.app/usage/nginx-progressive-delivery) ingress controller
+
+#### Features
+
+- Add support for nginx ingress controller (weighted traffic and A/B testing) [#170](https://github.com/weaveworks/flagger/pull/170)
+- Add Prometheus add-on to Flagger Helm chart for App Mesh and NGINX [79b3370](https://github.com/weaveworks/flagger/pull/170/commits/79b337089294a92961bc8446fd185b38c50a32df)
+
+#### Fixes
+
+- Fix duplicate hosts Istio error when using wildcards [#162](https://github.com/weaveworks/flagger/pull/162)
+
+## 0.12.0 (2019-04-29)
+
+Adds support for [SuperGloo](https://docs.flagger.app/install/flagger-install-with-supergloo)
+
+#### Features
+
+- Supergloo support for canary deployment (weighted traffic) [#151](https://github.com/weaveworks/flagger/pull/151)
+
 ## 0.11.1 (2019-04-18)
 
 Move Flagger and the load tester container images to Docker Hub

609

Gopkg.lock

generated

@@ -6,16 +6,32 @@

|

||||

name = "cloud.google.com/go"

|

||||

packages = ["compute/metadata"]

|

||||

pruneopts = "NUT"

|

||||

revision = "c9474f2f8deb81759839474b6bd1726bbfe1c1c4"

|

||||

version = "v0.36.0"

|

||||

revision = "fcb9a2d5f791d07be64506ab54434de65989d370"

|

||||

version = "v0.37.4"

|

||||

|

||||

[[projects]]

|

||||

branch = "master"

|

||||

digest = "1:f12358576cd79bba0ae626530d23cde63416744f486c8bc817802c6907eaadd7"

|

||||

name = "github.com/armon/go-metrics"

|

||||

packages = ["."]

|

||||

pruneopts = "NUT"

|

||||

revision = "f0300d1749da6fa982027e449ec0c7a145510c3c"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:13d5750ba049ce46bf931792803f1d5584b04026df9badea5931e33c22aa34ee"

|

||||

name = "github.com/avast/retry-go"

|

||||

packages = ["."]

|

||||

pruneopts = "NUT"

|

||||

revision = "08d411bf8302219fe47ca04dbdf9de892010c5e5"

|

||||

version = "v2.2.0"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:707ebe952a8b3d00b343c01536c79c73771d100f63ec6babeaed5c79e2b8a8dd"

|

||||

name = "github.com/beorn7/perks"

|

||||

packages = ["quantile"]

|

||||

pruneopts = "NUT"

|

||||

revision = "3a771d992973f24aa725d07868b467d1ddfceafb"

|

||||

revision = "4b2b341e8d7715fae06375aa633dbb6e91b3fb46"

|

||||

version = "v1.0.0"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:ffe9824d294da03b391f44e1ae8281281b4afc1bdaa9588c9097785e3af10cec"

|

||||

@@ -25,6 +41,14 @@

|

||||

revision = "8991bc29aa16c548c550c7ff78260e27b9ab7c73"

|

||||

version = "v1.1.1"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:32598368f409bbee79deb9d43569fcd92b9fb27f39155f5e166b3371217f051f"

|

||||

name = "github.com/evanphx/json-patch"

|

||||

packages = ["."]

|

||||

pruneopts = "NUT"

|

||||

revision = "72bf35d0ff611848c1dc9df0f976c81192392fa5"

|

||||

version = "v4.1.0"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:81466b4218bf6adddac2572a30ac733a9255919bc2f470b4827a317bd4ee1756"

|

||||

name = "github.com/ghodss/yaml"

|

||||

@@ -34,25 +58,20 @@

|

||||

version = "v1.0.0"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:a1b2a5e38f79688ee8250942d5fa960525fceb1024c855c7bc76fa77b0f3cca2"

|

||||

digest = "1:895d2773c9e78e595dd5f946a25383d579d3094a9d8d9306dba27359f190f275"

|

||||

name = "github.com/gogo/protobuf"

|

||||

packages = [

|

||||

"gogoproto",

|

||||

"jsonpb",

|

||||

"proto",

|

||||

"protoc-gen-gogo/descriptor",

|

||||

"sortkeys",

|

||||

"types",

|

||||

]

|

||||

pruneopts = "NUT"

|

||||

revision = "ba06b47c162d49f2af050fb4c75bcbc86a159d5c"

|

||||

version = "v1.2.1"

|

||||

|

||||

[[projects]]

|

||||

branch = "master"

|

||||

digest = "1:e0f096f9332ad5f84341de82db69fd098864b17c668333a1fbbffd1b846dcc2b"

|

||||

name = "github.com/golang/glog"

|

||||

packages = ["."]

|

||||

pruneopts = "NUT"

|

||||

revision = "2cc4b790554d1a0c48fcc3aeb891e3de70cf8de0"

|

||||

source = "github.com/istio/glog"

|

||||

|

||||

[[projects]]

|

||||

branch = "master"

|

||||

digest = "1:b7cb6054d3dff43b38ad2e92492f220f57ae6087ee797dca298139776749ace8"

|

||||

@@ -62,26 +81,35 @@

|

||||

revision = "5b532d6fd5efaf7fa130d4e859a2fde0fc3a9e1b"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:2d0636a8c490d2272dd725db26f74a537111b99b9dbdda0d8b98febe63702aa4"

|

||||

digest = "1:a98a0b00720dc3149bf3d0c8d5726188899e5bab2f5072b9a7ef82958fbc98b2"

|

||||

name = "github.com/golang/protobuf"

|

||||

packages = [

|

||||

"proto",

|

||||

"protoc-gen-go/descriptor",

|

||||

"ptypes",

|

||||

"ptypes/any",

|

||||

"ptypes/duration",

|

||||

"ptypes/timestamp",

|

||||

]

|

||||

pruneopts = "NUT"

|

||||

revision = "c823c79ea1570fb5ff454033735a8e68575d1d0f"

|

||||

version = "v1.3.0"

|

||||

revision = "b5d812f8a3706043e23a9cd5babf2e5423744d30"

|

||||

version = "v1.3.1"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:7f114b78210bf5b75f307fc97cff293633c835bab1e0ea8a744a44b39c042dfe"

|

||||

name = "github.com/golang/snappy"

|

||||

packages = ["."]

|

||||

pruneopts = "NUT"

|

||||

revision = "2a8bb927dd31d8daada140a5d09578521ce5c36a"

|

||||

version = "v0.0.1"

|

||||

|

||||

[[projects]]

|

||||

branch = "master"

|

||||

digest = "1:05f95ffdfcf651bdb0f05b40b69e7f5663047f8da75c72d58728acb59b5cc107"

|

||||

name = "github.com/google/btree"

|

||||

packages = ["."]

|

||||

pruneopts = "NUT"

|

||||

revision = "4030bb1f1f0c35b30ca7009e9ebd06849dd45306"

|

||||

version = "v1.0.0"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:d2754cafcab0d22c13541618a8029a70a8959eb3525ff201fe971637e2274cd0"

|

||||

@@ -98,12 +126,12 @@

|

||||

version = "v0.2.0"

|

||||

|

||||

[[projects]]

|

||||

branch = "master"

|

||||

digest = "1:52c5834e2bebac9030c97cc0798ac11c3aa8a39f098aeb419f142533da6cd3cc"

|

||||

name = "github.com/google/gofuzz"

|

||||

packages = ["."]

|

||||

pruneopts = "NUT"

|

||||

revision = "24818f796faf91cd76ec7bddd72458fbced7a6c1"

|

||||

revision = "f140a6486e521aad38f5917de355cbf147cc0496"

|

||||

version = "v1.0.0"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:06a7dadb7b760767341ffb6c8d377238d68a1226f2b21b5d497d2e3f6ecf6b4e"

|

||||

@@ -136,6 +164,70 @@

|

||||

revision = "b4df798d65426f8c8ab5ca5f9987aec5575d26c9"

|

||||

version = "v2.0.1"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:adf097b949dbc1e452fbad15322c78651f6e7accb4661dffa38fed30273c5966"

|

||||

name = "github.com/hashicorp/consul"

|

||||

packages = ["api"]

|

||||

pruneopts = "NUT"

|

||||

revision = "ea5210a30e154f4da9a4c8e729b45b8ce7b9b92c"

|

||||

version = "v1.4.4"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:f0d9d74edbd40fdeada436d5ac9cb5197407899af3fef85ff0137077ffe8ae19"

|

||||

name = "github.com/hashicorp/errwrap"

|

||||

packages = ["."]

|

||||

pruneopts = "NUT"

|

||||

revision = "8a6fb523712970c966eefc6b39ed2c5e74880354"

|

||||

version = "v1.0.0"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:fff05cb0c34d2decaeb27bb6ab6b73a6947c3009d725160070da55f9511fd410"

|

||||

name = "github.com/hashicorp/go-cleanhttp"

|

||||

packages = ["."]

|

||||

pruneopts = "NUT"

|

||||

revision = "eda1e5db218aad1db63ca4642c8906b26bcf2744"

|

||||

version = "v0.5.1"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:1cf16b098a70d6c02899608abbb567296d11c7b830635014dfe6124a02dc1369"

|

||||

name = "github.com/hashicorp/go-immutable-radix"

|

||||

packages = ["."]

|

||||

pruneopts = "NUT"

|

||||

revision = "27df80928bb34bb1b0d6d0e01b9e679902e7a6b5"

|

||||

version = "v1.0.0"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:2ed138049ab373f696db2081ca48f15c5abdf20893803612a284f2bdce2bf443"

|

||||

name = "github.com/hashicorp/go-multierror"

|

||||

packages = ["."]

|

||||

pruneopts = "NUT"

|

||||

revision = "886a7fbe3eb1c874d46f623bfa70af45f425b3d1"

|

||||

version = "v1.0.0"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:b3496707ba69dd873a870238644aa8ac259ee67fc4fd05caf37b608e7053e1f7"

|

||||

name = "github.com/hashicorp/go-retryablehttp"

|

||||

packages = ["."]

|

||||

pruneopts = "NUT"

|

||||

revision = "357460732517ec3b57c05c51443296bdd6df1874"

|

||||

version = "v0.5.3"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:cdb5ce76cd7af19e3d2d5ba9b6458a2ee804f0d376711215dd3df5f51100d423"

|

||||

name = "github.com/hashicorp/go-rootcerts"

|

||||

packages = ["."]

|

||||

pruneopts = "NUT"

|

||||

revision = "63503fb4e1eca22f9ae0f90b49c5d5538a0e87eb"

|

||||

version = "v1.0.0"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:6c69626c7aacae1e573084cdb6ed55713094ba56263f687e5d1750053bd08598"

|

||||

name = "github.com/hashicorp/go-sockaddr"

|

||||

packages = ["."]

|

||||

pruneopts = "NUT"

|

||||

revision = "c7188e74f6acae5a989bdc959aa779f8b9f42faf"

|

||||

version = "v1.0.2"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:52094d0f8bdf831d1a2401e9b6fee5795fdc0b2a2d1f8bb1980834c289e79129"

|

||||

name = "github.com/hashicorp/golang-lru"

|

||||

@@ -147,6 +239,48 @@

|

||||

revision = "7087cb70de9f7a8bc0a10c375cb0d2280a8edf9c"

|

||||

version = "v0.5.1"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:39f543569bf189e228c84a294c50aca8ea56c82b3d9df5c9b788249907d7049a"

|

||||

name = "github.com/hashicorp/hcl"

|

||||

packages = [

|

||||

".",

|

||||

"hcl/ast",

|

||||

"hcl/parser",

|

||||

"hcl/scanner",

|

||||

"hcl/strconv",

|

||||

"hcl/token",

|

||||

"json/parser",

|

||||

"json/scanner",

|

||||

"json/token",

|

||||

]

|

||||

pruneopts = "NUT"

|

||||

revision = "8cb6e5b959231cc1119e43259c4a608f9c51a241"

|

||||

version = "v1.0.0"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:acc81e4e4289587b257ccdfccbc6eaf16d4c2fb57dda73c6bb349bf50f02501f"

|

||||

name = "github.com/hashicorp/serf"

|

||||

packages = ["coordinate"]

|

||||

pruneopts = "NUT"

|

||||

revision = "15cfd05de3dffb3664aa37b06e91f970b825e380"

|

||||

version = "v0.8.3"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:cded54cacfb6fdc86b916031e4113cbc50dfb55e92535651733604f1e3a8ce59"

|

||||

name = "github.com/hashicorp/vault"

|

||||

packages = [

|

||||

"api",

|

||||

"helper/compressutil",

|

||||

"helper/consts",

|

||||

"helper/hclutil",

|

||||

"helper/jsonutil",

|

||||

"helper/parseutil",

|

||||

"helper/strutil",

|

||||

]

|

||||

pruneopts = "NUT"

|

||||

revision = "36aa8c8dd1936e10ebd7a4c1d412ae0e6f7900bd"

|

||||

version = "v1.1.0"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:aaa38889f11896ee3644d77e17dc7764cc47f5f3d3b488268df2af2b52541c5f"

|

||||

name = "github.com/imdario/mergo"

|

||||

@@ -156,19 +290,55 @@

|

||||

version = "v0.3.7"

|

||||

|

||||

[[projects]]

|

||||

branch = "master"

|

||||

digest = "1:e0f096f9332ad5f84341de82db69fd098864b17c668333a1fbbffd1b846dcc2b"

|

||||

name = "github.com/istio/glog"

|

||||

packages = ["."]

|

||||

pruneopts = "NUT"

|

||||

revision = "2cc4b790554d1a0c48fcc3aeb891e3de70cf8de0"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:0243cffa4a3410f161ee613dfdd903a636d07e838a42d341da95d81f42cd1d41"

|

||||

digest = "1:4e903242fe176238aaa469f59d7035f5abf2aa9acfefb8964ddd203651b574e9"

|

||||

name = "github.com/json-iterator/go"

|

||||

packages = ["."]

|

||||

pruneopts = "NUT"

|

||||

revision = "f2b4162afba35581b6d4a50d3b8f34e33c144682"

|

||||

revision = "0ff49de124c6f76f8494e194af75bde0f1a49a29"

|

||||

version = "v1.1.6"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:2760a8fe9b7bcc95c397bc85b69bc7a11eed03c644b45e8c00c581c114486d3f"

|

||||

name = "github.com/k0kubun/pp"

|

||||

packages = ["."]

|

||||

pruneopts = "NUT"

|

||||

revision = "3d73dea227e0711e38b911ffa6fbafc8ff6b2991"

|

||||

version = "v3.0.1"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:493282a1185f77368678d3886b7e999e37e920d22f69669545f1ee5ae10743a2"

|

||||

name = "github.com/linkerd/linkerd2"

|

||||

packages = [

|

||||

"controller/gen/apis/serviceprofile",

|

||||

"controller/gen/apis/serviceprofile/v1alpha1",

|

||||

]

|

||||

pruneopts = "NUT"

|

||||

revision = "5e47cb150a33150e5aeddc6672d8a64701a970de"

|

||||

version = "stable-2.2.1"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:0dbba7d4d4f3eeb01acd81af338ff4a3c4b0bb814d87368ea536e616f383240d"

|

||||

name = "github.com/lyft/protoc-gen-validate"

|

||||

packages = ["validate"]

|

||||

pruneopts = "NUT"

|

||||

revision = "ff6f7a9bc2e5fe006509b9f8c7594c41a953d50f"

|

||||

version = "v0.0.14"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:9785a54031460a402fab4e4bbb3124c8dd9e9f7b1982109fef605cb91632d480"

|

||||

name = "github.com/mattn/go-colorable"

|

||||

packages = ["."]

|

||||

pruneopts = "NUT"

|

||||

revision = "3a70a971f94a22f2fa562ffcc7a0eb45f5daf045"

|

||||

version = "v0.1.1"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:85edcc76fa95b8b312642905b56284f4fe5c42d8becb219481adba7e97d4f5c5"

|

||||

name = "github.com/mattn/go-isatty"

|

||||

packages = ["."]

|

||||

pruneopts = "NUT"

|

||||

revision = "c2a7a6ca930a4cd0bc33a3f298eb71960732a3a7"

|

||||

version = "v0.0.7"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:5985ef4caf91ece5d54817c11ea25f182697534f8ae6521eadcd628c142ac4b6"

|

||||

@@ -178,6 +348,30 @@

|

||||

revision = "c12348ce28de40eed0136aa2b644d0ee0650e56c"

|

||||

version = "v1.0.1"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:f9f72e583aaacf1d1ac5d6121abd4afd3c690baa9e14e1d009df26bf831ba347"

|

||||

name = "github.com/mitchellh/go-homedir"

|

||||

packages = ["."]

|

||||

pruneopts = "NUT"

|

||||

revision = "af06845cf3004701891bf4fdb884bfe4920b3727"

|

||||

version = "v1.1.0"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:e34decedbcec12332c5836d16a6838f864e0b43c5b4f9aa9d9a85101015f87c2"

|

||||

name = "github.com/mitchellh/hashstructure"

|

||||

packages = ["."]

|

||||

pruneopts = "NUT"

|

||||

revision = "a38c50148365edc8df43c1580c48fb2b3a1e9cd7"

|

||||

version = "v1.0.0"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:a45ae66dea4c899d79fceb116accfa1892105c251f0dcd9a217ddc276b42ec68"

|

||||

name = "github.com/mitchellh/mapstructure"

|

||||

packages = ["."]

|

||||

pruneopts = "NUT"

|

||||

revision = "3536a929edddb9a5b34bd6861dc4a9647cb459fe"

|

||||

version = "v1.1.2"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:2f42fa12d6911c7b7659738758631bec870b7e9b4c6be5444f963cdcfccc191f"

|

||||

name = "github.com/modern-go/concurrent"

|

||||

@@ -210,6 +404,25 @@

|

||||

revision = "5f041e8faa004a95c88a202771f4cc3e991971e6"

|

||||

version = "v2.0.1"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:122724025b9505074138089f78f543f643ae3a8fab6d5b9edf72cce4dd49cc91"

|

||||

name = "github.com/pierrec/lz4"

|

||||

packages = [

|

||||

".",

|

||||

"internal/xxh32",

|

||||

]

|

||||

pruneopts = "NUT"

|

||||

revision = "315a67e90e415bcdaff33057da191569bf4d8479"

|

||||

version = "v2.1.1"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:14715f705ff5dfe0ffd6571d7d201dd8e921030f8070321a79380d8ca4ec1a24"

|

||||

name = "github.com/pkg/errors"

|

||||

packages = ["."]

|

||||

pruneopts = "NUT"

|

||||

revision = "ba968bfe8b2f7e042a574c888954fccecfa385b4"

|

||||

version = "v0.8.1"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:03bca087b180bf24c4f9060775f137775550a0834e18f0bca0520a868679dbd7"

|

||||

name = "github.com/prometheus/client_golang"

|

||||

@@ -238,22 +451,118 @@

|

||||

"model",

|

||||

]

|

||||

pruneopts = "NUT"

|

||||

revision = "cfeb6f9992ffa54aaa4f2170ade4067ee478b250"

|

||||

version = "v0.2.0"

|

||||

revision = "a82f4c12f983cc2649298185f296632953e50d3e"

|

||||

version = "v0.3.0"

|

||||

|

||||

[[projects]]

|

||||

branch = "master"

|

||||

digest = "1:0a2e604afa3cbf53a1ddade2f240ee8472eded98856dd8c7cfbfea392ddbbfc7"

|

||||

digest = "1:7813f698f171bd7132b123364433e1b0362f7fdb4ed7f4a20df595a4c2410f8a"

|

||||

name = "github.com/prometheus/procfs"

|

||||

packages = ["."]

|

||||

pruneopts = "NUT"

|

||||

revision = "8368d24ba045f26503eb745b624d930cbe214c79"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:38969f56c08bdf302a73a7c8adb0520dc9cb4cd54206cbe7c8a147da52cc0890"

|

||||

name = "github.com/radovskyb/watcher"

|

||||

packages = ["."]

|

||||

pruneopts = "NUT"

|

||||

revision = "d8b41ca2397a9b5cfc26adb10edbbcde40187a87"

|

||||

version = "v1.0.6"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:09d61699d553a4e6ec998ad29816177b1f3d3ed0c18fe923d2c174ec065c99c8"

|

||||

name = "github.com/ryanuber/go-glob"

|

||||

packages = ["."]

|

||||

pruneopts = "NUT"

|

||||

revision = "51a8f68e6c24dc43f1e371749c89a267de4ebc53"

|

||||

version = "v1.0.0"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:cad7db5ed31bef1f9e4429ad0927b40dbf31535167ec4c768fd5985565111ea5"

|

||||

name = "github.com/solo-io/gloo"

|

||||

packages = [

|

||||

".",

|

||||

"internal/util",

|

||||

"iostats",

|

||||

"nfs",

|

||||

"xfs",

|

||||

"projects/gloo/pkg/api/v1",

|

||||

"projects/gloo/pkg/api/v1/plugins",

|

||||

"projects/gloo/pkg/api/v1/plugins/aws",

|

||||

"projects/gloo/pkg/api/v1/plugins/azure",

|

||||

"projects/gloo/pkg/api/v1/plugins/consul",

|

||||

"projects/gloo/pkg/api/v1/plugins/faultinjection",

|

||||

"projects/gloo/pkg/api/v1/plugins/grpc",

|

||||

"projects/gloo/pkg/api/v1/plugins/grpc_web",

|

||||

"projects/gloo/pkg/api/v1/plugins/hcm",

|

||||

"projects/gloo/pkg/api/v1/plugins/kubernetes",

|

||||

"projects/gloo/pkg/api/v1/plugins/rest",

|

||||

"projects/gloo/pkg/api/v1/plugins/retries",

|

||||

"projects/gloo/pkg/api/v1/plugins/static",

|

||||

"projects/gloo/pkg/api/v1/plugins/transformation",

|

||||

]

|

||||

pruneopts = "NUT"

|

||||

revision = "bbced9601137e764853b2fad7ec3e2dc4c504e02"

|

||||

revision = "f767e64f7ee60139ff79e2abb547cd149067da04"

|

||||

version = "v0.13.17"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:4a5b267a6929e4c3980066d745f87993c118f7797373a43537fdc69b3ee2d37e"

|

||||

name = "github.com/solo-io/go-utils"

|

||||

packages = [

|

||||

"contextutils",

|

||||

"errors",

|

||||

"kubeutils",

|

||||

]

|

||||

pruneopts = "NUT"

|

||||

revision = "a27432d89f419897df796a17456410e49a9727c3"

|

||||

version = "v0.7.11"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:1cc9a8be450b7d9e77f100c7662133bf7bc8f0832f4eed3542599d3f35d28c46"

|

||||

name = "github.com/solo-io/solo-kit"

|

||||

packages = [

|

||||

"pkg/api/v1/clients",

|

||||

"pkg/api/v1/clients/configmap",

|

||||

"pkg/api/v1/clients/consul",

|

||||

"pkg/api/v1/clients/factory",

|

||||

"pkg/api/v1/clients/file",

|

||||

"pkg/api/v1/clients/kube",

|

||||

"pkg/api/v1/clients/kube/cache",

|

||||

"pkg/api/v1/clients/kube/controller",

|

||||

"pkg/api/v1/clients/kube/crd",

|

||||

"pkg/api/v1/clients/kube/crd/client/clientset/versioned",

|

||||

"pkg/api/v1/clients/kube/crd/client/clientset/versioned/scheme",

|

||||

"pkg/api/v1/clients/kube/crd/client/clientset/versioned/typed/solo.io/v1",

|

||||

"pkg/api/v1/clients/kube/crd/solo.io/v1",

|

||||

"pkg/api/v1/clients/kubesecret",

|

||||

"pkg/api/v1/clients/memory",

|

||||

"pkg/api/v1/clients/vault",

|

||||

"pkg/api/v1/eventloop",

|

||||

"pkg/api/v1/reconcile",

|

||||

"pkg/api/v1/resources",

|

||||

"pkg/api/v1/resources/core",

|

||||

"pkg/errors",

|

||||

"pkg/utils/errutils",

|

||||

"pkg/utils/fileutils",

|

||||

"pkg/utils/hashutils",

|

||||

"pkg/utils/kubeutils",

|

||||

"pkg/utils/log",

|

||||

"pkg/utils/protoutils",

|

||||

"pkg/utils/stringutils",

|

||||

]

|

||||

pruneopts = "NUT"

|

||||

revision = "ab46647c2845a4830d09db3690b3ace1b06845cd"

|

||||

version = "v0.6.3"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:ba6f00e510774b2f1099d2f39a2ae36796ddbe406b02703b3395f26deb8d0f2c"

|

||||

name = "github.com/solo-io/supergloo"

|

||||

packages = [

|

||||

"api/custom/kubepod",

|

||||

"api/custom/linkerd",

|

||||

"pkg/api/external/istio/authorization/v1alpha1",

|

||||

"pkg/api/external/istio/networking/v1alpha3",

|

||||

"pkg/api/v1",

|

||||

]

|

||||

pruneopts = "NUT"

|

||||

revision = "cb84ba5d7bd1099c5e52c09fc9229d1ee0fed9f9"

|

||||

version = "v0.3.11"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:9d8420bbf131d1618bde6530af37c3799340d3762cc47210c1d9532a4c3a2779"

|

||||

@@ -263,6 +572,36 @@

|

||||

revision = "298182f68c66c05229eb03ac171abe6e309ee79a"

|

||||

version = "v1.0.3"

|

||||

|

||||

[[projects]]

|

||||

branch = "master"

|

||||

digest = "1:755d83f10748295646cf74cd19611ebffad37807e49632feb8e3f47d43210c3d"

|

||||

name = "github.com/stefanprodan/klog"

|

||||

packages = ["."]

|

||||

pruneopts = "NUT"

|

||||

revision = "9cbb78b20423182f9e5b2a214dd255f5e117d2d1"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:1349e632a9915b7075f74c13474bfcae2594750c390d3c0b236e48bf6bce3fa2"

|

||||

name = "go.opencensus.io"

|

||||

packages = [

|

||||

".",

|

||||

"internal",

|

||||

"internal/tagencoding",

|

||||

"metric/metricdata",

|

||||

"metric/metricproducer",

|

||||

"resource",

|

||||

"stats",

|

||||

"stats/internal",

|

||||

"stats/view",

|

||||

"tag",

|

||||

"trace",

|

||||

"trace/internal",

|

||||

"trace/tracestate",

|

||||

]

|

||||

pruneopts = "NUT"

|

||||

revision = "75c0cca22312e51bfd4fafdbe9197ae399e18b38"

|

||||

version = "v0.20.2"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:22f696cee54865fb8e9ff91df7b633f6b8f22037a8015253c6b6a71ca82219c7"

|

||||

name = "go.uber.org/atomic"

|

||||

@@ -296,15 +635,15 @@

|

||||

|

||||

[[projects]]

|

||||

branch = "master"

|

||||

digest = "1:058e9504b9a79bfe86092974d05bb3298d2aa0c312d266d43148de289a5065d9"

|

||||

digest = "1:bbe51412d9915d64ffaa96b51d409e070665efc5194fcf145c4a27d4133107a4"

|

||||

name = "golang.org/x/crypto"

|

||||

packages = ["ssh/terminal"]

|

||||

pruneopts = "NUT"

|

||||

revision = "8dd112bcdc25174059e45e07517d9fc663123347"

|

||||

revision = "b43e412143f90fca62516c457cae5a8dc1595586"

|

||||

|

||||

[[projects]]

|

||||

branch = "master"

|

||||

digest = "1:e3477b53a5c2fb71a7c9688e9b3d58be702807a5a88def8b9a327259d46e4979"

|

||||

digest = "1:c86c292c268416012ab237c22c2c69fdd04cb891815b40343e75210472198455"

|

||||

name = "golang.org/x/net"

|

||||

packages = [

|

||||

"context",

|

||||

@@ -315,11 +654,11 @@

|

||||

"idna",

|

||||

]

|

||||

pruneopts = "NUT"

|

||||

revision = "16b79f2e4e95ea23b2bf9903c9809ff7b013ce85"

|

||||

revision = "1da14a5a36f220ea3f03470682b737b1dfd5de22"

|

||||

|

||||

[[projects]]

|

||||

branch = "master"

|

||||

digest = "1:17ee74a4d9b6078611784b873cdbfe91892d2c73052c430724e66fcc015b6c7b"

|

||||

digest = "1:3121d742fbe48670a16d98b6da4693501fc33cd76d69ed6f35850c564f255c65"

|

||||

name = "golang.org/x/oauth2"

|

||||

packages = [

|

||||

".",

|

||||

@@ -329,18 +668,18 @@

|

||||

"jwt",

|

||||

]

|

||||

pruneopts = "NUT"

|

||||

revision = "e64efc72b421e893cbf63f17ba2221e7d6d0b0f3"

|

||||

revision = "9f3314589c9a9136388751d9adae6b0ed400978a"

|

||||

|

||||

[[projects]]

|

||||

branch = "master"

|

||||

digest = "1:a0d91ab4d23badd4e64e115c6e6ba7dd56bd3cde5d287845822fb2599ac10236"

|

||||

digest = "1:bd7da85408c51d6ab079e1acc5a2872fdfbea42e845b8bbb538c3fac6ef43d2a"

|

||||

name = "golang.org/x/sys"

|

||||

packages = [

|

||||

"unix",

|

||||

"windows",

|

||||

]

|

||||

pruneopts = "NUT"

|

||||

revision = "30e92a19ae4a77dde818b8c3d41d51e4850cba12"

|

||||

revision = "f0ce4c0180bef7e9c51babed693a6e47fdd8962f"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:e7071ed636b5422cc51c0e3a6cebc229d6c9fffc528814b519a980641422d619"

|

||||

@@ -371,16 +710,15 @@

|

||||

name = "golang.org/x/time"

|

||||

packages = ["rate"]

|

||||

pruneopts = "NUT"

|

||||

revision = "85acf8d2951cb2a3bde7632f9ff273ef0379bcbd"

|

||||

revision = "9d24e82272b4f38b78bc8cff74fa936d31ccd8ef"

|

||||

|

||||

[[projects]]

|

||||

branch = "master"

|

||||

digest = "1:e46d8e20161401a9cf8765dfa428494a3492a0b56fe114156b7da792bf41ba78"

|

||||

digest = "1:be1ab6d2b333b1d487c01f1328aef9dc76cee4ff4f780775a552d2a1653f0207"

|

||||

name = "golang.org/x/tools"

|

||||

packages = [

|

||||

"go/ast/astutil",

|

||||

"go/gcexportdata",

|

||||

"go/internal/cgo",

|

||||

"go/internal/gcimporter",

|

||||

"go/internal/packagesdriver",

|

||||

"go/packages",

|

||||

@@ -392,10 +730,10 @@

|

||||

"internal/semver",

|

||||

]

|

||||

pruneopts = "NUT"

|

||||

revision = "f8c04913dfb7b2339a756441456bdbe0af6eb508"

|

||||

revision = "6732636ccdfd99c4301d1d1ac2307f091331f767"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:d395d49d784dd3a11938a3e85091b6570664aa90ff2767a626565c6c130fa7e9"

|

||||

digest = "1:a4824d8df1fd1f63c6b3690bf4801d6ff1722adcb3e13c0489196a7e248d868a"

|

||||

name = "google.golang.org/appengine"

|

||||

packages = [

|

||||

".",

|

||||

@@ -410,8 +748,8 @@

|

||||

"urlfetch",

|

||||

]

|

||||

pruneopts = "NUT"

|

||||

revision = "e9657d882bb81064595ca3b56cbe2546bbabf7b1"

|

||||

version = "v1.4.0"

|

||||

revision = "54a98f90d1c46b7731eb8fb305d2a321c30ef610"

|

||||

version = "v1.5.0"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:fe9eb931d7b59027c4a3467f7edc16cc8552dac5328039bec05045143c18e1ce"

|

||||

@@ -438,7 +776,7 @@

|

||||

version = "v2.2.2"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:8960ef753a87391086a307122d23cd5007cee93c28189437e4f1b6ed72bffc50"

|

||||

digest = "1:c453ddc26bdab1e4267683a588ad9046e48d803a73f124fe2927adbab6ff02a5"

|

||||

name = "k8s.io/api"

|

||||

packages = [

|

||||

"admissionregistration/v1alpha1",

|

||||

@@ -446,16 +784,19 @@

|

||||

"apps/v1",

|

||||

"apps/v1beta1",

|

||||

"apps/v1beta2",

|

||||

"auditregistration/v1alpha1",

|

||||

"authentication/v1",

|

||||

"authentication/v1beta1",

|

||||

"authorization/v1",

|

||||

"authorization/v1beta1",

|

||||

"autoscaling/v1",

|

||||

"autoscaling/v2beta1",

|

||||

"autoscaling/v2beta2",

|

||||

"batch/v1",

|

||||

"batch/v1beta1",

|

||||

"batch/v2alpha1",

|

||||

"certificates/v1beta1",

|

||||

"coordination/v1beta1",

|

||||

"core/v1",

|

||||

"events/v1beta1",

|

||||

"extensions/v1beta1",

|

||||

@@ -472,11 +813,25 @@

|

||||

"storage/v1beta1",

|

||||

]

|

||||

pruneopts = "NUT"

|

||||

revision = "072894a440bdee3a891dea811fe42902311cd2a3"

|

||||

version = "kubernetes-1.11.0"

|

||||

revision = "05914d821849570fba9eacfb29466f2d8d3cd229"

|

||||

version = "kubernetes-1.13.1"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:83b01e3d6f85c4e911de84febd69a2d3ece614c5a4a518fbc2b5d59000645980"

|

||||

digest = "1:501a73762f1b2c4530206ffb657b39d8b58a9b40280d30e4509ae1232767962c"

|

||||

name = "k8s.io/apiextensions-apiserver"

|

||||

packages = [

|

||||

"pkg/apis/apiextensions",

|

||||

"pkg/apis/apiextensions/v1beta1",

|

||||

"pkg/client/clientset/clientset",

|

||||

"pkg/client/clientset/clientset/scheme",

|

||||

"pkg/client/clientset/clientset/typed/apiextensions/v1beta1",

|

||||

]

|

||||

pruneopts = "NUT"

|

||||

revision = "0fe22c71c47604641d9aa352c785b7912c200562"

|

||||

version = "kubernetes-1.13.1"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:5ac33dce66ac11d4f41c157be7f13ba30c968c74d25a3a3a0a1eddf44b6b2176"

|

||||

name = "k8s.io/apimachinery"

|

||||

packages = [

|

||||

"pkg/api/errors",

|

||||

@@ -508,6 +863,7 @@

|

||||

"pkg/util/intstr",

|

||||

"pkg/util/json",

|

||||

"pkg/util/mergepatch",

|

||||

"pkg/util/naming",

|

||||

"pkg/util/net",

|

||||

"pkg/util/runtime",

|

||||

"pkg/util/sets",

|

||||

@@ -523,15 +879,61 @@

|

||||

"third_party/forked/golang/reflect",

|

||||

]

|

||||

pruneopts = "NUT"

|

||||

revision = "103fd098999dc9c0c88536f5c9ad2e5da39373ae"

|

||||

version = "kubernetes-1.11.0"

|

||||

revision = "2b1284ed4c93a43499e781493253e2ac5959c4fd"

|

||||

version = "kubernetes-1.13.1"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:c7d6cf5e28c377ab4000b94b6b9ff562c4b13e7e8b948ad943f133c5104be011"

|

||||

digest = "1:ef9bda0e29102ac26750517500a2cb0bd7be69ba21ad267ab89a0b35d035328b"

|

||||

name = "k8s.io/client-go"

|

||||

packages = [

|

||||

"discovery",

|

||||

"discovery/fake",

|

||||

"informers",

|

||||

"informers/admissionregistration",

|

||||

"informers/admissionregistration/v1alpha1",

|

||||

"informers/admissionregistration/v1beta1",

|

||||

"informers/apps",

|

||||

"informers/apps/v1",

|

||||

"informers/apps/v1beta1",

|

||||

"informers/apps/v1beta2",

|

||||

"informers/auditregistration",

|

||||

"informers/auditregistration/v1alpha1",

|

||||

"informers/autoscaling",

|

||||

"informers/autoscaling/v1",

|

||||

"informers/autoscaling/v2beta1",

|

||||

"informers/autoscaling/v2beta2",

|

||||

"informers/batch",

|

||||

"informers/batch/v1",

|

||||

"informers/batch/v1beta1",

|

||||

"informers/batch/v2alpha1",

|

||||

"informers/certificates",

|

||||

"informers/certificates/v1beta1",

|

||||

"informers/coordination",

|

||||

"informers/coordination/v1beta1",

|

||||

"informers/core",

|

||||

"informers/core/v1",

|

||||

"informers/events",

|

||||

"informers/events/v1beta1",

|

||||

"informers/extensions",

|

||||

"informers/extensions/v1beta1",

|

||||

"informers/internalinterfaces",

|

||||

"informers/networking",

|

||||

"informers/networking/v1",

|

||||

"informers/policy",

|

||||

"informers/policy/v1beta1",

|

||||

"informers/rbac",

|

||||

"informers/rbac/v1",

|

||||

"informers/rbac/v1alpha1",

|

||||

"informers/rbac/v1beta1",

|

||||

"informers/scheduling",

|

||||

"informers/scheduling/v1alpha1",

|

||||

"informers/scheduling/v1beta1",

|

||||

"informers/settings",

|

||||

"informers/settings/v1alpha1",

|

||||

"informers/storage",

|

||||

"informers/storage/v1",

|

||||

"informers/storage/v1alpha1",

|

||||

"informers/storage/v1beta1",

|

||||

"kubernetes",

|

||||

"kubernetes/fake",

|

||||

"kubernetes/scheme",

|

||||

@@ -545,6 +947,8 @@

|

||||

"kubernetes/typed/apps/v1beta1/fake",

|

||||

"kubernetes/typed/apps/v1beta2",

|

||||

"kubernetes/typed/apps/v1beta2/fake",

|

||||

"kubernetes/typed/auditregistration/v1alpha1",

|

||||

"kubernetes/typed/auditregistration/v1alpha1/fake",

|

||||

"kubernetes/typed/authentication/v1",

|

||||

"kubernetes/typed/authentication/v1/fake",

|

||||

"kubernetes/typed/authentication/v1beta1",

|

||||

@@ -557,6 +961,8 @@

|

||||

"kubernetes/typed/autoscaling/v1/fake",

|

||||

"kubernetes/typed/autoscaling/v2beta1",

|

||||

"kubernetes/typed/autoscaling/v2beta1/fake",

|

||||

"kubernetes/typed/autoscaling/v2beta2",

|

||||

"kubernetes/typed/autoscaling/v2beta2/fake",

|

||||

"kubernetes/typed/batch/v1",

|

||||

"kubernetes/typed/batch/v1/fake",

|

||||

"kubernetes/typed/batch/v1beta1",

|

||||

@@ -565,6 +971,8 @@

|

||||

"kubernetes/typed/batch/v2alpha1/fake",

|

||||

"kubernetes/typed/certificates/v1beta1",

|

||||

"kubernetes/typed/certificates/v1beta1/fake",

|

||||

"kubernetes/typed/coordination/v1beta1",

|

||||

"kubernetes/typed/coordination/v1beta1/fake",

|

||||

"kubernetes/typed/core/v1",

|

||||

"kubernetes/typed/core/v1/fake",

|

||||

"kubernetes/typed/events/v1beta1",

|

||||

@@ -593,6 +1001,34 @@

|

||||

"kubernetes/typed/storage/v1alpha1/fake",

|

||||

"kubernetes/typed/storage/v1beta1",

|

||||

"kubernetes/typed/storage/v1beta1/fake",

|

||||

"listers/admissionregistration/v1alpha1",

|

||||

"listers/admissionregistration/v1beta1",

|

||||

"listers/apps/v1",

|

||||

"listers/apps/v1beta1",

|

||||

"listers/apps/v1beta2",

|

||||

"listers/auditregistration/v1alpha1",

|

||||

"listers/autoscaling/v1",

|

||||

"listers/autoscaling/v2beta1",

|

||||

"listers/autoscaling/v2beta2",

|

||||

"listers/batch/v1",

|

||||

"listers/batch/v1beta1",

|

||||

"listers/batch/v2alpha1",

|

||||

"listers/certificates/v1beta1",

|

||||

"listers/coordination/v1beta1",

|

||||

"listers/core/v1",

|

||||

"listers/events/v1beta1",

|

||||

"listers/extensions/v1beta1",

|

||||

"listers/networking/v1",

|

||||

"listers/policy/v1beta1",

|

||||

"listers/rbac/v1",

|

||||

"listers/rbac/v1alpha1",

|

||||

"listers/rbac/v1beta1",

|

||||

"listers/scheduling/v1alpha1",

|

||||

"listers/scheduling/v1beta1",

|

||||

"listers/settings/v1alpha1",

|

||||

"listers/storage/v1",

|

||||

"listers/storage/v1alpha1",

|

||||

"listers/storage/v1beta1",

|

||||

"pkg/apis/clientauthentication",

|

||||

"pkg/apis/clientauthentication/v1alpha1",

|

||||

"pkg/apis/clientauthentication/v1beta1",

|

||||

@@ -625,11 +1061,11 @@

|

||||

"util/workqueue",

|

||||

]

|

||||

pruneopts = "NUT"

|

||||

revision = "7d04d0e2a0a1a4d4a1cd6baa432a2301492e4e65"

|

||||

version = "kubernetes-1.11.0"

|

||||

revision = "8d9ed539ba3134352c586810e749e58df4e94e4f"

|

||||

version = "kubernetes-1.13.1"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:8ab487a323486c8bbbaa3b689850487fdccc6cbea8690620e083b2d230a4447e"

|

||||

digest = "1:dc1ae99dcab96913d81ae970b1f7a7411a54199b14bfb17a7e86f9a56979c720"

|

||||

name = "k8s.io/code-generator"

|

||||

packages = [

|

||||

"cmd/client-gen",

|

||||

@@ -653,12 +1089,12 @@

|

||||

"pkg/util",

|

||||

]

|

||||

pruneopts = "T"

|

||||

revision = "6702109cc68eb6fe6350b83e14407c8d7309fd1a"

|

||||

version = "kubernetes-1.11.0"

|

||||

revision = "c2090bec4d9b1fb25de3812f868accc2bc9ecbae"

|

||||

version = "kubernetes-1.13.1"

|

||||

|

||||

[[projects]]

|

||||

branch = "master"

|

||||

digest = "1:61024ed77a53ac618effed55043bf6a9afbdeb64136bd6a5b0c992d4c0363766"

|

||||

digest = "1:39912eb5f8eaf46486faae0839586c27c93423e552f76875defa048f52c15c15"

|

||||

name = "k8s.io/gengo"

|

||||

packages = [

|

||||

"args",

|

||||

@@ -671,23 +1107,32 @@

|

||||

"types",

|

||||

]

|

||||

pruneopts = "NUT"

|

||||

revision = "0689ccc1d7d65d9dd1bedcc3b0b1ed7df91ba266"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:c263611800c3a97991dbcf9d3bc4de390f6224aaa8ca0a7226a9d734f65a416a"

|

||||

name = "k8s.io/klog"

|

||||

packages = ["."]

|

||||

pruneopts = "NUT"

|

||||

revision = "71442cd4037d612096940ceb0f3fec3f7fff66e0"

|

||||

version = "v0.2.0"

|

||||

revision = "e17681d19d3ac4837a019ece36c2a0ec31ffe985"

|

||||

|

||||

[[projects]]

|

||||

branch = "master"

|

||||

digest = "1:03a96603922fc1f6895ae083e1e16d943b55ef0656b56965351bd87e7d90485f"

|

||||

digest = "1:755d83f10748295646cf74cd19611ebffad37807e49632feb8e3f47d43210c3d"

|

||||

name = "k8s.io/klog"

|

||||

packages = ["."]

|

||||

pruneopts = "NUT"

|

||||

revision = "9cbb78b20423182f9e5b2a214dd255f5e117d2d1"

|

||||

source = "github.com/stefanprodan/klog"

|

||||

|

||||

[[projects]]

|

||||

branch = "master"

|

||||

digest = "1:42674e29bf0cf4662d49bd9528e24b9ecc4895b32d0be281f9cf04d3a7671846"

|

||||

name = "k8s.io/kube-openapi"

|

||||

packages = ["pkg/util/proto"]

|

||||

pruneopts = "NUT"

|

||||

revision = "b3a7cee44a305be0a69e1b9ac03018307287e1b0"

|

||||

revision = "6b3d3b2d5666c5912bab8b7bf26bf50f75a8f887"

|

||||

|

||||

[[projects]]

|

||||

digest = "1:8730e0150dfb2b7e173890c8b9868e7a273082ef8e39f4940e3506a481cf895c"

|

||||

name = "sigs.k8s.io/yaml"

|

||||

packages = ["."]

|

||||

pruneopts = "NUT"

|

||||

revision = "fd68e9863619f6ec2fdd8625fe1f02e7c877e480"

|

||||

version = "v1.1.0"

|

||||

|

||||

[solve-meta]

|

||||

analyzer-name = "dep"

|

||||

@@ -695,9 +1140,17 @@

|

||||

input-imports = [

|

||||

"github.com/google/go-cmp/cmp",

|

||||

"github.com/google/go-cmp/cmp/cmpopts",

|

||||

"github.com/istio/glog",

|

||||

"github.com/prometheus/client_golang/prometheus",

|

||||

"github.com/prometheus/client_golang/prometheus/promhttp",

|

||||

"github.com/solo-io/gloo/projects/gloo/pkg/api/v1",

|

||||

"github.com/solo-io/solo-kit/pkg/api/v1/clients",

|

||||

"github.com/solo-io/solo-kit/pkg/api/v1/clients/factory",

|

||||

"github.com/solo-io/solo-kit/pkg/api/v1/clients/kube",

|

||||

"github.com/solo-io/solo-kit/pkg/api/v1/clients/memory",

|

||||

"github.com/solo-io/solo-kit/pkg/api/v1/resources/core",

|

||||

"github.com/solo-io/solo-kit/pkg/errors",

|

||||

"github.com/solo-io/supergloo/pkg/api/v1",

|

||||

"github.com/stefanprodan/klog",

|

||||

"go.uber.org/zap",

|

||||

"go.uber.org/zap/zapcore",

|

||||

"gopkg.in/h2non/gock.v1",

|

||||

|

||||

30

Gopkg.toml


@@ -21,25 +21,27 @@ required = [

|

||||

|

||||

[[override]]

|

||||

name = "k8s.io/api"

|

||||

version = "kubernetes-1.11.0"

|

||||

version = "kubernetes-1.13.1"

|

||||

|

||||

[[override]]

|

||||

name = "k8s.io/apimachinery"

|

||||

version = "kubernetes-1.11.0"

|

||||

version = "kubernetes-1.13.1"

|

||||

|

||||

[[override]]

|

||||

name = "k8s.io/code-generator"

|

||||

version = "kubernetes-1.11.0"

|

||||

version = "kubernetes-1.13.1"

|

||||

|

||||

[[override]]

|

||||

name = "k8s.io/client-go"

|

||||

version = "kubernetes-1.11.0"

|

||||

version = "kubernetes-1.13.1"

|

||||

|

||||

[[override]]

|

||||

name = "github.com/json-iterator/go"

|

||||

# This is the commit at which k8s depends on this in 1.11

|

||||

# It seems to be broken at HEAD.

|

||||

revision = "f2b4162afba35581b6d4a50d3b8f34e33c144682"

|

||||

name = "k8s.io/apiextensions-apiserver"

|

||||

version = "kubernetes-1.13.1"

|

||||

|

||||

[[override]]

|

||||

name = "k8s.io/apiserver"

|

||||

version = "kubernetes-1.13.1"

|

||||

|

||||

[[constraint]]

|

||||

name = "github.com/prometheus/client_golang"

|

||||

@@ -50,8 +52,8 @@ required = [

|

||||

version = "v0.2.0"

|

||||

|

||||

[[override]]

|

||||

name = "github.com/golang/glog"

|

||||

source = "github.com/istio/glog"

|

||||

name = "k8s.io/klog"

|

||||

source = "github.com/stefanprodan/klog"

|

||||

|

||||

[prune]

|

||||

go-tests = true

|

||||

@@ -62,3 +64,11 @@ required = [

|

||||

name = "k8s.io/code-generator"

|

||||

unused-packages = false

|

||||

non-go = false

|

||||

|

||||

[[constraint]]

|

||||

name = "github.com/solo-io/supergloo"

|

||||

version = "v0.3.11"

|

||||

|

||||

[[constraint]]

|

||||

name = "github.com/solo-io/solo-kit"

|

||||

version = "v0.6.3"

|

||||

|

||||

11

Makefile


@@ -4,6 +4,7 @@ VERSION_MINOR:=$(shell grep 'VERSION' pkg/version/version.go | awk '{ print $$4

|

||||

PATCH:=$(shell grep 'VERSION' pkg/version/version.go | awk '{ print $$4 }' | tr -d '"' | awk -F. '{print $$NF}')

|

||||

SOURCE_DIRS = cmd pkg/apis pkg/controller pkg/server pkg/logging pkg/version

|

||||

LT_VERSION?=$(shell grep 'VERSION' cmd/loadtester/main.go | awk '{ print $$4 }' | tr -d '"' | head -n1)

|

||||

TS=$(shell date +%Y-%m-%d_%H-%M-%S)

|

||||

|

||||

run:

|

||||

go run cmd/flagger/* -kubeconfig=$$HOME/.kube/config -log-level=info \

|

||||

@@ -17,12 +18,18 @@ run-appmesh:

|

||||

-slack-url=https://hooks.slack.com/services/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 \

|

||||

-slack-channel="devops-alerts"

|

||||

|

||||

run-nginx:

|

||||

go run cmd/flagger/* -kubeconfig=$$HOME/.kube/config -log-level=info -mesh-provider=nginx -namespace=nginx \

|

||||

-metrics-server=http://prometheus-weave.istio.weavedx.com \

|

||||

-slack-url=https://hooks.slack.com/services/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 \

|

||||

-slack-channel="devops-alerts"

|

||||

|

||||

build:

|

||||

docker build -t weaveworks/flagger:$(TAG) . -f Dockerfile

|

||||

|

||||

push:

|

||||

docker tag weaveworks/flagger:$(TAG) quay.io/weaveworks/flagger:$(VERSION)

|

||||

docker push quay.io/weaveworks/flagger:$(VERSION)

|

||||

docker tag weaveworks/flagger:$(TAG) weaveworks/flagger:$(VERSION)

|

||||

docker push weaveworks/flagger:$(VERSION)

|

||||

|

||||

fmt:

|

||||

gofmt -l -s -w $(SOURCE_DIRS)

|

||||

|

||||

26

README.md


@@ -7,7 +7,7 @@

|

||||

[](https://github.com/weaveworks/flagger/releases)

|

||||

|

||||

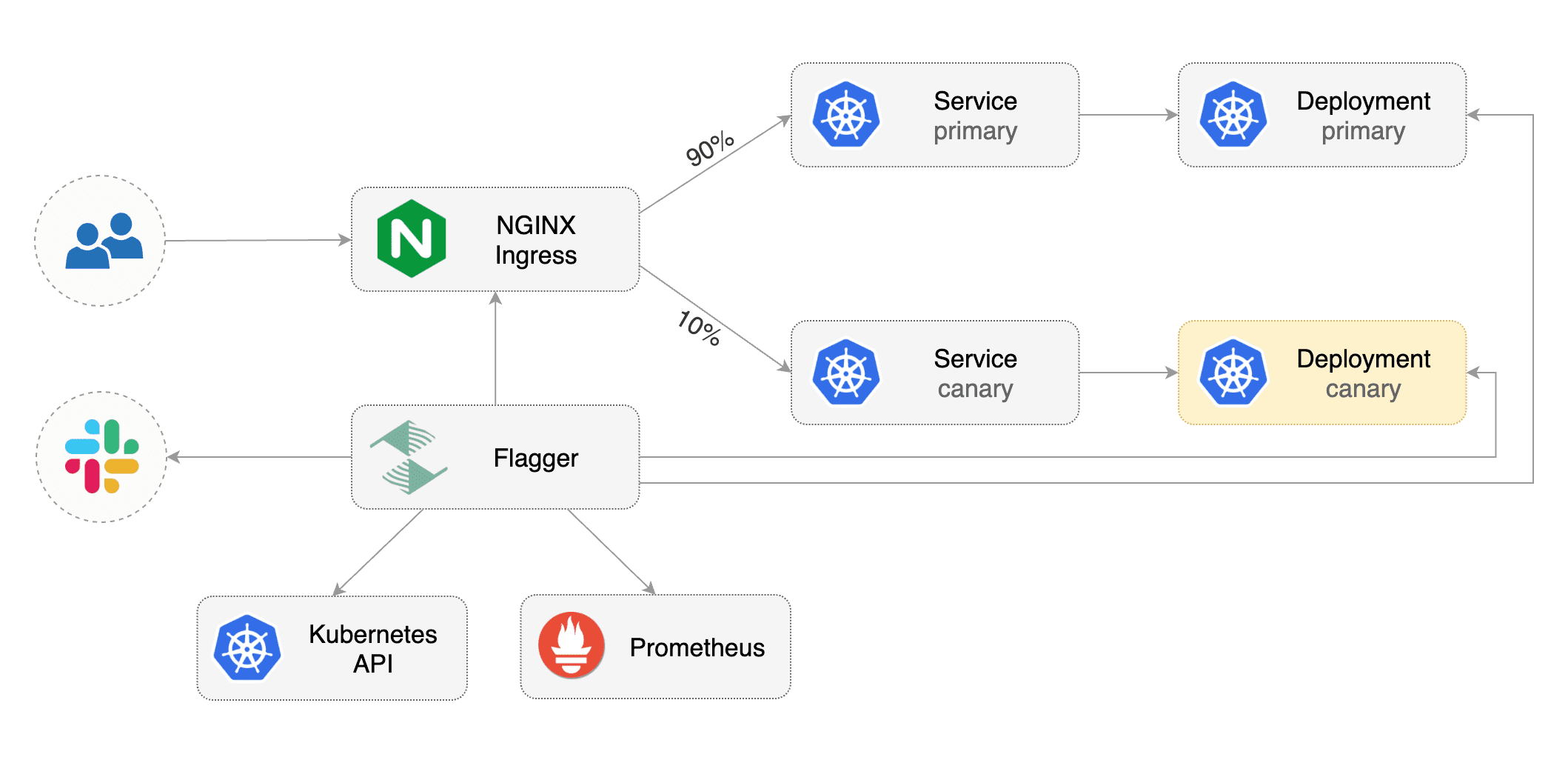

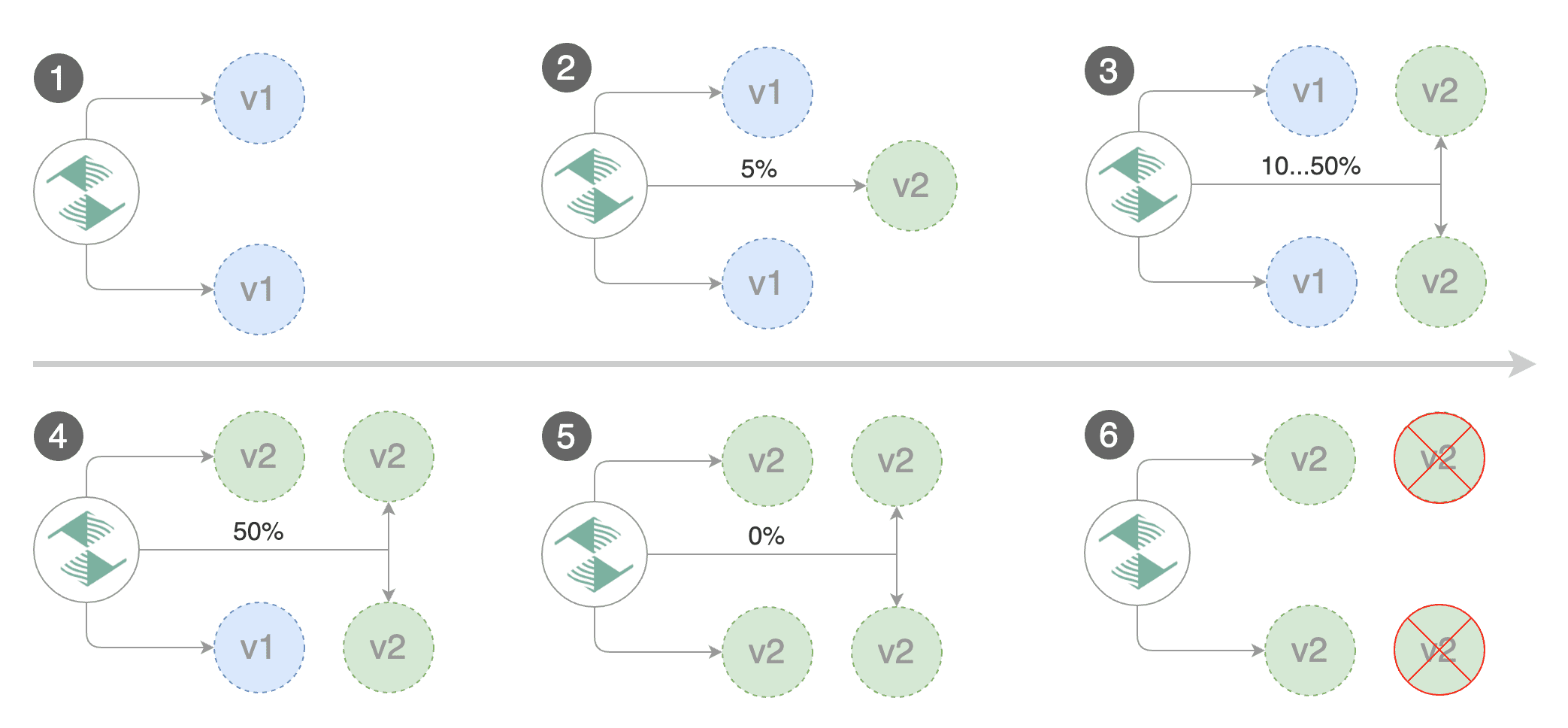

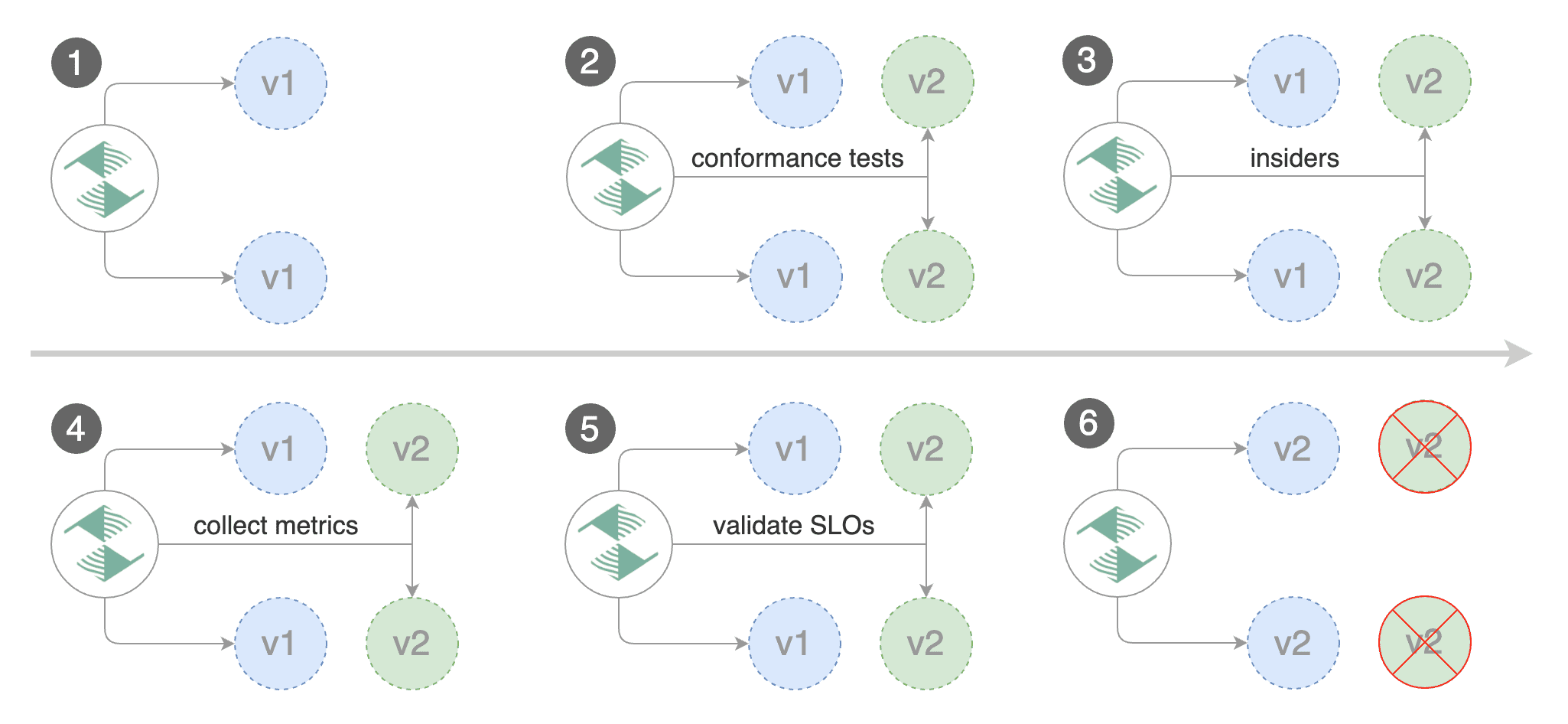

Flagger is a Kubernetes operator that automates the promotion of canary deployments

|

||||

using Istio or App Mesh routing for traffic shifting and Prometheus metrics for canary analysis.

|

||||

using Istio, App Mesh or NGINX routing for traffic shifting and Prometheus metrics for canary analysis.

|

||||

The canary analysis can be extended with webhooks for running acceptance tests,

|

||||

load tests or any other custom validation.

|

||||

|

||||

@@ -25,6 +25,7 @@ Flagger documentation can be found at [docs.flagger.app](https://docs.flagger.ap

|

||||

* [Flagger install on Kubernetes](https://docs.flagger.app/install/flagger-install-on-kubernetes)

|

||||

* [Flagger install on GKE Istio](https://docs.flagger.app/install/flagger-install-on-google-cloud)

|

||||

* [Flagger install on EKS App Mesh](https://docs.flagger.app/install/flagger-install-on-eks-appmesh)

|

||||

* [Flagger install with SuperGloo](https://docs.flagger.app/install/flagger-install-with-supergloo)

|

||||

* How it works

|

||||

* [Canary custom resource](https://docs.flagger.app/how-it-works#canary-custom-resource)

|

||||

* [Routing](https://docs.flagger.app/how-it-works#istio-routing)

|

||||

@@ -38,6 +39,7 @@ Flagger documentation can be found at [docs.flagger.app](https://docs.flagger.ap

|

||||

* [Istio canary deployments](https://docs.flagger.app/usage/progressive-delivery)

|

||||

* [Istio A/B testing](https://docs.flagger.app/usage/ab-testing)

|

||||

* [App Mesh canary deployments](https://docs.flagger.app/usage/appmesh-progressive-delivery)

|

||||

* [NGINX ingress controller canary deployments](https://docs.flagger.app/usage/nginx-progressive-delivery)

|

||||

* [Monitoring](https://docs.flagger.app/usage/monitoring)

|

||||

* [Alerting](https://docs.flagger.app/usage/alerting)

|

||||

* Tutorials

|

||||

@@ -152,20 +154,20 @@ For more details on how the canary analysis and promotion works please [read the

|

||||

|

||||

## Features

|

||||

|

||||

| Feature | Istio | App Mesh |
| -------------------------------------------- | ------------------ | ------------------ |
| Canary deployments (weighted traffic) | :heavy_check_mark: | :heavy_check_mark: |
| A/B testing (headers and cookies filters) | :heavy_check_mark: | :heavy_minus_sign: |
| Load testing | :heavy_check_mark: | :heavy_check_mark: |
| Webhooks (custom acceptance tests) | :heavy_check_mark: | :heavy_check_mark: |
| Request success rate check (Envoy metric) | :heavy_check_mark: | :heavy_check_mark: |
| Request duration check (Envoy metric) | :heavy_check_mark: | :heavy_minus_sign: |
| Custom promql checks | :heavy_check_mark: | :heavy_check_mark: |
| Ingress gateway (CORS, retries and timeouts) | :heavy_check_mark: | :heavy_minus_sign: |

|

||||

| Feature | Istio | App Mesh | SuperGloo | NGINX Ingress |
| -------------------------------------------- | ------------------ | ------------------ |------------------ |------------------ |
| Canary deployments (weighted traffic) | :heavy_check_mark: | :heavy_check_mark: | :heavy_check_mark: | :heavy_check_mark: |
| A/B testing (headers and cookies filters) | :heavy_check_mark: | :heavy_minus_sign: | :heavy_minus_sign: | :heavy_check_mark: |
| Load testing | :heavy_check_mark: | :heavy_check_mark: | :heavy_check_mark: | :heavy_check_mark: |
| Webhooks (custom acceptance tests) | :heavy_check_mark: | :heavy_check_mark: | :heavy_check_mark: | :heavy_check_mark: |
| Request success rate check (L7 metric) | :heavy_check_mark: | :heavy_check_mark: | :heavy_check_mark: | :heavy_check_mark: |
| Request duration check (L7 metric) | :heavy_check_mark: | :heavy_minus_sign: | :heavy_check_mark: | :heavy_check_mark: |
| Custom promql checks | :heavy_check_mark: | :heavy_check_mark: | :heavy_check_mark: | :heavy_check_mark: |
| Ingress gateway (CORS, retries and timeouts) | :heavy_check_mark: | :heavy_minus_sign: | :heavy_check_mark: | :heavy_check_mark: |

|

||||

|

||||

## Roadmap

|

||||

|

||||

* Integrate with other service mesh technologies like Linkerd v2, Super Gloo or Consul Mesh

|

||||

* Integrate with other service mesh technologies like Linkerd v2

|

||||

* Add support for comparing the canary metrics to the primary ones and do the validation based on the deviation between the two

|

||||

|

||||

## Contributing

|

||||

|

||||

@@ -31,6 +31,12 @@ rules:

|

||||

resources:

|

||||

- horizontalpodautoscalers

|

||||

verbs: ["*"]

|

||||

- apiGroups:

|

||||

- "extensions"

|

||||

resources:

|

||||

- ingresses

|

||||

- ingresses/status

|

||||

verbs: ["*"]

|

||||

- apiGroups:

|

||||

- flagger.app

|

||||

resources:

|

||||

|

||||

@@ -69,6 +69,18 @@ spec:

|

||||

type: string

|

||||

name:

|

||||

type: string

|

||||

ingressRef:

|

||||

anyOf:

|

||||

- type: string

|

||||

- type: object

|

||||

required: ['apiVersion', 'kind', 'name']

|

||||

properties:

|

||||

apiVersion:

|

||||

type: string

|

||||

kind:

|

||||

type: string

|

||||

name:

|

||||

type: string

|

||||

service:

|

||||

type: object

|

||||

required: ['port']

|

||||

|

||||

@@ -22,7 +22,7 @@ spec:

|

||||

serviceAccountName: flagger

|

||||

containers:

|

||||

- name: flagger

|

||||

image: weaveworks/flagger:0.11.1

|

||||

image: weaveworks/flagger:0.13.2

|

||||

imagePullPolicy: IfNotPresent

|

||||

ports:

|

||||

- name: http

|

||||

@@ -31,6 +31,7 @@ spec:

|

||||

- ./flagger

|

||||

- -log-level=info

|

||||

- -control-loop-interval=10s

|

||||

- -mesh-provider=$(MESH_PROVIDER)

|

||||

- -metrics-server=http://prometheus.istio-system.svc.cluster.local:9090

|

||||

livenessProbe:

|

||||

exec:

|

||||

|

||||

@@ -57,11 +57,11 @@ spec:

|

||||

securityContext:

|

||||

readOnlyRootFilesystem: true

|

||||

runAsUser: 10001

|

||||

volumeMounts:

|

||||

- name: tests

|

||||

mountPath: /bats

|

||||

readOnly: true

|

||||

volumes:

|

||||

- name: tests

|

||||

configMap:

|

||||

name: flagger-loadtester-bats

|

||||

# volumeMounts:

|

||||

# - name: tests

|

||||

# mountPath: /bats

|

||||

# readOnly: true

|

||||

# volumes:

|

||||

# - name: tests

|

||||

# configMap:

|

||||

# name: flagger-loadtester-bats

|

||||

68

artifacts/nginx/canary.yaml

Normal file


@@ -0,0 +1,68 @@

|

||||

apiVersion: flagger.app/v1alpha3
kind: Canary
metadata:
  name: podinfo
  namespace: test
spec:
  # deployment reference
  targetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: podinfo
  # ingress reference
  ingressRef:
    apiVersion: extensions/v1beta1
    kind: Ingress
    name: podinfo
  # HPA reference (optional)
  autoscalerRef:
    apiVersion: autoscaling/v2beta1
    kind: HorizontalPodAutoscaler
    name: podinfo
  # the maximum time in seconds for the canary deployment
  # to make progress before it is rolled back (default 600s)
  progressDeadlineSeconds: 60
  service:
    # container port
    port: 9898
  canaryAnalysis:
    # schedule interval (default 60s)
    interval: 10s
    # max number of failed metric checks before rollback
    threshold: 10
    # max traffic percentage routed to canary
    # percentage (0-100)
    maxWeight: 50
    # canary increment step
    # percentage (0-100)
    stepWeight: 5
    # NGINX Prometheus checks
    metrics:
    - name: request-success-rate
      # minimum req success rate (non 5xx responses)
      # percentage (0-100)
      threshold: 99
      interval: 1m
    - name: "latency"
      threshold: 0.5
      interval: 1m
      query: |
        histogram_quantile(0.99,
          sum(
            rate(
              http_request_duration_seconds_bucket{
                kubernetes_namespace="test",
                kubernetes_pod_name=~"podinfo-[0-9a-zA-Z]+(-[0-9a-zA-Z]+)"
              }[1m]
            )
          ) by (le)
        )
    # external checks (optional)
    webhooks:
      - name: load-test
        url: http://flagger-loadtester.test/
        timeout: 5s
        metadata:
          type: cmd
          cmd: "hey -z 1m -q 10 -c 2 http://app.example.com/"
          logCmdOutput: "true"

|

||||

69

artifacts/nginx/deployment.yaml

Normal file


@@ -0,0 +1,69 @@

|

||||

apiVersion: apps/v1

|

||||

kind: Deployment

|

||||

metadata:

|

||||

name: podinfo

|

||||

namespace: test

|

||||

labels:

|

||||

app: podinfo

|

||||

spec:

|

||||

replicas: 1

|

||||

strategy:

|

||||

rollingUpdate:

|

||||

maxUnavailable: 0

|

||||

type: RollingUpdate

|

||||

selector:

|

||||

matchLabels:

|

||||

app: podinfo

|

||||

template:

|

||||

metadata:

|

||||

annotations:

|

||||

prometheus.io/scrape: "true"

|

||||

labels:

|

||||

app: podinfo

|

||||

spec:

|

||||

containers:

|

||||

- name: podinfod

|

||||

image: quay.io/stefanprodan/podinfo:1.4.0

|

||||

imagePullPolicy: IfNotPresent

|

||||

ports:

|

||||

- containerPort: 9898

|

||||

name: http

|

||||

protocol: TCP

|

||||

command:

|

||||

- ./podinfo

|

||||

- --port=9898

|

||||

- --level=info

|

||||

- --random-delay=false

|

||||

- --random-error=false

|

||||

env:

|

||||

- name: PODINFO_UI_COLOR

|

||||

value: green

|

||||

livenessProbe:

|

||||

exec:

|

||||

command:

|

||||

- podcli

|

||||

- check

|

||||

- http

|

||||

- localhost:9898/healthz

|

||||

failureThreshold: 3

|

||||

periodSeconds: 10

|

||||

successThreshold: 1

|

||||

timeoutSeconds: 2

|

||||

readinessProbe:

|

||||

exec:

|

||||

command:

|

||||