mirror of

https://github.com/fluxcd/flagger.git

synced 2026-02-17 11:29:59 +00:00

Compare commits

22 Commits

| Author | SHA1 | Date |
|---|---|---|
| | 71137ba3bb | |
| | 6372c7dfcc | |
| | 4584733f6f | |
| | 03408683c0 | |
| | 29137ae75b | |
| | 6bf85526d0 | |
| | 9f6a30f43e | |
| | 11bc0390c4 | |
| | 9a29ea69d7 | |
| | 2d8adbaca4 | |
| | f3904ea099 | |
| | 1b2b13e77f | |
| | 8878f15806 | |
| | 5977ff9bae | |
| | 11ef6bdf37 | |
| | 9c342e35be | |
| | c7e7785b06 | |
| | 4cb5ceb48b | |
| | 5a79402a73 | |
| | c24b11ff8b | |
| | 042d3c1a5b | |
| | f8821cf30b | |

8

.codecov.yml

Normal file


@@ -0,0 +1,8 @@
+coverage:
+  status:
+    project:
+      default:
+        target: auto
+        threshold: 50
+        base: auto
+    patch: off

3

Makefile


@@ -13,7 +13,8 @@ build:
 	docker build -t stefanprodan/flagger:$(TAG) . -f Dockerfile
 
 push:
-	docker push stefanprodan/flagger:$(TAG)
+	docker tag stefanprodan/flagger:$(TAG) quay.io/stefanprodan/flagger:$(VERSION)
+	docker push quay.io/stefanprodan/flagger:$(VERSION)
 
 fmt:
 	gofmt -l -s -w $(SOURCE_DIRS)

23

README.md


@@ -84,6 +84,9 @@ spec:
     apiVersion: apps/v1
     kind: Deployment
     name: podinfo
+  # the maximum time in seconds for the canary deployment
+  # to make progress before it is rollback (default 600s)
+  progressDeadlineSeconds: 60
   # hpa reference (optional)
   autoscalerRef:
     apiVersion: autoscaling/v2beta1

@@ -364,7 +367,25 @@ helm upgrade -i flagger flagger/flagger \

|

||||

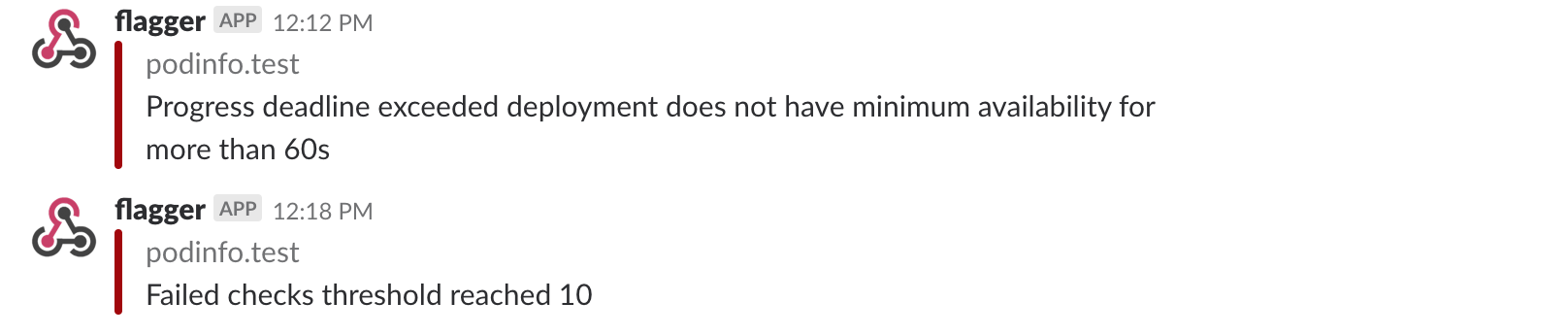

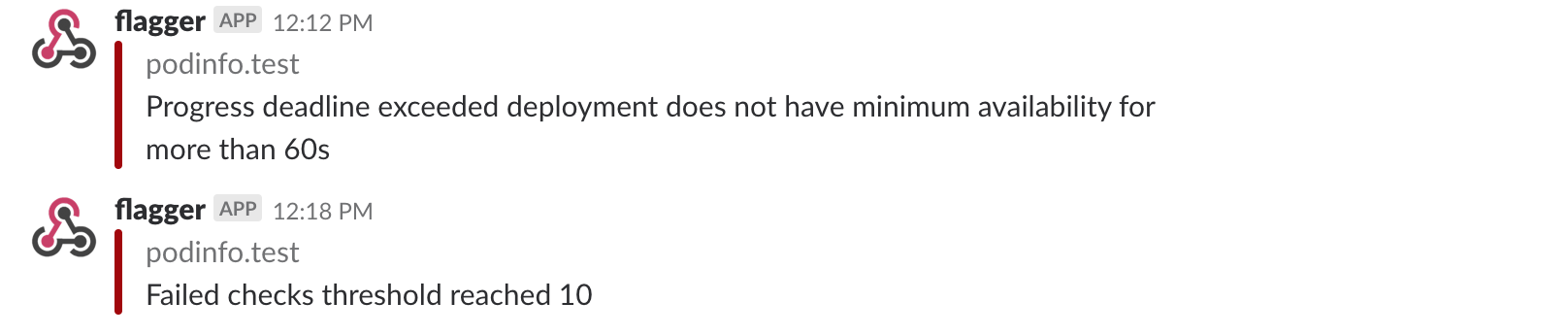

Once configured with a Slack incoming webhook, Flagger will post messages when a canary deployment has been initialized,

|

||||

when a new revision has been detected and if the canary analysis failed or succeeded.

|

||||

|

||||

|

||||

|

||||

|

||||

A canary deployment will be rolled back if the progress deadline exceeded or if the analysis

|

||||

reached the maximum number of failed checks:

|

||||

|

||||

|

||||

|

||||

Besides Slack, you can use Alertmanager to trigger alerts when a canary deployment failed:

|

||||

|

||||

```yaml

|

||||

- alert: canary_rollback

|

||||

expr: flagger_canary_status > 1

|

||||

for: 1m

|

||||

labels:

|

||||

severity: warning

|

||||

annotations:

|

||||

summary: "Canary failed"

|

||||

description: "Workload {{ $labels.name }} namespace {{ $labels.namespace }}"

|

||||

```

|

||||

|

||||

### Roadmap

|

||||

|

||||

|

||||

@@ -9,6 +9,9 @@ spec:
     apiVersion: apps/v1
     kind: Deployment
     name: podinfo
+  # the maximum time in seconds for the canary deployment
+  # to make progress before it is rollback (default 600s)
+  progressDeadlineSeconds: 60
   # HPA reference (optional)
   autoscalerRef:
     apiVersion: autoscaling/v2beta1

@@ -8,6 +8,7 @@ metadata:
 spec:
   minReadySeconds: 5
   revisionHistoryLimit: 5
+  progressDeadlineSeconds: 60
   strategy:
     rollingUpdate:
       maxUnavailable: 0

@@ -23,6 +23,8 @@ spec:
             - service
             - canaryAnalysis
           properties:
+            progressDeadlineSeconds:
+              type: number
             targetRef:
               properties:
                 apiVersion:

@@ -22,7 +22,7 @@ spec:
       serviceAccountName: flagger
       containers:
         - name: flagger
-          image: quay.io/stefanprodan/flagger:0.1.0
+          image: quay.io/stefanprodan/flagger:0.1.2
           imagePullPolicy: Always
           ports:
             - name: http

@@ -1,6 +1,6 @@
 apiVersion: v1
 name: flagger
-version: 0.1.0
-appVersion: 0.1.0
+version: 0.1.2
+appVersion: 0.1.2
 description: Flagger is a Kubernetes operator that automates the promotion of canary deployments using Istio routing for traffic shifting and Prometheus metrics for canary analysis.
 home: https://github.com/stefanprodan/flagger

@@ -26,6 +26,8 @@ spec:
             - service
             - canaryAnalysis
           properties:
+            progressDeadlineSeconds:
+              type: number
             targetRef:
               properties:
                 apiVersion:

@@ -2,7 +2,7 @@
 
 image:
   repository: quay.io/stefanprodan/flagger
-  tag: 0.1.0
+  tag: 0.1.2
   pullPolicy: IfNotPresent
 
 controlLoopInterval: "10s"

@@ -84,6 +84,9 @@ spec:
     apiVersion: apps/v1
     kind: Deployment
     name: podinfo
+  # the maximum time in seconds for the canary deployment
+  # to make progress before it is rollback (default 600s)
+  progressDeadlineSeconds: 60
   # hpa reference (optional)
   autoscalerRef:
     apiVersion: autoscaling/v2beta1

@@ -364,7 +367,25 @@ helm upgrade -i flagger flagger/flagger \
 Once configured with a Slack incoming webhook, Flagger will post messages when a canary deployment has been initialized,
 when a new revision has been detected and if the canary analysis failed or succeeded.
 
+
+A canary deployment will be rolled back if the progress deadline exceeded or if the analysis
+reached the maximum number of failed checks:
+
+
+Besides Slack, you can use Alertmanager to trigger alerts when a canary deployment failed:
+
+```yaml
+  - alert: canary_rollback
+    expr: flagger_canary_status > 1
+    for: 1m
+    labels:
+      severity: warning
+    annotations:
+      summary: "Canary failed"
+      description: "Workload {{ $labels.name }} namespace {{ $labels.namespace }}"
+```
 
 ### Roadmap

BIN

docs/flagger-0.1.1.tgz

Normal file

Binary file not shown.

BIN

docs/flagger-0.1.2.tgz

Normal file

Binary file not shown.

Binary file not shown.

@@ -1,9 +1,33 @@
 apiVersion: v1
 entries:
   flagger:
+  - apiVersion: v1
+    appVersion: 0.1.2
+    created: 2018-12-06T13:57:50.322474+07:00
+    description: Flagger is a Kubernetes operator that automates the promotion of
+      canary deployments using Istio routing for traffic shifting and Prometheus metrics
+      for canary analysis.
+    digest: a52bf1bf797d60d3d92f46f84805edbd1ffb7d87504727266f08543532ff5e08
+    home: https://github.com/stefanprodan/flagger
+    name: flagger
+    urls:
+    - https://stefanprodan.github.io/flagger/flagger-0.1.2.tgz
+    version: 0.1.2
+  - apiVersion: v1
+    appVersion: 0.1.1
+    created: 2018-12-06T13:57:50.322115+07:00
+    description: Flagger is a Kubernetes operator that automates the promotion of
+      canary deployments using Istio routing for traffic shifting and Prometheus metrics
+      for canary analysis.
+    digest: 2bb8f72fcf63a5ba5ecbaa2ab0d0446f438ec93fbf3a598cd7de45e64d8f9628
+    home: https://github.com/stefanprodan/flagger
+    name: flagger
+    urls:
+    - https://stefanprodan.github.io/flagger/flagger-0.1.1.tgz
+    version: 0.1.1
   - apiVersion: v1
     appVersion: 0.1.0
-    created: 2018-11-25T20:52:59.226156+02:00
+    created: 2018-12-06T13:57:50.321509+07:00
     description: Flagger is a Kubernetes operator that automates the promotion of
       canary deployments using Istio routing for traffic shifting and Prometheus metrics
       for canary analysis.

@@ -16,13 +40,13 @@ entries:
   grafana:
   - apiVersion: v1
     appVersion: 5.3.1
-    created: 2018-11-25T20:52:59.226488+02:00
+    created: 2018-12-06T13:57:50.323051+07:00
     description: A Grafana Helm chart for monitoring progressive deployments powered
       by Istio and Flagger
-    digest: 12ad252512006e91b6eb359c4e0c73e7f01f74f3c07c85bb1e66780bed6747f5
+    digest: 692b7c545214b652374249cc814d37decd8df4f915530e82dff4d9dfa25e8762
     home: https://github.com/stefanprodan/flagger
     name: grafana
     urls:
     - https://stefanprodan.github.io/flagger/grafana-0.1.0.tgz
     version: 0.1.0
-generated: 2018-11-25T20:52:59.225755+02:00
+generated: 2018-12-06T13:57:50.320726+07:00

BIN

docs/screens/slack-canary-failed.png

Normal file

Binary file not shown.

After: Size 78 KiB

BIN

docs/screens/slack-canary-success.png

Normal file

Binary file not shown.

After: Size 113 KiB

Binary file not shown.

Before: Size 114 KiB

@@ -21,7 +21,10 @@ import (
 	metav1 "k8s.io/apimachinery/pkg/apis/meta/v1"
 )
 
-const CanaryKind = "Canary"
+const (
+	CanaryKind              = "Canary"
+	ProgressDeadlineSeconds = 600
+)
 
 // +genclient
 // +k8s:deepcopy-gen:interfaces=k8s.io/apimachinery/pkg/runtime.Object

@@ -48,6 +51,10 @@ type CanarySpec struct {
 
 	// metrics and thresholds
 	CanaryAnalysis CanaryAnalysis `json:"canaryAnalysis"`
+
+	// the maximum time in seconds for a canary deployment to make progress
+	// before it is considered to be failed. Defaults to 60s.
+	ProgressDeadlineSeconds *int32 `json:"progressDeadlineSeconds,omitempty"`
 }
 
 // +k8s:deepcopy-gen:interfaces=k8s.io/apimachinery/pkg/runtime.Object

@@ -60,11 +67,21 @@ type CanaryList struct {
 	Items []Canary `json:"items"`
 }
 
+// CanaryState used for status state op
+type CanaryState string
+
+const (
+	CanaryRunning     CanaryState = "running"
+	CanaryFinished    CanaryState = "finished"
+	CanaryFailed      CanaryState = "failed"
+	CanaryInitialized CanaryState = "initialized"
+)
+
 // CanaryStatus is used for state persistence (read-only)
 type CanaryStatus struct {
-	State          string `json:"state"`
-	CanaryRevision string `json:"canaryRevision"`
-	FailedChecks   int    `json:"failedChecks"`
+	State          CanaryState `json:"state"`
+	CanaryRevision string      `json:"canaryRevision"`
+	FailedChecks   int         `json:"failedChecks"`
 	// +optional
 	LastTransitionTime metav1.Time `json:"lastTransitionTime,omitempty"`
 }

@@ -91,3 +108,11 @@ type CanaryMetric struct {
 	Interval  string `json:"interval"`
 	Threshold int    `json:"threshold"`
 }
+
+func (c *Canary) GetProgressDeadlineSeconds() int {
+	if c.Spec.ProgressDeadlineSeconds != nil {
+		return int(*c.Spec.ProgressDeadlineSeconds)
+	}
+
+	return ProgressDeadlineSeconds
+}

@@ -155,6 +155,11 @@ func (in *CanarySpec) DeepCopyInto(out *CanarySpec) {
 	out.AutoscalerRef = in.AutoscalerRef
 	in.Service.DeepCopyInto(&out.Service)
 	in.CanaryAnalysis.DeepCopyInto(&out.CanaryAnalysis)
+	if in.ProgressDeadlineSeconds != nil {
+		in, out := &in.ProgressDeadlineSeconds, &out.ProgressDeadlineSeconds
+		*out = new(int32)
+		**out = **in
+	}
 	return
 }

@@ -260,12 +260,36 @@ func (c *Controller) recordEventWarningf(r *flaggerv1.Canary, template string, a
 	c.eventRecorder.Event(r, corev1.EventTypeWarning, "Synced", fmt.Sprintf(template, args...))
 }
 
-func (c *Controller) sendNotification(workload string, namespace string, message string, warn bool) {
+func (c *Controller) sendNotification(cd *flaggerv1.Canary, message string, metadata bool, warn bool) {
 	if c.notifier == nil {
 		return
 	}
 
-	err := c.notifier.Post(workload, namespace, message, warn)
+	var fields []notifier.SlackField
+
+	if metadata {
+		fields = append(fields,
+			notifier.SlackField{
+				Title: "Target",
+				Value: fmt.Sprintf("%s/%s.%s", cd.Spec.TargetRef.Kind, cd.Spec.TargetRef.Name, cd.Namespace),
+			},
+			notifier.SlackField{
+				Title: "Traffic routing",
+				Value: fmt.Sprintf("Weight step: %v max: %v",
+					cd.Spec.CanaryAnalysis.StepWeight,
+					cd.Spec.CanaryAnalysis.MaxWeight),
+			},
+			notifier.SlackField{
+				Title: "Failed checks threshold",
+				Value: fmt.Sprintf("%v", cd.Spec.CanaryAnalysis.Threshold),
+			},
+			notifier.SlackField{
+				Title: "Progress deadline",
+				Value: fmt.Sprintf("%vs", cd.GetProgressDeadlineSeconds()),
+			},
+		)
+	}
+	err := c.notifier.Post(cd.Name, cd.Namespace, message, fields, warn)
 	if err != nil {
 		c.logger.Error(err)
 	}

@@ -4,6 +4,7 @@ import (
 	"encoding/base64"
 	"encoding/json"
 	"fmt"
+	"time"
 
 	"github.com/google/go-cmp/cmp"
 	"github.com/google/go-cmp/cmp/cmpopts"

@@ -48,6 +49,7 @@ func (c *CanaryDeployer) Promote(cd *flaggerv1.Canary) error {
 		return fmt.Errorf("deployment %s.%s query error %v", primaryName, cd.Namespace, err)
 	}
 
+	primary.Spec.ProgressDeadlineSeconds = canary.Spec.ProgressDeadlineSeconds
 	primary.Spec.MinReadySeconds = canary.Spec.MinReadySeconds
 	primary.Spec.RevisionHistoryLimit = canary.Spec.RevisionHistoryLimit
 	primary.Spec.Strategy = canary.Spec.Strategy

@@ -61,37 +63,58 @@ func (c *CanaryDeployer) Promote(cd *flaggerv1.Canary) error {
 	return nil
 }
 
-// IsReady checks the primary and canary deployment status and returns an error if
-// the deployments are in the middle of a rolling update or if the pods are unhealthy
-func (c *CanaryDeployer) IsReady(cd *flaggerv1.Canary) error {
-	canary, err := c.kubeClient.AppsV1().Deployments(cd.Namespace).Get(cd.Spec.TargetRef.Name, metav1.GetOptions{})
-	if err != nil {
-		if errors.IsNotFound(err) {
-			return fmt.Errorf("deployment %s.%s not found", cd.Spec.TargetRef.Name, cd.Namespace)
-		}
-		return fmt.Errorf("deployment %s.%s query error %v", cd.Spec.TargetRef.Name, cd.Namespace, err)
-	}
-	if msg, healthy := c.getDeploymentStatus(canary); !healthy {
-		return fmt.Errorf("Halt %s.%s advancement %s", cd.Name, cd.Namespace, msg)
-	}
-
+// IsPrimaryReady checks the primary deployment status and returns an error if
+// the deployment is in the middle of a rolling update or if the pods are unhealthy
+// it will return a non retriable error if the rolling update is stuck
+func (c *CanaryDeployer) IsPrimaryReady(cd *flaggerv1.Canary) (bool, error) {
 	primaryName := fmt.Sprintf("%s-primary", cd.Spec.TargetRef.Name)
 	primary, err := c.kubeClient.AppsV1().Deployments(cd.Namespace).Get(primaryName, metav1.GetOptions{})
 	if err != nil {
 		if errors.IsNotFound(err) {
-			return fmt.Errorf("deployment %s.%s not found", primaryName, cd.Namespace)
+			return true, fmt.Errorf("deployment %s.%s not found", primaryName, cd.Namespace)
 		}
-		return fmt.Errorf("deployment %s.%s query error %v", primaryName, cd.Namespace, err)
+		return true, fmt.Errorf("deployment %s.%s query error %v", primaryName, cd.Namespace, err)
 	}
-	if msg, healthy := c.getDeploymentStatus(primary); !healthy {
-		return fmt.Errorf("Halt %s.%s advancement %s", cd.Name, cd.Namespace, msg)
+
+	retriable, err := c.isDeploymentReady(primary, cd.GetProgressDeadlineSeconds())
+	if err != nil {
+		if retriable {
+			return retriable, fmt.Errorf("Halt %s.%s advancement %s", cd.Name, cd.Namespace, err.Error())
+		} else {
+			return retriable, err
+		}
 	}
 
 	if primary.Spec.Replicas == int32p(0) {
-		return fmt.Errorf("halt %s.%s advancement %s",
-			cd.Name, cd.Namespace, "primary deployment is scaled to zero")
+		return true, fmt.Errorf("halt %s.%s advancement primary deployment is scaled to zero",
+			cd.Name, cd.Namespace)
 	}
-	return nil
+	return true, nil
+}
+
+// IsCanaryReady checks the primary deployment status and returns an error if
+// the deployment is in the middle of a rolling update or if the pods are unhealthy
+// it will return a non retriable error if the rolling update is stuck
+func (c *CanaryDeployer) IsCanaryReady(cd *flaggerv1.Canary) (bool, error) {
+	canary, err := c.kubeClient.AppsV1().Deployments(cd.Namespace).Get(cd.Spec.TargetRef.Name, metav1.GetOptions{})
+	if err != nil {
+		if errors.IsNotFound(err) {
+			return true, fmt.Errorf("deployment %s.%s not found", cd.Spec.TargetRef.Name, cd.Namespace)
+		}
+		return true, fmt.Errorf("deployment %s.%s query error %v", cd.Spec.TargetRef.Name, cd.Namespace, err)
+	}
+
+	retriable, err := c.isDeploymentReady(canary, cd.GetProgressDeadlineSeconds())
+	if err != nil {
+		if retriable {
+			return retriable, fmt.Errorf("Halt %s.%s advancement %s", cd.Name, cd.Namespace, err.Error())
+		} else {
+			return retriable, fmt.Errorf("deployment does not have minimum availability for more than %vs",
+				cd.GetProgressDeadlineSeconds())
+		}
+	}
+
+	return true, nil
 }
 
 // IsNewSpec returns true if the canary deployment pod spec has changed
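One detail in `IsPrimaryReady` is worth a closer look: the context line `primary.Spec.Replicas == int32p(0)` compares two pointers, not two integers. The diff does not show `int32p`; assuming it returns a pointer to a fresh `int32` (the usual shape of such a helper), the comparison is false even when the deployment is scaled to zero. The sketch below illustrates the difference; `int32p` is re-declared here as a hypothetical helper and `scaledToZero` is an illustrative name, not a function from the repository.

```go
package main

import "fmt"

// int32p is a hypothetical re-declaration; the real helper is not shown in the diff.
// It returns a pointer to a new int32 holding i.
func int32p(i int32) *int32 { return &i }

// scaledToZero dereferences the pointer before comparing, and treats
// nil (replicas not set) as "not scaled to zero".
func scaledToZero(replicas *int32) bool {
	return replicas != nil && *replicas == 0
}

func main() {
	replicas := int32p(0)

	// Pointer comparison: two distinct allocations never compare equal,
	// regardless of the values they point to.
	fmt.Println(replicas == int32p(0))

	// Value comparison: this is the check the error message describes.
	fmt.Println(scaledToZero(replicas))
}
```

Under that assumption, the "primary deployment is scaled to zero" branch would not be reached; comparing the dereferenced value is one way to make the check effective.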

@@ -139,7 +162,7 @@ func (c *CanaryDeployer) SetFailedChecks(cd *flaggerv1.Canary, val int) error {
 }
 
 // SetState updates the canary status state
-func (c *CanaryDeployer) SetState(cd *flaggerv1.Canary, state string) error {
+func (c *CanaryDeployer) SetState(cd *flaggerv1.Canary, state flaggerv1.CanaryState) error {
 	cd.Status.State = state
 	cd.Status.LastTransitionTime = metav1.Now()
 	cd, err := c.flaggerClient.FlaggerV1alpha1().Canaries(cd.Namespace).Update(cd)

@@ -244,10 +267,11 @@ func (c *CanaryDeployer) createPrimaryDeployment(cd *flaggerv1.Canary) error {
 			},
 		},
 		Spec: appsv1.DeploymentSpec{
-			MinReadySeconds:      canaryDep.Spec.MinReadySeconds,
-			RevisionHistoryLimit: canaryDep.Spec.RevisionHistoryLimit,
-			Replicas:             canaryDep.Spec.Replicas,
-			Strategy:             canaryDep.Spec.Strategy,
+			ProgressDeadlineSeconds: canaryDep.Spec.ProgressDeadlineSeconds,
+			MinReadySeconds:         canaryDep.Spec.MinReadySeconds,
+			RevisionHistoryLimit:    canaryDep.Spec.RevisionHistoryLimit,
+			Replicas:                canaryDep.Spec.Replicas,
+			Strategy:                canaryDep.Spec.Strategy,
 			Selector: &metav1.LabelSelector{
 				MatchLabels: map[string]string{
 					"app": primaryName,

@@ -322,26 +346,41 @@ func (c *CanaryDeployer) createPrimaryHpa(cd *flaggerv1.Canary) error {
 	return nil
 }
 
-func (c *CanaryDeployer) getDeploymentStatus(deployment *appsv1.Deployment) (string, bool) {
+// isDeploymentReady determines if a deployment is ready by checking the status conditions
+// if a deployment has exceeded the progress deadline it returns a non retriable error
+func (c *CanaryDeployer) isDeploymentReady(deployment *appsv1.Deployment, deadline int) (bool, error) {
+	retriable := true
 	if deployment.Generation <= deployment.Status.ObservedGeneration {
-		cond := c.getDeploymentCondition(deployment.Status, appsv1.DeploymentProgressing)
-		if cond != nil && cond.Reason == "ProgressDeadlineExceeded" {
-			return fmt.Sprintf("deployment %q exceeded its progress deadline", deployment.GetName()), false
-		} else if deployment.Spec.Replicas != nil && deployment.Status.UpdatedReplicas < *deployment.Spec.Replicas {
-			return fmt.Sprintf("waiting for rollout to finish: %d out of %d new replicas have been updated",
-				deployment.Status.UpdatedReplicas, *deployment.Spec.Replicas), false
-		} else if deployment.Status.Replicas > deployment.Status.UpdatedReplicas {
-			return fmt.Sprintf("waiting for rollout to finish: %d old replicas are pending termination",
-				deployment.Status.Replicas-deployment.Status.UpdatedReplicas), false
-		} else if deployment.Status.AvailableReplicas < deployment.Status.UpdatedReplicas {
-			return fmt.Sprintf("waiting for rollout to finish: %d of %d updated replicas are available",
-				deployment.Status.AvailableReplicas, deployment.Status.UpdatedReplicas), false
+		progress := c.getDeploymentCondition(deployment.Status, appsv1.DeploymentProgressing)
+		if progress != nil {
+			// Determine if the deployment is stuck by checking if there is a minimum replicas unavailable condition
+			// and if the last update time exceeds the deadline
+			available := c.getDeploymentCondition(deployment.Status, appsv1.DeploymentAvailable)
+			if available != nil && available.Status == "False" && available.Reason == "MinimumReplicasUnavailable" {
+				from := available.LastUpdateTime
+				delta := time.Duration(deadline) * time.Second
+				retriable = !from.Add(delta).Before(time.Now())
+			}
+		}
+
+		if progress != nil && progress.Reason == "ProgressDeadlineExceeded" {
+			return false, fmt.Errorf("deployment %q exceeded its progress deadline", deployment.GetName())
+		} else if deployment.Spec.Replicas != nil && deployment.Status.UpdatedReplicas < *deployment.Spec.Replicas {
+			return retriable, fmt.Errorf("waiting for rollout to finish: %d out of %d new replicas have been updated",
+				deployment.Status.UpdatedReplicas, *deployment.Spec.Replicas)
+		} else if deployment.Status.Replicas > deployment.Status.UpdatedReplicas {
+			return retriable, fmt.Errorf("waiting for rollout to finish: %d old replicas are pending termination",
+				deployment.Status.Replicas-deployment.Status.UpdatedReplicas)
+		} else if deployment.Status.AvailableReplicas < deployment.Status.UpdatedReplicas {
+			return retriable, fmt.Errorf("waiting for rollout to finish: %d of %d updated replicas are available",
+				deployment.Status.AvailableReplicas, deployment.Status.UpdatedReplicas)
 		}
+
 	} else {
-		return "waiting for rollout to finish: observed deployment generation less then desired generation", false
+		return true, fmt.Errorf("waiting for rollout to finish: observed deployment generation less then desired generation")
 	}
 
-	return "ready", true
+	return true, nil
 }
 
 func (c *CanaryDeployer) getDeploymentCondition(

@@ -319,7 +319,12 @@ func TestCanaryDeployer_IsReady(t *testing.T) {
 		t.Fatal(err.Error())
 	}
 
-	err = deployer.IsReady(canary)
+	_, err = deployer.IsPrimaryReady(canary)
+	if err != nil {
+		t.Fatal(err.Error())
+	}
+
+	_, err = deployer.IsCanaryReady(canary)
 	if err != nil {
 		t.Fatal(err.Error())
 	}

@@ -382,7 +387,7 @@ func TestCanaryDeployer_SetState(t *testing.T) {
 		t.Fatal(err.Error())
 	}
 
-	err = deployer.SetState(canary, "running")
+	err = deployer.SetState(canary, v1alpha1.CanaryRunning)
 	if err != nil {
 		t.Fatal(err.Error())
 	}

@@ -392,8 +397,8 @@ func TestCanaryDeployer_SetState(t *testing.T) {
 		t.Fatal(err.Error())
 	}
 
-	if res.Status.State != "running" {
-		t.Errorf("Got %v wanted %v", res.Status.State, "running")
+	if res.Status.State != v1alpha1.CanaryRunning {
+		t.Errorf("Got %v wanted %v", res.Status.State, v1alpha1.CanaryRunning)
 	}
 }

@@ -419,7 +424,7 @@ func TestCanaryDeployer_SyncStatus(t *testing.T) {
 	}
 
 	status := v1alpha1.CanaryStatus{
-		State:        "running",
+		State:        v1alpha1.CanaryRunning,
 		FailedChecks: 2,
 	}
 	err = deployer.SyncStatus(canary, status)

@@ -73,9 +73,9 @@ func (cr *CanaryRecorder) SetTotal(namespace string, total int) {
 func (cr *CanaryRecorder) SetStatus(cd *flaggerv1.Canary) {
 	status := 1
 	switch cd.Status.State {
-	case "running":
+	case flaggerv1.CanaryRunning:
 		status = 0
-	case "failed":
+	case flaggerv1.CanaryFailed:
 		status = 2
 	default:
 		status = 1
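`SetStatus` maps the typed canary state to a small integer: 0 for running, 2 for failed, 1 for everything else. Assuming this value is what the `flagger_canary_status` gauge exposes (the diff does not show the metric registration), the README alert expression `flagger_canary_status > 1` would match only failed canaries. A minimal sketch of the mapping, with hypothetical lower-case names standing in for the `flaggerv1` types:

```go
package main

import "fmt"

// canaryState mirrors the CanaryState string type added in the diff.
type canaryState string

const (
	canaryRunning     canaryState = "running"
	canaryFinished    canaryState = "finished"
	canaryFailed      canaryState = "failed"
	canaryInitialized canaryState = "initialized"
)

// statusValue reproduces the switch in SetStatus:
// running -> 0, failed -> 2, anything else -> 1.
func statusValue(s canaryState) int {
	switch s {
	case canaryRunning:
		return 0
	case canaryFailed:
		return 2
	default:
		return 1
	}
}

// alertFires mirrors the README alert expression `flagger_canary_status > 1`.
func alertFires(value int) bool {
	return value > 1
}

func main() {
	for _, s := range []canaryState{canaryInitialized, canaryRunning, canaryFinished, canaryFailed} {
		v := statusValue(s)
		fmt.Printf("%-12s value=%d alert=%v\n", s, v, alertFires(v))
	}
}
```

Switching on typed constants instead of string literals means a misspelled state name fails at compile time rather than silently falling into the `default` branch.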

@@ -56,8 +56,8 @@ func (c *Controller) advanceCanary(name string, namespace string) {
 		maxWeight = cd.Spec.CanaryAnalysis.MaxWeight
 	}
 
-	// check primary and canary deployments status
-	if err := c.deployer.IsReady(cd); err != nil {
+	// check primary deployment status
+	if _, err := c.deployer.IsPrimaryReady(cd); err != nil {
 		c.recordEventWarningf(cd, "%v", err)
 		return
 	}

@@ -81,10 +81,30 @@ func (c *Controller) advanceCanary(name string, namespace string) {
 		c.recorder.SetDuration(cd, time.Since(begin))
 	}()
 
+	// check canary deployment status
+	retriable, err := c.deployer.IsCanaryReady(cd)
+	if err != nil && retriable {
+		c.recordEventWarningf(cd, "%v", err)
+		return
+	}
+
 	// check if the number of failed checks reached the threshold
-	if cd.Status.State == "running" && cd.Status.FailedChecks >= cd.Spec.CanaryAnalysis.Threshold {
-		c.recordEventWarningf(cd, "Rolling back %s.%s failed checks threshold reached %v",
-			cd.Name, cd.Namespace, cd.Status.FailedChecks)
+	if cd.Status.State == flaggerv1.CanaryRunning &&
+		(!retriable || cd.Status.FailedChecks >= cd.Spec.CanaryAnalysis.Threshold) {
+
+		if cd.Status.FailedChecks >= cd.Spec.CanaryAnalysis.Threshold {
+			c.recordEventWarningf(cd, "Rolling back %s.%s failed checks threshold reached %v",
+				cd.Name, cd.Namespace, cd.Status.FailedChecks)
+			c.sendNotification(cd, fmt.Sprintf("Failed checks threshold reached %v", cd.Status.FailedChecks),
+				false, true)
+		}
+
+		if !retriable {
+			c.recordEventWarningf(cd, "Rolling back %s.%s progress deadline exceeded %v",
+				cd.Name, cd.Namespace, err)
+			c.sendNotification(cd, fmt.Sprintf("Progress deadline exceeded %v", err),
+				false, true)
+		}
 
 		// route all traffic back to primary
 		primaryRoute.Weight = 100

@@ -96,7 +116,7 @@ func (c *Controller) advanceCanary(name string, namespace string) {
 
 		c.recorder.SetWeight(cd, primaryRoute.Weight, canaryRoute.Weight)
 		c.recordEventWarningf(cd, "Canary failed! Scaling down %s.%s",
-			cd.Spec.TargetRef.Name, cd.Namespace)
+			cd.Name, cd.Namespace)
 
 		// shutdown canary
 		if err := c.deployer.Scale(cd, 0); err != nil {

@@ -105,13 +125,12 @@ func (c *Controller) advanceCanary(name string, namespace string) {
 		}
 
 		// mark canary as failed
-		if err := c.deployer.SetState(cd, "failed"); err != nil {
+		if err := c.deployer.SyncStatus(cd, flaggerv1.CanaryStatus{State: flaggerv1.CanaryFailed}); err != nil {
 			c.logger.Errorf("%v", err)
 			return
 		}
+
 		c.recorder.SetStatus(cd)
-		c.sendNotification(cd.Spec.TargetRef.Name, cd.Namespace,
-			"Canary analysis failed, rollback finished.", true)
 		return
 	}

@@ -177,13 +196,13 @@ func (c *Controller) advanceCanary(name string, namespace string) {
 		}
 
 		// update status
-		if err := c.deployer.SetState(cd, "finished"); err != nil {
+		if err := c.deployer.SetState(cd, flaggerv1.CanaryFinished); err != nil {
 			c.recordEventWarningf(cd, "%v", err)
 			return
 		}
 		c.recorder.SetStatus(cd)
-		c.sendNotification(cd.Spec.TargetRef.Name, cd.Namespace,
-			"Canary analysis completed successfully, promotion finished.", false)
+		c.sendNotification(cd, "Canary analysis completed successfully, promotion finished.",
+			false, false)
 	}
 }

@@ -194,26 +213,26 @@ func (c *Controller) checkCanaryStatus(cd *flaggerv1.Canary, deployer CanaryDepl
 	}
 
 	if cd.Status.State == "" {
-		if err := deployer.SyncStatus(cd, flaggerv1.CanaryStatus{State: "initialized"}); err != nil {
+		if err := deployer.SyncStatus(cd, flaggerv1.CanaryStatus{State: flaggerv1.CanaryInitialized}); err != nil {
 			c.logger.Errorf("%v", err)
 			return false
 		}
 		c.recorder.SetStatus(cd)
 		c.recordEventInfof(cd, "Initialization done! %s.%s", cd.Name, cd.Namespace)
-		c.sendNotification(cd.Spec.TargetRef.Name, cd.Namespace,
-			"New deployment detected, initialization completed.", false)
+		c.sendNotification(cd, "New deployment detected, initialization completed.",
+			true, false)
 		return false
 	}
 
 	if diff, err := deployer.IsNewSpec(cd); diff {
 		c.recordEventInfof(cd, "New revision detected! Scaling up %s.%s", cd.Spec.TargetRef.Name, cd.Namespace)
-		c.sendNotification(cd.Spec.TargetRef.Name, cd.Namespace,
-			"New revision detected, starting canary analysis.", false)
+		c.sendNotification(cd, "New revision detected, starting canary analysis.",
+			true, false)
 		if err = deployer.Scale(cd, 1); err != nil {
 			c.recordEventErrorf(cd, "%v", err)
 			return false
 		}
-		if err := deployer.SyncStatus(cd, flaggerv1.CanaryStatus{State: "running"}); err != nil {
+		if err := deployer.SyncStatus(cd, flaggerv1.CanaryStatus{State: flaggerv1.CanaryRunning}); err != nil {
 			c.logger.Errorf("%v", err)
 			return false
 		}

@@ -6,6 +6,7 @@ import (
 	"time"
 
 	fakeIstio "github.com/knative/pkg/client/clientset/versioned/fake"
+	"github.com/stefanprodan/flagger/pkg/apis/flagger/v1alpha1"
 	fakeFlagger "github.com/stefanprodan/flagger/pkg/client/clientset/versioned/fake"
 	informers "github.com/stefanprodan/flagger/pkg/client/informers/externalversions"
 	"github.com/stefanprodan/flagger/pkg/logging"

@@ -142,3 +143,71 @@ func TestScheduler_NewRevision(t *testing.T) {
 		t.Errorf("Got canary replicas %v wanted %v", *c.Spec.Replicas, 1)
 	}
 }
+
+func TestScheduler_Rollback(t *testing.T) {
+	canary := newTestCanary()
+	dep := newTestDeployment()
+	hpa := newTestHPA()
+
+	flaggerClient := fakeFlagger.NewSimpleClientset(canary)
+	kubeClient := fake.NewSimpleClientset(dep, hpa)
+	istioClient := fakeIstio.NewSimpleClientset()
+
+	logger, _ := logging.NewLogger("debug")
+	deployer := CanaryDeployer{
+		flaggerClient: flaggerClient,
+		kubeClient:    kubeClient,
+		logger:        logger,
+	}
+	router := CanaryRouter{
+		flaggerClient: flaggerClient,
+		kubeClient:    kubeClient,
+		istioClient:   istioClient,
+		logger:        logger,
+	}
+	observer := CanaryObserver{
+		metricsServer: "fake",
+	}
+
+	flaggerInformerFactory := informers.NewSharedInformerFactory(flaggerClient, noResyncPeriodFunc())
+	flaggerInformer := flaggerInformerFactory.Flagger().V1alpha1().Canaries()
+
+	ctrl := &Controller{
+		kubeClient:    kubeClient,
+		istioClient:   istioClient,
+		flaggerClient: flaggerClient,
+		flaggerLister: flaggerInformer.Lister(),
+		flaggerSynced: flaggerInformer.Informer().HasSynced,
+		workqueue:     workqueue.NewNamedRateLimitingQueue(workqueue.DefaultControllerRateLimiter(), controllerAgentName),
+		eventRecorder: &record.FakeRecorder{},
+		logger:        logger,
+		canaries:      new(sync.Map),
+		flaggerWindow: time.Second,
+		deployer:      deployer,
+		router:        router,
+		observer:      observer,
+		recorder:      NewCanaryRecorder(false),
+	}
+	ctrl.flaggerSynced = alwaysReady
+
+	// init
+	ctrl.advanceCanary("podinfo", "default")
+
+	// update failed checks to max
+	err := deployer.SyncStatus(canary, v1alpha1.CanaryStatus{State: v1alpha1.CanaryRunning, FailedChecks: 11})
+	if err != nil {
+		t.Fatal(err.Error())
+	}
+
+	// detect changes
+	ctrl.advanceCanary("podinfo", "default")
+
+	c, err := flaggerClient.FlaggerV1alpha1().Canaries("default").Get("podinfo", metav1.GetOptions{})
+	if err != nil {
+		t.Fatal(err.Error())
+	}
+
+	if c.Status.State != v1alpha1.CanaryFailed {
+		t.Errorf("Got canary state %v wanted %v", c.Status.State, v1alpha1.CanaryFailed)
+	}
+}

@@ -30,10 +30,17 @@ type SlackPayload struct {
 
 // SlackAttachment holds the markdown message body
 type SlackAttachment struct {
-	Color      string   `json:"color"`
-	AuthorName string   `json:"author_name"`
-	Text       string   `json:"text"`
-	MrkdwnIn   []string `json:"mrkdwn_in"`
+	Color      string       `json:"color"`
+	AuthorName string       `json:"author_name"`
+	Text       string       `json:"text"`
+	MrkdwnIn   []string     `json:"mrkdwn_in"`
+	Fields     []SlackField `json:"fields"`
 }
+
+type SlackField struct {
+	Title string `json:"title"`
+	Value string `json:"value"`
+	Short bool   `json:"short"`
+}
 
 // NewSlack validates the Slack URL and returns a Slack object
@@ -60,7 +67,7 @@ func NewSlack(hookURL string, username string, channel string) (*Slack, error) {
 }
 
 // Post Slack message
-func (s *Slack) Post(workload string, namespace string, message string, warn bool) error {
+func (s *Slack) Post(workload string, namespace string, message string, fields []SlackField, warn bool) error {
 	payload := SlackPayload{
 		Channel:  s.Channel,
 		Username: s.Username,
@@ -76,6 +83,7 @@ func (s *Slack) Post(workload string, namespace string, message string, warn boo
 		AuthorName: fmt.Sprintf("%s.%s", workload, namespace),
 		Text:       message,
 		MrkdwnIn:   []string{"text"},
+		Fields:     fields,
 	}
 
 	payload.Attachments = []SlackAttachment{a}

@@ -1,4 +1,4 @@
 package version
 
-var VERSION = "0.1.0"
+var VERSION = "0.1.2"
 var REVISION = "unknown"
