Mirror of https://github.com/fluxcd/flagger.git, synced 2026-02-15 18:40:12 +00:00

Compare commits

18 Commits

| Author | SHA1 | Date | |
|---|---|---|---|
| | b8a7ea8534 | | |
| | afe4d59d5a | | |
| | 0f2697df23 | | |
| | 05664fa648 | | |
| | 3b2564f34b | | |
| | dd0cf2d588 | | |
| | 7c66f23c6a | | |
| | a9f034de1a | | |
| | 6ad2dca57a | | |
| | e8353c110b | | |
| | dbf26ddf53 | | |
| | acc72d207f | | |
| | a784f83464 | | |
| | 07d8355363 | | |
| | f7a439274e | | |
| | bd6d446cb8 | | |
| | 385d0e0549 | | |
| | 02236374d8 | | |

17

.github/main.workflow

vendored

Normal file

@@ -0,0 +1,17 @@

|

||||

workflow "Publish Helm charts" {

|

||||

on = "push"

|

||||

resolves = ["helm-push"]

|

||||

}

|

||||

|

||||

action "helm-lint" {

|

||||

uses = "stefanprodan/gh-actions/helm@master"

|

||||

args = ["lint charts/*"]

|

||||

}

|

||||

|

||||

action "helm-push" {

|

||||

needs = ["helm-lint"]

|

||||

uses = "stefanprodan/gh-actions/helm-gh-pages@master"

|

||||

args = ["charts/*","https://flagger.app"]

|

||||

secrets = ["GITHUB_TOKEN"]

|

||||

}

|

||||

|

||||

@@ -23,9 +23,10 @@ after_success:

|

||||

- if [ -z "$DOCKER_USER" ]; then

|

||||

echo "PR build, skipping image push";

|

||||

else

|

||||

docker tag stefanprodan/flagger:latest quay.io/stefanprodan/flagger:${TRAVIS_COMMIT};

|

||||

BRANCH_COMMIT=${TRAVIS_BRANCH}-$(echo ${TRAVIS_COMMIT} | head -c7);

|

||||

docker tag stefanprodan/flagger:latest quay.io/stefanprodan/flagger:${BRANCH_COMMIT};

|

||||

echo $DOCKER_PASS | docker login -u=$DOCKER_USER --password-stdin quay.io;

|

||||

docker push quay.io/stefanprodan/flagger:${TRAVIS_COMMIT};

|

||||

docker push quay.io/stefanprodan/flagger:${BRANCH_COMMIT};

|

||||

fi

|

||||

- if [ -z "$TRAVIS_TAG" ]; then

|

||||

echo "Not a release, skipping image push";

|

||||

|

||||

44

Dockerfile.loadtester

Normal file

@@ -0,0 +1,44 @@

|

||||

FROM golang:1.11 AS hey-builder

|

||||

|

||||

RUN mkdir -p /go/src/github.com/rakyll/hey/

|

||||

|

||||

WORKDIR /go/src/github.com/rakyll/hey

|

||||

|

||||

ADD https://github.com/rakyll/hey/archive/v0.1.1.tar.gz .

|

||||

|

||||

RUN tar xzf v0.1.1.tar.gz --strip 1

|

||||

|

||||

RUN go get ./...

|

||||

|

||||

RUN CGO_ENABLED=0 GOOS=linux GOARCH=amd64 \

|

||||

go install -ldflags '-w -extldflags "-static"' \

|

||||

/go/src/github.com/rakyll/hey

|

||||

|

||||

FROM golang:1.11 AS builder

|

||||

|

||||

RUN mkdir -p /go/src/github.com/stefanprodan/flagger/

|

||||

|

||||

WORKDIR /go/src/github.com/stefanprodan/flagger

|

||||

|

||||

COPY . .

|

||||

|

||||

RUN go test -race ./pkg/loadtester/

|

||||

|

||||

RUN CGO_ENABLED=0 GOOS=linux go build -a -installsuffix cgo -o loadtester ./cmd/loadtester/*

|

||||

|

||||

FROM alpine:3.8

|

||||

|

||||

RUN addgroup -S app \

|

||||

&& adduser -S -g app app \

|

||||

&& apk --no-cache add ca-certificates curl

|

||||

|

||||

WORKDIR /home/app

|

||||

|

||||

COPY --from=hey-builder /go/bin/hey /usr/local/bin/hey

|

||||

COPY --from=builder /go/src/github.com/stefanprodan/flagger/loadtester .

|

||||

|

||||

RUN chown -R app:app ./

|

||||

|

||||

USER app

|

||||

|

||||

ENTRYPOINT ["./loadtester"]

|

||||

8

Makefile

@@ -3,6 +3,8 @@ VERSION?=$(shell grep 'VERSION' pkg/version/version.go | awk '{ print $$4 }' | t

|

||||

VERSION_MINOR:=$(shell grep 'VERSION' pkg/version/version.go | awk '{ print $$4 }' | tr -d '"' | rev | cut -d'.' -f2- | rev)

|

||||

PATCH:=$(shell grep 'VERSION' pkg/version/version.go | awk '{ print $$4 }' | tr -d '"' | awk -F. '{print $$NF}')

|

||||

SOURCE_DIRS = cmd pkg/apis pkg/controller pkg/server pkg/logging pkg/version

|

||||

LT_VERSION?=$(shell grep 'VERSION' cmd/loadtester/main.go | awk '{ print $$4 }' | tr -d '"' | head -n1)

|

||||

|

||||

run:

|

||||

go run cmd/flagger/* -kubeconfig=$$HOME/.kube/config -log-level=info \

|

||||

-metrics-server=https://prometheus.iowa.weavedx.com \

|

||||

@@ -29,7 +31,7 @@ test: test-fmt test-codegen

|

||||

go test ./...

|

||||

|

||||

helm-package:

|

||||

cd charts/ && helm package flagger/ && helm package grafana/

|

||||

cd charts/ && helm package flagger/ && helm package grafana/ && helm package loadtester/

|

||||

mv charts/*.tgz docs/

|

||||

helm repo index docs --url https://stefanprodan.github.io/flagger --merge ./docs/index.yaml

|

||||

|

||||

@@ -77,3 +79,7 @@ reset-test:

|

||||

kubectl delete -f ./artifacts/namespaces

|

||||

kubectl apply -f ./artifacts/namespaces

|

||||

kubectl apply -f ./artifacts/canaries

|

||||

|

||||

loadtester-push:

|

||||

docker build -t quay.io/stefanprodan/flagger-loadtester:$(LT_VERSION) . -f Dockerfile.loadtester

|

||||

docker push quay.io/stefanprodan/flagger-loadtester:$(LT_VERSION)
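The `LT_VERSION` variable in the Makefile hunk above derives the image tag from `cmd/loadtester/main.go` with a `grep | awk | tr | head` pipeline. As a rough illustration of what that pipeline computes, here is a minimal Go sketch. The function name `firstVersion` and the sample source string are assumptions made for this example and are not part of the repository.

```go
package main

import (
	"fmt"
	"strings"
)

// firstVersion mimics: grep 'VERSION' | awk '{ print $4 }' | tr -d '"' | head -n1
// It is a hypothetical helper written for illustration only.
func firstVersion(src string) string {
	for _, line := range strings.Split(src, "\n") {
		// grep 'VERSION': case-sensitive substring match.
		if !strings.Contains(line, "VERSION") {
			continue
		}
		// awk '{ print $4 }': fourth whitespace-separated field, empty if missing.
		fields := strings.Fields(line)
		if len(fields) < 4 {
			return ""
		}
		// tr -d '"': drop every double quote; head -n1: stop at the first match.
		return strings.ReplaceAll(fields[3], `"`, "")
	}
	return ""
}

func main() {
	// Sample input modeled on the declaration in cmd/loadtester/main.go.
	src := "package main\n\nvar VERSION = \"0.1.0\"\n"
	fmt.Println(firstVersion(src))
}
```

Because only the first matching line is used, later lines that merely mention `VERSION` (such as a log statement) do not affect the tag.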

|

||||

18

README.md

@@ -127,12 +127,11 @@ spec:

|

||||

interval: 30s

|

||||

# external checks (optional)

|

||||

webhooks:

|

||||

- name: integration-tests

|

||||

url: http://podinfo.test:9898/echo

|

||||

timeout: 1m

|

||||

- name: load-test

|

||||

url: http://flagger-loadtester.test/

|

||||

timeout: 5s

|

||||

metadata:

|

||||

test: "all"

|

||||

token: "16688eb5e9f289f1991c"

|

||||

cmd: "hey -z 1m -q 10 -c 2 http://podinfo.test:9898/"

|

||||

```

|

||||

|

||||

The canary analysis is using the following promql queries:

|

||||

@@ -211,6 +210,13 @@ kubectl apply -f ${REPO}/artifacts/canaries/deployment.yaml

|

||||

kubectl apply -f ${REPO}/artifacts/canaries/hpa.yaml

|

||||

```

|

||||

|

||||

Deploy the load testing service to generate traffic during the canary analysis:

|

||||

|

||||

```bash

|

||||

kubectl -n test apply -f ${REPO}/artifacts/loadtester/deployment.yaml

|

||||

kubectl -n test apply -f ${REPO}/artifacts/loadtester/service.yaml

|

||||

```

|

||||

|

||||

Create a canary promotion custom resource (replace the Istio gateway and the internet domain with your own):

|

||||

|

||||

```bash

|

||||

@@ -239,7 +245,7 @@ Trigger a canary deployment by updating the container image:

|

||||

|

||||

```bash

|

||||

kubectl -n test set image deployment/podinfo \

|

||||

podinfod=quay.io/stefanprodan/podinfo:1.2.1

|

||||

podinfod=quay.io/stefanprodan/podinfo:1.4.0

|

||||

```

|

||||

|

||||

Flagger detects that the deployment revision changed and starts a new canary analysis:

|

||||

|

||||

@@ -51,9 +51,8 @@ spec:

|

||||

interval: 30s

|

||||

# external checks (optional)

|

||||

webhooks:

|

||||

- name: integration-tests

|

||||

url: https://httpbin.org/post

|

||||

timeout: 1m

|

||||

- name: load-test

|

||||

url: http://flagger-loadtester.test/

|

||||

timeout: 5s

|

||||

metadata:

|

||||

test: "all"

|

||||

token: "16688eb5e9f289f1991c"

|

||||

cmd: "hey -z 1m -q 10 -c 2 http://podinfo.test:9898/"

|

||||

|

||||

@@ -22,7 +22,7 @@ spec:

|

||||

serviceAccountName: flagger

|

||||

containers:

|

||||

- name: flagger

|

||||

image: quay.io/stefanprodan/flagger:0.4.0

|

||||

image: quay.io/stefanprodan/flagger:0.4.1

|

||||

imagePullPolicy: Always

|

||||

ports:

|

||||

- name: http

|

||||

|

||||

60

artifacts/loadtester/deployment.yaml

Normal file

@@ -0,0 +1,60 @@

|

||||

apiVersion: apps/v1

|

||||

kind: Deployment

|

||||

metadata:

|

||||

name: flagger-loadtester

|

||||

labels:

|

||||

app: flagger-loadtester

|

||||

spec:

|

||||

selector:

|

||||

matchLabels:

|

||||

app: flagger-loadtester

|

||||

template:

|

||||

metadata:

|

||||

labels:

|

||||

app: flagger-loadtester

|

||||

annotations:

|

||||

prometheus.io/scrape: "true"

|

||||

spec:

|

||||

containers:

|

||||

- name: loadtester

|

||||

image: quay.io/stefanprodan/flagger-loadtester:0.1.0

|

||||

imagePullPolicy: IfNotPresent

|

||||

ports:

|

||||

- name: http

|

||||

containerPort: 8080

|

||||

command:

|

||||

- ./loadtester

|

||||

- -port=8080

|

||||

- -log-level=info

|

||||

- -timeout=1h

|

||||

- -log-cmd-output=true

|

||||

livenessProbe:

|

||||

exec:

|

||||

command:

|

||||

- wget

|

||||

- --quiet

|

||||

- --tries=1

|

||||

- --timeout=4

|

||||

- --spider

|

||||

- http://localhost:8080/healthz

|

||||

timeoutSeconds: 5

|

||||

readinessProbe:

|

||||

exec:

|

||||

command:

|

||||

- wget

|

||||

- --quiet

|

||||

- --tries=1

|

||||

- --timeout=4

|

||||

- --spider

|

||||

- http://localhost:8080/healthz

|

||||

timeoutSeconds: 5

|

||||

resources:

|

||||

limits:

|

||||

memory: "512Mi"

|

||||

cpu: "1000m"

|

||||

requests:

|

||||

memory: "32Mi"

|

||||

cpu: "10m"

|

||||

securityContext:

|

||||

readOnlyRootFilesystem: true

|

||||

runAsUser: 10001

|

||||

15

artifacts/loadtester/service.yaml

Normal file

@@ -0,0 +1,15 @@

|

||||

apiVersion: v1

|

||||

kind: Service

|

||||

metadata:

|

||||

name: flagger-loadtester

|

||||

labels:

|

||||

app: flagger-loadtester

|

||||

spec:

|

||||

type: ClusterIP

|

||||

selector:

|

||||

app: flagger-loadtester

|

||||

ports:

|

||||

- name: http

|

||||

port: 80

|

||||

protocol: TCP

|

||||

targetPort: http

|

||||

@@ -1,7 +1,7 @@

|

||||

apiVersion: v1

|

||||

name: flagger

|

||||

version: 0.4.0

|

||||

appVersion: 0.4.0

|

||||

version: 0.4.1

|

||||

appVersion: 0.4.1

|

||||

kubeVersion: ">=1.11.0-0"

|

||||

engine: gotpl

|

||||

description: Flagger is a Kubernetes operator that automates the promotion of canary deployments using Istio routing for traffic shifting and Prometheus metrics for canary analysis.

|

||||

|

||||

@@ -2,7 +2,7 @@

|

||||

|

||||

image:

|

||||

repository: quay.io/stefanprodan/flagger

|

||||

tag: 0.4.0

|

||||

tag: 0.4.1

|

||||

pullPolicy: IfNotPresent

|

||||

|

||||

metricsServer: "http://prometheus.istio-system.svc.cluster.local:9090"

|

||||

|

||||

@@ -6,7 +6,7 @@ Grafana dashboards for monitoring progressive deployments powered by Istio, Prom

|

||||

|

||||

## Prerequisites

|

||||

|

||||

* Kubernetes >= 1.9

|

||||

* Kubernetes >= 1.11

|

||||

* Istio >= 1.0

|

||||

* Prometheus >= 2.6

|

||||

|

||||

@@ -75,5 +75,5 @@ helm install flagger/grafana --name flagger-grafana -f values.yaml

|

||||

```

|

||||

|

||||

> **Tip**: You can use the default [values.yaml](values.yaml)

|

||||

```

|

||||

|

||||

|

||||

|

||||

22

charts/loadtester/.helmignore

Normal file

@@ -0,0 +1,22 @@

|

||||

# Patterns to ignore when building packages.

|

||||

# This supports shell glob matching, relative path matching, and

|

||||

# negation (prefixed with !). Only one pattern per line.

|

||||

.DS_Store

|

||||

# Common VCS dirs

|

||||

.git/

|

||||

.gitignore

|

||||

.bzr/

|

||||

.bzrignore

|

||||

.hg/

|

||||

.hgignore

|

||||

.svn/

|

||||

# Common backup files

|

||||

*.swp

|

||||

*.bak

|

||||

*.tmp

|

||||

*~

|

||||

# Various IDEs

|

||||

.project

|

||||

.idea/

|

||||

*.tmproj

|

||||

.vscode/

|

||||

20

charts/loadtester/Chart.yaml

Normal file

@@ -0,0 +1,20 @@

|

||||

apiVersion: v1

|

||||

name: loadtester

|

||||

version: 0.1.0

|

||||

appVersion: 0.1.0

|

||||

kubeVersion: ">=1.11.0-0"

|

||||

engine: gotpl

|

||||

description: Flagger's load testing service, based on rakyll/hey, generates traffic during canary analysis when configured as a webhook.

|

||||

home: https://docs.flagger.app

|

||||

icon: https://raw.githubusercontent.com/stefanprodan/flagger/master/docs/logo/flagger-icon.png

|

||||

sources:

|

||||

- https://github.com/stefanprodan/flagger

|

||||

maintainers:

|

||||

- name: stefanprodan

|

||||

url: https://github.com/stefanprodan

|

||||

email: stefanprodan@users.noreply.github.com

|

||||

keywords:

|

||||

- canary

|

||||

- istio

|

||||

- gitops

|

||||

- load testing

|

||||

78

charts/loadtester/README.md

Normal file

@@ -0,0 +1,78 @@

|

||||

# Flagger load testing service

|

||||

|

||||

[Flagger's](https://github.com/stefanprodan/flagger) load testing service is based on

|

||||

[rakyll/hey](https://github.com/rakyll/hey)

|

||||

and can be used to generate traffic during canary analysis when configured as a webhook.

|

||||

|

||||

## Prerequisites

|

||||

|

||||

* Kubernetes >= 1.11

|

||||

* Istio >= 1.0

|

||||

|

||||

## Installing the Chart

|

||||

|

||||

Add Flagger Helm repository:

|

||||

|

||||

```console

|

||||

helm repo add flagger https://flagger.app

|

||||

```

|

||||

|

||||

To install the chart with the release name `flagger-loadtester`:

|

||||

|

||||

```console

|

||||

helm upgrade -i flagger-loadtester flagger/loadtester

|

||||

```

|

||||

|

||||

The command deploys the load tester on the Kubernetes cluster in the default namespace.

|

||||

|

||||

> **Tip**: The namespace where you deploy the load tester should have Istio sidecar injection enabled.

|

||||

|

||||

The [configuration](#configuration) section lists the parameters that can be configured during installation.

|

||||

|

||||

## Uninstalling the Chart

|

||||

|

||||

To uninstall/delete the `flagger-loadtester` deployment:

|

||||

|

||||

```console

|

||||

helm delete --purge flagger-loadtester

|

||||

```

|

||||

|

||||

The command removes all the Kubernetes components associated with the chart and deletes the release.

|

||||

|

||||

## Configuration

|

||||

|

||||

The following table lists the configurable parameters of the load tester chart and their default values.

|

||||

|

||||

Parameter | Description | Default

|

||||

--- | --- | ---

|

||||

`image.repository` | Image repository | `quay.io/stefanprodan/flagger-loadtester`

|

||||

`image.pullPolicy` | Image pull policy | `IfNotPresent`

|

||||

`image.tag` | Image tag | `<VERSION>`

|

||||

`replicaCount` | desired number of pods | `1`

|

||||

`resources.requests.cpu` | CPU requests | `10m`

|

||||

`resources.requests.memory` | memory requests | `64Mi`

|

||||

`tolerations` | List of node taints to tolerate | `[]`

|

||||

`affinity` | node/pod affinities | `{}`

|

||||

`nodeSelector` | node labels for pod assignment | `{}`

|

||||

`service.type` | type of service | `ClusterIP`

|

||||

`service.port` | ClusterIP port | `80`

|

||||

`cmd.logOutput` | Log the command output to stderr | `true`

|

||||

`cmd.timeout` | Command execution timeout | `1h`

|

||||

`logLevel` | Log level can be debug, info, warning, error or panic | `info`

|

||||

|

||||

Specify each parameter using the `--set key=value[,key=value]` argument to `helm install`. For example,

|

||||

|

||||

```console

|

||||

helm install flagger/loadtester --name flagger-loadtester \

|

||||

--set cmd.logOutput=false

|

||||

```

|

||||

|

||||

Alternatively, a YAML file that specifies the values for the above parameters can be provided while installing the chart. For example,

|

||||

|

||||

```console

|

||||

helm install flagger/loadtester --name flagger-loadtester -f values.yaml

|

||||

```

|

||||

|

||||

> **Tip**: You can use the default [values.yaml](values.yaml)

|

||||

|

||||

|

||||

1

charts/loadtester/templates/NOTES.txt

Normal file

@@ -0,0 +1 @@

|

||||

Flagger's load testing service is available at http://{{ include "loadtester.fullname" . }}.{{ .Release.Namespace }}/

|

||||

32

charts/loadtester/templates/_helpers.tpl

Normal file

@@ -0,0 +1,32 @@

|

||||

{{/* vim: set filetype=mustache: */}}

|

||||

{{/*

|

||||

Expand the name of the chart.

|

||||

*/}}

|

||||

{{- define "loadtester.name" -}}

|

||||

{{- default .Chart.Name .Values.nameOverride | trunc 63 | trimSuffix "-" -}}

|

||||

{{- end -}}

|

||||

|

||||

{{/*

|

||||

Create a default fully qualified app name.

|

||||

We truncate at 63 chars because some Kubernetes name fields are limited to this (by the DNS naming spec).

|

||||

If release name contains chart name it will be used as a full name.

|

||||

*/}}

|

||||

{{- define "loadtester.fullname" -}}

|

||||

{{- if .Values.fullnameOverride -}}

|

||||

{{- .Values.fullnameOverride | trunc 63 | trimSuffix "-" -}}

|

||||

{{- else -}}

|

||||

{{- $name := default .Chart.Name .Values.nameOverride -}}

|

||||

{{- if contains $name .Release.Name -}}

|

||||

{{- .Release.Name | trunc 63 | trimSuffix "-" -}}

|

||||

{{- else -}}

|

||||

{{- printf "%s-%s" .Release.Name $name | trunc 63 | trimSuffix "-" -}}

|

||||

{{- end -}}

|

||||

{{- end -}}

|

||||

{{- end -}}

|

||||

|

||||

{{/*

|

||||

Create chart name and version as used by the chart label.

|

||||

*/}}

|

||||

{{- define "loadtester.chart" -}}

|

||||

{{- printf "%s-%s" .Chart.Name .Chart.Version | replace "+" "_" | trunc 63 | trimSuffix "-" -}}

|

||||

{{- end -}}
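The branching in the `loadtester.fullname` helper above can be easier to follow outside of template syntax. The following Go sketch restates it under the assumption that `trunc 63` cuts the string to 63 bytes and `trimSuffix "-"` removes a single trailing dash. The function names `trunc63` and `fullname` are hypothetical and exist only for this illustration.

```go
package main

import (
	"fmt"
	"strings"
)

// trunc63 mirrors the template pipeline `trunc 63 | trimSuffix "-"`.
func trunc63(s string) string {
	if len(s) > 63 {
		s = s[:63]
	}
	return strings.TrimSuffix(s, "-")
}

// fullname follows the branches of the "loadtester.fullname" template:
// an explicit override wins, then a release name that already contains
// the chart name, otherwise "<release>-<name>".
func fullname(release, chart, nameOverride, fullnameOverride string) string {
	if fullnameOverride != "" {
		return trunc63(fullnameOverride)
	}
	name := chart
	if nameOverride != "" {
		name = nameOverride
	}
	if strings.Contains(release, name) {
		return trunc63(release)
	}
	return trunc63(release + "-" + name)
}

func main() {
	fmt.Println(fullname("flagger-loadtester", "loadtester", "", ""))
	fmt.Println(fullname("demo", "loadtester", "", ""))
}
```

With the release name `flagger-loadtester` used in the chart README, the helper returns the release name unchanged, which is why the service resolves as `flagger-loadtester.<namespace>`.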

|

||||

66

charts/loadtester/templates/deployment.yaml

Normal file

@@ -0,0 +1,66 @@

|

||||

apiVersion: apps/v1

|

||||

kind: Deployment

|

||||

metadata:

|

||||

name: {{ include "loadtester.fullname" . }}

|

||||

labels:

|

||||

app.kubernetes.io/name: {{ include "loadtester.name" . }}

|

||||

helm.sh/chart: {{ include "loadtester.chart" . }}

|

||||

app.kubernetes.io/instance: {{ .Release.Name }}

|

||||

app.kubernetes.io/managed-by: {{ .Release.Service }}

|

||||

spec:

|

||||

replicas: {{ .Values.replicaCount }}

|

||||

selector:

|

||||

matchLabels:

|

||||

app: {{ include "loadtester.name" . }}

|

||||

template:

|

||||

metadata:

|

||||

labels:

|

||||

app: {{ include "loadtester.name" . }}

|

||||

spec:

|

||||

containers:

|

||||

- name: {{ .Chart.Name }}

|

||||

image: "{{ .Values.image.repository }}:{{ .Values.image.tag }}"

|

||||

imagePullPolicy: {{ .Values.image.pullPolicy }}

|

||||

ports:

|

||||

- name: http

|

||||

containerPort: 8080

|

||||

command:

|

||||

- ./loadtester

|

||||

- -port=8080

|

||||

- -log-level={{ .Values.logLevel }}

|

||||

- -timeout={{ .Values.cmd.timeout }}

|

||||

- -log-cmd-output={{ .Values.cmd.logOutput }}

|

||||

livenessProbe:

|

||||

exec:

|

||||

command:

|

||||

- wget

|

||||

- --quiet

|

||||

- --tries=1

|

||||

- --timeout=4

|

||||

- --spider

|

||||

- http://localhost:8080/healthz

|

||||

timeoutSeconds: 5

|

||||

readinessProbe:

|

||||

exec:

|

||||

command:

|

||||

- wget

|

||||

- --quiet

|

||||

- --tries=1

|

||||

- --timeout=4

|

||||

- --spider

|

||||

- http://localhost:8080/healthz

|

||||

timeoutSeconds: 5

|

||||

resources:

|

||||

{{- toYaml .Values.resources | nindent 12 }}

|

||||

{{- with .Values.nodeSelector }}

|

||||

nodeSelector:

|

||||

{{- toYaml . | nindent 8 }}

|

||||

{{- end }}

|

||||

{{- with .Values.affinity }}

|

||||

affinity:

|

||||

{{- toYaml . | nindent 8 }}

|

||||

{{- end }}

|

||||

{{- with .Values.tolerations }}

|

||||

tolerations:

|

||||

{{- toYaml . | nindent 8 }}

|

||||

{{- end }}

|

||||

18

charts/loadtester/templates/service.yaml

Normal file

@@ -0,0 +1,18 @@

|

||||

apiVersion: v1

|

||||

kind: Service

|

||||

metadata:

|

||||

name: {{ include "loadtester.fullname" . }}

|

||||

labels:

|

||||

app.kubernetes.io/name: {{ include "loadtester.name" . }}

|

||||

helm.sh/chart: {{ include "loadtester.chart" . }}

|

||||

app.kubernetes.io/instance: {{ .Release.Name }}

|

||||

app.kubernetes.io/managed-by: {{ .Release.Service }}

|

||||

spec:

|

||||

type: {{ .Values.service.type }}

|

||||

ports:

|

||||

- port: {{ .Values.service.port }}

|

||||

targetPort: http

|

||||

protocol: TCP

|

||||

name: http

|

||||

selector:

|

||||

app: {{ include "loadtester.name" . }}

|

||||

29

charts/loadtester/values.yaml

Normal file

@@ -0,0 +1,29 @@

|

||||

replicaCount: 1

|

||||

|

||||

image:

|

||||

repository: quay.io/stefanprodan/flagger-loadtester

|

||||

tag: 0.1.0

|

||||

pullPolicy: IfNotPresent

|

||||

|

||||

logLevel: info

|

||||

cmd:

|

||||

logOutput: true

|

||||

timeout: 1h

|

||||

|

||||

nameOverride: ""

|

||||

fullnameOverride: ""

|

||||

|

||||

service:

|

||||

type: ClusterIP

|

||||

port: 80

|

||||

|

||||

resources:

|

||||

requests:

|

||||

cpu: 10m

|

||||

memory: 64Mi

|

||||

|

||||

nodeSelector: {}

|

||||

|

||||

tolerations: []

|

||||

|

||||

affinity: {}

|

||||

44

cmd/loadtester/main.go

Normal file

@@ -0,0 +1,44 @@

|

||||

package main

|

||||

|

||||

import (

|

||||

"flag"

|

||||

"github.com/knative/pkg/signals"

|

||||

"github.com/stefanprodan/flagger/pkg/loadtester"

|

||||

"github.com/stefanprodan/flagger/pkg/logging"

|

||||

"log"

|

||||

"time"

|

||||

)

|

||||

|

||||

var VERSION = "0.1.0"

|

||||

var (

|

||||

logLevel string

|

||||

port string

|

||||

timeout time.Duration

|

||||

logCmdOutput bool

|

||||

)

|

||||

|

||||

func init() {

|

||||

flag.StringVar(&logLevel, "log-level", "debug", "Log level can be: debug, info, warning, error.")

|

||||

flag.StringVar(&port, "port", "9090", "Port to listen on.")

|

||||

flag.DurationVar(&timeout, "timeout", time.Hour, "Command exec timeout.")

|

||||

flag.BoolVar(&logCmdOutput, "log-cmd-output", true, "Log command output to stderr")

|

||||

}

|

||||

|

||||

func main() {

|

||||

flag.Parse()

|

||||

|

||||

logger, err := logging.NewLogger(logLevel)

|

||||

if err != nil {

|

||||

log.Fatalf("Error creating logger: %v", err)

|

||||

}

|

||||

defer logger.Sync()

|

||||

|

||||

stopCh := signals.SetupSignalHandler()

|

||||

|

||||

taskRunner := loadtester.NewTaskRunner(logger, timeout, logCmdOutput)

|

||||

|

||||

go taskRunner.Start(100*time.Millisecond, stopCh)

|

||||

|

||||

logger.Infof("Starting load tester v%s API on port %s", VERSION, port)

|

||||

loadtester.ListenAndServe(port, time.Minute, logger, taskRunner, stopCh)

|

||||

}
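The flag defaults declared in `init()` above (`debug`, `9090`, one hour, `true`) differ from the arguments the deployment manifests pass (`-port=8080`, `-log-level=info`, and so on). The sketch below shows how those arguments override the defaults, using a local `flag.FlagSet` so it can be run without the rest of the package. The `config` struct and `parse` function are assumptions made for this example and do not appear in the repository.

```go
package main

import (
	"flag"
	"fmt"
	"time"
)

// config groups the four settings main.go keeps in package-level variables.
type config struct {
	logLevel     string
	port         string
	timeout      time.Duration
	logCmdOutput bool
}

// parse registers the same flag names and defaults as main.go and applies args.
func parse(args []string) (config, error) {
	var c config
	fs := flag.NewFlagSet("loadtester", flag.ContinueOnError)
	fs.StringVar(&c.logLevel, "log-level", "debug", "Log level can be: debug, info, warning, error.")
	fs.StringVar(&c.port, "port", "9090", "Port to listen on.")
	fs.DurationVar(&c.timeout, "timeout", time.Hour, "Command exec timeout.")
	fs.BoolVar(&c.logCmdOutput, "log-cmd-output", true, "Log command output to stderr")
	err := fs.Parse(args)
	return c, err
}

func main() {
	// Arguments copied from the container command in the deployment manifest.
	c, err := parse([]string{"-port=8080", "-log-level=info", "-timeout=1h", "-log-cmd-output=true"})
	if err != nil {
		fmt.Println("parse error:", err)
		return
	}
	fmt.Println(c.port, c.logLevel, c.timeout, c.logCmdOutput)
}
```

Running the binary without arguments would therefore listen on port 9090 at debug level, which is worth keeping in mind when the liveness and readiness probes target port 8080.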

|

||||

@@ -1 +0,0 @@

|

||||

flagger.app

|

||||

@@ -1,11 +0,0 @@

|

||||

# Flagger

|

||||

|

||||

Flagger is a Kubernetes operator that automates the promotion of canary deployments using Istio routing for traffic

|

||||

shifting and Prometheus metrics for canary analysis.

|

||||

|

||||

Flagger implements a control loop that gradually shifts traffic to the canary while measuring key performance

|

||||

indicators like HTTP requests success rate, requests average duration and pods health. Based on the KPIs analysis

|

||||

a canary is promoted or aborted and the analysis result is published to Slack.

|

||||

|

||||

### For the install instructions and usage examples please see [docs.flagger.app](https://docs.flagger.app)

|

||||

|

||||

@@ -1,55 +0,0 @@

|

||||

title: Flagger - Istio Progressive Delivery Kubernetes Operator

|

||||

|

||||

remote_theme: errordeveloper/simple-project-homepage

|

||||

repository: stefanprodan/flagger

|

||||

by_weaveworks: true

|

||||

|

||||

url: "https://flagger.app"

|

||||

baseurl: "/"

|

||||

|

||||

twitter:

|

||||

username: "stefanprodan"

|

||||

author:

|

||||

twitter: "stefanprodan"

|

||||

|

||||

# Set default og:image

|

||||

defaults:

|

||||

- scope: {path: ""}

|

||||

values: {image: "diagrams/flagger-overview.png"}

|

||||

|

||||

# See: https://material.io/guidelines/style/color.html

|

||||

# Use color-name-value, like pink-200 or deep-purple-100

|

||||

brand_color: "amber-400"

|

||||

|

||||

# How article URLs are structured.

|

||||

# See: https://jekyllrb.com/docs/permalinks/

|

||||

permalink: posts/:title/

|

||||

|

||||

# "UA-NNNNNNNN-N"

|

||||

google_analytics: ""

|

||||

|

||||

# Language. For example, if you write in Japanese, use "ja"

|

||||

lang: "en"

|

||||

|

||||

# How many posts are visible on the home page without clicking "View More"

|

||||

num_posts_visible_initially: 5

|

||||

|

||||

# Date format: See http://strftime.net/

|

||||

date_format: "%b %-d, %Y"

|

||||

|

||||

plugins:

|

||||

- jekyll-feed

|

||||

- jekyll-readme-index

|

||||

- jekyll-seo-tag

|

||||

- jekyll-sitemap

|

||||

- jemoji

|

||||

# # required for local builds with starefossen/github-pages

|

||||

# - jekyll-github-metadata

|

||||

# - jekyll-mentions

|

||||

# - jekyll-redirect-from

|

||||

# - jekyll-remote-theme

|

||||

|

||||

exclude:

|

||||

- CNAME

|

||||

- gitbook

|

||||

|

||||

BIN

docs/diagrams/flagger-load-testing.png

Normal file

Binary file not shown.

|

After Width: | Height: | Size: 159 KiB |

Binary file not shown.

Binary file not shown.

Binary file not shown.

Binary file not shown.

Binary file not shown.

Binary file not shown.

@@ -1,6 +1,8 @@

|

||||

# How it works

|

||||

|

||||

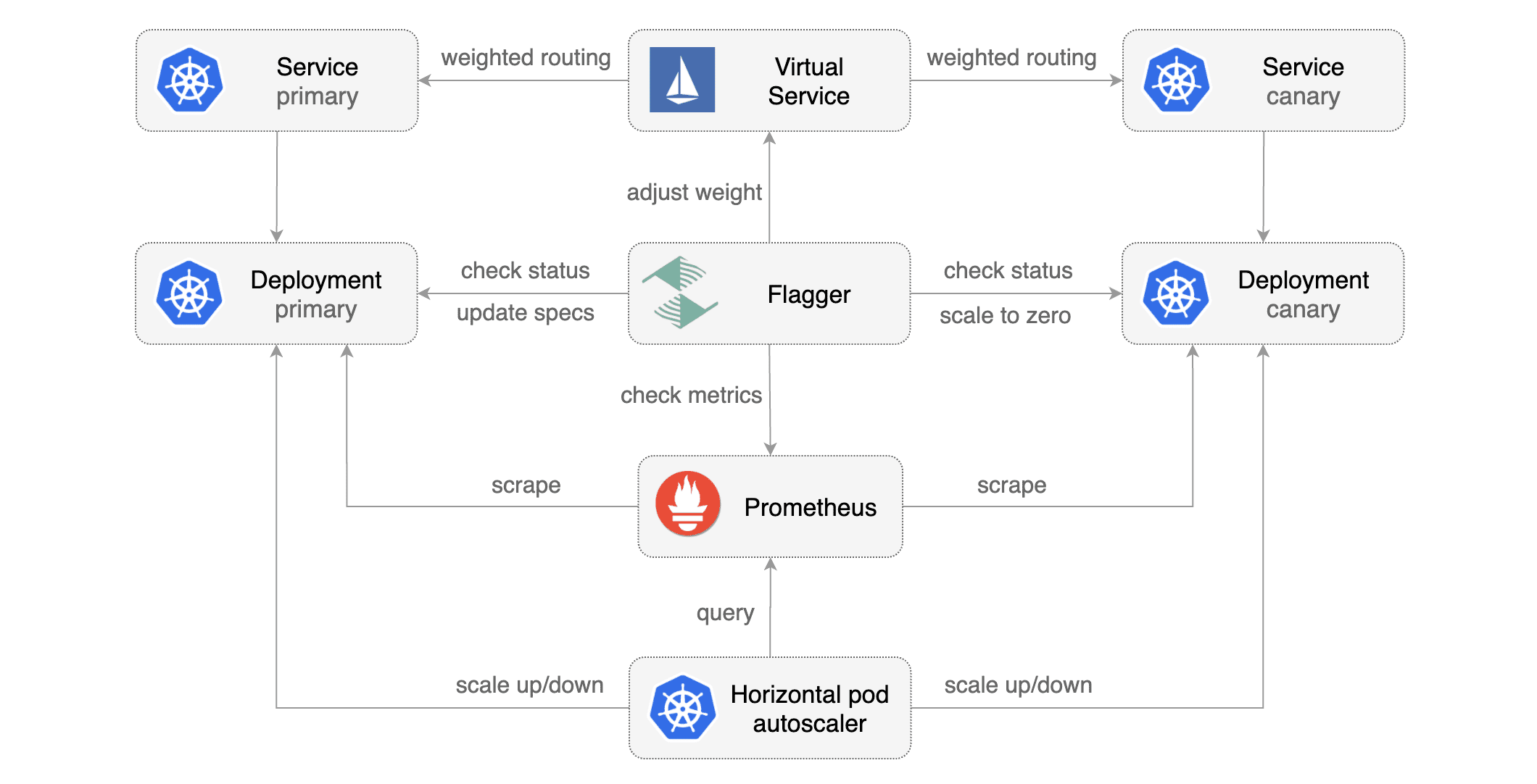

[Flagger](https://github.com/stefanprodan/flagger) takes a Kubernetes deployment and optionally a horizontal pod autoscaler \(HPA\) and creates a series of objects \(Kubernetes deployments, ClusterIP services and Istio virtual services\) to drive the canary analysis and promotion.

|

||||

[Flagger](https://github.com/stefanprodan/flagger) takes a Kubernetes deployment and optionally

|

||||

a horizontal pod autoscaler \(HPA\) and creates a series of objects

|

||||

\(Kubernetes deployments, ClusterIP services and Istio virtual services\) to drive the canary analysis and promotion.

|

||||

|

||||

|

||||

|

||||

@@ -112,10 +114,11 @@ Gated canary promotion stages:

|

||||

* scale to zero the canary deployment and mark it as failed

|

||||

* wait for the canary deployment to be updated \(revision bump\) and start over

|

||||

* increase canary traffic weight by 5% \(step weight\) till it reaches 50% \(max weight\)

|

||||

* halt advancement while canary request success rate is under the threshold

|

||||

* halt advancement while canary request duration P99 is over the threshold

|

||||

* halt advancement if the primary or canary deployment becomes unhealthy

|

||||

* halt advancement while canary deployment is being scaled up/down by HPA

|

||||

* halt advancement if any of the webhook calls are failing

|

||||

* halt advancement while canary request success rate is under the threshold

|

||||

* halt advancement while canary request duration P99 is over the threshold

|

||||

* promote canary to primary

|

||||

* copy canary deployment spec template over primary

|

||||

* wait for primary rolling update to finish

|

||||

@@ -281,4 +284,78 @@ Response status codes:

|

||||

|

||||

On a non-2xx response Flagger will include the response body (if any) in the failed checks log and Kubernetes events.

|

||||

|

||||

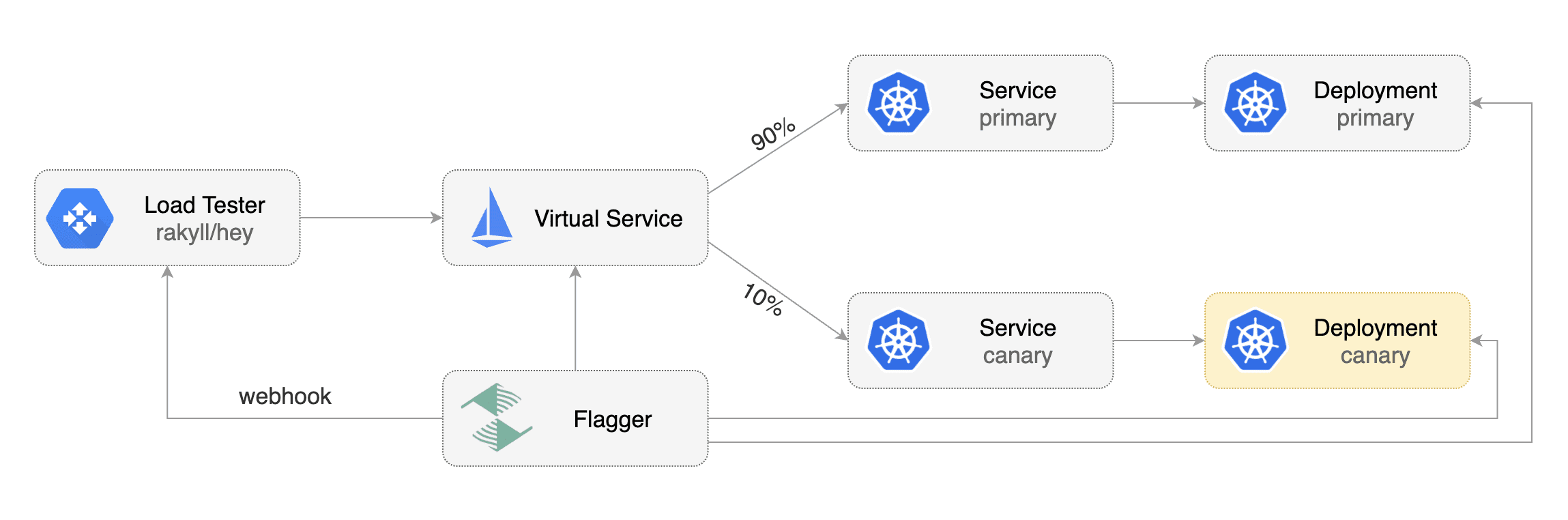

### Load Testing

|

||||

|

||||

For workloads that are not receiving constant traffic, Flagger can be configured with a webhook

|

||||

that, when called, starts a load test for the target workload.

|

||||

If the target workload doesn't receive any traffic during the canary analysis,

|

||||

Flagger metric checks will fail with "no values found for metric istio_requests_total".

|

||||

|

||||

Flagger comes with a load testing service based on [rakyll/hey](https://github.com/rakyll/hey)

|

||||

that generates traffic during analysis when configured as a webhook.

|

||||

|

||||

|

||||

|

||||

First you need to deploy the load test runner in a namespace with Istio sidecar injection enabled:

|

||||

|

||||

```bash

|

||||

export REPO=https://raw.githubusercontent.com/stefanprodan/flagger/master

|

||||

|

||||

kubectl -n test apply -f ${REPO}/artifacts/loadtester/deployment.yaml

|

||||

kubectl -n test apply -f ${REPO}/artifacts/loadtester/service.yaml

|

||||

```

|

||||

|

||||

Or by using Helm:

|

||||

|

||||

```bash

|

||||

helm repo add flagger https://flagger.app

|

||||

|

||||

helm upgrade -i flagger-loadtester flagger/loadtester \

|

||||

--namespace=test \

|

||||

--set cmd.logOutput=true \

|

||||

--set cmd.timeout=1h

|

||||

```

|

||||

|

||||

When deployed, the load tester API will be available at `http://flagger-loadtester.test/`.

|

||||

|

||||

Now you can add webhooks to the canary analysis spec:

|

||||

|

||||

```yaml

|

||||

webhooks:

|

||||

- name: load-test-get

|

||||

url: http://flagger-loadtester.test/

|

||||

timeout: 5s

|

||||

metadata:

|

||||

cmd: "hey -z 1m -q 10 -c 2 http://podinfo.test:9898/"

|

||||

- name: load-test-post

|

||||

url: http://flagger-loadtester.test/

|

||||

timeout: 5s

|

||||

metadata:

|

||||

cmd: "hey -z 1m -q 10 -c 2 -m POST -d '{test: 2}' http://podinfo.test:9898/echo"

|

||||

```

|

||||

|

||||

When the canary analysis starts, Flagger will call the webhooks and the load tester will run the `hey` commands

|

||||

in the background, if they are not already running. This will ensure that during the

|

||||

analysis, the `podinfo.test` virtual service will receive a steady stream of GET and POST requests.
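The phrase "if they are not already running" implies that repeated webhook calls with the same command do not start a second copy. One way to get that behavior is to track in-flight commands by their command string, as in the minimal Go sketch below. This is an assumption about how such deduplication could be done, not a description of the load tester's actual code, and the `runner` type with its `start` and `finish` methods is hypothetical.

```go
package main

import (
	"fmt"
	"sync"
)

// runner tracks in-flight commands so a repeated webhook call is a no-op.
type runner struct {
	mu      sync.Mutex
	running map[string]bool
}

func newRunner() *runner {
	return &runner{running: make(map[string]bool)}
}

// start records cmd as running and reports whether it was newly started.
func (r *runner) start(cmd string) bool {
	r.mu.Lock()
	defer r.mu.Unlock()
	if r.running[cmd] {
		return false
	}
	r.running[cmd] = true
	return true
}

// finish marks cmd as done so a later webhook call can start it again.
func (r *runner) finish(cmd string) {
	r.mu.Lock()
	defer r.mu.Unlock()
	delete(r.running, cmd)
}

func main() {
	r := newRunner()
	cmd := "hey -z 1m -q 10 -c 2 http://podinfo.test:9898/"
	fmt.Println(r.start(cmd)) // first webhook call
	fmt.Println(r.start(cmd)) // repeated call while the command is still running
	r.finish(cmd)
	fmt.Println(r.start(cmd)) // call after the command has finished
}
```

The sketch only models the bookkeeping and does not execute anything. Since Flagger calls the webhooks on every analysis interval (30s in the examples) while `hey -z 1m` runs for a minute, some form of deduplication like this keeps the generated load close to the configured rate.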

|

||||

|

||||

If your workload is exposed outside the mesh with the Istio Gateway and TLS you can point `hey` to the

|

||||

public URL and use HTTP2.

|

||||

|

||||

```yaml

|

||||

webhooks:

|

||||

- name: load-test-get

|

||||

url: http://flagger-loadtester.test/

|

||||

timeout: 5s

|

||||

metadata:

|

||||

cmd: "hey -z 1m -q 10 -c 2 -h2 https://podinfo.example.com/"

|

||||

```

|

||||

|

||||

The load tester can run arbitrary commands as long as the binary is present in the container image.

|

||||

For example, if you want to replace `hey` with another CLI, you can create your own Docker image:

|

||||

|

||||

```dockerfile

|

||||

FROM quay.io/stefanprodan/flagger-loadtester:<VER>

|

||||

|

||||

RUN curl -Lo /usr/local/bin/my-cli https://github.com/user/repo/releases/download/ver/my-cli \

|

||||

&& chmod +x /usr/local/bin/my-cli

|

||||

```

|

||||

|

||||

@@ -17,6 +17,13 @@ kubectl apply -f ${REPO}/artifacts/canaries/deployment.yaml

|

||||

kubectl apply -f ${REPO}/artifacts/canaries/hpa.yaml

|

||||

```

|

||||

|

||||

Deploy the load testing service to generate traffic during the canary analysis:

|

||||

|

||||

```bash

|

||||

kubectl -n test apply -f ${REPO}/artifacts/loadtester/deployment.yaml

|

||||

kubectl -n test apply -f ${REPO}/artifacts/loadtester/service.yaml

|

||||

```

|

||||

|

||||

Create a canary custom resource \(replace example.com with your own domain\):

|

||||

|

||||

```yaml

|

||||

@@ -70,6 +77,13 @@ spec:

|

||||

# milliseconds

|

||||

threshold: 500

|

||||

interval: 30s

|

||||

# generate traffic during analysis

|

||||

webhooks:

|

||||

- name: load-test

|

||||

url: http://flagger-loadtester.test/

|

||||

timeout: 5s

|

||||

metadata:

|

||||

cmd: "hey -z 1m -q 10 -c 2 http://podinfo.test:9898/"

|

||||

```

|

||||

|

||||

Save the above resource as podinfo-canary.yaml and then apply it:

|

||||

@@ -99,7 +113,7 @@ Trigger a canary deployment by updating the container image:

 ```bash
 kubectl -n test set image deployment/podinfo \
-podinfod=quay.io/stefanprodan/podinfo:1.2.1
+podinfod=quay.io/stefanprodan/podinfo:1.4.0
 ```

 Flagger detects that the deployment revision changed and starts a new rollout:

Binary file not shown.

146

docs/index.yaml


@@ -1,146 +0,0 @@

apiVersion: v1
entries:
  flagger:
  - apiVersion: v1
    appVersion: 0.4.0
    created: 2019-01-18T12:49:18.099861+02:00
    description: Flagger is a Kubernetes operator that automates the promotion of
      canary deployments using Istio routing for traffic shifting and Prometheus metrics
      for canary analysis.
    digest: fe06de1c68c6cc414440ef681cde67ae02c771de9b1e4d2d264c38a7a9c37b3d
    engine: gotpl
    home: https://docs.flagger.app
    icon: https://raw.githubusercontent.com/stefanprodan/flagger/master/docs/logo/flagger-icon.png
    keywords:
    - canary
    - istio
    - gitops
    kubeVersion: '>=1.11.0-0'
    maintainers:
    - email: stefanprodan@users.noreply.github.com
      name: stefanprodan
      url: https://github.com/stefanprodan
    name: flagger
    sources:
    - https://github.com/stefanprodan/flagger
    urls:
    - https://stefanprodan.github.io/flagger/flagger-0.4.0.tgz
    version: 0.4.0
  - apiVersion: v1
    appVersion: 0.3.0
    created: 2019-01-18T12:49:18.099501+02:00
    description: Flagger is a Kubernetes operator that automates the promotion of
      canary deployments using Istio routing for traffic shifting and Prometheus metrics
      for canary analysis.
    digest: 8baa478cc802f4e6b7593934483359b8f70ec34413ca3b8de3a692e347a9bda4
    engine: gotpl
    home: https://docs.flagger.app
    icon: https://raw.githubusercontent.com/stefanprodan/flagger/master/docs/logo/flagger-icon.png
    keywords:
    - canary
    - istio
    - gitops
    kubeVersion: '>=1.9.0-0'
    maintainers:
    - email: stefanprodan@users.noreply.github.com
      name: stefanprodan
      url: https://github.com/stefanprodan
    name: flagger
    sources:
    - https://github.com/stefanprodan/flagger
    urls:
    - https://stefanprodan.github.io/flagger/flagger-0.3.0.tgz
    version: 0.3.0
  - apiVersion: v1
    appVersion: 0.2.0
    created: 2019-01-18T12:49:18.099162+02:00
    description: Flagger is a Kubernetes operator that automates the promotion of
      canary deployments using Istio routing for traffic shifting and Prometheus metrics
      for canary analysis.
    digest: 800b5fd1a0b2854ee8412b3170c36ecda3d382f209e18b475ee1d5e3c7fa2f83
    engine: gotpl
    home: https://flagger.app
    icon: https://raw.githubusercontent.com/stefanprodan/flagger/master/docs/logo/flagger-icon.png
    keywords:
    - canary
    - istio
    - gitops
    kubeVersion: '>=1.9.0-0'
    maintainers:
    - email: stefanprodan@users.noreply.github.com
      name: stefanprodan
      url: https://github.com/stefanprodan
    name: flagger
    sources:
    - https://github.com/stefanprodan/flagger
    urls:
    - https://stefanprodan.github.io/flagger/flagger-0.2.0.tgz
    version: 0.2.0
  - apiVersion: v1
    appVersion: 0.1.2
    created: 2019-01-18T12:49:18.098811+02:00
    description: Flagger is a Kubernetes operator that automates the promotion of
      canary deployments using Istio routing for traffic shifting and Prometheus metrics
      for canary analysis.
    digest: 0029ef8dd20ebead3d84638eaa4b44d60b3e2bd953b4b7a1169963ce93a4e87c
    engine: gotpl
    home: https://flagger.app
    icon: https://raw.githubusercontent.com/stefanprodan/flagger/master/docs/logo/flagger-icon.png
    keywords:
    - canary
    - istio
    - gitops
    kubeVersion: '>=1.9.0-0'
    maintainers:
    - email: stefanprodan@users.noreply.github.com
      name: stefanprodan
      url: https://github.com/stefanprodan
    name: flagger
    sources:
    - https://github.com/stefanprodan/flagger
    urls:
    - https://stefanprodan.github.io/flagger/flagger-0.1.2.tgz
    version: 0.1.2
  - apiVersion: v1
    appVersion: 0.1.1
    created: 2019-01-18T12:49:18.098439+02:00
    description: Flagger is a Kubernetes operator that automates the promotion of
      canary deployments using Istio routing for traffic shifting and Prometheus metrics
      for canary analysis.
    digest: 2bb8f72fcf63a5ba5ecbaa2ab0d0446f438ec93fbf3a598cd7de45e64d8f9628
    home: https://github.com/stefanprodan/flagger
    name: flagger
    urls:
    - https://stefanprodan.github.io/flagger/flagger-0.1.1.tgz
    version: 0.1.1
  - apiVersion: v1
    appVersion: 0.1.0
    created: 2019-01-18T12:49:18.098153+02:00
    description: Flagger is a Kubernetes operator that automates the promotion of
      canary deployments using Istio routing for traffic shifting and Prometheus metrics
      for canary analysis.
    digest: 03e05634149e13ddfddae6757266d65c271878a026c21c7d1429c16712bf3845
    home: https://github.com/stefanprodan/flagger
    name: flagger
    urls:
    - https://stefanprodan.github.io/flagger/flagger-0.1.0.tgz
    version: 0.1.0
  grafana:
  - apiVersion: v1
    appVersion: 5.4.2
    created: 2019-01-18T12:49:18.100331+02:00
    description: Grafana dashboards for monitoring Flagger canary deployments
    digest: 97257d1742aca506f8703922d67863c459c1b43177870bc6050d453d19a683c0
    home: https://flagger.app
    icon: https://raw.githubusercontent.com/stefanprodan/flagger/master/docs/logo/flagger-icon.png
    maintainers:
    - email: stefanprodan@users.noreply.github.com
      name: stefanprodan
      url: https://github.com/stefanprodan
    name: grafana
    sources:
    - https://github.com/stefanprodan/flagger
    urls:
    - https://stefanprodan.github.io/flagger/grafana-0.1.0.tgz
    version: 0.1.0
generated: 2019-01-18T12:49:18.097682+02:00

@@ -134,9 +134,9 @@ type CanaryWebhook struct {

 // CanaryWebhookPayload holds the deployment info and metadata sent to webhooks
 type CanaryWebhookPayload struct {
-	Name      string             `json:"name"`
-	Namespace string             `json:"namespace"`
-	Metadata  *map[string]string `json:"metadata,omitempty"`
+	Name      string            `json:"name"`
+	Namespace string            `json:"namespace"`
+	Metadata  map[string]string `json:"metadata,omitempty"`
 }

 // GetProgressDeadlineSeconds returns the progress deadline (default 600s)
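The hunk above changes `Metadata` from `*map[string]string` to `map[string]string`. As a rough illustration of one practical difference (not necessarily the author's motivation), the sketch below compares how `encoding/json` applies `omitempty` to the two shapes. `pointerPayload`, `valuePayload` and `encode` are hypothetical names introduced only for this example.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// pointerPayload models the old field shape (pointer to a map).
type pointerPayload struct {
	Metadata *map[string]string `json:"metadata,omitempty"`
}

// valuePayload models the new field shape (plain map).
type valuePayload struct {
	Metadata map[string]string `json:"metadata,omitempty"`
}

// encode marshals v to a JSON string and panics on error to keep the sketch short.
func encode(v interface{}) string {
	b, err := json.Marshal(v)
	if err != nil {
		panic(err)
	}
	return string(b)
}

func main() {
	empty := map[string]string{}

	// A non-nil pointer is never "empty", so the field is kept.
	fmt.Println(encode(pointerPayload{Metadata: &empty})) // {"metadata":{}}

	// An empty (or nil) map is treated as empty and omitted.
	fmt.Println(encode(valuePayload{Metadata: empty})) // {}

	// A nil map can be read without a nil check; a nil *map cannot be dereferenced.
	fmt.Println(len(valuePayload{}.Metadata)) // 0
}
```

With the plain map, readers such as the load tester handler can call `len` or index the field directly, without first checking a pointer.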

@@ -234,13 +234,9 @@ func (in *CanaryWebhookPayload) DeepCopyInto(out *CanaryWebhookPayload) {

 	*out = *in
 	if in.Metadata != nil {
 		in, out := &in.Metadata, &out.Metadata
-		*out = new(map[string]string)
-		if **in != nil {
-			in, out := *in, *out
-			*out = make(map[string]string, len(*in))
-			for key, val := range *in {
-				(*out)[key] = val
-			}
+		*out = make(map[string]string, len(*in))
+		for key, val := range *in {
+			(*out)[key] = val
 		}
 	}
 	return

@@ -2,6 +2,7 @@ package controller

 import (
 	"fmt"
+	"strings"
 	"time"

 	flaggerv1 "github.com/stefanprodan/flagger/pkg/apis/flagger/v1alpha3"

@@ -336,12 +337,27 @@ func (c *Controller) hasCanaryRevisionChanged(cd *flaggerv1.Canary) bool {

 }

 func (c *Controller) analyseCanary(r *flaggerv1.Canary) bool {
+	// run external checks
+	for _, webhook := range r.Spec.CanaryAnalysis.Webhooks {
+		err := CallWebhook(r.Name, r.Namespace, webhook)
+		if err != nil {
+			c.recordEventWarningf(r, "Halt %s.%s advancement external check %s failed %v",
+				r.Name, r.Namespace, webhook.Name, err)
+			return false
+		}
+	}
+
 	// run metrics checks
 	for _, metric := range r.Spec.CanaryAnalysis.Metrics {
 		if metric.Name == "istio_requests_total" {
 			val, err := c.observer.GetDeploymentCounter(r.Spec.TargetRef.Name, r.Namespace, metric.Name, metric.Interval)
 			if err != nil {
-				c.recordEventErrorf(r, "Metrics server %s query failed: %v", c.observer.metricsServer, err)
+				if strings.Contains(err.Error(), "no values found") {
+					c.recordEventWarningf(r, "Halt advancement no values found for metric %s probably %s.%s is not receiving traffic",
+						metric.Name, r.Spec.TargetRef.Name, r.Namespace)
+				} else {
+					c.recordEventErrorf(r, "Metrics server %s query failed: %v", c.observer.metricsServer, err)
+				}
 				return false
 			}
 			if float64(metric.Threshold) > val {

@@ -366,15 +382,5 @@ func (c *Controller) analyseCanary(r *flaggerv1.Canary) bool {

 		}
 	}

-	// run external checks
-	for _, webhook := range r.Spec.CanaryAnalysis.Webhooks {
-		err := CallWebhook(r.Name, r.Namespace, webhook)
-		if err != nil {
-			c.recordEventWarningf(r, "Halt %s.%s advancement external check %s failed %v",
-				r.Name, r.Namespace, webhook.Name, err)
-			return false
-		}
-	}
-
 	return true
 }
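Taken together, the two hunks above move the webhook loop from the end of `analyseCanary` to the start, so external checks run before the metric checks and the first failure halts the analysis. A minimal sketch of that control flow follows. It assumes nothing about Flagger's internals: `check`, `analyse`, `pass` and `fail` are hypothetical stand-ins, and the metric name `request-duration` is made up for the example.

```go
package main

import (
	"errors"
	"fmt"
)

// check is a hypothetical stand-in for a webhook call or a metric query.
type check struct {
	name string
	run  func() error
}

// pass and fail are trivial check bodies used for illustration.
func pass() error { return nil }
func fail() error { return errors.New("check failed") }

// analyse runs the webhook checks first, then the metric checks, and stops
// at the first failure. It returns the names of the checks that were
// executed and whether the canary may advance.
func analyse(webhooks, metrics []check) ([]string, bool) {
	var executed []string
	for _, group := range [][]check{webhooks, metrics} {
		for _, c := range group {
			executed = append(executed, c.name)
			if err := c.run(); err != nil {
				return executed, false
			}
		}
	}
	return executed, true
}

func main() {
	ran, advance := analyse(
		[]check{{"load-test", pass}},
		[]check{{"istio_requests_total", fail}, {"request-duration", pass}},
	)
	fmt.Println(ran, advance) // [load-test istio_requests_total] false
}
```

One consequence of this ordering is that the load-test webhook is called on every analysis run, even when the metrics check that follows reports that no values were found yet.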

@@ -19,7 +19,10 @@ func CallWebhook(name string, namepace string, w flaggerv1.CanaryWebhook) error

 	payload := flaggerv1.CanaryWebhookPayload{
 		Name:      name,
 		Namespace: namepace,
-		Metadata:  w.Metadata,
 	}

+	if w.Metadata != nil {
+		payload.Metadata = *w.Metadata
+	}
+
 	payloadBin, err := json.Marshal(payload)

107

pkg/loadtester/runner.go

Normal file


@@ -0,0 +1,107 @@

package loadtester

import (
	"context"
	"encoding/hex"
	"fmt"
	"go.uber.org/zap"
	"hash/fnv"
	"os/exec"
	"sync"
	"sync/atomic"
	"time"
)

// TaskRunner executes queued shell commands on a fixed interval
type TaskRunner struct {
	logger       *zap.SugaredLogger
	timeout      time.Duration
	todoTasks    *sync.Map
	runningTasks *sync.Map
	totalExecs   uint64
	logCmdOutput bool
}

// Task is a shell command to run on behalf of a canary
type Task struct {
	Canary  string
	Command string
}

// Hash returns the hex-encoded FNV-1 digest of the canary name and command
func (t Task) Hash() string {
	fnvHash := fnv.New32()
	// Write feeds the input to the hash; Sum(nil) then returns the 4-byte digest
	fnvHash.Write([]byte(t.Canary + t.Command))
	return hex.EncodeToString(fnvHash.Sum(nil))
}
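
`Task.Hash` relies on `hash/fnv`, and one detail of the `hash.Hash` interface is easy to trip over: `Sum(b)` appends the digest of the data written so far to `b`, it does not hash `b`. The sketch below contrasts the two call patterns. `sumOnly` and `writeThenSum` are hypothetical helper names, and the input string is an assumed example value.

```go
package main

import (
	"encoding/hex"
	"fmt"
	"hash/fnv"
)

// sumOnly passes the input to Sum. Nothing has been written to the hash,
// so the result is the input bytes followed by the FNV-1 offset basis.
func sumOnly(s string) string {
	h := fnv.New32()
	return hex.EncodeToString(h.Sum([]byte(s)))
}

// writeThenSum feeds the input to the hash and then reads the 4-byte digest.
func writeThenSum(s string) string {
	h := fnv.New32()
	h.Write([]byte(s)) // hash.Hash documents that Write never returns an error
	return hex.EncodeToString(h.Sum(nil))
}

func main() {
	in := "podinfo.test" + "sleep 1" // canary name plus command, as an assumed example
	fmt.Println(sumOnly(in))
	fmt.Println(writeThenSum(in))
}
```

Both variants yield a key that is stable for a given task, so either can serve as a `sync.Map` key; only the second one is a fixed-length digest.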

func NewTaskRunner(logger *zap.SugaredLogger, timeout time.Duration, logCmdOutput bool) *TaskRunner {
	return &TaskRunner{
		logger:       logger,
		todoTasks:    new(sync.Map),
		runningTasks: new(sync.Map),
		timeout:      timeout,
		logCmdOutput: logCmdOutput,
	}
}

func (tr *TaskRunner) Add(task Task) {
	tr.todoTasks.Store(task.Hash(), task)
}

func (tr *TaskRunner) GetTotalExecs() uint64 {
	return atomic.LoadUint64(&tr.totalExecs)
}

func (tr *TaskRunner) runAll() {
	tr.todoTasks.Range(func(key interface{}, value interface{}) bool {
		task := value.(Task)
		go func(t Task) {
			// remove task from the to do list
			tr.todoTasks.Delete(t.Hash())

			// check if task is already running, if not run the task's command
			if _, exists := tr.runningTasks.Load(t.Hash()); !exists {
				// save the task in the running list
				tr.runningTasks.Store(t.Hash(), t)

				// create timeout context
				ctx, cancel := context.WithTimeout(context.Background(), tr.timeout)
				defer cancel()

				// increment the total exec counter
				atomic.AddUint64(&tr.totalExecs, 1)

				tr.logger.With("canary", t.Canary).Infof("command starting %s", t.Command)
				cmd := exec.CommandContext(ctx, "sh", "-c", t.Command)

				// execute task
				out, err := cmd.CombinedOutput()
				if err != nil {
					tr.logger.With("canary", t.Canary).Errorf("command failed %s %v %s", t.Command, err, out)
				} else {
					if tr.logCmdOutput {
						fmt.Printf("%s\n", out)
					}
					tr.logger.With("canary", t.Canary).Infof("command finished %s", t.Command)
				}

				// remove task from the running list
				tr.runningTasks.Delete(t.Hash())
			} else {
				tr.logger.With("canary", t.Canary).Infof("command skipped %s is already running", t.Command)
			}
		}(task)
		return true
	})
}
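
`runAll` checks `runningTasks` with `Load` and then records the task with `Store`. Those are two separate steps, so two goroutines handling the same hash could, in principle, both pass the check. One way to make the check-and-set atomic is `sync.Map.LoadOrStore`. The sketch below shows only that idea in isolation; `newRunningSet`, `tryStart` and `finish` are hypothetical helpers, not part of the load tester.

```go
package main

import (
	"fmt"
	"sync"
)

// newRunningSet returns an empty set of running task keys.
func newRunningSet() *sync.Map {
	return new(sync.Map)
}

// tryStart marks key as running and reports whether the caller is the one
// that should run it. LoadOrStore does the lookup and the store atomically.
func tryStart(running *sync.Map, key string) bool {
	_, alreadyRunning := running.LoadOrStore(key, struct{}{})
	return !alreadyRunning
}

// finish removes key from the running set so the task can be started again.
func finish(running *sync.Map, key string) {
	running.Delete(key)
}

func main() {
	running := newRunningSet()
	fmt.Println(tryStart(running, "task-1")) // true
	fmt.Println(tryStart(running, "task-1")) // false
	finish(running, "task-1")
	fmt.Println(tryStart(running, "task-1")) // true
}
```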

func (tr *TaskRunner) Start(interval time.Duration, stopCh <-chan struct{}) {
	tickChan := time.NewTicker(interval).C
	for {
		select {
		case <-tickChan:
			tr.runAll()
		case <-stopCh:
			tr.logger.Info("shutting down the task runner")
			return
		}
	}
}
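`Start` is a ticker loop that exits when the stop channel is closed. The sketch below shows the same loop shape in isolation, with one variation: it keeps the `*time.Ticker` so that it can call `Stop` when the loop returns. `runEvery` and `countTicks` are hypothetical names, and the intervals are arbitrary example values.

```go
package main

import (
	"fmt"
	"sync/atomic"
	"time"
)

// runEvery calls fn on every tick until stopCh is closed.
func runEvery(interval time.Duration, stopCh <-chan struct{}, fn func()) {
	ticker := time.NewTicker(interval)
	defer ticker.Stop() // release the ticker when the loop exits
	for {
		select {
		case <-ticker.C:
			fn()
		case <-stopCh:
			return
		}
	}
}

// countTicks runs the loop for about runMs milliseconds with a tick every
// intervalMs milliseconds and returns how many times fn was called.
func countTicks(intervalMs, runMs int) uint64 {
	var ticks uint64
	stop := make(chan struct{})
	done := make(chan struct{})
	go func() {
		defer close(done)
		runEvery(time.Duration(intervalMs)*time.Millisecond, stop, func() {
			atomic.AddUint64(&ticks, 1)
		})
	}()
	time.Sleep(time.Duration(runMs) * time.Millisecond)
	close(stop)
	<-done // wait for the loop to exit before reading the counter
	return atomic.LoadUint64(&ticks)
}

func main() {
	fmt.Println("ticks:", countTicks(10, 100))
}
```

The exact tick count depends on scheduling, so the example prints it rather than asserting a value.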

52

pkg/loadtester/runner_test.go

Normal file


@@ -0,0 +1,52 @@

package loadtester

import (
	"github.com/stefanprodan/flagger/pkg/logging"
	"testing"
	"time"
)

func TestTaskRunner_Start(t *testing.T) {
	stop := make(chan struct{})
	logger, _ := logging.NewLogger("debug")
	tr := NewTaskRunner(logger, time.Hour, false)

	go tr.Start(10*time.Millisecond, stop)

	task1 := Task{
		Canary:  "podinfo.default",
		Command: "sleep 0.6",
	}
	task2 := Task{
		Canary:  "podinfo.default",
		Command: "sleep 0.7",
	}

	tr.Add(task1)
	tr.Add(task2)

	time.Sleep(100 * time.Millisecond)

	tr.Add(task1)
	tr.Add(task2)

	time.Sleep(100 * time.Millisecond)

	tr.Add(task1)
	tr.Add(task2)

	if tr.GetTotalExecs() != 2 {
		t.Errorf("Got total executed commands %v wanted %v", tr.GetTotalExecs(), 2)
	}

	time.Sleep(time.Second)

	tr.Add(task1)
	tr.Add(task2)

	time.Sleep(time.Second)

	if tr.GetTotalExecs() != 4 {
		t.Errorf("Got total executed commands %v wanted %v", tr.GetTotalExecs(), 4)
	}
}

85

pkg/loadtester/server.go

Normal file


@@ -0,0 +1,85 @@

package loadtester

import (
	"context"
	"encoding/json"
	"fmt"
	"io/ioutil"
	"net/http"
	"time"

	"github.com/prometheus/client_golang/prometheus/promhttp"
	flaggerv1 "github.com/stefanprodan/flagger/pkg/apis/flagger/v1alpha3"
	"go.uber.org/zap"
)

// ListenAndServe starts a web server and waits for SIGTERM
func ListenAndServe(port string, timeout time.Duration, logger *zap.SugaredLogger, taskRunner *TaskRunner, stopCh <-chan struct{}) {
	mux := http.DefaultServeMux
	mux.Handle("/metrics", promhttp.Handler())
	mux.HandleFunc("/healthz", func(w http.ResponseWriter, r *http.Request) {
		w.WriteHeader(http.StatusOK)
		w.Write([]byte("OK"))
	})
	mux.HandleFunc("/", func(w http.ResponseWriter, r *http.Request) {
		body, err := ioutil.ReadAll(r.Body)
		if err != nil {
			// the sugared logger formats arguments itself, so pass the error directly
			logger.Errorf("reading the request body failed %v", err)
			w.WriteHeader(http.StatusBadRequest)
			return
		}
		defer r.Body.Close()

		payload := &flaggerv1.CanaryWebhookPayload{}
		err = json.Unmarshal(body, payload)
		if err != nil {
			logger.Errorf("decoding the request body failed %v", err)
			w.WriteHeader(http.StatusBadRequest)
			return
		}

		if len(payload.Metadata) > 0 {
			if cmd, ok := payload.Metadata["cmd"]; ok {
				taskRunner.Add(Task{
					Canary:  fmt.Sprintf("%s.%s", payload.Name, payload.Namespace),
					Command: cmd,
				})
			} else {
				w.WriteHeader(http.StatusBadRequest)
				w.Write([]byte("cmd not found in metadata"))
				return
			}
		} else {
			w.WriteHeader(http.StatusBadRequest)
			w.Write([]byte("metadata not found in payload"))
			return
		}

		w.WriteHeader(http.StatusAccepted)
	})
	srv := &http.Server{
		Addr:         ":" + port,
		Handler:      mux,
		ReadTimeout:  5 * time.Second,
		WriteTimeout: 1 * time.Minute,
		IdleTimeout:  15 * time.Second,
	}

	// run server in background
	go func() {
		if err := srv.ListenAndServe(); err != http.ErrServerClosed {
			logger.Fatalf("HTTP server crashed %v", err)
		}
	}()

	// wait for SIGTERM or SIGINT
	<-stopCh
	ctx, cancel := context.WithTimeout(context.Background(), timeout)
	defer cancel()

	if err := srv.Shutdown(ctx); err != nil {
		logger.Errorf("HTTP server graceful shutdown failed %v", err)
	} else {
		logger.Info("HTTP server stopped")
	}
}
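The root handler in `server.go` answers 202 when the payload carries a `cmd` entry and 400 otherwise. The sketch below reproduces that contract with `net/http/httptest` so it can be exercised without starting a server. It is a simplification under stated assumptions: `payload`, `newHandler` and `post` are hypothetical names, the handler takes an `enqueue` callback in place of the `TaskRunner`, and the two 400 branches of the original are merged into one.

```go
package main

import (
	"encoding/json"
	"fmt"
	"net/http"
	"net/http/httptest"
	"strings"
)

// payload is a local stand-in for CanaryWebhookPayload.
type payload struct {
	Name      string            `json:"name"`
	Namespace string            `json:"namespace"`
	Metadata  map[string]string `json:"metadata,omitempty"`
}

// newHandler returns a handler that passes metadata["cmd"] to enqueue and
// replies 202, or replies 400 when the body is invalid or cmd is missing.
func newHandler(enqueue func(canary, cmd string)) http.HandlerFunc {
	return func(w http.ResponseWriter, r *http.Request) {
		defer r.Body.Close()
		p := payload{}
		if err := json.NewDecoder(r.Body).Decode(&p); err != nil {
			w.WriteHeader(http.StatusBadRequest)
			return
		}
		cmd, ok := p.Metadata["cmd"] // reading from a nil map is safe
		if !ok {
			w.WriteHeader(http.StatusBadRequest)
			w.Write([]byte("cmd not found in metadata"))
			return
		}
		enqueue(fmt.Sprintf("%s.%s", p.Name, p.Namespace), cmd)
		w.WriteHeader(http.StatusAccepted)
	}
}

// post sends body to the handler in-process and returns the status code.
func post(h http.Handler, body string) int {
	rec := httptest.NewRecorder()
	h.ServeHTTP(rec, httptest.NewRequest(http.MethodPost, "/", strings.NewReader(body)))
	return rec.Code
}

func main() {
	var queued []string
	h := newHandler(func(canary, cmd string) { queued = append(queued, canary+": "+cmd) })

	fmt.Println(post(h, `{"name":"podinfo","namespace":"test","metadata":{"cmd":"sleep 1"}}`)) // 202
	fmt.Println(post(h, `{"name":"podinfo","namespace":"test"}`))                               // 400
	fmt.Println(queued)                                                                         // [podinfo.test: sleep 1]
}
```

Replying 202 rather than 200 matches the asynchronous design: the command is only queued at that point and runs on the task runner's next tick.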

@@ -1,4 +1,4 @@

 package version

-var VERSION = "0.4.0"
+var VERSION = "0.4.1"
 var REVISION = "unknown"
