mirror of

https://github.com/fluxcd/flagger.git

synced 2026-02-19 20:40:05 +00:00

Compare commits

20 Commits

| Author | SHA1 | Date | |
|---|---|---|---|
| | 83985ae482 | | |
| | 3adfcc837e | | |
| | c720fee3ab | | |
| | 881387e522 | | |
| | d9f3378e29 | | |
| | ba87620225 | | |
| | 1cd0c49872 | | |
| | 12ac96deeb | | |
| | bd115633a3 | | |
| | 86ea172380 | | |
| | d87bbbbc1e | | |
| | 6196f69f4d | | |
| | d8b847a973 | | |
| | e80a3d3232 | | |
| | 780ba82385 | | |
| | 6ba69dce0a | | |
| | 3c7a561db8 | | |
| | 49c942bea0 | | |
| | bf1ca293dc | | |
| | 62b906d30b | | |

13

CHANGELOG.md

@@ -2,6 +2,19 @@

|

||||

|

||||

All notable changes to this project are documented in this file.

|

||||

|

||||

## 0.9.0 (2019-03-11)

|

||||

|

||||

Allows A/B testing scenarios where instead of weighted routing, the traffic is split between the

|

||||

primary and canary based on HTTP headers or cookies.

|

||||

|

||||

#### Features

|

||||

|

||||

- A/B testing - canary with session affinity [#88](https://github.com/stefanprodan/flagger/pull/88)

|

||||

|

||||

#### Fixes

|

||||

|

||||

- Update the analysis interval when the custom resource changes [#91](https://github.com/stefanprodan/flagger/pull/91)

|

||||

|

||||

## 0.8.0 (2019-03-06)

|

||||

|

||||

Adds support for CORS policy and HTTP request headers manipulation

|

||||

|

||||

@@ -35,6 +35,7 @@ Flagger documentation can be found at [docs.flagger.app](https://docs.flagger.ap

|

||||

* [Load testing](https://docs.flagger.app/how-it-works#load-testing)

|

||||

* Usage

|

||||

* [Canary promotions and rollbacks](https://docs.flagger.app/usage/progressive-delivery)

|

||||

* [A/B testing](https://docs.flagger.app/usage/ab-testing)

|

||||

* [Monitoring](https://docs.flagger.app/usage/monitoring)

|

||||

* [Alerting](https://docs.flagger.app/usage/alerting)

|

||||

* Tutorials

|

||||

@@ -167,7 +168,6 @@ For more details on how the canary analysis and promotion works please [read the

|

||||

|

||||

### Roadmap

|

||||

|

||||

* Add A/B testing capabilities using fixed routing based on HTTP headers and cookies match conditions

|

||||

* Integrate with other service mesh technologies like AWS AppMesh and Linkerd v2

|

||||

* Add support for comparing the canary metrics to the primary ones and do the validation based on the deviation between the two

|

||||

|

||||

|

||||

61

artifacts/ab-testing/canary.yaml

Normal file

@@ -0,0 +1,61 @@

|

||||

apiVersion: flagger.app/v1alpha3

|

||||

kind: Canary

|

||||

metadata:

|

||||

name: abtest

|

||||

namespace: test

|

||||

spec:

|

||||

# deployment reference

|

||||

targetRef:

|

||||

apiVersion: apps/v1

|

||||

kind: Deployment

|

||||

name: abtest

|

||||

# the maximum time in seconds for the canary deployment

|

||||

# to make progress before it is rolled back (default 600s)

|

||||

progressDeadlineSeconds: 60

|

||||

# HPA reference (optional)

|

||||

autoscalerRef:

|

||||

apiVersion: autoscaling/v2beta1

|

||||

kind: HorizontalPodAutoscaler

|

||||

name: abtest

|

||||

service:

|

||||

# container port

|

||||

port: 9898

|

||||

# Istio gateways (optional)

|

||||

gateways:

|

||||

- public-gateway.istio-system.svc.cluster.local

|

||||

# Istio virtual service host names (optional)

|

||||

hosts:

|

||||

- abtest.istio.weavedx.com

|

||||

canaryAnalysis:

|

||||

# schedule interval (default 60s)

|

||||

interval: 10s

|

||||

# max number of failed metric checks before rollback

|

||||

threshold: 10

|

||||

# total number of iterations

|

||||

iterations: 10

|

||||

# canary match condition

|

||||

match:

|

||||

- headers:

|

||||

user-agent:

|

||||

regex: '^(?!.*Chrome)(?=.*\bSafari\b).*$'

|

||||

- headers:

|

||||

cookie:

|

||||

regex: "^(.*?;)?(type=insider)(;.*)?$"

|

||||

metrics:

|

||||

- name: istio_requests_total

|

||||

# minimum req success rate (non 5xx responses)

|

||||

# percentage (0-100)

|

||||

threshold: 99

|

||||

interval: 1m

|

||||

- name: istio_request_duration_seconds_bucket

|

||||

# maximum req duration P99

|

||||

# milliseconds

|

||||

threshold: 500

|

||||

interval: 30s

|

||||

# external checks (optional)

|

||||

webhooks:

|

||||

- name: load-test

|

||||

url: http://flagger-loadtester.test/

|

||||

timeout: 5s

|

||||

metadata:

|

||||

cmd: "hey -z 1m -q 10 -c 2 -H 'Cookie: type=insider' http://abtest.test:9898/"

|

||||

67

artifacts/ab-testing/deployment.yaml

Normal file

@@ -0,0 +1,67 @@

|

||||

apiVersion: apps/v1

|

||||

kind: Deployment

|

||||

metadata:

|

||||

name: abtest

|

||||

namespace: test

|

||||

labels:

|

||||

app: abtest

|

||||

spec:

|

||||

minReadySeconds: 5

|

||||

revisionHistoryLimit: 5

|

||||

progressDeadlineSeconds: 60

|

||||

strategy:

|

||||

rollingUpdate:

|

||||

maxUnavailable: 0

|

||||

type: RollingUpdate

|

||||

selector:

|

||||

matchLabels:

|

||||

app: abtest

|

||||

template:

|

||||

metadata:

|

||||

annotations:

|

||||

prometheus.io/scrape: "true"

|

||||

labels:

|

||||

app: abtest

|

||||

spec:

|

||||

containers:

|

||||

- name: podinfod

|

||||

image: quay.io/stefanprodan/podinfo:1.4.0

|

||||

imagePullPolicy: IfNotPresent

|

||||

ports:

|

||||

- containerPort: 9898

|

||||

name: http

|

||||

protocol: TCP

|

||||

command:

|

||||

- ./podinfo

|

||||

- --port=9898

|

||||

- --level=info

|

||||

- --random-delay=false

|

||||

- --random-error=false

|

||||

env:

|

||||

- name: PODINFO_UI_COLOR

|

||||

value: blue

|

||||

livenessProbe:

|

||||

exec:

|

||||

command:

|

||||

- podcli

|

||||

- check

|

||||

- http

|

||||

- localhost:9898/healthz

|

||||

initialDelaySeconds: 5

|

||||

timeoutSeconds: 5

|

||||

readinessProbe:

|

||||

exec:

|

||||

command:

|

||||

- podcli

|

||||

- check

|

||||

- http

|

||||

- localhost:9898/readyz

|

||||

initialDelaySeconds: 5

|

||||

timeoutSeconds: 5

|

||||

resources:

|

||||

limits:

|

||||

cpu: 2000m

|

||||

memory: 512Mi

|

||||

requests:

|

||||

cpu: 100m

|

||||

memory: 64Mi

|

||||

19

artifacts/ab-testing/hpa.yaml

Normal file

@@ -0,0 +1,19 @@

|

||||

apiVersion: autoscaling/v2beta1

|

||||

kind: HorizontalPodAutoscaler

|

||||

metadata:

|

||||

name: abtest

|

||||

namespace: test

|

||||

spec:

|

||||

scaleTargetRef:

|

||||

apiVersion: apps/v1

|

||||

kind: Deployment

|

||||

name: abtest

|

||||

minReplicas: 2

|

||||

maxReplicas: 4

|

||||

metrics:

|

||||

- type: Resource

|

||||

resource:

|

||||

name: cpu

|

||||

# scale up if usage is above

|

||||

# 99% of the requested CPU (100m)

|

||||

targetAverageUtilization: 99

|

||||

@@ -82,6 +82,8 @@ spec:

|

||||

interval:

|

||||

type: string

|

||||

pattern: "^[0-9]+(m|s)"

|

||||

iterations:

|

||||

type: number

|

||||

threshold:

|

||||

type: number

|

||||

maxWeight:

|

||||

|

||||

@@ -22,7 +22,7 @@ spec:

|

||||

serviceAccountName: flagger

|

||||

containers:

|

||||

- name: flagger

|

||||

image: quay.io/stefanprodan/flagger:0.8.0

|

||||

image: quay.io/stefanprodan/flagger:0.9.0

|

||||

imagePullPolicy: IfNotPresent

|

||||

ports:

|

||||

- name: http

|

||||

|

||||

@@ -1,7 +1,7 @@

|

||||

apiVersion: v1

|

||||

name: flagger

|

||||

version: 0.8.0

|

||||

appVersion: 0.8.0

|

||||

version: 0.9.0

|

||||

appVersion: 0.9.0

|

||||

kubeVersion: ">=1.11.0-0"

|

||||

engine: gotpl

|

||||

description: Flagger is a Kubernetes operator that automates the promotion of canary deployments using Istio routing for traffic shifting and Prometheus metrics for canary analysis.

|

||||

|

||||

@@ -83,6 +83,8 @@ spec:

|

||||

interval:

|

||||

type: string

|

||||

pattern: "^[0-9]+(m|s)"

|

||||

iterations:

|

||||

type: number

|

||||

threshold:

|

||||

type: number

|

||||

maxWeight:

|

||||

|

||||

@@ -2,7 +2,7 @@

|

||||

|

||||

image:

|

||||

repository: quay.io/stefanprodan/flagger

|

||||

tag: 0.8.0

|

||||

tag: 0.9.0

|

||||

pullPolicy: IfNotPresent

|

||||

|

||||

metricsServer: "http://prometheus.istio-system.svc.cluster.local:9090"

|

||||

|

||||

@@ -87,12 +87,6 @@ func main() {

|

||||

logger.Fatalf("Error building example clientset: %s", err.Error())

|

||||

}

|

||||

|

||||

if namespace == "" {

|

||||

logger.Infof("Flagger Canary's Watcher is on all namespace")

|

||||

} else {

|

||||

logger.Infof("Flagger Canary's Watcher is on namespace %s", namespace)

|

||||

}

|

||||

|

||||

flaggerInformerFactory := informers.NewSharedInformerFactoryWithOptions(flaggerClient, time.Second*30, informers.WithNamespace(namespace))

|

||||

|

||||

canaryInformer := flaggerInformerFactory.Flagger().V1alpha3().Canaries()

|

||||

@@ -105,6 +99,9 @@ func main() {

|

||||

}

|

||||

|

||||

logger.Infof("Connected to Kubernetes API %s", ver)

|

||||

if namespace != "" {

|

||||

logger.Infof("Watching namespace %s", namespace)

|

||||

}

|

||||

|

||||

ok, err := controller.CheckMetricsServer(metricsServer)

|

||||

if ok {

|

||||

|

||||

BIN

docs/diagrams/flagger-abtest-steps.png

Normal file

Binary file not shown.

|

After Width: | Height: | Size: 158 KiB |

@@ -11,6 +11,7 @@

|

||||

## Usage

|

||||

|

||||

* [Canary Deployments](usage/progressive-delivery.md)

|

||||

* [A/B Testing](usage/ab-testing.md)

|

||||

* [Monitoring](usage/monitoring.md)

|

||||

* [Alerting](usage/alerting.md)

|

||||

|

||||

|

||||

@@ -327,6 +327,49 @@ At any time you can set the `spec.skipAnalysis: true`.

|

||||

When skip analysis is enabled, Flagger checks if the canary deployment is healthy and

|

||||

promotes it without analysing it. If an analysis is underway, Flagger cancels it and runs the promotion.

|

||||

|

||||

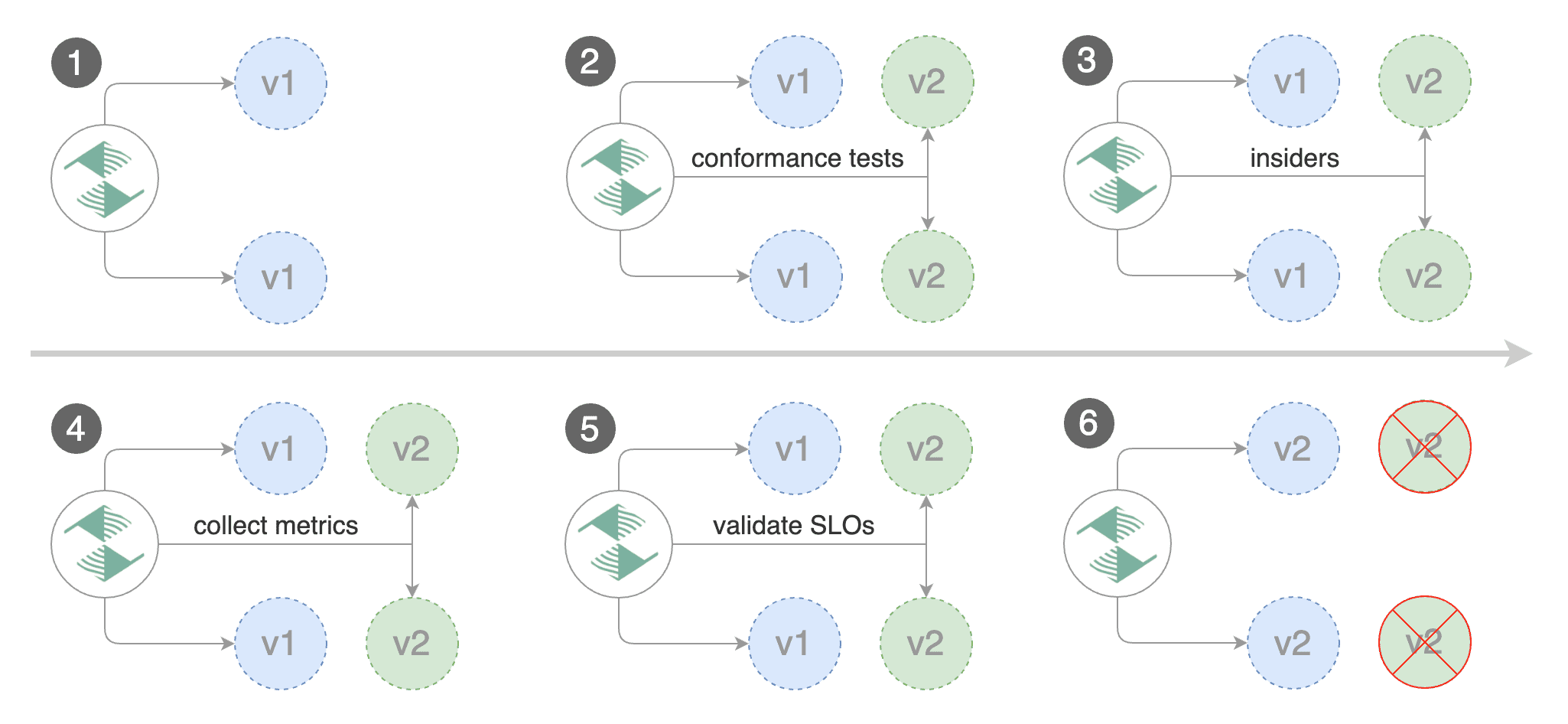

### A/B Testing

|

||||

|

||||

Besides weighted routing, Flagger can be configured to route traffic to the canary based on HTTP match conditions.

|

||||

In an A/B testing scenario, you'll be using HTTP headers or cookies to target a certain segment of your users.

|

||||

This is particularly useful for frontend applications that require session affinity.

|

||||

|

||||

You can enable A/B testing by specifying the HTTP match conditions and the number of iterations:

|

||||

|

||||

```yaml

|

||||

canaryAnalysis:

|

||||

# schedule interval (default 60s)

|

||||

interval: 1m

|

||||

# total number of iterations

|

||||

iterations: 10

|

||||

# max number of failed iterations before rollback

|

||||

threshold: 2

|

||||

# canary match condition

|

||||

match:

|

||||

- headers:

|

||||

user-agent:

|

||||

regex: "^(?!.*Chrome).*Safari.*"

|

||||

- headers:

|

||||

cookie:

|

||||

regex: "^(.*?;)?(user=test)(;.*)?$"

|

||||

```

|

||||

|

||||

If Flagger finds an HTTP match condition, it will ignore the `maxWeight` and `stepWeight` settings.

|

||||

|

||||

The above configuration will run an analysis for ten minutes targeting the Safari users and those that have a test cookie.

|

||||

You can determine the minimum time that it takes to validate and promote a canary deployment using this formula:

|

||||

|

||||

```

|

||||

interval * iterations

|

||||

```

|

||||

|

||||

And the time it takes for a canary to be rolled back when the metrics or webhook checks are failing:

|

||||

|

||||

```

|

||||

interval * threshold

|

||||

```

|

||||

|

||||

Make sure that the analysis threshold is lower than the number of iterations.

|

||||

|

||||

### HTTP Metrics

|

||||

|

||||

The canary analysis is using the following Prometheus queries:

|

||||

|

||||

207

docs/gitbook/usage/ab-testing.md

Normal file

@@ -0,0 +1,207 @@

|

||||

# A/B Testing

|

||||

|

||||

This guide shows you how to automate A/B testing with Flagger.

|

||||

|

||||

Besides weighted routing, Flagger can be configured to route traffic to the canary based on HTTP match conditions.

|

||||

In an A/B testing scenario, you'll be using HTTP headers or cookies to target a certain segment of your users.

|

||||

This is particularly useful for frontend applications that require session affinity.

|

||||

|

||||

|

||||

|

||||

Create a test namespace with Istio sidecar injection enabled:

|

||||

|

||||

```bash

|

||||

export REPO=https://raw.githubusercontent.com/stefanprodan/flagger/master

|

||||

|

||||

kubectl apply -f ${REPO}/artifacts/namespaces/test.yaml

|

||||

```

|

||||

|

||||

Create a deployment and a horizontal pod autoscaler:

|

||||

|

||||

```bash

|

||||

kubectl apply -f ${REPO}/artifacts/ab-testing/deployment.yaml

|

||||

kubectl apply -f ${REPO}/artifacts/ab-testing/hpa.yaml

|

||||

```

|

||||

|

||||

Deploy the load testing service to generate traffic during the canary analysis:

|

||||

|

||||

```bash

|

||||

kubectl -n test apply -f ${REPO}/artifacts/loadtester/deployment.yaml

|

||||

kubectl -n test apply -f ${REPO}/artifacts/loadtester/service.yaml

|

||||

```

|

||||

|

||||

Create a canary custom resource (replace example.com with your own domain):

|

||||

|

||||

```yaml

|

||||

apiVersion: flagger.app/v1alpha3

|

||||

kind: Canary

|

||||

metadata:

|

||||

name: abtest

|

||||

namespace: test

|

||||

spec:

|

||||

# deployment reference

|

||||

targetRef:

|

||||

apiVersion: apps/v1

|

||||

kind: Deployment

|

||||

name: abtest

|

||||

# the maximum time in seconds for the canary deployment

|

||||

# to make progress before it is rolled back (default 600s)

|

||||

progressDeadlineSeconds: 60

|

||||

# HPA reference (optional)

|

||||

autoscalerRef:

|

||||

apiVersion: autoscaling/v2beta1

|

||||

kind: HorizontalPodAutoscaler

|

||||

name: abtest

|

||||

service:

|

||||

# container port

|

||||

port: 9898

|

||||

# Istio gateways (optional)

|

||||

gateways:

|

||||

- public-gateway.istio-system.svc.cluster.local

|

||||

# Istio virtual service host names (optional)

|

||||

hosts:

|

||||

- app.example.com

|

||||

canaryAnalysis:

|

||||

# schedule interval (default 60s)

|

||||

interval: 1m

|

||||

# total number of iterations

|

||||

iterations: 10

|

||||

# max number of failed iterations before rollback

|

||||

threshold: 2

|

||||

# canary match condition

|

||||

match:

|

||||

- headers:

|

||||

user-agent:

|

||||

regex: "^(?!.*Chrome).*Safari.*"

|

||||

- headers:

|

||||

cookie:

|

||||

regex: "^(.*?;)?(type=insider)(;.*)?$"

|

||||

metrics:

|

||||

- name: istio_requests_total

|

||||

# minimum req success rate (non 5xx responses)

|

||||

# percentage (0-100)

|

||||

threshold: 99

|

||||

interval: 1m

|

||||

- name: istio_request_duration_seconds_bucket

|

||||

# maximum req duration P99

|

||||

# milliseconds

|

||||

threshold: 500

|

||||

interval: 30s

|

||||

# generate traffic during analysis

|

||||

webhooks:

|

||||

- name: load-test

|

||||

url: http://flagger-loadtester.test/

|

||||

timeout: 5s

|

||||

metadata:

|

||||

cmd: "hey -z 1m -q 10 -c 2 -H 'Cookie: type=insider' http://abtest.test:9898/"

|

||||

```

|

||||

|

||||

The above configuration will run an analysis for ten minutes targeting Safari users and those that have an insider cookie.
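
To see which requests the cookie condition above selects, the pattern can be tried out locally. The sketch below uses Go's `regexp` package purely for illustration: in a cluster the match is evaluated by the Istio proxy, not by Go, and the helper name `matchesInsider` is hypothetical. The user-agent pattern is left out because it relies on a negative lookahead, which Go's RE2-based engine does not support.

```go
package main

import (
	"fmt"
	"regexp"
)

// insiderCookie is the cookie pattern from the Canary spec above.
var insiderCookie = regexp.MustCompile(`^(.*?;)?(type=insider)(;.*)?$`)

// matchesInsider reports whether a raw Cookie header value matches the
// pattern. Hypothetical helper used only for this illustration.
func matchesInsider(cookieHeader string) bool {
	return insiderCookie.MatchString(cookieHeader)
}

func main() {
	for _, header := range []string{
		"type=insider",         // what `curl -b 'type=insider'` sends
		"a=b;type=insider;c=d", // cookie in the middle, no spaces
		"a=b; type=insider",    // space after the semicolon
		"type=insiders",        // different value
	} {
		fmt.Printf("%q -> %v\n", header, matchesInsider(header))
	}
}
```

Under Go's semantics the third value does not match, because the pattern expects `type=insider` to follow the semicolon directly. If the clients you target send other cookies before this one, separated by `; `, the pattern may need to allow optional whitespace.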

|

||||

|

||||

Save the above resource as podinfo-abtest.yaml and then apply it:

|

||||

|

||||

```bash

|

||||

kubectl apply -f ./podinfo-abtest.yaml

|

||||

```

|

||||

|

||||

After a couple of seconds Flagger will create the canary objects:

|

||||

|

||||

```bash

|

||||

# applied

|

||||

deployment.apps/abtest

|

||||

horizontalpodautoscaler.autoscaling/abtest

|

||||

canary.flagger.app/abtest

|

||||

|

||||

# generated

|

||||

deployment.apps/abtest-primary

|

||||

horizontalpodautoscaler.autoscaling/abtest-primary

|

||||

service/abtest

|

||||

service/abtest-canary

|

||||

service/abtest-primary

|

||||

virtualservice.networking.istio.io/abtest

|

||||

```

|

||||

|

||||

Trigger a canary deployment by updating the container image:

|

||||

|

||||

```bash

|

||||

kubectl -n test set image deployment/abtest \

|

||||

podinfod=quay.io/stefanprodan/podinfo:1.4.1

|

||||

```

|

||||

|

||||

Flagger detects that the deployment revision changed and starts a new rollout:

|

||||

|

||||

```text

|

||||

kubectl -n test describe canary/abtest

|

||||

|

||||

Status:

|

||||

Failed Checks: 0

|

||||

Phase: Succeeded

|

||||

Events:

|

||||

Type Reason Age From Message

|

||||

---- ------ ---- ---- -------

|

||||

Normal Synced 3m flagger New revision detected abtest.test

|

||||

Normal Synced 3m flagger Scaling up abtest.test

|

||||

Warning Synced 3m flagger Waiting for abtest.test rollout to finish: 0 of 1 updated replicas are available

|

||||

Normal Synced 3m flagger Advance abtest.test canary iteration 1/10

|

||||

Normal Synced 3m flagger Advance abtest.test canary iteration 2/10

|

||||

Normal Synced 3m flagger Advance abtest.test canary iteration 3/10

|

||||

Normal Synced 2m flagger Advance abtest.test canary iteration 4/10

|

||||

Normal Synced 2m flagger Advance abtest.test canary iteration 5/10

|

||||

Normal Synced 1m flagger Advance abtest.test canary iteration 6/10

|

||||

Normal Synced 1m flagger Advance abtest.test canary iteration 7/10

|

||||

Normal Synced 55s flagger Advance abtest.test canary iteration 8/10

|

||||

Normal Synced 45s flagger Advance abtest.test canary iteration 9/10

|

||||

Normal Synced 35s flagger Advance abtest.test canary iteration 10/10

|

||||

Normal Synced 25s flagger Copying abtest.test template spec to abtest-primary.test

|

||||

Warning Synced 15s flagger Waiting for abtest-primary.test rollout to finish: 1 of 2 updated replicas are available

|

||||

Normal Synced 5s flagger Promotion completed! Scaling down abtest.test

|

||||

```

|

||||

|

||||

**Note** that if you apply new changes to the deployment during the canary analysis, Flagger will restart the analysis.

|

||||

|

||||

You can monitor all canaries with:

|

||||

|

||||

```bash

|

||||

watch kubectl get canaries --all-namespaces

|

||||

|

||||

NAMESPACE NAME STATUS WEIGHT LASTTRANSITIONTIME

|

||||

test abtest Progressing 100 2019-03-16T14:05:07Z

|

||||

prod frontend Succeeded 0 2019-03-15T16:15:07Z

|

||||

prod backend Failed 0 2019-03-14T17:05:07Z

|

||||

```

|

||||

|

||||

During the canary analysis you can generate HTTP 500 errors and high latency to test Flagger's rollback.

|

||||

|

||||

Generate HTTP 500 errors:

|

||||

|

||||

```bash

|

||||

watch curl -b 'type=insider' http://app.example.com/status/500

|

||||

```

|

||||

|

||||

Generate latency:

|

||||

|

||||

```bash

|

||||

watch curl -b 'type=insider' http://app.example.com/delay/1

|

||||

```

|

||||

|

||||

When the number of failed checks reaches the canary analysis threshold, the traffic is routed back to the primary,

|

||||

the canary is scaled to zero and the rollout is marked as failed.

|

||||

|

||||

```text

|

||||

kubectl -n test describe canary/abtest

|

||||

|

||||

Status:

|

||||

Failed Checks: 2

|

||||

Phase: Failed

|

||||

Events:

|

||||

Type Reason Age From Message

|

||||

---- ------ ---- ---- -------

|

||||

Normal Synced 3m flagger Starting canary deployment for abtest.test

|

||||

Normal Synced 3m flagger Advance abtest.test canary iteration 1/10

|

||||

Normal Synced 3m flagger Advance abtest.test canary iteration 2/10

|

||||

Normal Synced 3m flagger Advance abtest.test canary iteration 3/10

|

||||

Normal Synced 3m flagger Halt abtest.test advancement success rate 69.17% < 99%

|

||||

Normal Synced 2m flagger Halt abtest.test advancement success rate 61.39% < 99%

|

||||

Warning Synced 2m flagger Rolling back abtest.test failed checks threshold reached 2

|

||||

Warning Synced 1m flagger Canary failed! Scaling down abtest.test

|

||||

```
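
The rollback rule described above (stop as soon as the number of failed checks reaches the threshold, promote once all iterations have passed) can be modelled in a few lines of Go. This is a simplified sketch under stated assumptions, not Flagger's controller logic: `simulateAnalysis` is a hypothetical name, and each element of `checks` stands for the combined result of the metric and webhook checks in one interval.

```go
package main

import "fmt"

// simulateAnalysis walks through per-interval check results and returns
// the resulting phase and the number of failed checks.
// Hypothetical model: a passing check advances one iteration, a failing
// check halts advancement and counts towards the threshold.
func simulateAnalysis(checks []bool, iterations, threshold int) (string, int) {
	passed, failed := 0, 0
	for _, ok := range checks {
		if ok {
			passed++
			if passed >= iterations {
				return "Succeeded", failed
			}
			continue
		}
		failed++
		if failed >= threshold {
			return "Failed", failed
		}
	}
	return "Progressing", failed
}

func main() {
	// Three good intervals followed by two bad ones, as in the events above.
	phase, failed := simulateAnalysis([]bool{true, true, true, false, false}, 10, 2)
	fmt.Println(phase, failed)
}
```

With `iterations: 10` and `threshold: 2`, three passing intervals followed by two failing ones end in the `Failed` phase with two failed checks, which mirrors the status shown in the event log.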

|

||||

@@ -98,6 +98,7 @@ type CanaryStatus struct {

|

||||

Phase CanaryPhase `json:"phase"`

|

||||

FailedChecks int `json:"failedChecks"`

|

||||

CanaryWeight int `json:"canaryWeight"`

|

||||

Iterations int `json:"iterations"`

|

||||

// +optional

|

||||

TrackedConfigs *map[string]string `json:"trackedConfigs,omitempty"`

|

||||

// +optional

|

||||

@@ -122,12 +123,14 @@ type CanaryService struct {

|

||||

|

||||

// CanaryAnalysis is used to describe how the analysis should be done

|

||||

type CanaryAnalysis struct {

|

||||

Interval string `json:"interval"`

|

||||

Threshold int `json:"threshold"`

|

||||

MaxWeight int `json:"maxWeight"`

|

||||

StepWeight int `json:"stepWeight"`

|

||||

Metrics []CanaryMetric `json:"metrics"`

|

||||

Webhooks []CanaryWebhook `json:"webhooks,omitempty"`

|

||||

Interval string `json:"interval"`

|

||||

Threshold int `json:"threshold"`

|

||||

MaxWeight int `json:"maxWeight"`

|

||||

StepWeight int `json:"stepWeight"`

|

||||

Metrics []CanaryMetric `json:"metrics"`

|

||||

Webhooks []CanaryWebhook `json:"webhooks,omitempty"`

|

||||

Match []istiov1alpha3.HTTPMatchRequest `json:"match,omitempty"`

|

||||

Iterations int `json:"iterations,omitempty"`

|

||||

}

|

||||

|

||||

// CanaryMetric holds the reference to Istio metrics used for canary analysis

|

||||

|

||||

@@ -69,6 +69,13 @@ func (in *CanaryAnalysis) DeepCopyInto(out *CanaryAnalysis) {

|

||||

(*in)[i].DeepCopyInto(&(*out)[i])

|

||||

}

|

||||

}

|

||||

if in.Match != nil {

|

||||

in, out := &in.Match, &out.Match

|

||||

*out = make([]istiov1alpha3.HTTPMatchRequest, len(*in))

|

||||

for i := range *in {

|

||||

(*in)[i].DeepCopyInto(&(*out)[i])

|

||||

}

|

||||

}

|

||||

return

|

||||

}

|

||||

|

||||

|

||||

@@ -2,6 +2,8 @@ package controller

|

||||

|

||||

import (

|

||||

"github.com/stefanprodan/flagger/pkg/apis/flagger/v1alpha3"

|

||||

istiov1alpha1 "github.com/stefanprodan/flagger/pkg/apis/istio/common/v1alpha1"

|

||||

istiov1alpha3 "github.com/stefanprodan/flagger/pkg/apis/istio/v1alpha3"

|

||||

clientset "github.com/stefanprodan/flagger/pkg/client/clientset/versioned"

|

||||

fakeFlagger "github.com/stefanprodan/flagger/pkg/client/clientset/versioned/fake"

|

||||

informers "github.com/stefanprodan/flagger/pkg/client/informers/externalversions"

|

||||

@@ -38,9 +40,12 @@ type Mocks struct {

|

||||

router router.Interface

|

||||

}

|

||||

|

||||

func SetupMocks() Mocks {

|

||||

func SetupMocks(abtest bool) Mocks {

|

||||

// init canary

|

||||

canary := newTestCanary()

|

||||

if abtest {

|

||||

canary = newTestCanaryAB()

|

||||

}

|

||||

flaggerClient := fakeFlagger.NewSimpleClientset(canary)

|

||||

|

||||

// init kube clientset and register mock objects

|

||||

@@ -261,6 +266,55 @@ func newTestCanary() *v1alpha3.Canary {

|

||||

return cd

|

||||

}

|

||||

|

||||

func newTestCanaryAB() *v1alpha3.Canary {

|

||||

cd := &v1alpha3.Canary{

|

||||

TypeMeta: metav1.TypeMeta{APIVersion: v1alpha3.SchemeGroupVersion.String()},

|

||||

ObjectMeta: metav1.ObjectMeta{

|

||||

Namespace: "default",

|

||||

Name: "podinfo",

|

||||

},

|

||||

Spec: v1alpha3.CanarySpec{

|

||||

TargetRef: hpav1.CrossVersionObjectReference{

|

||||

Name: "podinfo",

|

||||

APIVersion: "apps/v1",

|

||||

Kind: "Deployment",

|

||||

},

|

||||

AutoscalerRef: &hpav1.CrossVersionObjectReference{

|

||||

Name: "podinfo",

|

||||

APIVersion: "autoscaling/v2beta1",

|

||||

Kind: "HorizontalPodAutoscaler",

|

||||

}, Service: v1alpha3.CanaryService{

|

||||

Port: 9898,

|

||||

}, CanaryAnalysis: v1alpha3.CanaryAnalysis{

|

||||

Threshold: 10,

|

||||

Iterations: 10,

|

||||

Match: []istiov1alpha3.HTTPMatchRequest{

|

||||

{

|

||||

Headers: map[string]istiov1alpha1.StringMatch{

|

||||

"x-user-type": {

|

||||

Exact: "test",

|

||||

},

|

||||

},

|

||||

},

|

||||

},

|

||||

Metrics: []v1alpha3.CanaryMetric{

|

||||

{

|

||||

Name: "istio_requests_total",

|

||||

Threshold: 99,

|

||||

Interval: "1m",

|

||||

},

|

||||

{

|

||||

Name: "istio_request_duration_seconds_bucket",

|

||||

Threshold: 500,

|

||||

Interval: "1m",

|

||||

},

|

||||

},

|

||||

},

|

||||

},

|

||||

}

|

||||

return cd

|

||||

}

|

||||

|

||||

func newTestDeployment() *appsv1.Deployment {

|

||||

d := &appsv1.Deployment{

|

||||

TypeMeta: metav1.TypeMeta{APIVersion: appsv1.SchemeGroupVersion.String()},

|

||||

|

||||

@@ -220,6 +220,32 @@ func (c *CanaryDeployer) SetStatusWeight(cd *flaggerv1.Canary, val int) error {

|

||||

return nil

|

||||

}

|

||||

|

||||

// SetStatusIterations updates the canary status iterations value

|

||||

func (c *CanaryDeployer) SetStatusIterations(cd *flaggerv1.Canary, val int) error {

|

||||

cdCopy := cd.DeepCopy()

|

||||

cdCopy.Status.Iterations = val

|

||||

cdCopy.Status.LastTransitionTime = metav1.Now()

|

||||

|

||||

cd, err := c.flaggerClient.FlaggerV1alpha3().Canaries(cd.Namespace).UpdateStatus(cdCopy)

|

||||

if err != nil {

|

||||

return fmt.Errorf("canary %s.%s status update error %v", cdCopy.Name, cdCopy.Namespace, err)

|

||||

}

|

||||

return nil

|

||||

}

|

||||

|

||||

// IncrementStatusIterations increments the canary status iterations value by one

|

||||

func (c *CanaryDeployer) IncrementStatusIterations(cd *flaggerv1.Canary) error {

|

||||

cdCopy := cd.DeepCopy()

|

||||

cdCopy.Status.Iterations = cdCopy.Status.Iterations + 1

|

||||

cdCopy.Status.LastTransitionTime = metav1.Now()

|

||||

|

||||

cd, err := c.flaggerClient.FlaggerV1alpha3().Canaries(cd.Namespace).UpdateStatus(cdCopy)

|

||||

if err != nil {

|

||||

return fmt.Errorf("canary %s.%s status update error %v", cdCopy.Name, cdCopy.Namespace, err)

|

||||

}

|

||||

return nil

|

||||

}

|

||||

|

||||

// SetStatusPhase updates the canary status phase

|

||||

func (c *CanaryDeployer) SetStatusPhase(cd *flaggerv1.Canary, phase flaggerv1.CanaryPhase) error {

|

||||

cdCopy := cd.DeepCopy()

|

||||

@@ -228,6 +254,7 @@ func (c *CanaryDeployer) SetStatusPhase(cd *flaggerv1.Canary, phase flaggerv1.Ca

|

||||

|

||||

if phase != flaggerv1.CanaryProgressing {

|

||||

cdCopy.Status.CanaryWeight = 0

|

||||

cdCopy.Status.Iterations = 0

|

||||

}

|

||||

|

||||

cd, err := c.flaggerClient.FlaggerV1alpha3().Canaries(cd.Namespace).UpdateStatus(cdCopy)

|

||||

@@ -261,6 +288,7 @@ func (c *CanaryDeployer) SyncStatus(cd *flaggerv1.Canary, status flaggerv1.Canar

|

||||

cdCopy.Status.Phase = status.Phase

|

||||

cdCopy.Status.CanaryWeight = status.CanaryWeight

|

||||

cdCopy.Status.FailedChecks = status.FailedChecks

|

||||

cdCopy.Status.Iterations = status.Iterations

|

||||

cdCopy.Status.LastAppliedSpec = base64.StdEncoding.EncodeToString(specJson)

|

||||

cdCopy.Status.LastTransitionTime = metav1.Now()

|

||||

cdCopy.Status.TrackedConfigs = configs

|

||||

|

||||

@@ -8,7 +8,7 @@ import (

|

||||

)

|

||||

|

||||

func TestCanaryDeployer_Sync(t *testing.T) {

|

||||

mocks := SetupMocks()

|

||||

mocks := SetupMocks(false)

|

||||

err := mocks.deployer.Sync(mocks.canary)

|

||||

if err != nil {

|

||||

t.Fatal(err.Error())

|

||||

@@ -94,7 +94,7 @@ func TestCanaryDeployer_Sync(t *testing.T) {

|

||||

}

|

||||

|

||||

func TestCanaryDeployer_IsNewSpec(t *testing.T) {

|

||||

mocks := SetupMocks()

|

||||

mocks := SetupMocks(false)

|

||||

err := mocks.deployer.Sync(mocks.canary)

|

||||

if err != nil {

|

||||

t.Fatal(err.Error())

|

||||

@@ -117,7 +117,7 @@ func TestCanaryDeployer_IsNewSpec(t *testing.T) {

|

||||

}

|

||||

|

||||

func TestCanaryDeployer_Promote(t *testing.T) {

|

||||

mocks := SetupMocks()

|

||||

mocks := SetupMocks(false)

|

||||

err := mocks.deployer.Sync(mocks.canary)

|

||||

if err != nil {

|

||||

t.Fatal(err.Error())

|

||||

@@ -162,7 +162,7 @@ func TestCanaryDeployer_Promote(t *testing.T) {

|

||||

}

|

||||

|

||||

func TestCanaryDeployer_IsReady(t *testing.T) {

|

||||

mocks := SetupMocks()

|

||||

mocks := SetupMocks(false)

|

||||

err := mocks.deployer.Sync(mocks.canary)

|

||||

if err != nil {

|

||||

t.Fatal(err.Error())

|

||||

@@ -180,7 +180,7 @@ func TestCanaryDeployer_IsReady(t *testing.T) {

|

||||

}

|

||||

|

||||

func TestCanaryDeployer_SetFailedChecks(t *testing.T) {

|

||||

mocks := SetupMocks()

|

||||

mocks := SetupMocks(false)

|

||||

err := mocks.deployer.Sync(mocks.canary)

|

||||

if err != nil {

|

||||

t.Fatal(err.Error())

|

||||

@@ -202,7 +202,7 @@ func TestCanaryDeployer_SetFailedChecks(t *testing.T) {

|

||||

}

|

||||

|

||||

func TestCanaryDeployer_SetState(t *testing.T) {

|

||||

mocks := SetupMocks()

|

||||

mocks := SetupMocks(false)

|

||||

err := mocks.deployer.Sync(mocks.canary)

|

||||

if err != nil {

|

||||

t.Fatal(err.Error())

|

||||

@@ -224,7 +224,7 @@ func TestCanaryDeployer_SetState(t *testing.T) {

|

||||

}

|

||||

|

||||

func TestCanaryDeployer_SyncStatus(t *testing.T) {

|

||||

mocks := SetupMocks()

|

||||

mocks := SetupMocks(false)

|

||||

err := mocks.deployer.Sync(mocks.canary)

|

||||

if err != nil {

|

||||

t.Fatal(err.Error())

|

||||

@@ -263,7 +263,7 @@ func TestCanaryDeployer_SyncStatus(t *testing.T) {

|

||||

}

|

||||

|

||||

func TestCanaryDeployer_Scale(t *testing.T) {

|

||||

mocks := SetupMocks()

|

||||

mocks := SetupMocks(false)

|

||||

err := mocks.deployer.Sync(mocks.canary)

|

||||

if err != nil {

|

||||

t.Fatal(err.Error())

|

||||

|

||||

@@ -4,12 +4,13 @@ import "time"

|

||||

|

||||

// CanaryJob holds the reference to a canary deployment schedule

|

||||

type CanaryJob struct {

|

||||

Name string

|

||||

Namespace string

|

||||

SkipTests bool

|

||||

function func(name string, namespace string, skipTests bool)

|

||||

done chan bool

|

||||

ticker *time.Ticker

|

||||

Name string

|

||||

Namespace string

|

||||

SkipTests bool

|

||||

function func(name string, namespace string, skipTests bool)

|

||||

done chan bool

|

||||

ticker *time.Ticker

|

||||

analysisInterval time.Duration

|

||||

}

|

||||

|

||||

// Start runs the canary analysis on a schedule

|

||||

@@ -33,3 +34,7 @@ func (j CanaryJob) Stop() {

|

||||

close(j.done)

|

||||

j.ticker.Stop()

|

||||

}

|

||||

|

||||

func (j CanaryJob) GetCanaryAnalysisInterval() time.Duration {

|

||||

return j.analysisInterval

|

||||

}

|

||||

|

||||

@@ -24,18 +24,24 @@ func (c *Controller) scheduleCanaries() {

|

||||

name := key.(string)

|

||||

current[name] = fmt.Sprintf("%s.%s", canary.Spec.TargetRef.Name, canary.Namespace)

|

||||

|

||||

// schedule new jobs

|

||||

if _, exists := c.jobs[name]; !exists {

|

||||

job := CanaryJob{

|

||||

Name: canary.Name,

|

||||

Namespace: canary.Namespace,

|

||||

function: c.advanceCanary,

|

||||

done: make(chan bool),

|

||||

ticker: time.NewTicker(canary.GetAnalysisInterval()),

|

||||

job, exists := c.jobs[name]

|

||||

// schedule a new job if none exists, or replace an existing job whose analysisInterval has changed

|

||||

if (exists && job.GetCanaryAnalysisInterval() != canary.GetAnalysisInterval()) || !exists {

|

||||

if exists {

|

||||

job.Stop()

|

||||

}

|

||||

|

||||

c.jobs[name] = job

|

||||

job.Start()

|

||||

newJob := CanaryJob{

|

||||

Name: canary.Name,

|

||||

Namespace: canary.Namespace,

|

||||

function: c.advanceCanary,

|

||||

done: make(chan bool),

|

||||

ticker: time.NewTicker(canary.GetAnalysisInterval()),

|

||||

analysisInterval: canary.GetAnalysisInterval(),

|

||||

}

|

||||

|

||||

c.jobs[name] = newJob

|

||||

newJob.Start()

|

||||

}

|

||||

|

||||

// compute canaries per namespace total

|

||||

@@ -76,10 +82,13 @@ func (c *Controller) advanceCanary(name string, namespace string, skipLivenessCh

|

||||

// check if the canary exists

|

||||

cd, err := c.flaggerClient.FlaggerV1alpha3().Canaries(namespace).Get(name, v1.GetOptions{})

|

||||

if err != nil {

|

||||

c.logger.With("canary", fmt.Sprintf("%s.%s", name, namespace)).Errorf("Canary %s.%s not found", name, namespace)

|

||||

c.logger.With("canary", fmt.Sprintf("%s.%s", name, namespace)).

|

||||

Errorf("Canary %s.%s not found", name, namespace)

|

||||

return

|

||||

}

|

||||

|

||||

primaryName := fmt.Sprintf("%s-primary", cd.Spec.TargetRef.Name)

|

||||

|

||||

// create primary deployment and hpa if needed

|

||||

if err := c.deployer.Sync(cd); err != nil {

|

||||

c.recordEventWarningf(cd, "%v", err)

|

||||

@@ -160,6 +169,7 @@ func (c *Controller) advanceCanary(name string, namespace string, skipLivenessCh

|

||||

Phase: flaggerv1.CanaryProgressing,

|

||||

CanaryWeight: 0,

|

||||

FailedChecks: 0,

|

||||

Iterations: 0,

|

||||

}

|

||||

if err := c.deployer.SyncStatus(cd, status); err != nil {

|

||||

c.recordEventWarningf(cd, "%v", err)

|

||||

@@ -247,7 +257,72 @@ func (c *Controller) advanceCanary(name string, namespace string, skipLivenessCh

|

||||

}

|

||||

}

|

||||

|

||||

// increase canary traffic percentage

|

||||

// canary fixed routing: A/B testing

|

||||

if len(cd.Spec.CanaryAnalysis.Match) > 0 {

|

||||

// route traffic to canary and increment iterations

|

||||

if cd.Spec.CanaryAnalysis.Iterations > cd.Status.Iterations {

|

||||

if err := meshRouter.SetRoutes(cd, 0, 100); err != nil {

|

||||

c.recordEventWarningf(cd, "%v", err)

|

||||

return

|

||||

}

|

||||

c.recorder.SetWeight(cd, 0, 100)

|

||||

|

||||

if err := c.deployer.SetStatusIterations(cd, cd.Status.Iterations+1); err != nil {

|

||||

c.recordEventWarningf(cd, "%v", err)

|

||||

return

|

||||

}

|

||||

c.recordEventInfof(cd, "Advance %s.%s canary iteration %v/%v",

|

||||

cd.Name, cd.Namespace, cd.Status.Iterations+1, cd.Spec.CanaryAnalysis.Iterations)

|

||||

return

|

||||

}

|

||||

|

||||

// promote canary - max iterations reached

|

||||

if cd.Spec.CanaryAnalysis.Iterations == cd.Status.Iterations {

|

||||

c.recordEventInfof(cd, "Copying %s.%s template spec to %s.%s",

|

||||

cd.Spec.TargetRef.Name, cd.Namespace, primaryName, cd.Namespace)

|

||||

if err := c.deployer.Promote(cd); err != nil {

|

||||

c.recordEventWarningf(cd, "%v", err)

|

||||

return

|

||||

}

|

||||

// increment iterations

|

||||

if err := c.deployer.SetStatusIterations(cd, cd.Status.Iterations+1); err != nil {

|

||||

c.recordEventWarningf(cd, "%v", err)

|

||||

return

|

||||

}

|

||||

return

|

||||

}

|

||||

|

||||

// shutdown canary

|

||||

if cd.Spec.CanaryAnalysis.Iterations < cd.Status.Iterations {

|

||||

// route all traffic to the primary

|

||||

if err := meshRouter.SetRoutes(cd, 100, 0); err != nil {

|

||||

c.recordEventWarningf(cd, "%v", err)

|

||||

return

|

||||

}

|

||||

c.recorder.SetWeight(cd, 100, 0)

|

||||

c.recordEventInfof(cd, "Promotion completed! Scaling down %s.%s", cd.Spec.TargetRef.Name, cd.Namespace)

|

||||

|

||||

// canary scale to zero

|

||||

if err := c.deployer.Scale(cd, 0); err != nil {

|

||||

c.recordEventWarningf(cd, "%v", err)

|

||||

return

|

||||

}

|

||||

|

||||

// update status phase

|

||||

if err := c.deployer.SetStatusPhase(cd, flaggerv1.CanarySucceeded); err != nil {

|

||||

c.recordEventWarningf(cd, "%v", err)

|

||||

return

|

||||

}

|

||||

c.recorder.SetStatus(cd)

|

||||

c.sendNotification(cd, "Canary analysis completed successfully, promotion finished.",

|

||||

false, false)

|

||||

return

|

||||

}

|

||||

|

||||

return

|

||||

}

|

||||

|

||||

// canary incremental traffic weight

|

||||

if canaryWeight < maxWeight {

|

||||

primaryWeight -= cd.Spec.CanaryAnalysis.StepWeight

|

||||

if primaryWeight < 0 {

|

||||
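The A/B testing branch added to `advanceCanary` in this hunk compares the configured iterations with the iterations recorded in the status and takes one of three steps. A rough sketch of that decision as a pure function, assuming hypothetical names (`abAction`, `nextABAction`) that do not exist in Flagger:

```go
package main

// abAction names the step taken on one run of the A/B testing branch.
type abAction string

const (
	actionAdvance  abAction = "advance"  // route to canary, increment iterations
	actionPromote  abAction = "promote"  // copy canary spec to primary, increment iterations
	actionShutdown abAction = "shutdown" // route to primary, scale canary to zero
)

// nextABAction mirrors the three comparisons in the diff:
// spec > status advances, spec == status promotes, spec < status shuts down.
func nextABAction(specIterations, statusIterations int) abAction {
	switch {
	case specIterations > statusIterations:
		return actionAdvance
	case specIterations == statusIterations:
		return actionPromote
	default:
		return actionShutdown
	}
}
```

Because promotion also increments the status counter, the next scheduled run falls into the shutdown case, which is what `TestScheduler_ABTesting` exercises with its final two `advanceCanary` calls.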

@@ -273,7 +348,6 @@ func (c *Controller) advanceCanary(name string, namespace string, skipLivenessCh

|

||||

c.recordEventInfof(cd, "Advance %s.%s canary weight %v", cd.Name, cd.Namespace, canaryWeight)

|

||||

|

||||

// promote canary

|

||||

primaryName := fmt.Sprintf("%s-primary", cd.Spec.TargetRef.Name)

|

||||

if canaryWeight == maxWeight {

|

||||

c.recordEventInfof(cd, "Copying %s.%s template spec to %s.%s",

|

||||

cd.Spec.TargetRef.Name, cd.Namespace, primaryName, cd.Namespace)

|

||||

|

||||

@@ -7,7 +7,7 @@ import (

|

||||

)

|

||||

|

||||

func TestScheduler_Init(t *testing.T) {

|

||||

mocks := SetupMocks()

|

||||

mocks := SetupMocks(false)

|

||||

mocks.ctrl.advanceCanary("podinfo", "default", false)

|

||||

|

||||

_, err := mocks.kubeClient.AppsV1().Deployments("default").Get("podinfo-primary", metav1.GetOptions{})

|

||||

@@ -17,7 +17,7 @@ func TestScheduler_Init(t *testing.T) {

|

||||

}

|

||||

|

||||

func TestScheduler_NewRevision(t *testing.T) {

|

||||

mocks := SetupMocks()

|

||||

mocks := SetupMocks(false)

|

||||

mocks.ctrl.advanceCanary("podinfo", "default", false)

|

||||

|

||||

// update

|

||||

@@ -41,7 +41,7 @@ func TestScheduler_NewRevision(t *testing.T) {

|

||||

}

|

||||

|

||||

func TestScheduler_Rollback(t *testing.T) {

|

||||

mocks := SetupMocks()

|

||||

mocks := SetupMocks(false)

|

||||

// init

|

||||

mocks.ctrl.advanceCanary("podinfo", "default", true)

|

||||

|

||||

@@ -65,7 +65,7 @@ func TestScheduler_Rollback(t *testing.T) {

|

||||

}

|

||||

|

||||

func TestScheduler_SkipAnalysis(t *testing.T) {

|

||||

mocks := SetupMocks()

|

||||

mocks := SetupMocks(false)

|

||||

// init

|

||||

mocks.ctrl.advanceCanary("podinfo", "default", false)

|

||||

|

||||

@@ -106,7 +106,7 @@ func TestScheduler_SkipAnalysis(t *testing.T) {

|

||||

}

|

||||

|

||||

func TestScheduler_NewRevisionReset(t *testing.T) {

|

||||

mocks := SetupMocks()

|

||||

mocks := SetupMocks(false)

|

||||

// init

|

||||

mocks.ctrl.advanceCanary("podinfo", "default", false)

|

||||

|

||||

@@ -160,7 +160,7 @@ func TestScheduler_NewRevisionReset(t *testing.T) {

|

||||

}

|

||||

|

||||

func TestScheduler_Promotion(t *testing.T) {

|

||||

mocks := SetupMocks()

|

||||

mocks := SetupMocks(false)

|

||||

// init

|

||||

mocks.ctrl.advanceCanary("podinfo", "default", false)

|

||||

|

||||

@@ -258,3 +258,74 @@ func TestScheduler_Promotion(t *testing.T) {

|

||||

t.Errorf("Got canary state %v wanted %v", c.Status.Phase, v1alpha3.CanarySucceeded)

|

||||

}

|

||||

}

|

||||

|

||||

func TestScheduler_ABTesting(t *testing.T) {

|

||||

mocks := SetupMocks(true)

|

||||

// init

|

||||

mocks.ctrl.advanceCanary("podinfo", "default", false)

|

||||

|

||||

// update

|

||||

dep2 := newTestDeploymentV2()

|

||||

_, err := mocks.kubeClient.AppsV1().Deployments("default").Update(dep2)

|

||||

if err != nil {

|

||||

t.Fatal(err.Error())

|

||||

}

|

||||

|

||||

// detect pod spec changes

|

||||

mocks.ctrl.advanceCanary("podinfo", "default", true)

|

||||

|

||||

// advance

|

||||

mocks.ctrl.advanceCanary("podinfo", "default", true)

|

||||

|

||||

// check if traffic is routed to canary

|

||||

primaryWeight, canaryWeight, err := mocks.router.GetRoutes(mocks.canary)

|

||||

if err != nil {

|

||||

t.Fatal(err.Error())

|

||||

}

|

||||

|

||||

if primaryWeight != 0 {

|

||||

t.Errorf("Got primary route %v wanted %v", primaryWeight, 0)

|

||||

}

|

||||

|

||||

if canaryWeight != 100 {

|

||||

t.Errorf("Got canary route %v wanted %v", canaryWeight, 100)

|

||||

}

|

||||

|

||||

cd, err := mocks.flaggerClient.FlaggerV1alpha3().Canaries("default").Get("podinfo", metav1.GetOptions{})

|

||||

if err != nil {

|

||||

t.Fatal(err.Error())

|

||||

}

|

||||

|

||||

// set max iterations

|

||||

if err := mocks.deployer.SetStatusIterations(cd, 10); err != nil {

|

||||

t.Fatal(err.Error())

|

||||

}

|

||||

|

||||

// promote

|

||||

mocks.ctrl.advanceCanary("podinfo", "default", true)

|

||||

|

||||

// check if the container image tag was updated

|

||||

primaryDep, err := mocks.kubeClient.AppsV1().Deployments("default").Get("podinfo-primary", metav1.GetOptions{})

|

||||

if err != nil {

|

||||

t.Fatal(err.Error())

|

||||

}

|

||||

|

||||

primaryImage := primaryDep.Spec.Template.Spec.Containers[0].Image

|

||||

canaryImage := dep2.Spec.Template.Spec.Containers[0].Image

|

||||

if primaryImage != canaryImage {

|

||||

t.Errorf("Got primary image %v wanted %v", primaryImage, canaryImage)

|

||||

}

|

||||

|

||||

// shutdown canary

|

||||

mocks.ctrl.advanceCanary("podinfo", "default", true)

|

||||

|

||||

// check rollout status

|

||||

c, err := mocks.flaggerClient.FlaggerV1alpha3().Canaries("default").Get("podinfo", metav1.GetOptions{})

|

||||

if err != nil {

|

||||

t.Fatal(err.Error())

|

||||

}

|

||||

|

||||

if c.Status.Phase != v1alpha3.CanarySucceeded {

|

||||

t.Errorf("Got canary state %v wanted %v", c.Status.Phase, v1alpha3.CanarySucceeded)

|

||||

}

|

||||

}

|

||||

|

||||

@@ -55,7 +55,7 @@ func (ir *IstioRouter) Sync(canary *flaggerv1.Canary) error {

|

||||

}

|

||||

|

||||

// create destinations with primary weight 100% and canary weight 0%

|

||||

route := []istiov1alpha3.DestinationWeight{

|

||||

canaryRoute := []istiov1alpha3.DestinationWeight{

|

||||

{

|

||||

Destination: istiov1alpha3.Destination{

|

||||

Host: primaryName,

|

||||

@@ -87,11 +87,45 @@ func (ir *IstioRouter) Sync(canary *flaggerv1.Canary) error {

|

||||

Retries: canary.Spec.Service.Retries,

|

||||

CorsPolicy: canary.Spec.Service.CorsPolicy,

|

||||

AppendHeaders: addHeaders(canary),

|

||||

Route: route,

|

||||

Route: canaryRoute,

|

||||

},

|

||||

},

|

||||

}

|

||||

|

||||

if len(canary.Spec.CanaryAnalysis.Match) > 0 {

|

||||

canaryMatch := mergeMatchConditions(canary.Spec.CanaryAnalysis.Match, canary.Spec.Service.Match)

|

||||

newSpec.Http = []istiov1alpha3.HTTPRoute{

|

||||

{

|

||||

Match: canaryMatch,

|

||||

Rewrite: canary.Spec.Service.Rewrite,

|

||||

Timeout: canary.Spec.Service.Timeout,

|

||||

Retries: canary.Spec.Service.Retries,

|

||||

CorsPolicy: canary.Spec.Service.CorsPolicy,

|

||||

AppendHeaders: addHeaders(canary),

|

||||

Route: canaryRoute,

|

||||

},

|

||||

{

|

||||

Match: canary.Spec.Service.Match,

|

||||

Rewrite: canary.Spec.Service.Rewrite,

|

||||

Timeout: canary.Spec.Service.Timeout,

|

||||

Retries: canary.Spec.Service.Retries,

|

||||

CorsPolicy: canary.Spec.Service.CorsPolicy,

|

||||

AppendHeaders: addHeaders(canary),

|

||||

Route: []istiov1alpha3.DestinationWeight{

|

||||

{

|

||||

Destination: istiov1alpha3.Destination{

|

||||

Host: primaryName,

|

||||

Port: istiov1alpha3.PortSelector{

|

||||

Number: uint32(canary.Spec.Service.Port),

|

||||

},

|

||||

},

|

||||

Weight: 100,

|

||||

},

|

||||

},

|

||||

},

|

||||

}

|

||||

}

|

||||

|

||||

virtualService, err := ir.istioClient.NetworkingV1alpha3().VirtualServices(canary.Namespace).Get(targetName, metav1.GetOptions{})

|

||||

// insert

|

||||

if errors.IsNotFound(err) {

|

||||

@@ -160,17 +194,25 @@ func (ir *IstioRouter) GetRoutes(canary *flaggerv1.Canary) (

|

||||

return

|

||||

}

|

||||

|

||||

var httpRoute istiov1alpha3.HTTPRoute

|

||||

for _, http := range vs.Spec.Http {

|

||||

for _, route := range http.Route {

|

||||

if route.Destination.Host == fmt.Sprintf("%s-primary", targetName) {

|

||||

primaryWeight = route.Weight

|

||||

}

|

||||

if route.Destination.Host == fmt.Sprintf("%s-canary", targetName) {

|

||||

canaryWeight = route.Weight

|

||||

for _, r := range http.Route {

|

||||

if r.Destination.Host == fmt.Sprintf("%s-canary", targetName) {

|

||||

httpRoute = http

|

||||

break

|

||||

}

|

||||

}

|

||||

}

|

||||

|

||||

for _, route := range httpRoute.Route {

|

||||

if route.Destination.Host == fmt.Sprintf("%s-primary", targetName) {

|

||||

primaryWeight = route.Weight

|

||||

}

|

||||

if route.Destination.Host == fmt.Sprintf("%s-canary", targetName) {

|

||||

canaryWeight = route.Weight

|

||||

}

|

||||

}

|

||||

|

||||

if primaryWeight == 0 && canaryWeight == 0 {

|

||||

err = fmt.Errorf("VirtualService %s.%s does not contain routes for %s-primary and %s-canary",

|

||||

targetName, canary.Namespace, targetName, targetName)

|

||||
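With A/B testing the VirtualService holds two HTTP routes, so the revised `GetRoutes` first selects the route that contains the canary destination and only then reads the weights. A simplified sketch of that two-pass lookup, assuming hypothetical flattened types (`destination`, `httpRoute`) in place of the Istio API structs:

```go
package main

// destination is a hypothetical flattened form of a weighted route entry.
type destination struct {
	Host   string
	Weight int
}

// httpRoute is a hypothetical stand-in for an Istio HTTP route.
type httpRoute struct {
	Route []destination
}

// routeWeights selects the HTTP route that references the canary host and
// returns the primary and canary weights found in that route only.
func routeWeights(routes []httpRoute, target string) (primary, canary int) {
	var selected httpRoute
	for _, h := range routes {
		for _, r := range h.Route {
			if r.Host == target+"-canary" {
				selected = h
				break // leaves the inner loop only, as in the diff
			}
		}
	}
	for _, r := range selected.Route {
		switch r.Host {
		case target + "-primary":
			primary = r.Weight
		case target + "-canary":
			canary = r.Weight
		}
	}
	return primary, canary
}
```

Reading weights from the selected route avoids picking up the 100% primary weight of the fallback route, which would otherwise overwrite the primary weight of the canary route.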

@@ -196,6 +238,8 @@ func (ir *IstioRouter) SetRoutes(

|

||||

}

|

||||

|

||||

vsCopy := vs.DeepCopy()

|

||||

|

||||

// weighted routing (progressive canary)

|

||||

vsCopy.Spec.Http = []istiov1alpha3.HTTPRoute{

|

||||

{

|

||||

Match: canary.Spec.Service.Match,

|

||||

@@ -227,6 +271,61 @@ func (ir *IstioRouter) SetRoutes(

|

||||

},

|

||||

}

|

||||

|

||||

// fix routing (A/B testing)

|

||||

if len(canary.Spec.CanaryAnalysis.Match) > 0 {

|

||||

// merge the common routes with the canary ones

|

||||

canaryMatch := mergeMatchConditions(canary.Spec.CanaryAnalysis.Match, canary.Spec.Service.Match)

|

||||

vsCopy.Spec.Http = []istiov1alpha3.HTTPRoute{

|

||||

{

|

||||

Match: canaryMatch,

|

||||

Rewrite: canary.Spec.Service.Rewrite,

|

||||

Timeout: canary.Spec.Service.Timeout,

|

||||

Retries: canary.Spec.Service.Retries,

|

||||

CorsPolicy: canary.Spec.Service.CorsPolicy,

|

||||

AppendHeaders: addHeaders(canary),

|

||||

Route: []istiov1alpha3.DestinationWeight{

|

||||

{

|

||||

Destination: istiov1alpha3.Destination{

|

||||

Host: fmt.Sprintf("%s-primary", targetName),

|

||||

Port: istiov1alpha3.PortSelector{

|

||||

Number: uint32(canary.Spec.Service.Port),

|

||||

},

|

||||

},

|

||||

Weight: primaryWeight,

|

||||

},

|

||||

{

|

||||

Destination: istiov1alpha3.Destination{

|

||||

Host: fmt.Sprintf("%s-canary", targetName),

|

||||

Port: istiov1alpha3.PortSelector{

|

||||

Number: uint32(canary.Spec.Service.Port),

|

||||

},

|

||||

},

|

||||

Weight: canaryWeight,

|

||||

},

|

||||

},

|

||||

},

|

||||

{

|

||||

Match: canary.Spec.Service.Match,

|

||||

Rewrite: canary.Spec.Service.Rewrite,

|

||||

Timeout: canary.Spec.Service.Timeout,

|

||||

Retries: canary.Spec.Service.Retries,

|

||||

CorsPolicy: canary.Spec.Service.CorsPolicy,

|

||||

AppendHeaders: addHeaders(canary),

|

||||

Route: []istiov1alpha3.DestinationWeight{

|

||||

{

|

||||

Destination: istiov1alpha3.Destination{

|

||||

Host: fmt.Sprintf("%s-primary", targetName),

|

||||

Port: istiov1alpha3.PortSelector{

|

||||

Number: uint32(canary.Spec.Service.Port),

|

||||

},

|

||||

},

|

||||

Weight: primaryWeight,

|

||||

},

|

||||

},

|

||||

},

|

||||

}

|

||||

}

|

||||

|

||||

vs, err = ir.istioClient.NetworkingV1alpha3().VirtualServices(canary.Namespace).Update(vsCopy)

|

||||

if err != nil {

|

||||

return fmt.Errorf("VirtualService %s.%s update failed: %v", targetName, canary.Namespace, err)

|

||||

@@ -246,3 +345,16 @@ func addHeaders(canary *flaggerv1.Canary) (headers map[string]string) {

|

||||

|

||||

return

|

||||

}

|

||||

|

||||

// mergeMatchConditions appends the URI match rules to canary conditions

|

||||

func mergeMatchConditions(canary, defaults []istiov1alpha3.HTTPMatchRequest) []istiov1alpha3.HTTPMatchRequest {

|

||||

for i := range canary {

|

||||

for _, d := range defaults {

|

||||

if d.Uri != nil {

|

||||

canary[i].Uri = d.Uri

|

||||

}

|

||||

}

|

||||

}

|

||||

|

||||

return canary

|

||||

}

|

||||

|

||||
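`mergeMatchConditions` copies the URI rule from the service-level match conditions onto every canary match condition, so the header or cookie match only applies to the same paths. The sketch below illustrates the idea with hypothetical simplified types (`StringMatch`, `HTTPMatchRequest`, `mergeURI`); unlike the function in the diff, which writes into the `canary` slice it receives, this variant works on a copy:

```go
package main

// StringMatch and HTTPMatchRequest are simplified, hypothetical stand-ins
// for the Istio types referenced in the diff.
type StringMatch struct {
	Exact  string
	Prefix string
}

type HTTPMatchRequest struct {
	Uri     *StringMatch
	Headers map[string]StringMatch
}

// mergeURI returns a copy of the canary conditions in which each entry
// carries the URI rule found in defaults. If several defaults define a
// URI, the last one wins, matching the loop order in the diff.
func mergeURI(canary, defaults []HTTPMatchRequest) []HTTPMatchRequest {
	merged := make([]HTTPMatchRequest, len(canary))
	copy(merged, canary)
	for i := range merged {
		for _, d := range defaults {
			if d.Uri != nil {
				merged[i].Uri = d.Uri
			}
		}
	}
	return merged
}
```

Copying first is a design choice of this sketch, not of the original: it keeps the caller's slice unchanged across repeated calls from `Sync` and `SetRoutes`.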

@@ -239,3 +239,63 @@ func TestIstioRouter_CORS(t *testing.T) {

|

||||

t.Fatalf("Got CORS allow methods %v wanted %v", len(methods), 2)

|

||||

}

|

||||

}

|

||||

|

||||

func TestIstioRouter_ABTest(t *testing.T) {

|

||||

mocks := setupfakeClients()

|

||||

router := &IstioRouter{

|

||||

logger: mocks.logger,

|

||||

flaggerClient: mocks.flaggerClient,

|

||||

istioClient: mocks.istioClient,

|

||||

kubeClient: mocks.kubeClient,

|

||||

}

|

||||

|

||||

err := router.Sync(mocks.abtest)

|

||||

if err != nil {

|

||||

t.Fatal(err.Error())

|

||||

}

|

||||

|

||||

// test insert

|

||||

vs, err := mocks.istioClient.NetworkingV1alpha3().VirtualServices("default").Get("abtest", metav1.GetOptions{})

|

||||

if err != nil {

|

||||

t.Fatal(err.Error())

|

||||

}

|

||||

|

||||

if len(vs.Spec.Http) != 2 {

|

||||

t.Errorf("Got Istio VS Http %v wanted %v", len(vs.Spec.Http), 2)

|

||||

}

|

||||

|

||||

p := 0

|

||||

c := 100

|

||||

|

||||

err = router.SetRoutes(mocks.abtest, p, c)

|

||||

if err != nil {

|

||||

t.Fatal(err.Error())

|

||||

}

|

||||

|

||||

vs, err = mocks.istioClient.NetworkingV1alpha3().VirtualServices("default").Get("abtest", metav1.GetOptions{})

|

||||

if err != nil {

|

||||

t.Fatal(err.Error())

|

||||

}

|

||||

|

||||

pRoute := istiov1alpha3.DestinationWeight{}

|

||||

cRoute := istiov1alpha3.DestinationWeight{}

|

||||

|

||||

for _, http := range vs.Spec.Http {

|

||||

for _, route := range http.Route {

|

||||

if route.Destination.Host == fmt.Sprintf("%s-primary", mocks.abtest.Spec.TargetRef.Name) {

|

||||

pRoute = route

|

||||

}

|

||||

if route.Destination.Host == fmt.Sprintf("%s-canary", mocks.abtest.Spec.TargetRef.Name) {

|

||||

cRoute = route

|

||||

}

|

||||

}

|

||||

}

|

||||

|

||||

if pRoute.Weight != p {

|

||||

t.Errorf("Got primary weight %v wanted %v", pRoute.Weight, p)

|

||||

}

|

||||

|

||||

if cRoute.Weight != c {

|

||||

t.Errorf("Got canary weight %v wanted %v", cRoute.Weight, c)

|

||||

}

|

||||

}

|

||||

|

||||

@@ -2,6 +2,7 @@ package router

|

||||

|

||||

import (

|

||||

"github.com/stefanprodan/flagger/pkg/apis/flagger/v1alpha3"

|

||||

istiov1alpha1 "github.com/stefanprodan/flagger/pkg/apis/istio/common/v1alpha1"

|

||||

istiov1alpha3 "github.com/stefanprodan/flagger/pkg/apis/istio/v1alpha3"

|

||||

clientset "github.com/stefanprodan/flagger/pkg/client/clientset/versioned"

|

||||

fakeFlagger "github.com/stefanprodan/flagger/pkg/client/clientset/versioned/fake"

|

||||

@@ -17,6 +18,7 @@ import (

|

||||

|

||||

type fakeClients struct {

|

||||

canary *v1alpha3.Canary

|

||||

abtest *v1alpha3.Canary

|

||||

kubeClient kubernetes.Interface

|

||||

istioClient clientset.Interface

|

||||

flaggerClient clientset.Interface

|

||||

@@ -25,17 +27,17 @@ type fakeClients struct {

|

||||

|

||||

func setupfakeClients() fakeClients {

|

||||

canary := newMockCanary()

|

||||

flaggerClient := fakeFlagger.NewSimpleClientset(canary)

|

||||

abtest := newMockABTest()

|

||||

flaggerClient := fakeFlagger.NewSimpleClientset(canary, abtest)

|

||||

|

||||

kubeClient := fake.NewSimpleClientset(

|

||||

newMockDeployment(),

|

||||

)

|

||||

kubeClient := fake.NewSimpleClientset(newMockDeployment(), newMockABTestDeployment())

|

||||

|

||||

istioClient := fakeFlagger.NewSimpleClientset()

|

||||

logger, _ := logging.NewLogger("debug")

|

||||

|

||||

return fakeClients{

|

||||

canary: canary,

|

||||

abtest: abtest,

|

||||

kubeClient: kubeClient,

|

||||

istioClient: istioClient,

|

||||

flaggerClient: flaggerClient,

|

||||

@@ -93,6 +95,51 @@ func newMockCanary() *v1alpha3.Canary {

|

||||

return cd

|

||||

}

|

||||

|

||||

func newMockABTest() *v1alpha3.Canary {

|

||||

cd := &v1alpha3.Canary{

|

||||

TypeMeta: metav1.TypeMeta{APIVersion: v1alpha3.SchemeGroupVersion.String()},

|

||||

ObjectMeta: metav1.ObjectMeta{

|

||||

Namespace: "default",

|

||||

Name: "abtest",

|

||||

},

|

||||

Spec: v1alpha3.CanarySpec{

|

||||

TargetRef: hpav1.CrossVersionObjectReference{

|

||||

Name: "abtest",

|

||||

APIVersion: "apps/v1",

|

||||

Kind: "Deployment",

|

||||

},

|

||||

Service: v1alpha3.CanaryService{

|

||||

Port: 9898,

|

||||

}, CanaryAnalysis: v1alpha3.CanaryAnalysis{

|

||||

Threshold: 10,

|

||||

Iterations: 2,

|

||||

Match: []istiov1alpha3.HTTPMatchRequest{

|

||||

{

|

||||

Headers: map[string]istiov1alpha1.StringMatch{

|

||||

"x-user-type": {

|

||||

Exact: "test",

|

||||

},

|

||||

},

|

||||

},

|

||||

},

|

||||

Metrics: []v1alpha3.CanaryMetric{

|

||||

{

|

||||

Name: "istio_requests_total",

|

||||

Threshold: 99,

|

||||

Interval: "1m",

|

||||

},

|

||||

{

|

||||

Name: "istio_request_duration_seconds_bucket",

|

||||

Threshold: 500,

|

||||

Interval: "1m",

|

||||

},

|

||||

},

|

||||

},

|

||||

},

|

||||

}

|

||||

return cd

|

||||

}

|

||||

|

||||

func newMockDeployment() *appsv1.Deployment {

|

||||

d := &appsv1.Deployment{

|

||||

TypeMeta: metav1.TypeMeta{APIVersion: appsv1.SchemeGroupVersion.String()},

|

||||

@@ -137,3 +184,48 @@ func newMockDeployment() *appsv1.Deployment {

|

||||

|

||||

return d

|

||||

}

|

||||

|

||||

func newMockABTestDeployment() *appsv1.Deployment {

|

||||

d := &appsv1.Deployment{

|

||||

TypeMeta: metav1.TypeMeta{APIVersion: appsv1.SchemeGroupVersion.String()},

|

||||

ObjectMeta: metav1.ObjectMeta{

|

||||

Namespace: "default",

|

||||

Name: "abtest",

|

||||

},

|

||||

Spec: appsv1.DeploymentSpec{

|

||||

Selector: &metav1.LabelSelector{

|

||||

MatchLabels: map[string]string{

|

||||

"app": "abtest",

|

||||

},

|

||||

},

|

||||

Template: corev1.PodTemplateSpec{

|

||||

ObjectMeta: metav1.ObjectMeta{

|

||||

Labels: map[string]string{

|

||||

"app": "abtest",

|

||||

},

|

||||

},

|

||||

Spec: corev1.PodSpec{

|

||||

Containers: []corev1.Container{

|

||||

{

|

||||

Name: "podinfo",

|

||||

Image: "quay.io/stefanprodan/podinfo:1.4.0",

|

||||

Command: []string{

|

||||

"./podinfo",

|

||||

"--port=9898",

|

||||

},

|

||||

Ports: []corev1.ContainerPort{

|

||||

{

|

||||

Name: "http",

|

||||

ContainerPort: 9898,

|

||||

Protocol: corev1.ProtocolTCP,

|

||||

},

|

||||

},

|

||||

},

|

||||

},

|

||||

},

|

||||

},

|

||||

},

|

||||

}

|

||||

|

||||

return d

|

||||

}

|

||||

|

||||

@@ -1,4 +1,4 @@
 package version
 
-var VERSION = "0.8.0"
+var VERSION = "0.9.0"
 var REVISION = "unknown"
