mirror of

https://github.com/fluxcd/flagger.git

synced 2026-02-16 02:49:51 +00:00

Compare commits

128 Commits

| Author | SHA1 | Date | |
|---|---|---|---|
| | 316de42a2c | | |
| | dfb4b35e6c | | |
| | 61ab596d1b | | |
| | 3345692751 | | |
| | dff9287c75 | | |
| | b5fb7cdae5 | | |
| | 2e79817437 | | |
| | 5f439adc36 | | |
| | 45df96ff3c | | |
| | 98ee150364 | | |
| | d328a2146a | | |
| | 4513f2e8be | | |
| | 095fef1de6 | | |
| | 754f02a30f | | |
| | 01a4e7f6a8 | | |
| | 6bba84422d | | |
| | 26190d0c6a | | |
| | 2d9098e43c | | |
| | 7581b396b2 | | |
| | 67a6366906 | | |
| | 5605fab740 | | |
| | b76d0001ed | | |
| | 625eed0840 | | |
| | 37f9151de3 | | |
| | 20af98e4dc | | |
| | 76800d0ed0 | | |
| | 3103bde7f7 | | |
| | 298d8c2d65 | | |
| | 5cdacf81e3 | | |
| | 2141d88ce1 | | |
| | e8a2d4be2e | | |
| | 9a9baadf0e | | |
| | a21e53fa31 | | |
| | 61f8aea7d8 | | |
| | e384b03d49 | | |
| | 0c60cf39f8 | | |
| | 268fa9999f | | |
| | ff7d4e747c | | |
| | 121fc57aa6 | | |
| | 991fa1cfc8 | | |
| | fb2961715d | | |
| | 74c1c2f1ef | | |
| | 4da6c1b6e4 | | |
| | fff03b170f | | |
| | 434acbb71b | | |
| | 01962c32cd | | |
| | 6b0856a054 | | |
| | 708dbd6bbc | | |
| | e3801cbff6 | | |
| | fc68635098 | | |
| | 6706ca5d65 | | |
| | 44c2fd57c5 | | |
| | a9aab3e3ac | | |
| | 6478d0b6cf | | |
| | 958af18dc0 | | |
| | 54b8257c60 | | |
| | e86f62744e | | |
| | 0734773993 | | |
| | 888cc667f1 | | |
| | 053d0da617 | | |
| | 7a4e0bc80c | | |
| | 7b7306584f | | |
| | d6027af632 | | |
| | 761746af21 | | |
| | 510a6eaaed | | |
| | 655df36913 | | |
| | 2e079ba7a1 | | |
| | 9df6bfbb5e | | |
| | 2ff86fa56e | | |
| | 1b2e0481b9 | | |
| | fe96af64e9 | | |
| | 77d8e4e4d3 | | |
| | 800b0475ee | | |
| | b58e13809c | | |
| | 9845578cdd | | |
| | 96ccfa54fb | | |
| | b8a64c79be | | |
| | 4a4c261a88 | | |
| | 8282f86d9c | | |
| | 2b6966d8e3 | | |
| | c667c947ad | | |
| | 105b28bf42 | | |
| | 37a1ff5c99 | | |
| | d19a070faf | | |
| | d908355ab3 | | |
| | a6d86f2e81 | | |
| | 9d856a4f96 | | |
| | a7112fafb0 | | |
| | 93f9e51280 | | |
| | 65e9a402cf | | |
| | f7513b33a6 | | |
| | 0b3fa517d3 | | |
| | 507075920c | | |
| | a212f032a6 | | |
| | eb8755249f | | |
| | 73bb2a9fa2 | | |
| | 5d3ffa8c90 | | |
| | 87f143f5fd | | |
| | f56b6dd6a7 | | |
| | 5e40340f9c | | |
| | 2456737df7 | | |
| | 1191d708de | | |
| | 4d26971fc7 | | |
| | 0421b32834 | | |
| | 360dd63e49 | | |
| | f1670dbe6a | | |
| | e7ad5c0381 | | |
| | 2cfe2a105a | | |
| | bc83cee503 | | |
| | 5091d3573c | | |
| | ffe5dd91c5 | | |
| | d76b560967 | | |
| | f062ef3a57 | | |
| | 5fc1baf4df | | |
| | 777b77b69e | | |
| | 5d221e781a | | |
| | ddab72cd59 | | |
| | 87d0b33327 | | |
| | 225a9015bb | | |
| | c0b60b1497 | | |
| | 0463c19825 | | |
| | 8e70aa90c1 | | |
| | 0a418eb88a | | |
| | 040dbb8d03 | | |
| | 64f2288bdd | | |
| | 8008562a33 | | |
| | a39652724d | | |
| | 691c3c4f36 | | |

@@ -3,7 +3,7 @@ jobs:

|

||||

|

||||

build-binary:

|

||||

docker:

|

||||

- image: circleci/golang:1.12

|

||||

- image: circleci/golang:1.13

|

||||

working_directory: ~/build

|

||||

steps:

|

||||

- checkout

|

||||

@@ -44,7 +44,7 @@ jobs:

|

||||

|

||||

push-container:

|

||||

docker:

|

||||

- image: circleci/golang:1.12

|

||||

- image: circleci/golang:1.13

|

||||

steps:

|

||||

- checkout

|

||||

- setup_remote_docker:

|

||||

@@ -56,7 +56,7 @@ jobs:

|

||||

|

||||

push-binary:

|

||||

docker:

|

||||

- image: circleci/golang:1.12

|

||||

- image: circleci/golang:1.13

|

||||

working_directory: ~/build

|

||||

steps:

|

||||

- checkout

|

||||

@@ -132,6 +132,9 @@ jobs:

|

||||

- run: test/e2e-kind.sh

|

||||

- run: test/e2e-nginx.sh

|

||||

- run: test/e2e-nginx-tests.sh

|

||||

- run: test/e2e-nginx-cleanup.sh

|

||||

- run: test/e2e-nginx-custom-annotations.sh

|

||||

- run: test/e2e-nginx-tests.sh

|

||||

|

||||

e2e-linkerd-testing:

|

||||

machine: true

|

||||

@@ -146,7 +149,7 @@ jobs:

|

||||

|

||||

push-helm-charts:

|

||||

docker:

|

||||

- image: circleci/golang:1.12

|

||||

- image: circleci/golang:1.13

|

||||

steps:

|

||||

- checkout

|

||||

- run:

|

||||

|

||||

84

CHANGELOG.md


@@ -2,6 +2,90 @@

|

||||

|

||||

All notable changes to this project are documented in this file.

|

||||

|

||||

## 0.19.0 (2019-10-08)

|

||||

|

||||

Adds support for canary and blue/green [traffic mirroring](https://docs.flagger.app/usage/progressive-delivery#traffic-mirroring)

|

||||

|

||||

#### Features

|

||||

|

||||

- Add traffic mirroring for Istio service mesh [#311](https://github.com/weaveworks/flagger/pull/311)

|

||||

- Implement canary service target port [#327](https://github.com/weaveworks/flagger/pull/327)

|

||||

|

||||

#### Improvements

|

||||

|

||||

- Allow gRPC protocol for App Mesh [#325](https://github.com/weaveworks/flagger/pull/325)

|

||||

- Enforce blue/green when using Kubernetes networking [#326](https://github.com/weaveworks/flagger/pull/326)

|

||||

|

||||

#### Fixes

|

||||

|

||||

- Fix port discovery diff [#324](https://github.com/weaveworks/flagger/pull/324)

|

||||

- Helm chart: Enable Prometheus scraping of Flagger metrics [#2141d88](https://github.com/weaveworks/flagger/commit/2141d88ce1cc6be220dab34171c215a334ecde24)

|

||||

|

||||

## 0.18.6 (2019-10-03)

|

||||

|

||||

Adds support for App Mesh conformance tests and latency metric checks

|

||||

|

||||

#### Improvements

|

||||

|

||||

- Add support for acceptance testing when using App Mesh [#322](https://github.com/weaveworks/flagger/pull/322)

|

||||

- Add Kustomize installer for App Mesh [#310](https://github.com/weaveworks/flagger/pull/310)

|

||||

- Update Linkerd to v2.5.0 and Prometheus to v2.12.0 [#323](https://github.com/weaveworks/flagger/pull/323)

|

||||

|

||||

#### Fixes

|

||||

|

||||

- Fix slack/teams notification fields mapping [#318](https://github.com/weaveworks/flagger/pull/318)

|

||||

|

||||

## 0.18.5 (2019-10-02)

|

||||

|

||||

Adds support for [confirm-promotion](https://docs.flagger.app/how-it-works#webhooks) webhooks and blue/green deployments when using a service mesh

|

||||

|

||||

#### Features

|

||||

|

||||

- Implement confirm-promotion hook [#307](https://github.com/weaveworks/flagger/pull/307)

|

||||

- Implement B/G for service mesh providers [#305](https://github.com/weaveworks/flagger/pull/305)

|

||||

|

||||

#### Improvements

|

||||

|

||||

- Canary promotion improvements to avoid dropping in-flight requests [#310](https://github.com/weaveworks/flagger/pull/310)

|

||||

- Update end-to-end tests to Kubernetes v1.15.3 and Istio 1.3.0 [#306](https://github.com/weaveworks/flagger/pull/306)

|

||||

|

||||

#### Fixes

|

||||

|

||||

- Skip primary check for App Mesh [#315](https://github.com/weaveworks/flagger/pull/315)

|

||||

|

||||

## 0.18.4 (2019-09-08)

|

||||

|

||||

Adds support for NGINX custom annotations and Helm v3 acceptance testing

|

||||

|

||||

#### Features

|

||||

|

||||

- Add annotations prefix for NGINX ingresses [#293](https://github.com/weaveworks/flagger/pull/293)

|

||||

- Add wide columns in CRD [#289](https://github.com/weaveworks/flagger/pull/289)

|

||||

- loadtester: implement Helm v3 test command [#296](https://github.com/weaveworks/flagger/pull/296)

|

||||

- loadtester: add gRPC health check to load tester image [#295](https://github.com/weaveworks/flagger/pull/295)

|

||||

|

||||

#### Fixes

|

||||

|

||||

- loadtester: fix tests error logging [#286](https://github.com/weaveworks/flagger/pull/286)

|

||||

|

||||

## 0.18.3 (2019-08-22)

|

||||

|

||||

Adds support for tillerless helm tests and protobuf health checking

|

||||

|

||||

#### Features

|

||||

|

||||

- loadtester: add support for tillerless helm [#280](https://github.com/weaveworks/flagger/pull/280)

|

||||

- loadtester: add support for protobuf health checking [#280](https://github.com/weaveworks/flagger/pull/280)

|

||||

|

||||

#### Improvements

|

||||

|

||||

- Set HTTP listeners for AppMesh virtual routers [#272](https://github.com/weaveworks/flagger/pull/272)

|

||||

|

||||

#### Fixes

|

||||

|

||||

- Add missing fields to CRD validation spec [#271](https://github.com/weaveworks/flagger/pull/271)

|

||||

- Fix App Mesh backends validation in CRD [#281](https://github.com/weaveworks/flagger/pull/281)

|

||||

|

||||

## 0.18.2 (2019-08-05)

|

||||

|

||||

Fixes multi-port support for Istio

|

||||

|

||||

@@ -1,4 +1,4 @@

|

||||

FROM alpine:3.9

|

||||

FROM alpine:3.10

|

||||

|

||||

RUN addgroup -S flagger \

|

||||

&& adduser -S -g flagger flagger \

|

||||

|

||||

@@ -9,13 +9,24 @@ WORKDIR /home/app

|

||||

RUN curl -sSLo hey "https://storage.googleapis.com/jblabs/dist/hey_linux_v0.1.2" && \

|

||||

chmod +x hey && mv hey /usr/local/bin/hey

|

||||

|

||||

RUN curl -sSL "https://get.helm.sh/helm-v2.12.3-linux-amd64.tar.gz" | tar xvz && \

|

||||

RUN curl -sSL "https://get.helm.sh/helm-v2.14.3-linux-amd64.tar.gz" | tar xvz && \

|

||||

chmod +x linux-amd64/helm && mv linux-amd64/helm /usr/local/bin/helm && \

|

||||

chmod +x linux-amd64/tiller && mv linux-amd64/tiller /usr/local/bin/tiller && \

|

||||

rm -rf linux-amd64

|

||||

|

||||

RUN curl -sSL "https://get.helm.sh/helm-v3.0.0-beta.3-linux-amd64.tar.gz" | tar xvz && \

|

||||

chmod +x linux-amd64/helm && mv linux-amd64/helm /usr/local/bin/helmv3 && \

|

||||

rm -rf linux-amd64

|

||||

|

||||

RUN GRPC_HEALTH_PROBE_VERSION=v0.3.0 && \

|

||||

wget -qO /usr/local/bin/grpc_health_probe https://github.com/grpc-ecosystem/grpc-health-probe/releases/download/${GRPC_HEALTH_PROBE_VERSION}/grpc_health_probe-linux-amd64 && \

|

||||

chmod +x /usr/local/bin/grpc_health_probe

|

||||

|

||||

RUN curl -sSL "https://github.com/bojand/ghz/releases/download/v0.39.0/ghz_0.39.0_Linux_x86_64.tar.gz" | tar xz -C /tmp && \

|

||||

mv /tmp/ghz /usr/local/bin && chmod +x /usr/local/bin/ghz && rm -rf /tmp/ghz-web

|

||||

|

||||

ADD https://raw.githubusercontent.com/grpc/grpc-proto/master/grpc/health/v1/health.proto /tmp/ghz/health.proto

|

||||

|

||||

RUN ls /tmp

|

||||

|

||||

COPY ./bin/loadtester .

|

||||

@@ -24,4 +35,7 @@ RUN chown -R app:app ./

|

||||

|

||||

USER app

|

||||

|

||||

RUN curl -sSL "https://github.com/rimusz/helm-tiller/archive/v0.8.3.tar.gz" | tar xvz && \

|

||||

helm init --client-only && helm plugin install helm-tiller-0.8.3 && helm plugin list

|

||||

|

||||

ENTRYPOINT ["./loadtester"]

|

||||

|

||||

15

Makefile


@@ -7,15 +7,8 @@ LT_VERSION?=$(shell grep 'VERSION' cmd/loadtester/main.go | awk '{ print $$4 }'

|

||||

TS=$(shell date +%Y-%m-%d_%H-%M-%S)

|

||||

|

||||

run:

|

||||

GO111MODULE=on go run cmd/flagger/* -kubeconfig=$$HOME/.kube/config -log-level=info -mesh-provider=istio -namespace=test \

|

||||

-metrics-server=https://prometheus.istio.weavedx.com \

|

||||

-enable-leader-election=true

|

||||

|

||||

run2:

|

||||

GO111MODULE=on go run cmd/flagger/* -kubeconfig=$$HOME/.kube/config -log-level=info -mesh-provider=istio -namespace=test \

|

||||

-metrics-server=https://prometheus.istio.weavedx.com \

|

||||

-enable-leader-election=true \

|

||||

-port=9092

|

||||

GO111MODULE=on go run cmd/flagger/* -kubeconfig=$$HOME/.kube/config -log-level=info -mesh-provider=istio -namespace=test-istio \

|

||||

-metrics-server=https://prometheus.istio.flagger.dev

|

||||

|

||||

run-appmesh:

|

||||

GO111MODULE=on go run cmd/flagger/* -kubeconfig=$$HOME/.kube/config -log-level=info -mesh-provider=appmesh \

|

||||

@@ -38,8 +31,8 @@ run-nop:

|

||||

-metrics-server=https://prometheus.istio.weavedx.com

|

||||

|

||||

run-linkerd:

|

||||

GO111MODULE=on go run cmd/flagger/* -kubeconfig=$$HOME/.kube/config -log-level=info -mesh-provider=smi:linkerd -namespace=demo \

|

||||

-metrics-server=https://linkerd-prometheus.istio.weavedx.com

|

||||

GO111MODULE=on go run cmd/flagger/* -kubeconfig=$$HOME/.kube/config -log-level=info -mesh-provider=linkerd -namespace=dev \

|

||||

-metrics-server=https://prometheus.linkerd.flagger.dev

|

||||

|

||||

build:

|

||||

GIT_COMMIT=$$(git rev-list -1 HEAD) && GO111MODULE=on CGO_ENABLED=0 GOOS=linux go build -ldflags "-s -w -X github.com/weaveworks/flagger/pkg/version.REVISION=$${GIT_COMMIT}" -a -installsuffix cgo -o ./bin/flagger ./cmd/flagger/*

|

||||

|

||||

39

README.md


@@ -70,7 +70,6 @@ metadata:

|

||||

spec:

|

||||

# service mesh provider (optional)

|

||||

# can be: kubernetes, istio, linkerd, appmesh, nginx, gloo, supergloo

|

||||

# use the kubernetes provider for Blue/Green style deployments

|

||||

provider: istio

|

||||

# deployment reference

|

||||

targetRef:

|

||||

@@ -86,14 +85,12 @@ spec:

|

||||

kind: HorizontalPodAutoscaler

|

||||

name: podinfo

|

||||

service:

|

||||

# container port

|

||||

# ClusterIP port number

|

||||

port: 9898

|

||||

# Istio gateways (optional)

|

||||

gateways:

|

||||

- public-gateway.istio-system.svc.cluster.local

|

||||

# Istio virtual service host names (optional)

|

||||

hosts:

|

||||

- podinfo.example.com

|

||||

# container port name or number (optional)

|

||||

targetPort: 9898

|

||||

# port name can be http or grpc (default http)

|

||||

portName: http

|

||||

# HTTP match conditions (optional)

|

||||

match:

|

||||

- uri:

|

||||
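The `service` block in this hunk describes three port settings: `port` (the ClusterIP port number), an optional `targetPort` (container port name or number), and `portName` (`http` or `grpc`, default `http`). The following is a minimal sketch of how those settings could resolve into a single service port entry. The helper name `service_port` is hypothetical, and the fallback of `targetPort` to `port` when it is omitted is an assumption that the diff does not state.

```python
def service_port(service: dict) -> dict:
    """Resolve a canary `service` block into one service port entry.

    Hypothetical helper for illustration; not taken from the Flagger code base.
    """
    port = service["port"]  # required by the CRD schema in this diff
    port_name = service.get("portName", "http")  # README: default is http
    if port_name not in ("http", "grpc"):
        raise ValueError(f"portName must be http or grpc, got {port_name!r}")
    return {
        "name": port_name,
        "port": port,
        # Assumption: an omitted targetPort falls back to the service port.
        "targetPort": service.get("targetPort", port),
    }


print(service_port({"port": 9898}))
print(service_port({"port": 9898, "targetPort": "http-api", "portName": "grpc"}))
```

Because `targetPort` may be a name or a number, the sketch passes it through unchanged instead of coercing it to an integer.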

@@ -101,10 +98,6 @@ spec:

|

||||

# HTTP rewrite (optional)

|

||||

rewrite:

|

||||

uri: /

|

||||

# cross-origin resource sharing policy (optional)

|

||||

corsPolicy:

|

||||

allowOrigin:

|

||||

- example.com

|

||||

# request timeout (optional)

|

||||

timeout: 5s

|

||||

# promote the canary without analysing it (default false)

|

||||

@@ -144,7 +137,7 @@ spec:

|

||||

topic="podinfo"

|

||||

}[1m]

|

||||

)

|

||||

# external checks (optional)

|

||||

# testing (optional)

|

||||

webhooks:

|

||||

- name: load-test

|

||||

url: http://flagger-loadtester.test/

|

||||

@@ -157,15 +150,17 @@ For more details on how the canary analysis and promotion works please [read the

|

||||

|

||||

## Features

|

||||

|

||||

| Feature | Istio | Linkerd | App Mesh | NGINX | Gloo |
| -------------------------------------------- | ------------------ | ------------------ | ------------------ | ------------------ | ------------------ |
| Canary deployments (weighted traffic) | :heavy_check_mark: | :heavy_check_mark: | :heavy_check_mark: | :heavy_check_mark: | :heavy_check_mark: |
| A/B testing (headers and cookies filters) | :heavy_check_mark: | :heavy_minus_sign: | :heavy_minus_sign: | :heavy_check_mark: | :heavy_minus_sign: |
| Webhooks (acceptance/load testing) | :heavy_check_mark: | :heavy_check_mark: | :heavy_check_mark: | :heavy_check_mark: | :heavy_check_mark: |
| Request success rate check (L7 metric) | :heavy_check_mark: | :heavy_check_mark: | :heavy_check_mark: | :heavy_check_mark: | :heavy_check_mark: |
| Request duration check (L7 metric) | :heavy_check_mark: | :heavy_check_mark: | :heavy_minus_sign: | :heavy_check_mark: | :heavy_check_mark: |
| Custom promql checks | :heavy_check_mark: | :heavy_check_mark: | :heavy_check_mark: | :heavy_check_mark: | :heavy_check_mark: |
| Traffic policy, CORS, retries and timeouts | :heavy_check_mark: | :heavy_minus_sign: | :heavy_minus_sign: | :heavy_minus_sign: | :heavy_minus_sign: |

|

||||

| Feature | Istio | Linkerd | App Mesh | NGINX | Gloo | Kubernetes CNI |
| -------------------------------------------- | ------------------ | ------------------ | ------------------ | ------------------ | ------------------ | ------------------ |
| Canary deployments (weighted traffic) | :heavy_check_mark: | :heavy_check_mark: | :heavy_check_mark: | :heavy_check_mark: | :heavy_check_mark: | :heavy_minus_sign: |
| A/B testing (headers and cookies routing) | :heavy_check_mark: | :heavy_minus_sign: | :heavy_minus_sign: | :heavy_check_mark: | :heavy_minus_sign: | :heavy_minus_sign: |
| Blue/Green deployments (traffic switch) | :heavy_check_mark: | :heavy_check_mark: | :heavy_check_mark: | :heavy_check_mark: | :heavy_check_mark: | :heavy_check_mark: |
| Webhooks (acceptance/load testing) | :heavy_check_mark: | :heavy_check_mark: | :heavy_check_mark: | :heavy_check_mark: | :heavy_check_mark: | :heavy_check_mark: |
| Manual gating (approve/pause/resume) | :heavy_check_mark: | :heavy_check_mark: | :heavy_check_mark: | :heavy_check_mark: | :heavy_check_mark: | :heavy_check_mark: |
| Request success rate check (L7 metric) | :heavy_check_mark: | :heavy_check_mark: | :heavy_check_mark: | :heavy_check_mark: | :heavy_check_mark: | :heavy_minus_sign: |
| Request duration check (L7 metric) | :heavy_check_mark: | :heavy_check_mark: | :heavy_check_mark: | :heavy_check_mark: | :heavy_check_mark: | :heavy_minus_sign: |
| Custom promql checks | :heavy_check_mark: | :heavy_check_mark: | :heavy_check_mark: | :heavy_check_mark: | :heavy_check_mark: | :heavy_check_mark: |
| Traffic policy, CORS, retries and timeouts | :heavy_check_mark: | :heavy_minus_sign: | :heavy_minus_sign: | :heavy_minus_sign: | :heavy_minus_sign: | :heavy_minus_sign: |

|

||||

|

||||

## Roadmap

|

||||

|

||||

|

||||

@@ -20,6 +20,9 @@ spec:

|

||||

service:

|

||||

# container port

|

||||

port: 9898

|

||||

# container port name (optional)

|

||||

# can be http or grpc

|

||||

portName: http

|

||||

# App Mesh reference

|

||||

meshName: global

|

||||

# define the canary analysis timing and KPIs

|

||||

@@ -41,8 +44,20 @@ spec:

|

||||

# percentage (0-100)

|

||||

threshold: 99

|

||||

interval: 1m

|

||||

# external checks (optional)

|

||||

- name: request-duration

|

||||

# maximum req duration P99

|

||||

# milliseconds

|

||||

threshold: 500

|

||||

interval: 30s

|

||||

# testing (optional)

|

||||

webhooks:

|

||||

- name: acceptance-test

|

||||

type: pre-rollout

|

||||

url: http://flagger-loadtester.test/

|

||||

timeout: 30s

|

||||

metadata:

|

||||

type: bash

|

||||

cmd: "curl -sd 'test' http://podinfo-canary.test:9898/token | grep token"

|

||||

- name: load-test

|

||||

url: http://flagger-loadtester.test/

|

||||

timeout: 5s

|

||||

|

||||

@@ -25,7 +25,7 @@ spec:

|

||||

spec:

|

||||

containers:

|

||||

- name: podinfod

|

||||

image: quay.io/stefanprodan/podinfo:1.7.0

|

||||

image: stefanprodan/podinfo:3.1.0

|

||||

imagePullPolicy: IfNotPresent

|

||||

ports:

|

||||

- containerPort: 9898

|

||||

|

||||

@@ -13,7 +13,7 @@ data:

|

||||

- address:

|

||||

socket_address:

|

||||

address: 0.0.0.0

|

||||

port_value: 80

|

||||

port_value: 8080

|

||||

filter_chains:

|

||||

- filters:

|

||||

- name: envoy.http_connection_manager

|

||||

@@ -48,11 +48,15 @@ data:

|

||||

connect_timeout: 0.30s

|

||||

type: strict_dns

|

||||

lb_policy: round_robin

|

||||

http2_protocol_options: {}

|

||||

hosts:

|

||||

- socket_address:

|

||||

address: podinfo.test

|

||||

port_value: 9898

|

||||

load_assignment:

|

||||

cluster_name: podinfo

|

||||

endpoints:

|

||||

- lb_endpoints:

|

||||

- endpoint:

|

||||

address:

|

||||

socket_address:

|

||||

address: podinfo.test

|

||||

port_value: 9898

|

||||

admin:

|

||||

access_log_path: /dev/null

|

||||

address:

|

||||

@@ -91,7 +95,7 @@ spec:

|

||||

terminationGracePeriodSeconds: 30

|

||||

containers:

|

||||

- name: ingress

|

||||

image: "envoyproxy/envoy-alpine:d920944aed67425f91fc203774aebce9609e5d9a"

|

||||

image: "envoyproxy/envoy-alpine:v1.11.1"

|

||||

securityContext:

|

||||

capabilities:

|

||||

drop:

|

||||

@@ -99,25 +103,20 @@ spec:

|

||||

add:

|

||||

- NET_BIND_SERVICE

|

||||

command:

|

||||

- /usr/bin/dumb-init

|

||||

- --

|

||||

args:

|

||||

- /usr/local/bin/envoy

|

||||

- --base-id 30

|

||||

- --v2-config-only

|

||||

args:

|

||||

- -l

|

||||

- $loglevel

|

||||

- -c

|

||||

- /config/envoy.yaml

|

||||

- --base-id

|

||||

- "1234"

|

||||

ports:

|

||||

- name: admin

|

||||

containerPort: 9999

|

||||

protocol: TCP

|

||||

- name: http

|

||||

containerPort: 80

|

||||

protocol: TCP

|

||||

- name: https

|

||||

containerPort: 443

|

||||

containerPort: 8080

|

||||

protocol: TCP

|

||||

livenessProbe:

|

||||

initialDelaySeconds: 5

|

||||

@@ -151,11 +150,7 @@ spec:

|

||||

- protocol: TCP

|

||||

name: http

|

||||

port: 80

|

||||

targetPort: 80

|

||||

- protocol: TCP

|

||||

name: https

|

||||

port: 443

|

||||

targetPort: 443

|

||||

targetPort: http

|

||||

type: LoadBalancer

|

||||

---

|

||||

apiVersion: appmesh.k8s.aws/v1beta1

|

||||

|

||||

@@ -20,12 +20,13 @@ spec:

|

||||

metadata:

|

||||

annotations:

|

||||

prometheus.io/scrape: "true"

|

||||

prometheus.io/port: "9898"

|

||||

labels:

|

||||

app: podinfo

|

||||

spec:

|

||||

containers:

|

||||

- name: podinfod

|

||||

image: quay.io/stefanprodan/podinfo:1.7.0

|

||||

image: stefanprodan/podinfo:3.1.0

|

||||

imagePullPolicy: IfNotPresent

|

||||

ports:

|

||||

- containerPort: 9898

|

||||

|

||||

@@ -33,6 +33,26 @@ spec:

|

||||

- name: Weight

|

||||

type: string

|

||||

JSONPath: .status.canaryWeight

|

||||

- name: FailedChecks

|

||||

type: string

|

||||

JSONPath: .status.failedChecks

|

||||

priority: 1

|

||||

- name: Interval

|

||||

type: string

|

||||

JSONPath: .spec.canaryAnalysis.interval

|

||||

priority: 1

|

||||

- name: Mirror

|

||||

type: boolean

|

||||

JSONPath: .spec.canaryAnalysis.mirror

|

||||

priority: 1

|

||||

- name: StepWeight

|

||||

type: string

|

||||

JSONPath: .spec.canaryAnalysis.stepWeight

|

||||

priority: 1

|

||||

- name: MaxWeight

|

||||

type: string

|

||||

JSONPath: .spec.canaryAnalysis.maxWeight

|

||||

priority: 1

|

||||

- name: LastTransitionTime

|

||||

type: string

|

||||

JSONPath: .status.lastTransitionTime

|

||||

@@ -46,12 +66,15 @@ spec:

|

||||

- canaryAnalysis

|

||||

properties:

|

||||

provider:

|

||||

description: Traffic management provider

|

||||

type: string

|

||||

progressDeadlineSeconds:

|

||||

description: Deployment progress deadline

|

||||

type: number

|

||||

targetRef:

|

||||

description: Deployment selector

|

||||

type: object

|

||||

required: ['apiVersion', 'kind', 'name']

|

||||

required: ["apiVersion", "kind", "name"]

|

||||

properties:

|

||||

apiVersion:

|

||||

type: string

|

||||

@@ -60,10 +83,11 @@ spec:

|

||||

name:

|

||||

type: string

|

||||

autoscalerRef:

|

||||

description: HPA selector

|

||||

anyOf:

|

||||

- type: string

|

||||

- type: object

|

||||

required: ['apiVersion', 'kind', 'name']

|

||||

required: ["apiVersion", "kind", "name"]

|

||||

properties:

|

||||

apiVersion:

|

||||

type: string

|

||||

@@ -72,10 +96,11 @@ spec:

|

||||

name:

|

||||

type: string

|

||||

ingressRef:

|

||||

description: NGINX ingress selector

|

||||

anyOf:

|

||||

- type: string

|

||||

- type: object

|

||||

required: ['apiVersion', 'kind', 'name']

|

||||

required: ["apiVersion", "kind", "name"]

|

||||

properties:

|

||||

apiVersion:

|

||||

type: string

|

||||

@@ -85,18 +110,68 @@ spec:

|

||||

type: string

|

||||

service:

|

||||

type: object

|

||||

required: ['port']

|

||||

required: ["port"]

|

||||

properties:

|

||||

port:

|

||||

description: Container port number

|

||||

type: number

|

||||

portName:

|

||||

description: Container port name

|

||||

type: string

|

||||

targetPort:

|

||||

description: Container target port name

|

||||

anyOf:

|

||||

- type: string

|

||||

- type: number

|

||||

portDiscovery:

|

||||

description: Enable port discovery

|

||||

type: boolean

|

||||

meshName:

|

||||

description: AppMesh mesh name

|

||||

type: string

|

||||

backends:

|

||||

description: AppMesh backend array

|

||||

anyOf:

|

||||

- type: string

|

||||

- type: array

|

||||

timeout:

|

||||

description: Istio HTTP or gRPC request timeout

|

||||

type: string

|

||||

trafficPolicy:

|

||||

description: Istio traffic policy

|

||||

anyOf:

|

||||

- type: string

|

||||

- type: object

|

||||

match:

|

||||

description: Istio URL match conditions

|

||||

anyOf:

|

||||

- type: string

|

||||

- type: array

|

||||

rewrite:

|

||||

description: Istio URL rewrite

|

||||

anyOf:

|

||||

- type: string

|

||||

- type: object

|

||||

headers:

|

||||

description: Istio headers operations

|

||||

anyOf:

|

||||

- type: string

|

||||

- type: object

|

||||

corsPolicy:

|

||||

description: Istio CORS policy

|

||||

anyOf:

|

||||

- type: string

|

||||

- type: object

|

||||

gateways:

|

||||

description: Istio gateways list

|

||||

anyOf:

|

||||

- type: string

|

||||

- type: array

|

||||

hosts:

|

||||

description: Istio hosts list

|

||||

anyOf:

|

||||

- type: string

|

||||

- type: array

|

||||

skipAnalysis:

|

||||

type: boolean

|

||||

canaryAnalysis:

|

||||

@@ -117,13 +192,21 @@ spec:

|

||||

stepWeight:

|

||||

description: Canary incremental traffic percentage step

|

||||

type: number

|

||||

mirror:

|

||||

description: Mirror traffic to canary before shifting

|

||||

type: boolean

|

||||

match:

|

||||

description: A/B testing match conditions

|

||||

anyOf:

|

||||

- type: string

|

||||

- type: array

|

||||

metrics:

|

||||

description: Prometheus query list for this canary

|

||||

type: array

|

||||

properties:

|

||||

items:

|

||||

type: object

|

||||

required: ['name', 'threshold']

|

||||

required: ["name", "threshold"]

|

||||

properties:

|

||||

name:

|

||||

description: Name of the Prometheus metric

|

||||
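The schema above describes `stepWeight` as the canary's incremental traffic percentage step and `mirror` as mirroring traffic to the canary before shifting. As a rough illustration, the Python sketch below lists the phases such an analysis might walk through. It is a simplified model rather than Flagger's controller logic: the function name `weight_schedule` is hypothetical, and treating mirroring as a single zero-weight phase ahead of the weighted steps is an assumption.

```python
def weight_schedule(step_weight: int, max_weight: int, mirror: bool = False) -> list:
    """List the (phase, canary weight) pairs of a simplified canary analysis.

    Hypothetical model for illustration only.
    """
    if step_weight <= 0 or max_weight < step_weight:
        raise ValueError("expected 0 < step_weight <= max_weight")
    # Assumption: mirroring happens once, with no live traffic shifted yet.
    phases = [("mirror", 0)] if mirror else []
    weight = step_weight
    while weight <= max_weight:
        phases.append(("shift", weight))
        weight += step_weight
    return phases


for phase, weight in weight_schedule(step_weight=10, max_weight=50, mirror=True):
    print(f"{phase}: canary weight {weight}%")
```

With `step_weight=10` and `max_weight=50`, the model yields one mirror phase followed by five weighted steps from 10% to 50%.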

@@ -144,7 +227,7 @@ spec:

|

||||

properties:

|

||||

items:

|

||||

type: object

|

||||

required: ['name', 'url', 'timeout']

|

||||

required: ["name", "url"]

|

||||

properties:

|

||||

name:

|

||||

description: Name of the webhook

|

||||

@@ -154,8 +237,10 @@ spec:

|

||||

type: string

|

||||

enum:

|

||||

- ""

|

||||

- confirm-rollout

|

||||

- pre-rollout

|

||||

- rollout

|

||||

- confirm-promotion

|

||||

- post-rollout

|

||||

url:

|

||||

description: URL address of this webhook

|

||||

@@ -165,6 +250,11 @@ spec:

|

||||

description: Request timeout for this webhook

|

||||

type: string

|

||||

pattern: "^[0-9]+(m|s)"

|

||||

metadata:

|

||||

description: Metadata (key-value pairs) for this webhook

|

||||

anyOf:

|

||||

- type: string

|

||||

- type: object

|

||||

status:

|

||||

properties:

|

||||

phase:

|

||||
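Taken together, the webhook hunks above change three validation rules: `timeout` is no longer required, `type` is limited to a fixed enum that now includes `confirm-promotion`, and `timeout` must match `^[0-9]+(m|s)`. The Python sketch below mirrors those rules for a single webhook entry. The function name `validate_webhook` is hypothetical, and the check is an approximation of the schema, not the Kubernetes API server's validator.

```python
import re

# Values copied from the CRD validation schema shown in this diff.
REQUIRED_FIELDS = ("name", "url")
WEBHOOK_TYPES = {"", "confirm-rollout", "pre-rollout", "rollout",
                 "confirm-promotion", "post-rollout"}
TIMEOUT_PATTERN = re.compile(r"^[0-9]+(m|s)")


def validate_webhook(webhook: dict) -> list:
    """Return a list of problems; an empty list means these checks pass."""
    problems = []
    for field in REQUIRED_FIELDS:
        if field not in webhook:
            problems.append(f"missing required field: {field}")
    hook_type = webhook.get("type", "")
    if hook_type not in WEBHOOK_TYPES:
        problems.append(f"unknown type: {hook_type}")
    timeout = webhook.get("timeout")
    # The pattern is anchored only at the start, so trailing text is accepted.
    if timeout is not None and not TIMEOUT_PATTERN.search(timeout):
        problems.append(f"bad timeout: {timeout}")
    return problems


print(validate_webhook({"name": "load-test",
                        "url": "http://flagger-loadtester.test/",
                        "timeout": "5s"}))
print(validate_webhook({"name": "gate", "type": "confirm-promotion"}))
```

Because the pattern has no end anchor, a value such as `30sec` passes this check while `1h` does not.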

@@ -176,6 +266,7 @@ spec:

|

||||

- Initialized

|

||||

- Waiting

|

||||

- Progressing

|

||||

- Promoting

|

||||

- Finalising

|

||||

- Succeeded

|

||||

- Failed

|

||||

@@ -201,7 +292,7 @@ spec:

|

||||

properties:

|

||||

items:

|

||||

type: object

|

||||

required: ['type', 'status', 'reason']

|

||||

required: ["type", "status", "reason"]

|

||||

properties:

|

||||

lastTransitionTime:

|

||||

description: LastTransitionTime of this condition

|

||||

|

||||

@@ -22,7 +22,7 @@ spec:

|

||||

serviceAccountName: flagger

|

||||

containers:

|

||||

- name: flagger

|

||||

image: weaveworks/flagger:0.18.2

|

||||

image: weaveworks/flagger:0.19.0

|

||||

imagePullPolicy: IfNotPresent

|

||||

ports:

|

||||

- name: http

|

||||

|

||||

@@ -19,7 +19,7 @@ spec:

|

||||

serviceAccountName: tiller

|

||||

containers:

|

||||

- name: helmtester

|

||||

image: weaveworks/flagger-loadtester:0.4.0

|

||||

image: weaveworks/flagger-loadtester:0.8.0

|

||||

imagePullPolicy: IfNotPresent

|

||||

ports:

|

||||

- name: http

|

||||

|

||||

@@ -17,7 +17,7 @@ spec:

|

||||

spec:

|

||||

containers:

|

||||

- name: loadtester

|

||||

image: weaveworks/flagger-loadtester:0.6.1

|

||||

image: weaveworks/flagger-loadtester:0.9.0

|

||||

imagePullPolicy: IfNotPresent

|

||||

ports:

|

||||

- name: http

|

||||

|

||||

@@ -1,7 +1,7 @@

|

||||

apiVersion: v1

|

||||

name: flagger

|

||||

version: 0.18.2

|

||||

appVersion: 0.18.2

|

||||

version: 0.19.0

|

||||

appVersion: 0.19.0

|

||||

kubeVersion: ">=1.11.0-0"

|

||||

engine: gotpl

|

||||

description: Flagger is a Kubernetes operator that automates the promotion of canary deployments using Istio, Linkerd, App Mesh, Gloo or NGINX routing for traffic shifting and Prometheus metrics for canary analysis.

|

||||

|

||||

@@ -74,6 +74,7 @@ Parameter | Description | Default

|

||||

`msteams.url` | Microsoft Teams incoming webhook | None

|

||||

`leaderElection.enabled` | leader election must be enabled when running more than one replica | `false`

|

||||

`leaderElection.replicaCount` | number of replicas | `1`

|

||||

`ingressAnnotationsPrefix` | annotations prefix for ingresses | `custom.ingress.kubernetes.io`

|

||||

`rbac.create` | if `true`, create and use RBAC resources | `true`

|

||||

`rbac.pspEnabled` | If `true`, create and use a restricted pod security policy | `false`

|

||||

`crd.create` | if `true`, create Flagger's CRDs | `true`

|

||||

|

||||

@@ -34,6 +34,26 @@ spec:

|

||||

- name: Weight

|

||||

type: string

|

||||

JSONPath: .status.canaryWeight

|

||||

- name: FailedChecks

|

||||

type: string

|

||||

JSONPath: .status.failedChecks

|

||||

priority: 1

|

||||

- name: Interval

|

||||

type: string

|

||||

JSONPath: .spec.canaryAnalysis.interval

|

||||

priority: 1

|

||||

- name: Mirror

|

||||

type: boolean

|

||||

JSONPath: .spec.canaryAnalysis.mirror

|

||||

priority: 1

|

||||

- name: StepWeight

|

||||

type: string

|

||||

JSONPath: .spec.canaryAnalysis.stepWeight

|

||||

priority: 1

|

||||

- name: MaxWeight

|

||||

type: string

|

||||

JSONPath: .spec.canaryAnalysis.maxWeight

|

||||

priority: 1

|

||||

- name: LastTransitionTime

|

||||

type: string

|

||||

JSONPath: .status.lastTransitionTime

|

||||

@@ -47,10 +67,13 @@ spec:

|

||||

- canaryAnalysis

|

||||

properties:

|

||||

provider:

|

||||

description: Traffic management provider

|

||||

type: string

|

||||

progressDeadlineSeconds:

|

||||

description: Deployment progress deadline

|

||||

type: number

|

||||

targetRef:

|

||||

description: Deployment selector

|

||||

type: object

|

||||

required: ['apiVersion', 'kind', 'name']

|

||||

properties:

|

||||

@@ -61,6 +84,7 @@ spec:

|

||||

name:

|

||||

type: string

|

||||

autoscalerRef:

|

||||

description: HPA selector

|

||||

anyOf:

|

||||

- type: string

|

||||

- type: object

|

||||

@@ -73,6 +97,7 @@ spec:

|

||||

name:

|

||||

type: string

|

||||

ingressRef:

|

||||

description: NGINX ingress selector

|

||||

anyOf:

|

||||

- type: string

|

||||

- type: object

|

||||

@@ -89,15 +114,65 @@ spec:

|

||||

required: ['port']

|

||||

properties:

|

||||

port:

|

||||

description: Container port number

|

||||

type: number

|

||||

portName:

|

||||

description: Container port name

|

||||

type: string

|

||||

targetPort:

|

||||

description: Container target port name

|

||||

anyOf:

|

||||

- type: string

|

||||

- type: number

|

||||

portDiscovery:

|

||||

description: Enable port dicovery

|

||||

type: boolean

|

||||

meshName:

|

||||

description: AppMesh mesh name

|

||||

type: string

|

||||

backends:

|

||||

description: AppMesh backend array

|

||||

anyOf:

|

||||

- type: string

|

||||

- type: array

|

||||

timeout:

|

||||

description: Istio HTTP or gRPC request timeout

|

||||

type: string

|

||||

trafficPolicy:

|

||||

description: Istio traffic policy

|

||||

anyOf:

|

||||

- type: string

|

||||

- type: object

|

||||

match:

|

||||

description: Istio URL match conditions

|

||||

anyOf:

|

||||

- type: string

|

||||

- type: array

|

||||

rewrite:

|

||||

description: Istio URL rewrite

|

||||

anyOf:

|

||||

- type: string

|

||||

- type: object

|

||||

headers:

|

||||

description: Istio headers operations

|

||||

anyOf:

|

||||

- type: string

|

||||

- type: object

|

||||

corsPolicy:

|

||||

description: Istio CORS policy

|

||||

anyOf:

|

||||

- type: string

|

||||

- type: object

|

||||

gateways:

|

||||

description: Istio gateways list

|

||||

anyOf:

|

||||

- type: string

|

||||

- type: array

|

||||

hosts:

|

||||

description: Istio hosts list

|

||||

anyOf:

|

||||

- type: string

|

||||

- type: array

|

||||

skipAnalysis:

|

||||

type: boolean

|

||||

canaryAnalysis:

|

||||

@@ -118,6 +193,14 @@ spec:

|

||||

stepWeight:

|

||||

description: Canary incremental traffic percentage step

|

||||

type: number

|

||||

mirror:

|

||||

description: Mirror traffic to canary before shifting

|

||||

type: boolean

|

||||

match:

|

||||

description: A/B testing match conditions

|

||||

anyOf:

|

||||

- type: string

|

||||

- type: array

|

||||

metrics:

|

||||

description: Prometheus query list for this canary

|

||||

type: array

|

||||

@@ -145,7 +228,7 @@ spec:

|

||||

properties:

|

||||

items:

|

||||

type: object

|

||||

required: ['name', 'url', 'timeout']

|

||||

required: ["name", "url"]

|

||||

properties:

|

||||

name:

|

||||

description: Name of the webhook

|

||||

@@ -155,8 +238,10 @@ spec:

|

||||

type: string

|

||||

enum:

|

||||

- ""

|

||||

- confirm-rollout

|

||||

- pre-rollout

|

||||

- rollout

|

||||

- confirm-promotion

|

||||

- post-rollout

|

||||

url:

|

||||

description: URL address of this webhook

|

||||

@@ -166,6 +251,11 @@ spec:

|

||||

description: Request timeout for this webhook

|

||||

type: string

|

||||

pattern: "^[0-9]+(m|s)"

|

||||

metadata:

|

||||

description: Metadata (key-value pairs) for this webhook

|

||||

anyOf:

|

||||

- type: string

|

||||

- type: object

|

||||

status:

|

||||

properties:

|

||||

phase:

|

||||

@@ -177,6 +267,7 @@ spec:

|

||||

- Initialized

|

||||

- Waiting

|

||||

- Progressing

|

||||

- Promoting

|

||||

- Finalising

|

||||

- Succeeded

|

||||

- Failed

|

||||

|

||||
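The CRD hunks above make `timeout` optional for webhooks, add the `confirm-rollout` and `confirm-promotion` types, and accept a `metadata` map. A minimal sketch of a Canary fragment that would fit this schema follows. It assumes the webhook list sits under `spec.canaryAnalysis.webhooks`, and the webhook names, URLs and the `cmd` key are hypothetical placeholders, not values taken from this diff.

```yaml
# Sketch only: names, URLs and metadata keys below are illustrative assumptions.
apiVersion: flagger.app/v1alpha3
kind: Canary
spec:
  canaryAnalysis:
    webhooks:
      # gate webhook: "timeout" is omitted, which the relaxed
      # required list (name, url) now permits
      - name: approve-rollout                  # hypothetical name
        type: confirm-rollout
        url: http://gate.example/check         # hypothetical URL
      # rollout webhook with an explicit timeout matching ^[0-9]+(m|s)
      - name: load-test                        # hypothetical name
        type: rollout
        url: http://loadtester.example/        # hypothetical URL
        timeout: 5s
        metadata:
          cmd: "example command"               # hypothetical key/value pair
```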

@@ -20,6 +20,10 @@ spec:

|

||||

labels:

|

||||

app.kubernetes.io/name: {{ template "flagger.name" . }}

|

||||

app.kubernetes.io/instance: {{ .Release.Name }}

|

||||

annotations:

|

||||

{{- if .Values.podAnnotations }}

|

||||

{{ toYaml .Values.podAnnotations | indent 8 }}

|

||||

{{- end }}

|

||||

spec:

|

||||

serviceAccountName: {{ template "flagger.serviceAccountName" . }}

|

||||

affinity:

|

||||

@@ -72,6 +76,9 @@ spec:

|

||||

- -enable-leader-election=true

|

||||

- -leader-election-namespace={{ .Release.Namespace }}

|

||||

{{- end }}

|

||||

{{- if .Values.ingressAnnotationsPrefix }}

|

||||

- -ingress-annotations-prefix={{ .Values.ingressAnnotationsPrefix }}

|

||||

{{- end }}

|

||||

livenessProbe:

|

||||

exec:

|

||||

command:

|

||||

|

||||
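The template hunk above only passes `-ingress-annotations-prefix` to Flagger when `ingressAnnotationsPrefix` is set in the chart values. A hedged sketch of a values override is shown below; the prefix string is a hypothetical example, and leaving the key unset should keep the flag default of `nginx.ingress.kubernetes.io` defined in the Go flag declaration later in this diff.

```yaml
# values override for the flagger chart (sketch; the prefix is a made-up example)
# renders as: -ingress-annotations-prefix=custom.ingress.kubernetes.io
ingressAnnotationsPrefix: "custom.ingress.kubernetes.io"
```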

@@ -238,7 +238,7 @@ spec:

|

||||

serviceAccountName: {{ template "flagger.serviceAccountName" . }}-prometheus

|

||||

containers:

|

||||

- name: prometheus

|

||||

image: "docker.io/prom/prometheus:v2.10.0"

|

||||

image: "docker.io/prom/prometheus:v2.12.0"

|

||||

imagePullPolicy: IfNotPresent

|

||||

args:

|

||||

- '--storage.tsdb.retention=2h'

|

||||

|

||||

@@ -2,10 +2,14 @@

|

||||

|

||||

image:

|

||||

repository: weaveworks/flagger

|

||||

tag: 0.18.2

|

||||

tag: 0.19.0

|

||||

pullPolicy: IfNotPresent

|

||||

pullSecret:

|

||||

|

||||

podAnnotations:

|

||||

prometheus.io/scrape: "true"

|

||||

prometheus.io/port: "8080"

|

||||

|

||||

metricsServer: "http://prometheus:9090"

|

||||

|

||||

# accepted values are istio, appmesh, nginx or supergloo:mesh.namespace (defaults to istio)

|

||||

|

||||

@@ -20,6 +20,9 @@ spec:

|

||||

release: {{ .Release.Name }}

|

||||

annotations:

|

||||

prometheus.io/scrape: 'false'

|

||||

{{- if .Values.podAnnotations }}

|

||||

{{ toYaml .Values.podAnnotations | indent 8 }}

|

||||

{{- end }}

|

||||

spec:

|

||||

containers:

|

||||

- name: {{ .Chart.Name }}

|

||||

|

||||

@@ -9,6 +9,8 @@ image:

|

||||

tag: 6.2.5

|

||||

pullPolicy: IfNotPresent

|

||||

|

||||

podAnnotations: {}

|

||||

|

||||

service:

|

||||

type: ClusterIP

|

||||

port: 80

|

||||

|

||||

@@ -1,7 +1,7 @@

|

||||

apiVersion: v1

|

||||

name: loadtester

|

||||

version: 0.6.0

|

||||

appVersion: 0.6.1

|

||||

version: 0.9.0

|

||||

appVersion: 0.9.0

|

||||

kubeVersion: ">=1.11.0-0"

|

||||

engine: gotpl

|

||||

description: Flagger's load testing services based on rakyll/hey and bojand/ghz that generates traffic during canary analysis when configured as a webhook.

|

||||

|

||||

@@ -18,9 +18,14 @@ spec:

|

||||

app: {{ include "loadtester.name" . }}

|

||||

annotations:

|

||||

appmesh.k8s.aws/ports: "444"

|

||||

{{- if .Values.podAnnotations }}

|

||||

{{ toYaml .Values.podAnnotations | indent 8 }}

|

||||

{{- end }}

|

||||

spec:

|

||||

{{- if .Values.serviceAccountName }}

|

||||

serviceAccountName: {{ .Values.serviceAccountName }}

|

||||

{{- else if .Values.rbac.create }}

|

||||

serviceAccountName: {{ include "loadtester.fullname" . }}

|

||||

{{- end }}

|

||||

containers:

|

||||

- name: {{ .Chart.Name }}

|

||||

|

||||

54

charts/loadtester/templates/rbac.yaml

Normal file


@@ -0,0 +1,54 @@

|

||||

---

|

||||

{{- if .Values.rbac.create }}

|

||||

apiVersion: rbac.authorization.k8s.io/v1

|

||||

{{- if eq .Values.rbac.scope "cluster" }}

|

||||

kind: ClusterRole

|

||||

{{- else }}

|

||||

kind: Role

|

||||

{{- end }}

|

||||

metadata:

|

||||

name: {{ template "loadtester.fullname" . }}

|

||||

labels:

|

||||

helm.sh/chart: {{ template "loadtester.chart" . }}

|

||||

app.kubernetes.io/name: {{ template "loadtester.name" . }}

|

||||

app.kubernetes.io/managed-by: {{ .Release.Service }}

|

||||

app.kubernetes.io/instance: {{ .Release.Name }}

|

||||

rules:

|

||||

{{ toYaml .Values.rbac.rules | indent 2 }}

|

||||

---

|

||||

apiVersion: rbac.authorization.k8s.io/v1

|

||||

{{- if eq .Values.rbac.scope "cluster" }}

|

||||

kind: ClusterRoleBinding

|

||||

{{- else }}

|

||||

kind: RoleBinding

|

||||

{{- end }}

|

||||

metadata:

|

||||

name: {{ template "loadtester.fullname" . }}

|

||||

labels:

|

||||

helm.sh/chart: {{ template "loadtester.chart" . }}

|

||||

app.kubernetes.io/name: {{ template "loadtester.name" . }}

|

||||

app.kubernetes.io/managed-by: {{ .Release.Service }}

|

||||

app.kubernetes.io/instance: {{ .Release.Name }}

|

||||

roleRef:

|

||||

apiGroup: rbac.authorization.k8s.io

|

||||

{{- if eq .Values.rbac.scope "cluster" }}

|

||||

kind: ClusterRole

|

||||

{{- else }}

|

||||

kind: Role

|

||||

{{- end }}

|

||||

name: {{ template "loadtester.fullname" . }}

|

||||

subjects:

|

||||

- kind: ServiceAccount

|

||||

name: {{ template "loadtester.fullname" . }}

|

||||

namespace: {{ .Release.Namespace }}

|

||||

---

|

||||

apiVersion: v1

|

||||

kind: ServiceAccount

|

||||

metadata:

|

||||

name: {{ template "loadtester.fullname" . }}

|

||||

labels:

|

||||

helm.sh/chart: {{ template "loadtester.chart" . }}

|

||||

app.kubernetes.io/name: {{ template "loadtester.name" . }}

|

||||

app.kubernetes.io/managed-by: {{ .Release.Service }}

|

||||

app.kubernetes.io/instance: {{ .Release.Name }}

|

||||

{{- end }}

|

||||

@@ -2,9 +2,13 @@ replicaCount: 1

|

||||

|

||||

image:

|

||||

repository: weaveworks/flagger-loadtester

|

||||

tag: 0.6.1

|

||||

tag: 0.9.0

|

||||

pullPolicy: IfNotPresent

|

||||

|

||||

podAnnotations:

|

||||

prometheus.io/scrape: "true"

|

||||

prometheus.io/port: "8080"

|

||||

|

||||

logLevel: info

|

||||

cmd:

|

||||

timeout: 1h

|

||||

@@ -27,6 +31,20 @@ tolerations: []

|

||||

|

||||

affinity: {}

|

||||

|

||||

rbac:

|

||||

# rbac.create: `true` if rbac resources should be created

|

||||

create: false

|

||||

# rbac.scope: `cluster` to create cluster-scope rbac resources (ClusterRole/ClusterRoleBinding)

|

||||

# otherwise, namespace-scope rbac resources will be created (Role/RoleBinding)

|

||||

scope:

|

||||

# rbac.rules: array of rules to apply to the role. example:

|

||||

# rules:

|

||||

# - apiGroups: [""]

|

||||

# resources: ["pods"]

|

||||

# verbs: ["list", "get"]

|

||||

rules: []

|

||||

|

||||

# name of an existing service account to use - if not creating rbac resources

|

||||

serviceAccountName: ""

|

||||

|

||||

# App Mesh virtual node settings

|

||||

|

||||
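As a rough illustration of the new `rbac` block in the loadtester values, the override below would make the chart render a ClusterRole, a ClusterRoleBinding and a ServiceAccount named after the release. The rule shown is the one from the inline comment above; the choice of `cluster` scope is just an assumption for the example.

```yaml
# values override for the loadtester chart (sketch)
rbac:
  # create the Role/ClusterRole, binding and service account
  create: true
  # "cluster" selects ClusterRole/ClusterRoleBinding;
  # any other value falls back to Role/RoleBinding
  scope: cluster
  # rules are copied verbatim into the role via toYaml
  rules:
    - apiGroups: [""]
      resources: ["pods"]
      verbs: ["list", "get"]

# leave empty so the chart uses the service account it creates
serviceAccountName: ""
```

If `serviceAccountName` is set to a non-empty value, the deployment template uses that account instead of the generated one, as the `if`/`else if` in the deployment hunk shows.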

@@ -1,10 +1,10 @@

|

||||

apiVersion: v1

|

||||

version: 2.3.0

|

||||

appVersion: 1.7.0

|

||||

version: 3.1.0

|

||||

appVersion: 3.1.0

|

||||

name: podinfo

|

||||

engine: gotpl

|

||||

description: Flagger canary deployment demo chart

|

||||

home: https://github.com/weaveworks/flagger

|

||||

home: https://flagger.app

|

||||

maintainers:

|

||||

- email: stefanprodan@users.noreply.github.com

|

||||

name: stefanprodan

|

||||

|

||||

@@ -21,10 +21,13 @@ spec:

|

||||

app: {{ template "podinfo.fullname" . }}

|

||||

annotations:

|

||||

prometheus.io/scrape: 'true'

|

||||

{{- if .Values.podAnnotations }}

|

||||

{{ toYaml .Values.podAnnotations | indent 8 }}

|

||||

{{- end }}

|

||||

spec:

|

||||

terminationGracePeriodSeconds: 30

|

||||

containers:

|

||||

- name: {{ .Chart.Name }}

|

||||

- name: podinfo

|

||||

image: "{{ .Values.image.repository }}:{{ .Values.image.tag }}"

|

||||

imagePullPolicy: {{ .Values.image.pullPolicy }}

|

||||

command:

|

||||

@@ -34,6 +37,9 @@ spec:

|

||||

- --random-delay={{ .Values.faults.delay }}

|

||||

- --random-error={{ .Values.faults.error }}

|

||||

- --config-path=/podinfo/config

|

||||

{{- range .Values.backends }}

|

||||

- --backend-url={{ . }}

|

||||

{{- end }}

|

||||

env:

|

||||

{{- if .Values.message }}

|

||||

- name: PODINFO_UI_MESSAGE

|

||||

|

||||

29

charts/podinfo/templates/tests/jwt.yaml

Normal file


@@ -0,0 +1,29 @@

|

||||

apiVersion: v1

|

||||

kind: Pod

|

||||

metadata:

|

||||

name: {{ template "podinfo.fullname" . }}-jwt-test-{{ randAlphaNum 5 | lower }}

|

||||

labels:

|

||||

heritage: {{ .Release.Service }}

|

||||

release: {{ .Release.Name }}

|

||||

chart: {{ .Chart.Name }}-{{ .Chart.Version }}

|

||||

app: {{ template "podinfo.name" . }}

|

||||

annotations:

|

||||

"helm.sh/hook": test-success

|

||||

sidecar.istio.io/inject: "false"

|

||||

linkerd.io/inject: disabled

|

||||

appmesh.k8s.aws/sidecarInjectorWebhook: disabled

|

||||

spec:

|

||||

containers:

|

||||

- name: tools

|

||||

image: giantswarm/tiny-tools

|

||||

command:

|

||||

- sh

|

||||

- -c

|

||||

- |

|

||||

TOKEN=$(curl -sd 'test' ${PODINFO_SVC}/token | jq -r .token) &&

|

||||

curl -H "Authorization: Bearer ${TOKEN}" ${PODINFO_SVC}/token/validate | grep test

|

||||

env:

|

||||

- name: PODINFO_SVC

|

||||

value: {{ template "podinfo.fullname" . }}:{{ .Values.service.port }}

|

||||

restartPolicy: Never

|

||||

|

||||

@@ -1,22 +0,0 @@

|

||||

{{- $url := printf "%s%s.%s:%v" (include "podinfo.fullname" .) (include "podinfo.suffix" .) .Release.Namespace .Values.service.port -}}

|

||||

apiVersion: v1

|

||||

kind: ConfigMap

|

||||

metadata:

|

||||

name: {{ template "podinfo.fullname" . }}-tests

|

||||

labels:

|

||||

heritage: {{ .Release.Service }}

|

||||

release: {{ .Release.Name }}

|

||||

chart: {{ .Chart.Name }}-{{ .Chart.Version }}

|

||||

app: {{ template "podinfo.name" . }}

|

||||

data:

|

||||

run.sh: |-

|

||||

@test "HTTP POST /echo" {

|

||||

run curl --retry 3 --connect-timeout 2 -sSX POST -d 'test' {{ $url }}/echo

|

||||

[ $output = "test" ]

|

||||

}

|

||||

@test "HTTP POST /store" {

|

||||

curl --retry 3 --connect-timeout 2 -sSX POST -d 'test' {{ $url }}/store

|

||||

}

|

||||

@test "HTTP GET /" {

|

||||

curl --retry 3 --connect-timeout 2 -sS {{ $url }} | grep hostname

|

||||

}

|

||||

@@ -1,43 +0,0 @@

|

||||

apiVersion: v1

|

||||

kind: Pod

|

||||

metadata:

|

||||

name: {{ template "podinfo.fullname" . }}-tests-{{ randAlphaNum 5 | lower }}

|

||||

annotations:

|

||||

"helm.sh/hook": test-success

|

||||

sidecar.istio.io/inject: "false"

|

||||

labels:

|

||||

heritage: {{ .Release.Service }}

|

||||

release: {{ .Release.Name }}

|

||||

chart: {{ .Chart.Name }}-{{ .Chart.Version }}

|

||||

app: {{ template "podinfo.name" . }}

|

||||

spec:

|

||||

initContainers:

|

||||

- name: "test-framework"

|

||||

image: "dduportal/bats:0.4.0"

|

||||

command:

|

||||

- "bash"

|

||||

- "-c"

|

||||

- |

|

||||

set -ex

|

||||

# copy bats to tools dir

|

||||

cp -R /usr/local/libexec/ /tools/bats/

|

||||

volumeMounts:

|

||||

- mountPath: /tools

|

||||

name: tools

|

||||

containers:

|

||||

- name: {{ .Release.Name }}-ui-test

|

||||

image: dduportal/bats:0.4.0

|

||||

command: ["/tools/bats/bats", "-t", "/tests/run.sh"]

|

||||

volumeMounts:

|

||||

- mountPath: /tests

|

||||

name: tests

|

||||

readOnly: true

|

||||

- mountPath: /tools

|

||||

name: tools

|

||||

volumes:

|

||||

- name: tests

|

||||

configMap:

|

||||

name: {{ template "podinfo.fullname" . }}-tests

|

||||

- name: tools

|

||||

emptyDir: {}

|

||||

restartPolicy: Never

|

||||

@@ -1,22 +1,25 @@

|

||||

# Default values for podinfo.

|

||||

image:

|

||||

repository: quay.io/stefanprodan/podinfo

|

||||

tag: 1.7.0

|

||||

repository: stefanprodan/podinfo

|

||||

tag: 3.1.0

|

||||

pullPolicy: IfNotPresent

|

||||

|

||||

podAnnotations: {}

|

||||

|

||||

service:

|

||||

enabled: false

|

||||

type: ClusterIP

|

||||

port: 9898

|

||||

|

||||

hpa:

|

||||

enabled: true

|

||||

minReplicas: 2

|

||||

maxReplicas: 2

|

||||

maxReplicas: 4

|

||||

cpu: 80

|

||||

memory: 512Mi

|

||||

|

||||

canary:

|

||||

enabled: true

|

||||

enabled: false

|

||||

# Istio traffic policy tls can be DISABLE or ISTIO_MUTUAL

|

||||

istioTLS: DISABLE

|

||||

istioIngress:

|

||||

@@ -69,6 +72,7 @@ fullnameOverride: ""

|

||||

|

||||

logLevel: info

|

||||

backend: #http://backend-podinfo:9898/echo

|

||||

backends: []

|

||||

message: #UI greetings

|

||||

|

||||

faults:

|

||||

|

||||
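The new `backends` list is expanded by the deployment template into one `--backend-url` argument per entry. A small sketch of how the value might be set is given below; the first URL comes from the commented `backend` example above, while the second is a hypothetical placeholder.

```yaml
# values override for the podinfo chart (sketch)
backends:
  - http://backend-podinfo:9898/echo
  - http://backend-other.example:9898/echo   # hypothetical second backend
# expected container args:
#   --backend-url=http://backend-podinfo:9898/echo
#   --backend-url=http://backend-other.example:9898/echo
```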

@@ -33,25 +33,26 @@ import (

|

||||

)

|

||||

|

||||

var (

|

||||

masterURL string

|

||||

kubeconfig string

|

||||

metricsServer string

|

||||

controlLoopInterval time.Duration

|

||||

logLevel string

|

||||

port string

|

||||

msteamsURL string

|

||||

slackURL string

|

||||

slackUser string

|

||||

slackChannel string

|

||||

threadiness int

|

||||

zapReplaceGlobals bool

|

||||

zapEncoding string

|

||||

namespace string

|

||||

meshProvider string

|

||||

selectorLabels string

|

||||

enableLeaderElection bool

|

||||

leaderElectionNamespace string

|

||||

ver bool

|

||||

masterURL string

|

||||

kubeconfig string

|

||||

metricsServer string

|

||||

controlLoopInterval time.Duration

|

||||

logLevel string

|

||||

port string

|

||||

msteamsURL string

|

||||

slackURL string

|

||||

slackUser string

|

||||

slackChannel string

|

||||

threadiness int

|

||||

zapReplaceGlobals bool

|

||||

zapEncoding string

|

||||

namespace string

|

||||

meshProvider string

|

||||

selectorLabels string

|

||||

ingressAnnotationsPrefix string

|

||||

enableLeaderElection bool

|

||||

leaderElectionNamespace string

|

||||

ver bool

|

||||

)

|

||||

|

||||

func init() {

|

||||

@@ -71,6 +72,7 @@ func init() {

|

||||

flag.StringVar(&namespace, "namespace", "", "Namespace that flagger would watch canary object.")

|

||||

flag.StringVar(&meshProvider, "mesh-provider", "istio", "Service mesh provider, can be istio, linkerd, appmesh, supergloo, nginx or smi.")

|

||||

flag.StringVar(&selectorLabels, "selector-labels", "app,name,app.kubernetes.io/name", "List of pod labels that Flagger uses to create pod selectors.")

|

||||

flag.StringVar(&ingressAnnotationsPrefix, "ingress-annotations-prefix", "nginx.ingress.kubernetes.io", "Annotations prefix for ingresses.")

|

||||

flag.BoolVar(&enableLeaderElection, "enable-leader-election", false, "Enable leader election.")

|

||||

flag.StringVar(&leaderElectionNamespace, "leader-election-namespace", "kube-system", "Namespace used to create the leader election config map.")

|

||||

flag.BoolVar(&ver, "version", false, "Print version")

|

||||

@@ -175,7 +177,7 @@ func main() {

|

||||

// start HTTP server

|

||||

go server.ListenAndServe(port, 3*time.Second, logger, stopCh)

|

||||

|

||||

routerFactory := router.NewFactory(cfg, kubeClient, flaggerClient, logger, meshClient)

|

||||

routerFactory := router.NewFactory(cfg, kubeClient, flaggerClient, ingressAnnotationsPrefix, logger, meshClient)

|

||||

|

||||

c := controller.NewController(

|

||||

kubeClient,

|

||||

|

||||

@@ -10,7 +10,7 @@ import (

|

||||

"time"

|

||||

)

|

||||

|

||||

var VERSION = "0.6.1"

|

||||

var VERSION = "0.9.0"

|

||||

var (

|

||||

logLevel string

|

||||

port string

|

||||

|

||||

BIN

docs/diagrams/flagger-canary-traffic-mirroring.png

Normal file


Binary file not shown. Size: 39 KiB

@@ -7,10 +7,12 @@

|

||||

Flagger can run automated application analysis, promotion and rollback for the following deployment strategies:

|

||||

* Canary (progressive traffic shifting)

|

||||

* Istio, Linkerd, App Mesh, NGINX, Gloo

|

||||

* Canary (traffic mirroring)

|

||||

* Istio

|

||||

* A/B Testing (HTTP headers and cookies traffic routing)

|

||||

* Istio, NGINX

|

||||

* Blue/Green (traffic switch)

|

||||

* Kubernetes CNI

|

||||

* Kubernetes CNI, Istio, Linkerd, App Mesh, NGINX, Gloo

|

||||

|

||||

For Canary deployments and A/B testing you'll need a Layer 7 traffic management solution like a service mesh or an ingress controller.

|

||||

For Blue/Green deployments no service mesh or ingress controller is required.

|

||||

@@ -102,6 +104,42 @@ The above configuration will run an analysis for five minutes.

|

||||

Flagger starts the load test for the canary service (green version) and checks the Prometheus metrics every 30 seconds.

|

||||

If the analysis result is positive, Flagger will promote the canary (green version) to primary (blue version).

|

||||

|

||||

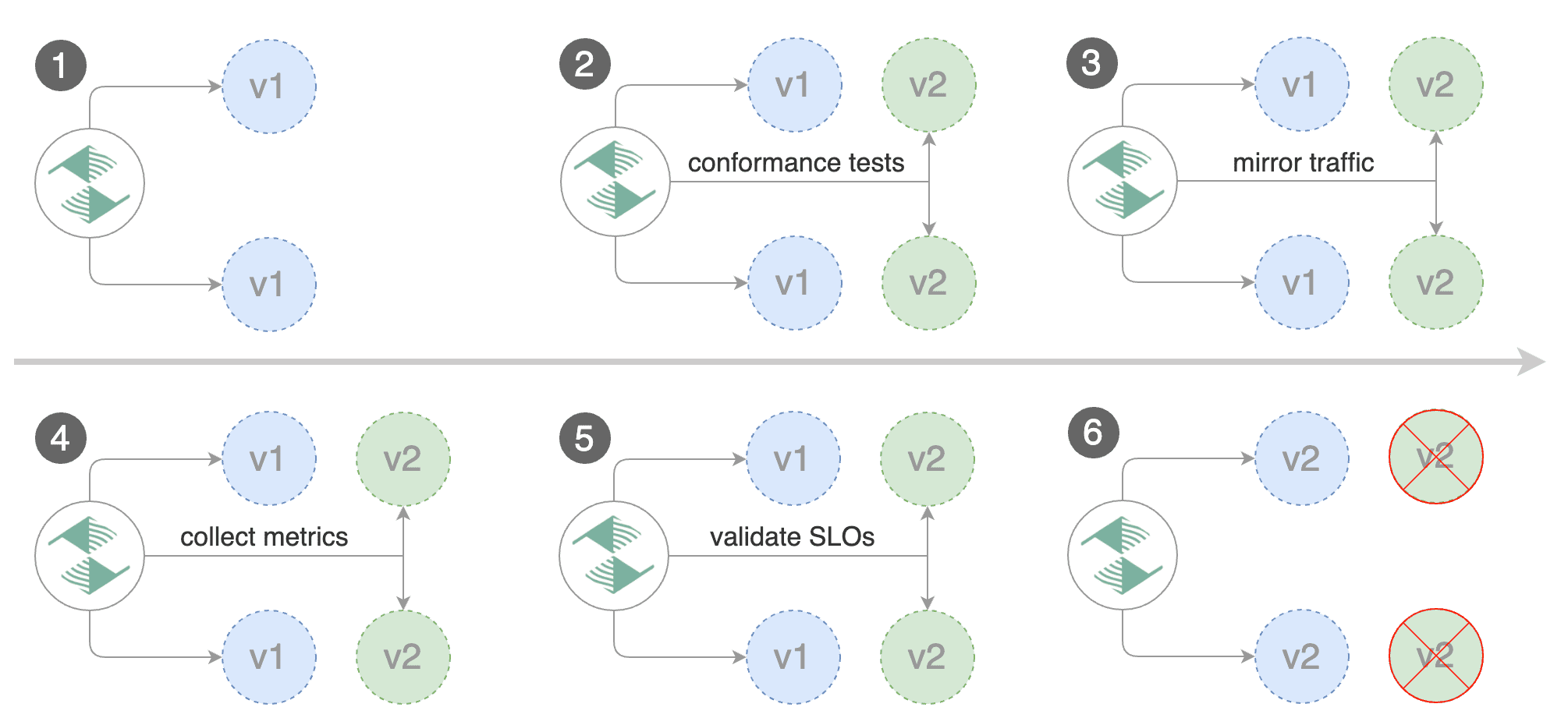

**When can I use traffic mirroring?**

|

||||

|

||||

Traffic Mirroring is a pre-stage in a Canary (progressive traffic shifting) or

|

||||

Blue/Green deployment strategy. Traffic mirroring will copy each incoming

|

||||

request, sending one request to the primary and one to the canary service.

|

||||

The response from the primary is sent back to the user. The response from the canary

|

||||

is discarded. Metrics are collected on both requests so that the deployment will

|

||||

only proceed if the canary metrics are healthy.

|

||||

|

||||

Mirroring is supported by Istio only.

|

||||

|

||||

In Istio, mirrored requests have `-shadow` appended to the `Host` (HTTP) or

|

||||

`Authority` (HTTP/2) header; for example requests to `podinfo.test` that are

|

||||

mirrored will be reported in telemetry with a destination host `podinfo.test-shadow`.

|

||||

|

||||

Mirroring must only be used for requests that are **idempotent** or capable of

|

||||

being processed twice (once by the primary and once by the canary). Reads are

|

||||

idempotent. Before using mirroring on requests that may be writes, you should

|

||||

consider what will happen if a write is duplicated and handled by the primary

|

||||

and canary.

|

||||

|

||||

To use mirroring, set `spec.canaryAnalysis.mirror` to `true`. Example for

|

||||

traffic shifting:

|

||||

|

||||

```yaml

|

||||

apiVersion: flagger.app/v1alpha3

|

||||

kind: Canary

|

||||

spec:

|

||||

provider: istio

|

||||

canaryAnalysis:

|

||||

mirror: true

|

||||

interval: 30s

|

||||

stepWeight: 20

|

||||

maxWeight: 50

|

||||

```

|

||||

|

||||

### Kubernetes services

|

||||

|

||||

**How is an application exposed inside the cluster?**

|

||||

@@ -120,8 +158,10 @@ spec:

|

||||

kind: Deployment

|

||||

name: podinfo

|

||||

service:

|

||||

# container port (required)

|

||||

# ClusterIP port number (required)

|

||||

port: 9898

|

||||

# container port name or number

|

||||

targetPort: http

|

||||

# port name can be http or grpc (default http)

|

||||

portName: http

|

||||

```

|

||||

@@ -291,6 +331,190 @@ spec:

|

||||

topologyKey: kubernetes.io/hostname

|

||||

```

|

||||

|

||||

### Istio routing

|

||||

|

||||

**How does Flagger interact with Istio?**

|

||||

|

||||

Flagger creates an Istio Virtual Service and Destination Rules based on the Canary service spec.