Mirror of https://github.com/fluxcd/flagger.git, synced 2026-02-16 02:49:51 +00:00

Compare commits

43 Commits

| Author | SHA1 | Date | |
|---|---|---|---|
| | bb620ad94a | | |
| | 7c6d1c48a3 | | |
| | bd5d884c8b | | |
| | 1c06721c9a | | |
| | 1e29e2c4eb | | |
| | 88c39d7379 | | |
| | da43a152ba | | |
| | ec63aa9999 | | |
| | 7b9df746ad | | |
| | 52d93ddda2 | | |
| | eb0331f2bf | | |
| | 6a66a87a44 | | |
| | f3cc810948 | | |
| | 12d84b2e24 | | |
| | 58bde24ece | | |
| | 5b3fd0efca | | |
| | ee6e39afa6 | | |
| | 677b9d9197 | | |
| | 786c5aa93a | | |
| | fd44f1fabf | | |
| | b20e0178e1 | | |
| | 5a490abfdd | | |
| | 674c79da94 | | |
| | 23ebb4235d | | |
| | b2500d0ccb | | |
| | ee500d83ac | | |
| | 0032c14a78 | | |
| | 8fd3e927b8 | | |
| | 7fe273a21d | | |
| | bd817cc520 | | |
| | eb856fda13 | | |
| | d63f05c92e | | |
| | 8fde6bdb8a | | |
| | 8148120421 | | |
| | 95b8840bf2 | | |
| | 0e8b1ef20f | | |
| | 0fbf4dcdb2 | | |
| | 7aca9468ac | | |
| | a6c0f08fcc | | |
| | 9c1bcc08bb | | |
| | 87e9dfe3d3 | | |
| | d7be66743e | | |
| | 350efb2bfe | | |

@@ -9,6 +9,15 @@ jobs:

|

||||

- run: test/e2e-build.sh

|

||||

- run: test/e2e-tests.sh

|

||||

|

||||

e2e-smi-istio-testing:

|

||||

machine: true

|

||||

steps:

|

||||

- checkout

|

||||

- run: test/e2e-kind.sh

|

||||

- run: test/e2e-istio.sh

|

||||

- run: test/e2e-smi-istio-build.sh

|

||||

- run: test/e2e-tests.sh canary

|

||||

|

||||

e2e-supergloo-testing:

|

||||

machine: true

|

||||

steps:

|

||||

@@ -18,6 +27,15 @@ jobs:

|

||||

- run: test/e2e-build.sh supergloo:test.supergloo-system

|

||||

- run: test/e2e-tests.sh canary

|

||||

|

||||

e2e-gloo-testing:

|

||||

machine: true

|

||||

steps:

|

||||

- checkout

|

||||

- run: test/e2e-kind.sh

|

||||

- run: test/e2e-gloo.sh

|

||||

- run: test/e2e-gloo-build.sh

|

||||

- run: test/e2e-gloo-tests.sh

|

||||

|

||||

e2e-nginx-testing:

|

||||

machine: true

|

||||

steps:

|

||||

@@ -38,6 +56,13 @@ workflows:

|

||||

- /gh-pages.*/

|

||||

- /docs-.*/

|

||||

- /release-.*/

|

||||

- e2e-smi-istio-testing:

|

||||

filters:

|

||||

branches:

|

||||

ignore:

|

||||

- /gh-pages.*/

|

||||

- /docs-.*/

|

||||

- /release-.*/

|

||||

- e2e-supergloo-testing:

|

||||

filters:

|

||||

branches:

|

||||

@@ -46,6 +71,13 @@ workflows:

|

||||

- /docs-.*/

|

||||

- /release-.*/

|

||||

- e2e-nginx-testing:

|

||||

filters:

|

||||

branches:

|

||||

ignore:

|

||||

- /gh-pages.*/

|

||||

- /docs-.*/

|

||||

- /release-.*/

|

||||

- e2e-gloo-testing:

|

||||

filters:

|

||||

branches:

|

||||

ignore:

|

||||

|

||||

@@ -2,6 +2,15 @@


All notable changes to this project are documented in this file.

## 0.14.0 (2019-05-21)

Adds support for Service Mesh Interface and [Gloo](https://docs.flagger.app/usage/gloo-progressive-delivery) ingress controller

#### Features

- Add support for SMI (Istio weighted traffic) [#180](https://github.com/weaveworks/flagger/pull/180)
- Add support for Gloo ingress controller (weighted traffic) [#179](https://github.com/weaveworks/flagger/pull/179)

## 0.13.2 (2019-04-11)

Fixes for Jenkins X deployments (prevent the jx GC from removing the primary instance)

12

Makefile


@@ -24,6 +24,18 @@ run-nginx:

|

||||

-slack-url=https://hooks.slack.com/services/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 \

|

||||

-slack-channel="devops-alerts"

|

||||

|

||||

run-smi:

|

||||

go run cmd/flagger/* -kubeconfig=$$HOME/.kube/config -log-level=info -mesh-provider=smi:istio -namespace=smi \

|

||||

-metrics-server=https://prometheus.istio.weavedx.com \

|

||||

-slack-url=https://hooks.slack.com/services/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 \

|

||||

-slack-channel="devops-alerts"

|

||||

|

||||

run-gloo:

|

||||

go run cmd/flagger/* -kubeconfig=$$HOME/.kube/config -log-level=info -mesh-provider=gloo -namespace=gloo \

|

||||

-metrics-server=https://prometheus.istio.weavedx.com \

|

||||

-slack-url=https://hooks.slack.com/services/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 \

|

||||

-slack-channel="devops-alerts"

|

||||

|

||||

build:

|

||||

docker build -t weaveworks/flagger:$(TAG) . -f Dockerfile

|

||||

|

||||

|

||||

11

README.md


@@ -40,6 +40,7 @@ Flagger documentation can be found at [docs.flagger.app](https://docs.flagger.ap

|

||||

* [Istio A/B testing](https://docs.flagger.app/usage/ab-testing)

|

||||

* [App Mesh canary deployments](https://docs.flagger.app/usage/appmesh-progressive-delivery)

|

||||

* [NGINX ingress controller canary deployments](https://docs.flagger.app/usage/nginx-progressive-delivery)

|

||||

* [Gloo Canary Deployments](https://docs.flagger.app/usage/gloo-progressive-delivery.md)

|

||||

* [Monitoring](https://docs.flagger.app/usage/monitoring)

|

||||

* [Alerting](https://docs.flagger.app/usage/alerting)

|

||||

* Tutorials

|

||||

@@ -82,7 +83,6 @@ spec:

|

||||

# Istio gateways (optional)

|

||||

gateways:

|

||||

- public-gateway.istio-system.svc.cluster.local

|

||||

- mesh

|

||||

# Istio virtual service host names (optional)

|

||||

hosts:

|

||||

- podinfo.example.com

|

||||

@@ -93,17 +93,12 @@ spec:

|

||||

# HTTP rewrite (optional)

|

||||

rewrite:

|

||||

uri: /

|

||||

# Envoy timeout and retry policy (optional)

|

||||

headers:

|

||||

request:

|

||||

add:

|

||||

x-envoy-upstream-rq-timeout-ms: "15000"

|

||||

x-envoy-max-retries: "10"

|

||||

x-envoy-retry-on: "gateway-error,connect-failure,refused-stream"

|

||||

# cross-origin resource sharing policy (optional)

|

||||

corsPolicy:

|

||||

allowOrigin:

|

||||

- example.com

|

||||

# request timeout (optional)

|

||||

timeout: 5s

|

||||

# promote the canary without analysing it (default false)

|

||||

skipAnalysis: false

|

||||

# define the canary analysis timing and KPIs

|

||||

|

||||

@@ -59,6 +59,26 @@ rules:

|

||||

- virtualservices

|

||||

- virtualservices/status

|

||||

verbs: ["*"]

|

||||

- apiGroups:

|

||||

- split.smi-spec.io

|

||||

resources:

|

||||

- trafficsplits

|

||||

verbs: ["*"]

|

||||

- apiGroups:

|

||||

- gloo.solo.io

|

||||

resources:

|

||||

- settings

|

||||

- upstreams

|

||||

- upstreamgroups

|

||||

- proxies

|

||||

- virtualservices

|

||||

verbs: ["*"]

|

||||

- apiGroups:

|

||||

- gateway.solo.io

|

||||

resources:

|

||||

- virtualservices

|

||||

- gateways

|

||||

verbs: ["*"]

|

||||

- nonResourceURLs:

|

||||

- /version

|

||||

verbs:

|

||||

|

||||

@@ -22,7 +22,7 @@ spec:

|

||||

serviceAccountName: flagger

|

||||

containers:

|

||||

- name: flagger

|

||||

image: weaveworks/flagger:0.13.2

|

||||

image: weaveworks/flagger:0.14.0

|

||||

imagePullPolicy: IfNotPresent

|

||||

ports:

|

||||

- name: http

|

||||

|

||||

36

artifacts/gloo/canary.yaml

Normal file


@@ -0,0 +1,36 @@

apiVersion: flagger.app/v1alpha3
kind: Canary
metadata:
  name: podinfo
  namespace: test
spec:
  targetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: podinfo
  progressDeadlineSeconds: 60
  autoscalerRef:
    apiVersion: autoscaling/v2beta1
    kind: HorizontalPodAutoscaler
    name: podinfo
  service:
    port: 9898
  canaryAnalysis:
    interval: 10s
    threshold: 10
    maxWeight: 50
    stepWeight: 5
    metrics:
    - name: request-success-rate
      threshold: 99
      interval: 1m
    - name: request-duration
      threshold: 500
      interval: 30s
    webhooks:
      - name: load-test
        url: http://flagger-loadtester.test/
        timeout: 5s
        metadata:
          type: cmd
          cmd: "hey -z 1m -q 10 -c 2 http://gloo.example.com/"

67

artifacts/gloo/deployment.yaml

Normal file


@@ -0,0 +1,67 @@

|

||||

apiVersion: apps/v1

|

||||

kind: Deployment

|

||||

metadata:

|

||||

name: podinfo

|

||||

namespace: test

|

||||

labels:

|

||||

app: podinfo

|

||||

spec:

|

||||

minReadySeconds: 5

|

||||

revisionHistoryLimit: 5

|

||||

progressDeadlineSeconds: 60

|

||||

strategy:

|

||||

rollingUpdate:

|

||||

maxUnavailable: 0

|

||||

type: RollingUpdate

|

||||

selector:

|

||||

matchLabels:

|

||||

app: podinfo

|

||||

template:

|

||||

metadata:

|

||||

annotations:

|

||||

prometheus.io/scrape: "true"

|

||||

labels:

|

||||

app: podinfo

|

||||

spec:

|

||||

containers:

|

||||

- name: podinfod

|

||||

image: quay.io/stefanprodan/podinfo:1.4.0

|

||||

imagePullPolicy: IfNotPresent

|

||||

ports:

|

||||

- containerPort: 9898

|

||||

name: http

|

||||

protocol: TCP

|

||||

command:

|

||||

- ./podinfo

|

||||

- --port=9898

|

||||

- --level=info

|

||||

- --random-delay=false

|

||||

- --random-error=false

|

||||

env:

|

||||

- name: PODINFO_UI_COLOR

|

||||

value: blue

|

||||

livenessProbe:

|

||||

exec:

|

||||

command:

|

||||

- podcli

|

||||

- check

|

||||

- http

|

||||

- localhost:9898/healthz

|

||||

initialDelaySeconds: 5

|

||||

timeoutSeconds: 5

|

||||

readinessProbe:

|

||||

exec:

|

||||

command:

|

||||

- podcli

|

||||

- check

|

||||

- http

|

||||

- localhost:9898/readyz

|

||||

initialDelaySeconds: 5

|

||||

timeoutSeconds: 5

|

||||

resources:

|

||||

limits:

|

||||

cpu: 2000m

|

||||

memory: 512Mi

|

||||

requests:

|

||||

cpu: 100m

|

||||

memory: 64Mi

|

||||

19

artifacts/gloo/hpa.yaml

Normal file


@@ -0,0 +1,19 @@

apiVersion: autoscaling/v2beta1
kind: HorizontalPodAutoscaler
metadata:
  name: podinfo
  namespace: test
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: podinfo
  minReplicas: 1
  maxReplicas: 4
  metrics:
  - type: Resource
    resource:
      name: cpu
      # scale up if usage is above
      # 99% of the requested CPU (100m)
      targetAverageUtilization: 99

17

artifacts/gloo/virtual-service.yaml

Normal file


@@ -0,0 +1,17 @@

apiVersion: gateway.solo.io/v1
kind: VirtualService
metadata:
  name: podinfo
  namespace: test
spec:
  virtualHost:
    domains:
    - '*'
    name: podinfo.default
    routes:
    - matcher:
        prefix: /
      routeAction:
        upstreamGroup:
          name: podinfo
          namespace: gloo

131

artifacts/smi/istio-adapter.yaml

Normal file


@@ -0,0 +1,131 @@

|

||||

apiVersion: apiextensions.k8s.io/v1beta1

|

||||

kind: CustomResourceDefinition

|

||||

metadata:

|

||||

name: trafficsplits.split.smi-spec.io

|

||||

spec:

|

||||

additionalPrinterColumns:

|

||||

- JSONPath: .spec.service

|

||||

description: The service

|

||||

name: Service

|

||||

type: string

|

||||

group: split.smi-spec.io

|

||||

names:

|

||||

kind: TrafficSplit

|

||||

listKind: TrafficSplitList

|

||||

plural: trafficsplits

|

||||

singular: trafficsplit

|

||||

scope: Namespaced

|

||||

subresources:

|

||||

status: {}

|

||||

version: v1alpha1

|

||||

versions:

|

||||

- name: v1alpha1

|

||||

served: true

|

||||

storage: true

|

||||

---

|

||||

apiVersion: v1

|

||||

kind: ServiceAccount

|

||||

metadata:

|

||||

name: smi-adapter-istio

|

||||

namespace: istio-system

|

||||

---

|

||||

apiVersion: rbac.authorization.k8s.io/v1

|

||||

kind: ClusterRole

|

||||

metadata:

|

||||

name: smi-adapter-istio

|

||||

rules:

|

||||

- apiGroups:

|

||||

- ""

|

||||

resources:

|

||||

- pods

|

||||

- services

|

||||

- endpoints

|

||||

- persistentvolumeclaims

|

||||

- events

|

||||

- configmaps

|

||||

- secrets

|

||||

verbs:

|

||||

- '*'

|

||||

- apiGroups:

|

||||

- apps

|

||||

resources:

|

||||

- deployments

|

||||

- daemonsets

|

||||

- replicasets

|

||||

- statefulsets

|

||||

verbs:

|

||||

- '*'

|

||||

- apiGroups:

|

||||

- monitoring.coreos.com

|

||||

resources:

|

||||

- servicemonitors

|

||||

verbs:

|

||||

- get

|

||||

- create

|

||||

- apiGroups:

|

||||

- apps

|

||||

resourceNames:

|

||||

- smi-adapter-istio

|

||||

resources:

|

||||

- deployments/finalizers

|

||||

verbs:

|

||||

- update

|

||||

- apiGroups:

|

||||

- split.smi-spec.io

|

||||

resources:

|

||||

- '*'

|

||||

verbs:

|

||||

- '*'

|

||||

- apiGroups:

|

||||

- networking.istio.io

|

||||

resources:

|

||||

- '*'

|

||||

verbs:

|

||||

- '*'

|

||||

---

|

||||

kind: ClusterRoleBinding

|

||||

apiVersion: rbac.authorization.k8s.io/v1

|

||||

metadata:

|

||||

name: smi-adapter-istio

|

||||

subjects:

|

||||

- kind: ServiceAccount

|

||||

name: smi-adapter-istio

|

||||

namespace: istio-system

|

||||

roleRef:

|

||||

kind: ClusterRole

|

||||

name: smi-adapter-istio

|

||||

apiGroup: rbac.authorization.k8s.io

|

||||

---

|

||||

apiVersion: apps/v1

|

||||

kind: Deployment

|

||||

metadata:

|

||||

name: smi-adapter-istio

|

||||

namespace: istio-system

|

||||

spec:

|

||||

replicas: 1

|

||||

selector:

|

||||

matchLabels:

|

||||

name: smi-adapter-istio

|

||||

template:

|

||||

metadata:

|

||||

labels:

|

||||

name: smi-adapter-istio

|

||||

annotations:

|

||||

sidecar.istio.io/inject: "false"

|

||||

spec:

|

||||

serviceAccountName: smi-adapter-istio

|

||||

containers:

|

||||

- name: smi-adapter-istio

|

||||

image: docker.io/stefanprodan/smi-adapter-istio:0.0.2-beta.1

|

||||

command:

|

||||

- smi-adapter-istio

|

||||

imagePullPolicy: Always

|

||||

env:

|

||||

- name: WATCH_NAMESPACE

|

||||

value: ""

|

||||

- name: POD_NAME

|

||||

valueFrom:

|

||||

fieldRef:

|

||||

fieldPath: metadata.name

|

||||

- name: OPERATOR_NAME

|

||||

value: "smi-adapter-istio"

|

||||

@@ -1,7 +1,7 @@

|

||||

apiVersion: v1

|

||||

name: flagger

|

||||

version: 0.13.2

|

||||

appVersion: 0.13.2

|

||||

version: 0.14.0

|

||||

appVersion: 0.14.0

|

||||

kubeVersion: ">=1.11.0-0"

|

||||

engine: gotpl

|

||||

description: Flagger is a Kubernetes operator that automates the promotion of canary deployments using Istio, App Mesh or NGINX routing for traffic shifting and Prometheus metrics for canary analysis.

|

||||

|

||||

@@ -55,6 +55,26 @@ rules:

|

||||

- virtualservices

|

||||

- virtualservices/status

|

||||

verbs: ["*"]

|

||||

- apiGroups:

|

||||

- split.smi-spec.io

|

||||

resources:

|

||||

- trafficsplits

|

||||

verbs: ["*"]

|

||||

- apiGroups:

|

||||

- gloo.solo.io

|

||||

resources:

|

||||

- settings

|

||||

- upstreams

|

||||

- upstreamgroups

|

||||

- proxies

|

||||

- virtualservices

|

||||

verbs: ["*"]

|

||||

- apiGroups:

|

||||

- gateway.solo.io

|

||||

resources:

|

||||

- virtualservices

|

||||

- gateways

|

||||

verbs: ["*"]

|

||||

- nonResourceURLs:

|

||||

- /version

|

||||

verbs:

|

||||

|

||||

@@ -2,7 +2,7 @@

|

||||

|

||||

image:

|

||||

repository: weaveworks/flagger

|

||||

tag: 0.13.2

|

||||

tag: 0.14.0

|

||||

pullPolicy: IfNotPresent

|

||||

|

||||

metricsServer: "http://prometheus:9090"

|

||||

|

||||

@@ -45,8 +45,8 @@ var (

|

||||

func init() {

|

||||

flag.StringVar(&kubeconfig, "kubeconfig", "", "Path to a kubeconfig. Only required if out-of-cluster.")

|

||||

flag.StringVar(&masterURL, "master", "", "The address of the Kubernetes API server. Overrides any value in kubeconfig. Only required if out-of-cluster.")

|

||||

flag.StringVar(&metricsServer, "metrics-server", "http://prometheus:9090", "Prometheus URL")

|

||||

flag.DurationVar(&controlLoopInterval, "control-loop-interval", 10*time.Second, "Kubernetes API sync interval")

|

||||

flag.StringVar(&metricsServer, "metrics-server", "http://prometheus:9090", "Prometheus URL.")

|

||||

flag.DurationVar(&controlLoopInterval, "control-loop-interval", 10*time.Second, "Kubernetes API sync interval.")

|

||||

flag.StringVar(&logLevel, "log-level", "debug", "Log level can be: debug, info, warning, error.")

|

||||

flag.StringVar(&port, "port", "8080", "Port to listen on.")

|

||||

flag.StringVar(&slackURL, "slack-url", "", "Slack hook URL.")

|

||||

@@ -55,9 +55,9 @@ func init() {

|

||||

flag.IntVar(&threadiness, "threadiness", 2, "Worker concurrency.")

|

||||

flag.BoolVar(&zapReplaceGlobals, "zap-replace-globals", false, "Whether to change the logging level of the global zap logger.")

|

||||

flag.StringVar(&zapEncoding, "zap-encoding", "json", "Zap logger encoding.")

|

||||

flag.StringVar(&namespace, "namespace", "", "Namespace that flagger would watch canary object")

|

||||

flag.StringVar(&meshProvider, "mesh-provider", "istio", "Service mesh provider, can be istio or appmesh")

|

||||

flag.StringVar(&selectorLabels, "selector-labels", "app,name,app.kubernetes.io/name", "List of pod labels that Flagger uses to create pod selectors")

|

||||

flag.StringVar(&namespace, "namespace", "", "Namespace that flagger would watch canary object.")

|

||||

flag.StringVar(&meshProvider, "mesh-provider", "istio", "Service mesh provider, can be istio, appmesh, supergloo, nginx or smi.")

|

||||

flag.StringVar(&selectorLabels, "selector-labels", "app,name,app.kubernetes.io/name", "List of pod labels that Flagger uses to create pod selectors.")

|

||||

}

|

||||

|

||||

func main() {

|

||||

@@ -87,12 +87,12 @@ func main() {

|

||||

|

||||

meshClient, err := clientset.NewForConfig(cfg)

|

||||

if err != nil {

|

||||

logger.Fatalf("Error building istio clientset: %v", err)

|

||||

logger.Fatalf("Error building mesh clientset: %v", err)

|

||||

}

|

||||

|

||||

flaggerClient, err := clientset.NewForConfig(cfg)

|

||||

if err != nil {

|

||||

logger.Fatalf("Error building example clientset: %s", err.Error())

|

||||

logger.Fatalf("Error building flagger clientset: %s", err.Error())

|

||||

}

|

||||

|

||||

flaggerInformerFactory := informers.NewSharedInformerFactoryWithOptions(flaggerClient, time.Second*30, informers.WithNamespace(namespace))

|

||||

@@ -116,7 +116,12 @@ func main() {

|

||||

logger.Infof("Watching namespace %s", namespace)

|

||||

}

|

||||

|

||||

ok, err := metrics.CheckMetricsServer(metricsServer)

|

||||

observerFactory, err := metrics.NewFactory(metricsServer, meshProvider, 5*time.Second)

|

||||

if err != nil {

|

||||

logger.Fatalf("Error building prometheus client: %s", err.Error())

|

||||

}

|

||||

|

||||

ok, err := observerFactory.Client.IsOnline()

|

||||

if ok {

|

||||

logger.Infof("Connected to metrics server %s", metricsServer)

|

||||

} else {

|

||||

@@ -148,6 +153,7 @@ func main() {

|

||||

logger,

|

||||

slack,

|

||||

routerFactory,

|

||||

observerFactory,

|

||||

meshProvider,

|

||||

version.VERSION,

|

||||

labels,

|

||||

|

||||

BIN

docs/diagrams/flagger-gloo-overview.png

Normal file


Binary file not shown.

|

After Width: | Height: | Size: 41 KiB |

@@ -16,10 +16,12 @@

* [Istio A/B Testing](usage/ab-testing.md)
* [App Mesh Canary Deployments](usage/appmesh-progressive-delivery.md)
* [NGINX Canary Deployments](usage/nginx-progressive-delivery.md)
* [Gloo Canary Deployments](usage/gloo-progressive-delivery.md)
* [Monitoring](usage/monitoring.md)
* [Alerting](usage/alerting.md)

## Tutorials

* [SMI Istio Canary Deployments](tutorials/flagger-smi-istio.md)
* [Canaries with Helm charts and GitOps](tutorials/canary-helm-gitops.md)
* [Zero downtime deployments](tutorials/zero-downtime-deployments.md)

@@ -38,7 +38,6 @@ spec:

|

||||

# Istio gateways (optional)

|

||||

gateways:

|

||||

- public-gateway.istio-system.svc.cluster.local

|

||||

- mesh

|

||||

# Istio virtual service host names (optional)

|

||||

hosts:

|

||||

- podinfo.example.com

|

||||

|

||||

@@ -163,7 +163,7 @@ Deploy Grafana in the _**appmesh-system**_ namespace:

|

||||

```bash

|

||||

helm upgrade -i flagger-grafana flagger/grafana \

|

||||

--namespace=appmesh-system \

|

||||

--set url=http://prometheus.appmesh-system:9090

|

||||

--set url=http://flagger-prometheus.appmesh-system:9090

|

||||

```

|

||||

|

||||

You can access Grafana using port forwarding:

|

||||

|

||||

332

docs/gitbook/tutorials/flagger-smi-istio.md

Normal file


@@ -0,0 +1,332 @@

# Flagger SMI

This guide shows you how to use the SMI Istio adapter and Flagger to automate canary deployments.

### Prerequisites

Flagger requires a Kubernetes cluster **v1.11** or newer with the following admission controllers enabled:

* MutatingAdmissionWebhook
* ValidatingAdmissionWebhook

Flagger depends on [Istio](https://istio.io/docs/setup/kubernetes/quick-start/) **v1.0.3** or newer
with traffic management, telemetry and Prometheus enabled.

A minimal Istio installation should contain the following services:

* istio-pilot
* istio-ingressgateway
* istio-sidecar-injector
* istio-telemetry
* prometheus

### Install Istio and the SMI adapter

Add Istio Helm repository:

```bash
helm repo add istio.io https://storage.googleapis.com/istio-release/releases/1.1.5/charts
```

Install Istio CRDs:

```bash
helm upgrade -i istio-init istio.io/istio-init --wait --namespace istio-system

kubectl -n istio-system wait --for=condition=complete job/istio-init-crd-11
```

Install Istio:

```bash
helm upgrade -i istio istio.io/istio --wait --namespace istio-system
```

Create a generic Istio gateway to expose services outside the mesh on HTTP:

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: Gateway
metadata:
  name: public-gateway
  namespace: istio-system
spec:
  selector:
    istio: ingressgateway
  servers:
    - port:
        number: 80
        name: http
        protocol: HTTP
      hosts:
        - "*"
```

Save the above resource as public-gateway.yaml and then apply it:

```bash
kubectl apply -f ./public-gateway.yaml
```

Find the Gateway load balancer IP and add a DNS record for it:

```bash
kubectl -n istio-system get svc/istio-ingressgateway -ojson | jq -r '.status.loadBalancer.ingress[0].ip'
```

Install the SMI adapter:

```bash
REPO=https://raw.githubusercontent.com/weaveworks/flagger/master

kubectl apply -f ${REPO}/artifacts/smi/istio-adapter.yaml
```

### Install Flagger and Grafana

Add Flagger Helm repository:

```bash
helm repo add flagger https://flagger.app
```

Deploy Flagger in the _**istio-system**_ namespace:

```bash
helm upgrade -i flagger flagger/flagger \
  --namespace=istio-system \
  --set image.tag=master-12d84b2 \
  --set meshProvider=smi:istio
```

Flagger comes with a Grafana dashboard made for monitoring the canary deployments.

Deploy Grafana in the _**istio-system**_ namespace:

```bash
helm upgrade -i flagger-grafana flagger/grafana \
  --namespace=istio-system \
  --set url=http://prometheus.istio-system:9090
```

You can access Grafana using port forwarding:

```bash
kubectl -n istio-system port-forward svc/flagger-grafana 3000:80
```

### Workloads bootstrap

Create a test namespace with Istio sidecar injection enabled:

```bash
export REPO=https://raw.githubusercontent.com/weaveworks/flagger/master

kubectl apply -f ${REPO}/artifacts/namespaces/test.yaml
```

Create a deployment and a horizontal pod autoscaler:

```bash
kubectl apply -f ${REPO}/artifacts/canaries/deployment.yaml
kubectl apply -f ${REPO}/artifacts/canaries/hpa.yaml
```

Deploy the load testing service to generate traffic during the canary analysis:

```bash
kubectl -n test apply -f ${REPO}/artifacts/loadtester/deployment.yaml
kubectl -n test apply -f ${REPO}/artifacts/loadtester/service.yaml
```

Create a canary custom resource (replace example.com with your own domain):

```yaml
apiVersion: flagger.app/v1alpha3
kind: Canary
metadata:
  name: podinfo
  namespace: test
spec:
  # deployment reference
  targetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: podinfo
  # the maximum time in seconds for the canary deployment
  # to make progress before it is rolled back (default 600s)
  progressDeadlineSeconds: 60
  # HPA reference (optional)
  autoscalerRef:
    apiVersion: autoscaling/v2beta1
    kind: HorizontalPodAutoscaler
    name: podinfo
  service:
    # container port
    port: 9898
    # Istio gateways (optional)
    gateways:
    - public-gateway.istio-system.svc.cluster.local
    # Istio virtual service host names (optional)
    hosts:
    - app.example.com
  canaryAnalysis:
    # schedule interval (default 60s)
    interval: 10s
    # max number of failed metric checks before rollback
    threshold: 5
    # max traffic percentage routed to canary
    # percentage (0-100)
    maxWeight: 50
    # canary increment step
    # percentage (0-100)
    stepWeight: 10
    metrics:
    - name: request-success-rate
      # minimum req success rate (non 5xx responses)
      # percentage (0-100)
      threshold: 99
      interval: 1m
    - name: request-duration
      # maximum req duration P99
      # milliseconds
      threshold: 500
      interval: 30s
    # generate traffic during analysis
    webhooks:
      - name: load-test
        url: http://flagger-loadtester.test/
        timeout: 5s
        metadata:
          cmd: "hey -z 1m -q 10 -c 2 http://podinfo.test:9898/"
```
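The `interval`, `stepWeight` and `maxWeight` settings together determine how the traffic shift unfolds. As a rough sketch (plain shell arithmetic, not Flagger code; the function names `canary_weights` and `min_analysis_seconds` are made up for illustration), the weight steps and a lower bound on the analysis time can be worked out like this:

```bash
# Illustrative helpers only; they are not part of Flagger.

# Print the canary weights visited for a given stepWeight and maxWeight.
canary_weights() (
  step=$1; max=$2; w=$step; out=""
  while [ "$w" -le "$max" ]; do
    out="${out:+$out }$w"
    w=$((w + step))
  done
  echo "$out"
)

# Lower bound on the analysis duration in seconds: one interval per
# weight step, assuming every metric check passes on the first try.
min_analysis_seconds() (
  interval_s=$1; step=$2; max=$3
  echo $((interval_s * (max / step)))
)

canary_weights 10 50           # prints: 10 20 30 40 50
min_analysis_seconds 10 10 50  # prints: 50
```

With the values from the canary above (interval 10s, stepWeight 10, maxWeight 50) this gives five steps and about 50 seconds as a lower bound. The sample log later in this guide advances in steps of 5, which would correspond to `stepWeight: 5`.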

Save the canary resource as podinfo-canary.yaml and then apply it:

```bash
kubectl apply -f ./podinfo-canary.yaml
```

After a couple of seconds Flagger will create the canary objects:

```bash
# applied
deployment.apps/podinfo
horizontalpodautoscaler.autoscaling/podinfo
canary.flagger.app/podinfo

# generated
deployment.apps/podinfo-primary
horizontalpodautoscaler.autoscaling/podinfo-primary
service/podinfo
service/podinfo-canary
service/podinfo-primary
trafficsplits.split.smi-spec.io/podinfo
```

### Automated canary promotion

|

||||

|

||||

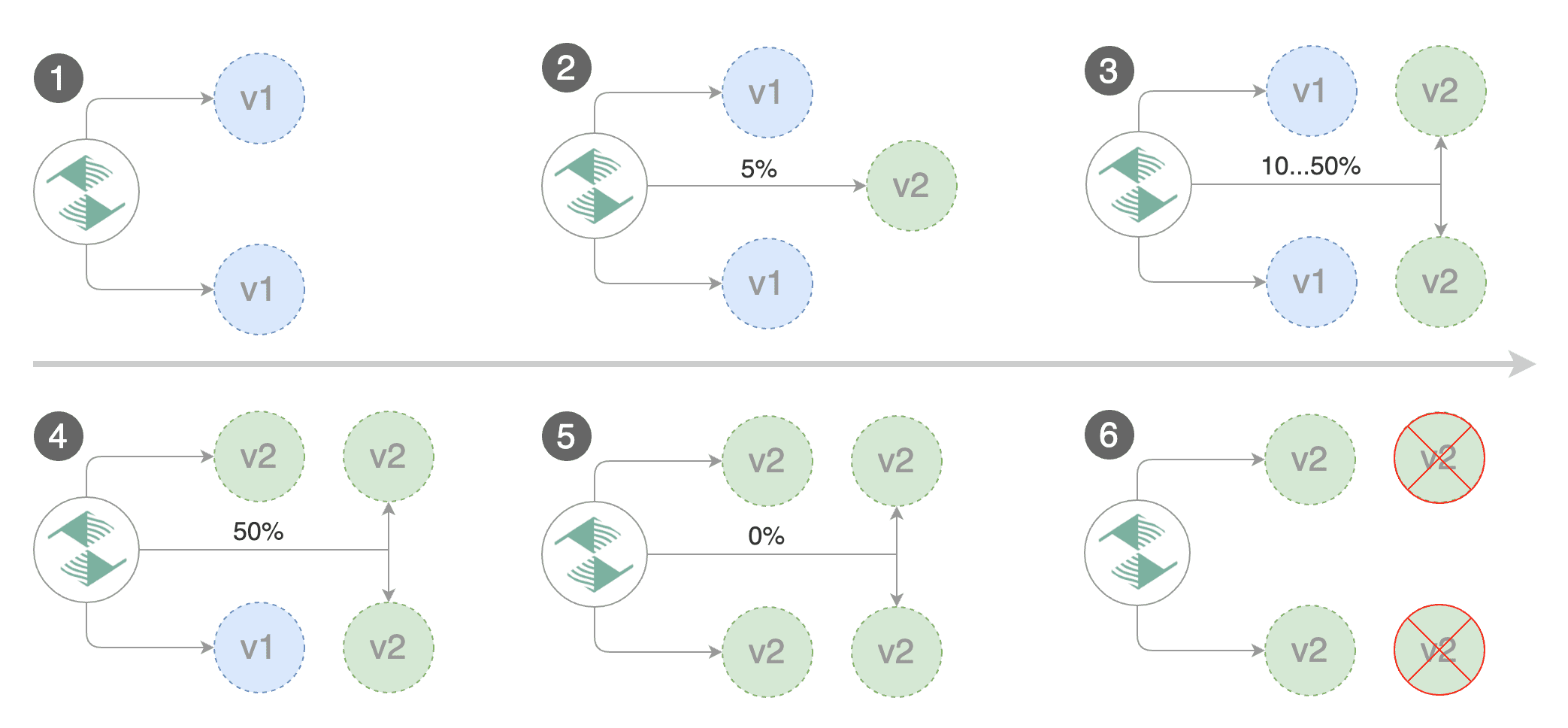

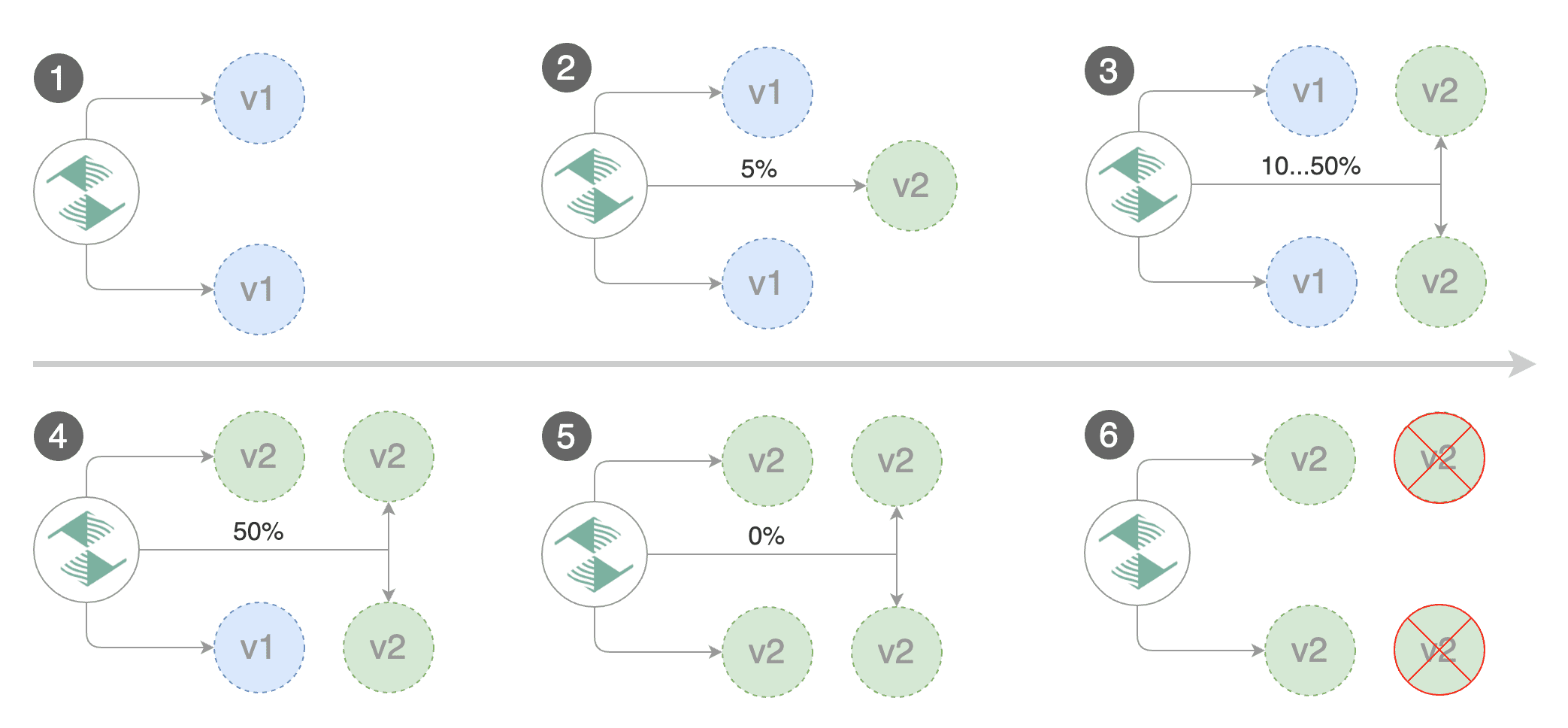

Flagger implements a control loop that gradually shifts traffic to the canary while measuring key performance indicators

|

||||

like HTTP requests success rate, requests average duration and pod health.

|

||||

Based on analysis of the KPIs a canary is promoted or aborted, and the analysis result is published to Slack.
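One pass of that control loop boils down to comparing the measured KPIs against the thresholds in the canary spec. A minimal sketch of that comparison, assuming the success rate and P99 duration have already been fetched from Prometheus and that both thresholds are inclusive (the function `metrics_ok` and its arguments are hypothetical, not Flagger's API):

```bash
# Hypothetical helper, not Flagger's implementation.
# Usage: metrics_ok <success_rate_pct> <p99_ms> <min_success_pct> <max_p99_ms>
# Returns 0 when both KPIs are within their thresholds, 1 otherwise.
metrics_ok() {
  awk -v rate="$1" -v p99="$2" -v min_rate="$3" -v max_p99="$4" \
    'BEGIN { exit !(rate + 0 >= min_rate + 0 && p99 + 0 <= max_p99 + 0) }'
}

if metrics_ok 99.6 320 99 500; then echo "advance"; else echo "halt"; fi   # prints: advance
if metrics_ok 69.17 320 99 500; then echo "advance"; else echo "halt"; fi  # prints: halt
```

The thresholds 99 and 500 are the `request-success-rate` and `request-duration` values from the canary spec in this guide.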

Trigger a canary deployment by updating the container image:

```bash
kubectl -n test set image deployment/podinfo \
  podinfod=quay.io/stefanprodan/podinfo:1.4.1
```

Flagger detects that the deployment revision changed and starts a new rollout:

```text
kubectl -n istio-system logs deployment/flagger -f | jq .msg

New revision detected podinfo.test
Scaling up podinfo.test
Waiting for podinfo.test rollout to finish: 0 of 1 updated replicas are available
Advance podinfo.test canary weight 5
Advance podinfo.test canary weight 10
Advance podinfo.test canary weight 15
Advance podinfo.test canary weight 20
Advance podinfo.test canary weight 25
Advance podinfo.test canary weight 30
Advance podinfo.test canary weight 35
Advance podinfo.test canary weight 40
Advance podinfo.test canary weight 45
Advance podinfo.test canary weight 50
Copying podinfo.test template spec to podinfo-primary.test
Waiting for podinfo-primary.test rollout to finish: 1 of 2 updated replicas are available
Promotion completed! Scaling down podinfo.test
```

**Note** that if you apply new changes to the deployment during the canary analysis, Flagger will restart the analysis.

During the analysis the canary’s progress can be monitored with Grafana. The Istio dashboard URL is
http://localhost:3000/d/flagger-istio/istio-canary?refresh=10s&orgId=1&var-namespace=test&var-primary=podinfo-primary&var-canary=podinfo

You can monitor all canaries with:

```bash
watch kubectl get canaries --all-namespaces

NAMESPACE   NAME      STATUS        WEIGHT   LASTTRANSITIONTIME
test        podinfo   Progressing   15       2019-05-16T14:05:07Z
prod        frontend  Succeeded     0        2019-05-15T16:15:07Z
prod        backend   Failed        0        2019-05-14T17:05:07Z
```
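When many canaries are running, it can help to filter that table by status. A small sketch, assuming the five-column layout shown above (the helper name `canaries_with_status` is made up here and is not a Flagger or kubectl command):

```bash
# Hypothetical helper: reads `kubectl get canaries --all-namespaces` output
# on stdin and prints "namespace/name" for rows whose STATUS column equals $1.
canaries_with_status() {
  awk -v want="$1" 'NR > 1 && $3 == want { print $1 "/" $2 }'
}

# Example using the sample rows from above instead of a live cluster:
printf '%s\n' \
  'NAMESPACE NAME STATUS WEIGHT LASTTRANSITIONTIME' \
  'test podinfo Progressing 15 2019-05-16T14:05:07Z' \
  'prod frontend Succeeded 0 2019-05-15T16:15:07Z' \
  'prod backend Failed 0 2019-05-14T17:05:07Z' |
  canaries_with_status Failed   # prints: prod/backend
```

Against a cluster the same filter would be used as `kubectl get canaries --all-namespaces | canaries_with_status Failed`.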

### Automated rollback

During the canary analysis you can generate HTTP 500 errors and high latency to test if Flagger pauses the rollout.

Create a tester pod and exec into it:

```bash
kubectl -n test run tester \
  --image=quay.io/stefanprodan/podinfo:1.2.1 \
  -- ./podinfo --port=9898

kubectl -n test exec -it tester-xx-xx sh
```

Generate HTTP 500 errors:

```bash
watch curl http://podinfo-canary:9898/status/500
```

Generate latency:

```bash
watch curl http://podinfo-canary:9898/delay/1
```

When the number of failed checks reaches the canary analysis threshold, the traffic is routed back to the primary,
the canary is scaled to zero and the rollout is marked as failed.

```text
kubectl -n test describe canary/podinfo

Status:
  Canary Weight:         0
  Failed Checks:         10
  Phase:                 Failed
Events:
  Type     Reason  Age   From     Message
  ----     ------  ----  ----     -------
  Normal   Synced  3m    flagger  Starting canary deployment for podinfo.test
  Normal   Synced  3m    flagger  Advance podinfo.test canary weight 5
  Normal   Synced  3m    flagger  Advance podinfo.test canary weight 10
  Normal   Synced  3m    flagger  Advance podinfo.test canary weight 15
  Normal   Synced  3m    flagger  Halt podinfo.test advancement success rate 69.17% < 99%
  Normal   Synced  2m    flagger  Halt podinfo.test advancement success rate 61.39% < 99%
  Normal   Synced  2m    flagger  Halt podinfo.test advancement success rate 55.06% < 99%
  Normal   Synced  2m    flagger  Halt podinfo.test advancement success rate 47.00% < 99%
  Normal   Synced  2m    flagger  (combined from similar events): Halt podinfo.test advancement success rate 38.08% < 99%
  Warning  Synced  1m    flagger  Rolling back podinfo.test failed checks threshold reached 10
  Warning  Synced  1m    flagger  Canary failed! Scaling down podinfo.test
```

|

||||

@@ -60,7 +60,6 @@ spec:

|

||||

# Istio gateways (optional)

|

||||

gateways:

|

||||

- public-gateway.istio-system.svc.cluster.local

|

||||

- mesh

|

||||

# Istio virtual service host names (optional)

|

||||

hosts:

|

||||

- app.example.com

|

||||

|

||||

366

docs/gitbook/usage/gloo-progressive-delivery.md

Normal file

@@ -0,0 +1,366 @@

|

||||

# Gloo Ingress Controller Canary Deployments

|

||||

|

||||

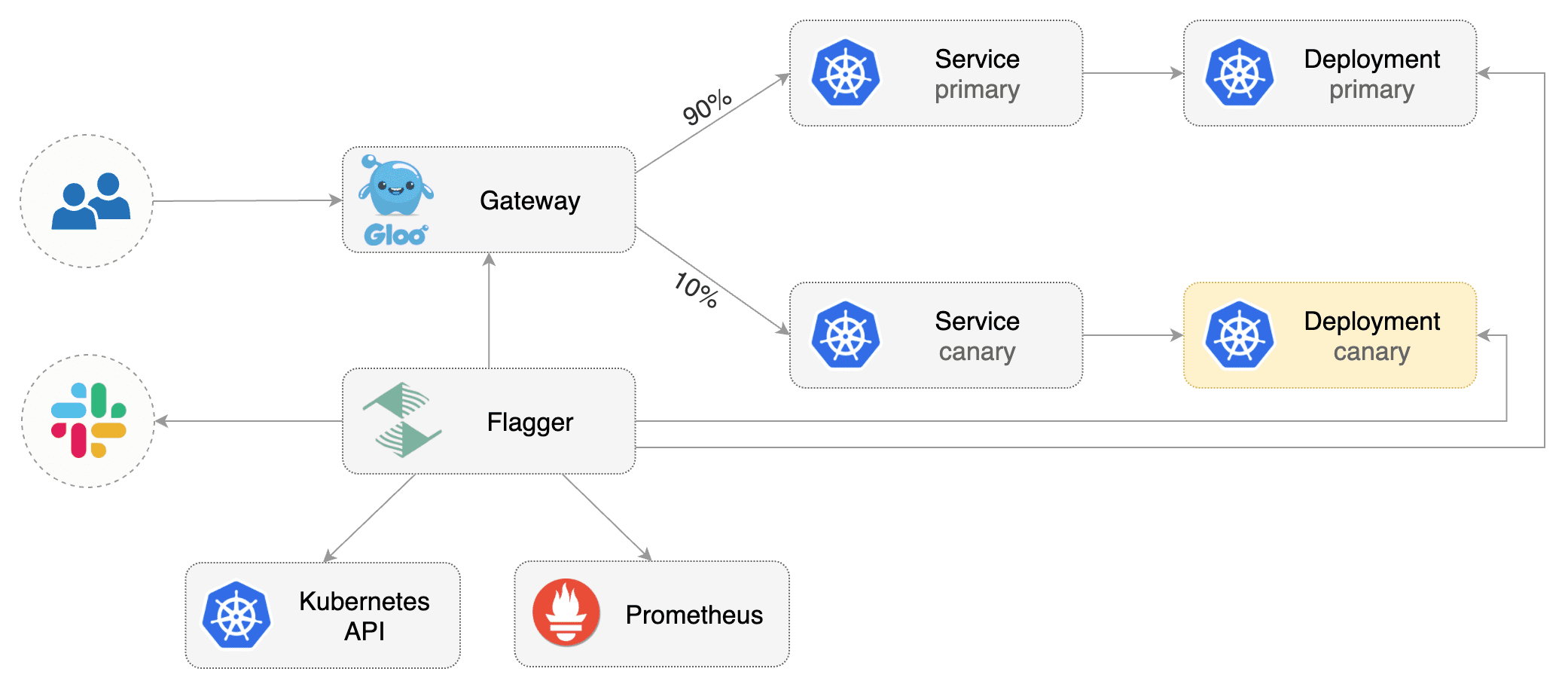

This guide shows you how to use the [Gloo](https://gloo.solo.io/) ingress controller and Flagger to automate canary deployments.

|

||||

|

||||

|

||||

|

||||

### Prerequisites

|

||||

|

||||

Flagger requires a Kubernetes cluster **v1.11** or newer and Gloo ingress **0.13.29** or newer.

|

||||

|

||||

Install Gloo with Helm:

|

||||

|

||||

```bash

|

||||

helm repo add gloo https://storage.googleapis.com/solo-public-helm

|

||||

|

||||

helm upgrade -i gloo gloo/gloo \

|

||||

--namespace gloo-system

|

||||

```

|

||||

|

||||

Install Flagger and the Prometheus add-on in the same namespace as Gloo:

|

||||

|

||||

```bash

|

||||

helm repo add flagger https://flagger.app

|

||||

|

||||

helm upgrade -i flagger flagger/flagger \

|

||||

--namespace gloo-system \

|

||||

--set prometheus.install=true \

|

||||

--set meshProvider=gloo

|

||||

```

|

||||

|

||||

Optionally you can enable Slack notifications:

|

||||

|

||||

```bash

|

||||

helm upgrade -i flagger flagger/flagger \

|

||||

--reuse-values \

|

||||

--namespace gloo-system \

|

||||

--set slack.url=https://hooks.slack.com/services/YOUR/SLACK/WEBHOOK \

|

||||

--set slack.channel=general \

|

||||

--set slack.user=flagger

|

||||

```

|

||||

|

||||

### Bootstrap

|

||||

|

||||

Flagger takes a Kubernetes deployment and optionally a horizontal pod autoscaler (HPA),

|

||||

then creates a series of objects (Kubernetes deployments, ClusterIP services and Gloo upstream groups).

|

||||

These objects expose the application outside the cluster and drive the canary analysis and promotion.

|

||||

|

||||

Create a test namespace:

|

||||

|

||||

```bash

|

||||

kubectl create ns test

|

||||

```

|

||||

|

||||

Create a deployment and a horizontal pod autoscaler:

|

||||

|

||||

```bash

|

||||

kubectl apply -f ${REPO}/artifacts/gloo/deployment.yaml

|

||||

kubectl apply -f ${REPO}/artifacts/gloo/hpa.yaml

|

||||

```

|

||||

|

||||

Deploy the load testing service to generate traffic during the canary analysis:

|

||||

|

||||

```bash

|

||||

helm upgrade -i flagger-loadtester flagger/loadtester \

|

||||

--namespace=test

|

||||

```

|

||||

|

||||

Create a virtual service definition that references an upstream group that will be generated by Flagger

|

||||

(replace `app.example.com` with your own domain):

|

||||

|

||||

```yaml

|

||||

apiVersion: gateway.solo.io/v1

|

||||

kind: VirtualService

|

||||

metadata:

|

||||

name: podinfo

|

||||

namespace: test

|

||||

spec:

|

||||

virtualHost:

|

||||

domains:

|

||||

- 'app.example.com'

|

||||

name: podinfo.test

|

||||

routes:

|

||||

- matcher:

|

||||

prefix: /

|

||||

routeAction:

|

||||

upstreamGroup:

|

||||

name: podinfo

|

||||

namespace: test

|

||||

```

|

||||

|

||||

Save the above resource as podinfo-virtualservice.yaml and then apply it:

|

||||

|

||||

```bash

|

||||

kubectl apply -f ./podinfo-virtualservice.yaml

|

||||

```

|

||||

|

||||

Create a canary custom resource (replace `app.example.com` with your own domain):

|

||||

|

||||

```yaml

|

||||

apiVersion: flagger.app/v1alpha3

|

||||

kind: Canary

|

||||

metadata:

|

||||

name: podinfo

|

||||

namespace: test

|

||||

spec:

|

||||

# deployment reference

|

||||

targetRef:

|

||||

apiVersion: apps/v1

|

||||

kind: Deployment

|

||||

name: podinfo

|

||||

# HPA reference (optional)

|

||||

autoscalerRef:

|

||||

apiVersion: autoscaling/v2beta1

|

||||

kind: HorizontalPodAutoscaler

|

||||

name: podinfo

|

||||

# the maximum time in seconds for the canary deployment

|

||||

# to make progress before it is rolled back (default 600s)

|

||||

progressDeadlineSeconds: 60

|

||||

service:

|

||||

# container port

|

||||

port: 9898

|

||||

canaryAnalysis:

|

||||

# schedule interval (default 60s)

|

||||

interval: 10s

|

||||

# max number of failed metric checks before rollback

|

||||

threshold: 5

|

||||

# max traffic percentage routed to canary

|

||||

# percentage (0-100)

|

||||

maxWeight: 50

|

||||

# canary increment step

|

||||

# percentage (0-100)

|

||||

stepWeight: 5

|

||||

# Gloo Prometheus checks

|

||||

metrics:

|

||||

- name: request-success-rate

|

||||

# minimum req success rate (non 5xx responses)

|

||||

# percentage (0-100)

|

||||

threshold: 99

|

||||

interval: 1m

|

||||

- name: request-duration

|

||||

# maximum req duration P99

|

||||

# milliseconds

|

||||

threshold: 500

|

||||

interval: 30s

|

||||

# load testing (optional)

|

||||

webhooks:

|

||||

- name: load-test

|

||||

url: http://flagger-loadtester.test/

|

||||

timeout: 5s

|

||||

metadata:

|

||||

type: cmd

|

||||

cmd: "hey -z 1m -q 10 -c 2 http://app.example.com/"

|

||||

```

|

||||

|

||||

Save the above resource as podinfo-canary.yaml and then apply it:

|

||||

|

||||

```bash

|

||||

kubectl apply -f ./podinfo-canary.yaml

|

||||

```

|

||||

|

||||

After a couple of seconds Flagger will create the canary objects:

|

||||

|

||||

```bash

|

||||

# applied

|

||||

deployment.apps/podinfo

|

||||

horizontalpodautoscaler.autoscaling/podinfo

|

||||

virtualservices.gateway.solo.io/podinfo

|

||||

canary.flagger.app/podinfo

|

||||

|

||||

# generated

|

||||

deployment.apps/podinfo-primary

|

||||

horizontalpodautoscaler.autoscaling/podinfo-primary

|

||||

service/podinfo

|

||||

service/podinfo-canary

|

||||

service/podinfo-primary

|

||||

upstreamgroups.gloo.solo.io/podinfo

|

||||

```

|

||||

|

||||

When the bootstrap finishes Flagger will set the canary status to initialized:

|

||||

|

||||

```bash

|

||||

kubectl -n test get canary podinfo

|

||||

|

||||

NAME STATUS WEIGHT LASTTRANSITIONTIME

|

||||

podinfo Initialized 0 2019-05-17T08:09:51Z

|

||||

```

|

||||

|

||||

### Automated canary promotion

|

||||

|

||||

Flagger implements a control loop that gradually shifts traffic to the canary while measuring key performance indicators

|

||||

such as HTTP request success rate, average request duration and pod health.

|

||||

Based on analysis of the KPIs a canary is promoted or aborted, and the analysis result is published to Slack.
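
As a rough illustration of the traffic shifting described here, the following Go sketch lists the weights a canary would pass through if every check succeeds. It assumes the `stepWeight: 5` and `maxWeight: 50` values from the canary resource in this guide; the function name `canaryWeights` is hypothetical and is not part of Flagger.

```go
package main

import "fmt"

// canaryWeights lists the traffic weights a canary passes through when every
// check succeeds: it starts at stepWeight and grows by stepWeight until it
// reaches maxWeight. This is an illustrative helper, not Flagger's code.
func canaryWeights(stepWeight, maxWeight int) []int {
	var weights []int
	if stepWeight <= 0 {
		// A non-positive step would never reach maxWeight.
		return weights
	}
	for w := stepWeight; w <= maxWeight; w += stepWeight {
		weights = append(weights, w)
	}
	return weights
}

func main() {
	// stepWeight: 5 and maxWeight: 50 are the values used in the canary spec.
	fmt.Println(canaryWeights(5, 50)) // prints [5 10 15 20 25 30 35 40 45 50]
}
```

The printed sequence corresponds to the "Advance podinfo.test canary weight" events in the rollout output of this guide.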

|

||||

|

||||

|

||||

|

||||

Trigger a canary deployment by updating the container image:

|

||||

|

||||

```bash

|

||||

kubectl -n test set image deployment/podinfo \

|

||||

podinfod=quay.io/stefanprodan/podinfo:1.4.1

|

||||

```

|

||||

|

||||

Flagger detects that the deployment revision changed and starts a new rollout:

|

||||

|

||||

```text

|

||||

kubectl -n test describe canary/podinfo

|

||||

|

||||

Status:

|

||||

Canary Weight: 0

|

||||

Failed Checks: 0

|

||||

Phase: Succeeded

|

||||

Events:

|

||||

Type Reason Age From Message

|

||||

---- ------ ---- ---- -------

|

||||

Normal Synced 3m flagger New revision detected podinfo.test

|

||||

Normal Synced 3m flagger Scaling up podinfo.test

|

||||

Warning Synced 3m flagger Waiting for podinfo.test rollout to finish: 0 of 1 updated replicas are available

|

||||

Normal Synced 3m flagger Advance podinfo.test canary weight 5

|

||||

Normal Synced 3m flagger Advance podinfo.test canary weight 10

|

||||

Normal Synced 3m flagger Advance podinfo.test canary weight 15

|

||||

Normal Synced 2m flagger Advance podinfo.test canary weight 20

|

||||

Normal Synced 2m flagger Advance podinfo.test canary weight 25

|

||||

Normal Synced 1m flagger Advance podinfo.test canary weight 30

|

||||

Normal Synced 1m flagger Advance podinfo.test canary weight 35

|

||||

Normal Synced 55s flagger Advance podinfo.test canary weight 40

|

||||

Normal Synced 45s flagger Advance podinfo.test canary weight 45

|

||||

Normal Synced 35s flagger Advance podinfo.test canary weight 50

|

||||

Normal Synced 25s flagger Copying podinfo.test template spec to podinfo-primary.test

|

||||

Warning Synced 15s flagger Waiting for podinfo-primary.test rollout to finish: 1 of 2 updated replicas are available

|

||||

Normal Synced 5s flagger Promotion completed! Scaling down podinfo.test

|

||||

```

|

||||

|

||||

**Note** that if you apply new changes to the deployment during the canary analysis, Flagger will restart the analysis.

|

||||

|

||||

You can monitor all canaries with:

|

||||

|

||||

```bash

|

||||

watch kubectl get canaries --all-namespaces

|

||||

|

||||

NAMESPACE NAME STATUS WEIGHT LASTTRANSITIONTIME

|

||||

test podinfo Progressing 15 2019-05-17T14:05:07Z

|

||||

prod frontend Succeeded 0 2019-05-17T16:15:07Z

|

||||

prod backend Failed 0 2019-05-17T17:05:07Z

|

||||

```

|

||||

|

||||

### Automated rollback

|

||||

|

||||

During the canary analysis you can generate HTTP 500 errors and high latency to test if Flagger pauses the rollout and rolls back the faulty version.

|

||||

|

||||

Trigger another canary deployment:

|

||||

|

||||

```bash

|

||||

kubectl -n test set image deployment/podinfo \

|

||||

podinfod=quay.io/stefanprodan/podinfo:1.4.2

|

||||

```

|

||||

|

||||

Generate HTTP 500 errors:

|

||||

|

||||

```bash

|

||||

watch curl http://app.example.com/status/500

|

||||

```

|

||||

|

||||

Generate high latency:

|

||||

|

||||

```bash

|

||||

watch curl http://app.example.com/delay/2

|

||||

```

|

||||

|

||||

When the number of failed checks reaches the canary analysis threshold, the traffic is routed back to the primary,

|

||||

the canary is scaled to zero and the rollout is marked as failed.
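
The rollback rule can be sketched in Go as a small state machine. This is a simplified model under stated assumptions, not Flagger's implementation: the `result` type and the `analyse` function are hypothetical, a passing check always advances the weight by `stepWeight`, and the failure counter is never reset.

```go
package main

import "fmt"

// result is a simplified stand-in for the canary status fields shown in the
// `kubectl describe` output (Phase, Canary Weight, Failed Checks).
type result struct {
	Phase        string
	Weight       int
	FailedChecks int
}

// analyse replays a sequence of check results (true means the check passed).
// A passing check advances the weight by stepWeight; a failing check halts
// the advancement and increments the failure counter. Once the counter
// reaches threshold the canary is rolled back: weight 0, phase Failed.
func analyse(checks []bool, stepWeight, maxWeight, threshold int) result {
	weight, failed := 0, 0
	for _, passed := range checks {
		if !passed {
			failed++
			if failed >= threshold {
				return result{Phase: "Failed", Weight: 0, FailedChecks: failed}
			}
			continue
		}
		weight += stepWeight
		if weight >= maxWeight {
			// Promotion: traffic returns to the updated primary.
			return result{Phase: "Succeeded", Weight: 0, FailedChecks: failed}
		}
	}
	return result{Phase: "Progressing", Weight: weight, FailedChecks: failed}
}

func main() {
	// Three passing checks followed by five failures, with threshold 5.
	checks := []bool{true, true, true, false, false, false, false, false}
	fmt.Printf("%+v\n", analyse(checks, 5, 50, 5))
}
```

With the inputs in `main`, the weight climbs to 15, stalls while the checks fail, and the fifth failure triggers the rollback.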

|

||||

|

||||

```text

|

||||

kubectl -n test describe canary/podinfo

|

||||

|

||||

Status:

|

||||

Canary Weight: 0

|

||||

Failed Checks: 10

|

||||

Phase: Failed

|

||||

Events:

|

||||

Type Reason Age From Message

|

||||

---- ------ ---- ---- -------

|

||||

Normal Synced 3m flagger Starting canary deployment for podinfo.test

|

||||

Normal Synced 3m flagger Advance podinfo.test canary weight 5

|

||||

Normal Synced 3m flagger Advance podinfo.test canary weight 10

|

||||

Normal Synced 3m flagger Advance podinfo.test canary weight 15

|

||||

Normal Synced 3m flagger Halt podinfo.test advancement success rate 69.17% < 99%

|

||||

Normal Synced 2m flagger Halt podinfo.test advancement success rate 61.39% < 99%

|

||||

Normal Synced 2m flagger Halt podinfo.test advancement success rate 55.06% < 99%

|

||||

Normal Synced 2m flagger Halt podinfo.test advancement success rate 47.00% < 99%

|

||||

Normal Synced 2m flagger (combined from similar events): Halt podinfo.test advancement success rate 38.08% < 99%

|

||||

Warning Synced 1m flagger Rolling back podinfo.test failed checks threshold reached 10

|

||||

Warning Synced 1m flagger Canary failed! Scaling down podinfo.test

|

||||

```

|

||||

|

||||

### Custom metrics

|

||||

|

||||

The canary analysis can be extended with Prometheus queries.

|

||||

|

||||

The demo app is instrumented with Prometheus so you can create a custom check that will use the HTTP request duration

|

||||

histogram to validate the canary.

|

||||

|

||||

Edit the canary analysis and add the following metric:

|

||||

|

||||

```yaml

|

||||

canaryAnalysis:

|

||||

metrics:

|

||||

- name: "404s percentage"

|

||||

threshold: 5

|

||||

query: |

|

||||

100 - sum(

|

||||

rate(

|

||||

http_request_duration_seconds_count{

|

||||

kubernetes_namespace="test",

|

||||

kubernetes_pod_name=~"podinfo-[0-9a-zA-Z]+(-[0-9a-zA-Z]+)",

|

||||

status!="404"

|

||||

}[1m]

|

||||

)

|

||||

)

|

||||

/

|

||||

sum(

|

||||

rate(

|

||||

http_request_duration_seconds_count{

|

||||

kubernetes_namespace="test",

|

||||

kubernetes_pod_name=~"podinfo-[0-9a-zA-Z]+(-[0-9a-zA-Z]+)"

|

||||

}[1m]

|

||||

)

|

||||

) * 100

|

||||

```

|

||||

|

||||

The above configuration validates the canary by checking if the HTTP 404 req/sec percentage is below 5

|

||||

percent of the total traffic. If the 404s rate reaches the 5% threshold, then the canary fails.
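
To make the arithmetic of the query concrete, here is a minimal Go sketch of the same calculation on plain numbers. It assumes the two per-second rates have already been fetched; the names `notFoundPercentage` and `exceeds` are hypothetical, and returning 0 when there is no traffic is a choice made for this sketch (Prometheus itself would yield no usable value for a division by zero).

```go
package main

import "fmt"

// notFoundPercentage mirrors the query: 100 minus the share of requests
// whose status is not 404, expressed as a percentage of all requests.
func notFoundPercentage(non404Rate, totalRate float64) float64 {
	if totalRate == 0 {
		// Assumption of this sketch: no traffic counts as no 404s.
		return 0
	}
	return 100 - non404Rate/totalRate*100
}

// exceeds reports whether the measured value is above the metric threshold,
// which is the condition shown in the "Halt ... advancement" log lines.
func exceeds(value, threshold float64) bool {
	return value > threshold
}

func main() {
	// Example rates: 90 non-404 requests per second out of 100 in total.
	pct := notFoundPercentage(90, 100)
	fmt.Printf("404s percentage %.2f, halt: %v\n", pct, exceeds(pct, 5))
}
```

Each time the value is above the threshold, the check counts as failed, and the rollback rule for failed checks applies as with the built-in metrics.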

|

||||

|

||||

Trigger a canary deployment by updating the container image:

|

||||

|

||||

```bash

|

||||

kubectl -n test set image deployment/podinfo \

|

||||

podinfod=quay.io/stefanprodan/podinfo:1.4.3

|

||||

```

|

||||

|

||||

Generate 404s:

|

||||

|

||||

```bash

|

||||

watch curl http://app.example.com/status/404

|

||||

```

|

||||

|

||||

Watch Flagger logs:

|

||||

|

||||

```text

|

||||

kubectl -n gloo-system logs deployment/flagger -f | jq .msg

|

||||

|

||||

Starting canary deployment for podinfo.test

|

||||

Advance podinfo.test canary weight 5

|

||||

Advance podinfo.test canary weight 10

|

||||

Advance podinfo.test canary weight 15

|

||||

Halt podinfo.test advancement 404s percentage 6.20 > 5

|

||||

Halt podinfo.test advancement 404s percentage 6.45 > 5

|

||||

Halt podinfo.test advancement 404s percentage 7.60 > 5

|

||||

Halt podinfo.test advancement 404s percentage 8.69 > 5

|

||||

Halt podinfo.test advancement 404s percentage 9.70 > 5

|

||||

Rolling back podinfo.test failed checks threshold reached 5

|

||||

Canary failed! Scaling down podinfo.test

|

||||

```

|

||||

|

||||

If you have Slack configured, Flagger will send a notification with the reason why the canary failed.

|

||||

|

||||

|

||||

@@ -54,7 +54,6 @@ spec:

|

||||

# Istio gateways (optional)

|

||||

gateways:

|

||||

- public-gateway.istio-system.svc.cluster.local

|

||||

- mesh

|

||||

# Istio virtual service host names (optional)

|

||||

hosts:

|

||||

- app.example.com

|

||||

|

||||

@@ -23,5 +23,5 @@ CODEGEN_PKG=${CODEGEN_PKG:-$(cd ${SCRIPT_ROOT}; ls -d -1 ./vendor/k8s.io/code-ge

|

||||

|

||||

${CODEGEN_PKG}/generate-groups.sh "deepcopy,client,informer,lister" \

|

||||

github.com/weaveworks/flagger/pkg/client github.com/weaveworks/flagger/pkg/apis \

|

||||

"appmesh:v1beta1 istio:v1alpha3 flagger:v1alpha3" \

|

||||

"appmesh:v1beta1 istio:v1alpha3 flagger:v1alpha3 smi:v1alpha1" \

|

||||

--go-header-file ${SCRIPT_ROOT}/hack/boilerplate.go.txt

|

||||

|

||||

5

pkg/apis/smi/register.go

Normal file

@@ -0,0 +1,5 @@

|

||||

package smi

|

||||

|

||||

const (

|

||||

GroupName = "split.smi-spec.io"

|

||||

)

|

||||

4

pkg/apis/smi/v1alpha1/doc.go

Normal file

@@ -0,0 +1,4 @@

|

||||

// +k8s:deepcopy-gen=package

|

||||

// +groupName=split.smi-spec.io

|

||||

|

||||

package v1alpha1

|

||||

48

pkg/apis/smi/v1alpha1/register.go

Normal file

@@ -0,0 +1,48 @@

|

||||

package v1alpha1

|

||||

|

||||

import (

|

||||

metav1 "k8s.io/apimachinery/pkg/apis/meta/v1"

|

||||

"k8s.io/apimachinery/pkg/runtime"

|

||||

"k8s.io/apimachinery/pkg/runtime/schema"

|

||||

|

||||

ts "github.com/weaveworks/flagger/pkg/apis/smi"

|

||||

)

|

||||

|

||||

// SchemeGroupVersion is the identifier for the API which includes

|

||||

// the name of the group and the version of the API

|

||||

var SchemeGroupVersion = schema.GroupVersion{

|

||||

Group: ts.GroupName,

|

||||

Version: "v1alpha1",

|

||||

}

|

||||

|

||||

// Kind takes an unqualified kind and returns back a Group qualified GroupKind

|

||||

func Kind(kind string) schema.GroupKind {

|

||||

return SchemeGroupVersion.WithKind(kind).GroupKind()

|

||||

}

|

||||

|

||||

// Resource takes an unqualified resource and returns a Group qualified GroupResource

|

||||

func Resource(resource string) schema.GroupResource {

|

||||

return SchemeGroupVersion.WithResource(resource).GroupResource()

|

||||

}

|

||||

|

||||

var (

|

||||

// SchemeBuilder collects functions that add things to a scheme. It's to allow

|

||||

// code to compile without explicitly referencing generated types. You should

|

||||

// declare one in each package that will have generated deep copy or conversion

|

||||

// functions.

|

||||

SchemeBuilder = runtime.NewSchemeBuilder(addKnownTypes)

|

||||

|

||||

// AddToScheme applies all the stored functions to the scheme. A non-nil error

|

||||

// indicates that one function failed and the attempt was abandoned.

|

||||

AddToScheme = SchemeBuilder.AddToScheme

|

||||

)

|

||||

|

||||

// Adds the list of known types to Scheme.

|

||||

func addKnownTypes(scheme *runtime.Scheme) error {

|

||||

scheme.AddKnownTypes(SchemeGroupVersion,

|

||||

&TrafficSplit{},

|

||||

&TrafficSplitList{},

|

||||

)

|

||||

metav1.AddToGroupVersion(scheme, SchemeGroupVersion)

|

||||

return nil

|

||||

}

|

||||

56

pkg/apis/smi/v1alpha1/traffic_split.go

Normal file

@@ -0,0 +1,56 @@

|

||||

package v1alpha1

|

||||

|

||||

import (

|

||||

"k8s.io/apimachinery/pkg/api/resource"

|

||||

metav1 "k8s.io/apimachinery/pkg/apis/meta/v1"

|

||||

)

|

||||

|

||||

// +genclient

|

||||

// +genclient:noStatus

|

||||

// +k8s:deepcopy-gen:interfaces=k8s.io/apimachinery/pkg/runtime.Object

|

||||

|

||||

// TrafficSplit allows users to incrementally direct percentages of traffic

|

||||

// between various services. It will be used by clients such as ingress

|

||||

// controllers or service mesh sidecars to split the outgoing traffic to

|

||||

// different destinations.

|

||||

type TrafficSplit struct {

|

||||

metav1.TypeMeta `json:",inline"`

|

||||

// Standard object's metadata.

|

||||

// More info: https://git.k8s.io/community/contributors/devel/api-conventions.md#metadata

|

||||

// +optional

|

||||

metav1.ObjectMeta `json:"metadata,omitempty" protobuf:"bytes,1,opt,name=metadata"`

|

||||

|

||||

// Specification of the desired behavior of the traffic split.

|

||||

// More info: https://git.k8s.io/community/contributors/devel/api-conventions.md#spec-and-status

|

||||

// +optional

|

||||

Spec TrafficSplitSpec `json:"spec,omitempty" protobuf:"bytes,2,opt,name=spec"`

|

||||

|

||||

// Most recently observed status of the traffic split.

|

||||

// This data may not be up to date.

|

||||

// Populated by the system.

|

||||

// Read-only.

|

||||

// More info: https://git.k8s.io/community/contributors/devel/api-conventions.md#spec-and-status

|

||||

// +optional

|

||||

//Status Status `json:"status,omitempty" protobuf:"bytes,3,opt,name=status"`

|

||||

}

|

||||

|

||||

// TrafficSplitSpec is the specification for a TrafficSplit

|

||||

type TrafficSplitSpec struct {

|

||||

Service string `json:"service,omitempty"`

|

||||

Backends []TrafficSplitBackend `json:"backends,omitempty"`

|

||||

}

|

||||

|

||||

// TrafficSplitBackend defines a backend

|

||||

type TrafficSplitBackend struct {

|

||||

Service string `json:"service,omitempty"`

|

||||

Weight *resource.Quantity `json:"weight,omitempty"`

|

||||

}

|

||||

|

||||

// +k8s:deepcopy-gen:interfaces=k8s.io/apimachinery/pkg/runtime.Object

|

||||

|

||||

type TrafficSplitList struct {

|

||||

metav1.TypeMeta `json:",inline"`

|

||||

metav1.ListMeta `json:"metadata"`

|

||||

|

||||

Items []TrafficSplit `json:"items"`

|

||||

}

|

||||

129

pkg/apis/smi/v1alpha1/zz_generated.deepcopy.go

Normal file

@@ -0,0 +1,129 @@

|

||||

// +build !ignore_autogenerated

|

||||

|

||||

/*

|

||||

Copyright The Flagger Authors.

|

||||

|

||||

Licensed under the Apache License, Version 2.0 (the "License");

|

||||

you may not use this file except in compliance with the License.

|

||||

You may obtain a copy of the License at

|

||||

|

||||

http://www.apache.org/licenses/LICENSE-2.0

|

||||

|

||||

Unless required by applicable law or agreed to in writing, software

|

||||

distributed under the License is distributed on an "AS IS" BASIS,

|

||||

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

||||

See the License for the specific language governing permissions and

|

||||

limitations under the License.

|

||||

*/

|

||||

|

||||

// Code generated by deepcopy-gen. DO NOT EDIT.

|

||||

|

||||

package v1alpha1

|

||||

|

||||

import (

|

||||

runtime "k8s.io/apimachinery/pkg/runtime"

|

||||

)

|

||||

|

||||