Mirror of https://github.com/fluxcd/flagger.git

synced 2026-02-15 02:20:22 +00:00

Compare commits

58 Commits

| SHA1 |
|---|
| 8c12cdb21d |
| 923799dce7 |
| ebc932fba5 |
| 3d8d30db47 |
| 1022c3438a |
| 9159855df2 |
| 7927ac0a5d |
| f438e9a4b2 |
| 4c70a330d4 |
| d8875a3da1 |
| 769aff57cb |
| 4138f37f9a |
| 583c9cc004 |
| c5930e6f70 |
| 423d9bbbb3 |
| 07771f500f |
| 65bd77c88f |
| 82bf63f89b |
| 7f735ead07 |
| 56ffd618d6 |
| 19cb34479e |
| 2d906f0b71 |
| 3eaeec500e |
| df98de7d11 |
| 580924e63b |
| 1b2108001f |
| 3a28768bf9 |
| 53c09f40eb |
| 074e57aa12 |
| e16dde809d |
| 188e4ea82e |
| 4a8aa3b547 |
| 6bf4a8f95b |
| c5ea947899 |
| 344c7db968 |
| 65b908e702 |
| 8e66baa0e7 |
| 667e915700 |
| 7af103f112 |
| 8e2f538e4c |
| be289ef7ce |
| 4a074e50c4 |
| fa13c92a15 |
| dbd0908313 |
| 9b5c4586b9 |
| bfbb272c88 |
| 4b4a88cbe5 |
| b022124415 |
| 663dc82574 |
| baeee62a26 |
| 56f2ee9078 |
| a4f890c8b2 |
| a03cf43a1d |
| 302de10fec |
| 5a1412549d |
| e2be4fdaed |
| 3eb60a8447 |
| 276fdfc0ff |

25

.travis.yml


@@ -2,7 +2,7 @@ sudo: required

language: go

go:
- 1.10.x
- 1.11.x

services:
- docker

@@ -14,25 +14,28 @@ addons:

script:
- set -e
- make test
- make test-fmt
- make test-codegen
- go test -race -coverprofile=coverage.txt -covermode=atomic ./pkg/controller/
- make build

after_success:
- if [ -z "$DOCKER_USER" ]; then
echo "PR build, skipping Docker Hub push";
echo "PR build, skipping image push";
else
docker tag stefanprodan/flagger:latest stefanprodan/flagger:${TRAVIS_COMMIT};
echo $DOCKER_PASS | docker login -u=$DOCKER_USER --password-stdin;
docker push stefanprodan/flagger:${TRAVIS_COMMIT};
docker tag stefanprodan/flagger:latest quay.io/stefanprodan/flagger:${TRAVIS_COMMIT};
echo $DOCKER_PASS | docker login -u=$DOCKER_USER --password-stdin quay.io;
docker push quay.io/stefanprodan/flagger:${TRAVIS_COMMIT};
fi
- if [ -z "$TRAVIS_TAG" ]; then
echo "Not a release, skipping Docker Hub push";
echo "Not a release, skipping image push";
else
docker tag stefanprodan/flagger:latest stefanprodan/flagger:$TRAVIS_TAG;
echo $DOCKER_PASS | docker login -u=$DOCKER_USER --password-stdin;
docker push stefanprodan/flagger:latest;
docker push stefanprodan/flagger:$TRAVIS_TAG;
docker tag stefanprodan/flagger:latest quay.io/stefanprodan/flagger:${TRAVIS_TAG};
echo $DOCKER_PASS | docker login -u=$DOCKER_USER --password-stdin quay.io;
docker push quay.io/stefanprodan/flagger:$TRAVIS_TAG;
fi
- bash <(curl -s https://codecov.io/bash)
- rm coverage.txt

deploy:
- provider: script

72

CONTRIBUTING.md

Normal file


@@ -0,0 +1,72 @@

# How to Contribute

Flagger is [Apache 2.0 licensed](LICENSE) and accepts contributions via GitHub
pull requests. This document outlines some of the conventions on development
workflow, commit message formatting, contact points and other resources to make
it easier to get your contribution accepted.

We gratefully welcome improvements to documentation as well as to code.

## Certificate of Origin

By contributing to this project you agree to the Developer Certificate of
Origin (DCO). This document was created by the Linux Kernel community and is a
simple statement that you, as a contributor, have the legal right to make the
contribution.

## Chat

The project uses Slack: To join the conversation, simply join the
[Weave community](https://slack.weave.works/) Slack workspace.

## Getting Started

- Fork the repository on GitHub
- If you want to contribute as a developer, continue reading this document for further instructions
- If you have questions, concerns, get stuck or need a hand, let us know
on the Slack channel. We are happy to help and look forward to having
you part of the team. No matter in which capacity.
- Play with the project, submit bugs, submit pull requests!

## Contribution workflow

This is a rough outline of how to prepare a contribution:

- Create a topic branch from where you want to base your work (usually branched from master).
- Make commits of logical units.
- Make sure your commit messages are in the proper format (see below).
- Push your changes to a topic branch in your fork of the repository.
- If you changed code:
  - add automated tests to cover your changes
- Submit a pull request to the original repository.

## Acceptance policy

These things will make a PR more likely to be accepted:

- a well-described requirement
- new code and tests follow the conventions in old code and tests
- a good commit message (see below)
- All code must abide [Go Code Review Comments](https://github.com/golang/go/wiki/CodeReviewComments)
- Names should abide [What's in a name](https://talks.golang.org/2014/names.slide#1)
- Code must build on both Linux and Darwin, via plain `go build`
- Code should have appropriate test coverage and tests should be written
to work with `go test`

In general, we will merge a PR once one maintainer has endorsed it.
For substantial changes, more people may become involved, and you might
get asked to resubmit the PR or divide the changes into more than one PR.

### Format of the Commit Message

For Flux we prefer the following rules for good commit messages:

- Limit the subject to 50 characters and write as the continuation
of the sentence "If applied, this commit will ..."
- Explain what and why in the body, if more than a trivial change;
wrap it at 72 characters.

The [following article](https://chris.beams.io/posts/git-commit/#seven-rules)
has some more helpful advice on documenting your work.

This doc is adapted from the [Weaveworks Flux](https://github.com/weaveworks/flux/blob/master/CONTRIBUTING.md)
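The commit message rules in CONTRIBUTING.md (subject of at most 50 characters, body wrapped at 72) are easy to check mechanically. The following is a minimal sketch of such a check, not something this change set ships: `checkCommitMessage`, `maxSubjectLen` and `maxBodyLen` are hypothetical names chosen for illustration.

```go
package main

import (
	"fmt"
	"strings"
)

// Limits taken from the "Format of the Commit Message" rules.
const (
	maxSubjectLen = 50
	maxBodyLen    = 72
)

// checkCommitMessage is a hypothetical helper (not part of Flagger).
// It returns one human-readable problem per violated rule.
func checkCommitMessage(msg string) []string {
	var problems []string
	lines := strings.Split(strings.TrimRight(msg, "\n"), "\n")

	// The first line is the subject.
	if n := len([]rune(lines[0])); n > maxSubjectLen {
		problems = append(problems,
			fmt.Sprintf("subject has %d characters, limit is %d", n, maxSubjectLen))
	}
	// Every following line belongs to the body and should be wrapped.
	for i, line := range lines[1:] {
		if n := len([]rune(line)); n > maxBodyLen {
			problems = append(problems,
				fmt.Sprintf("line %d has %d characters, wrap at %d", i+2, n, maxBodyLen))
		}
	}
	return problems
}

func main() {
	msg := "Add quay.io image push to the CI pipeline\n\n" +
		"Publish release images to a second registry so users have\n" +
		"an alternative when Docker Hub is unavailable.\n"

	problems := checkCommitMessage(msg)
	if len(problems) == 0 {
		fmt.Println("commit message looks fine")
		return
	}
	for _, p := range problems {
		fmt.Println(p)
	}
}
```

A check like this could run from a local `commit-msg` Git hook; whether the project wants to enforce the limits automatically is a separate decision.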


12

Dockerfile


@@ -1,4 +1,4 @@

FROM golang:1.10
FROM golang:1.11

RUN mkdir -p /go/src/github.com/stefanprodan/flagger/

@@ -13,17 +13,17 @@ RUN GIT_COMMIT=$(git rev-list -1 HEAD) && \

FROM alpine:3.8

RUN addgroup -S app \
&& adduser -S -g app app \
RUN addgroup -S flagger \
&& adduser -S -g flagger flagger \
&& apk --no-cache add ca-certificates

WORKDIR /home/app
WORKDIR /home/flagger

COPY --from=0 /go/src/github.com/stefanprodan/flagger/flagger .

RUN chown -R app:app ./
RUN chown -R flagger:flagger ./

USER app
USER flagger

ENTRYPOINT ["./flagger"]

73

Gopkg.lock

generated


@@ -25,14 +25,6 @@

revision = "8991bc29aa16c548c550c7ff78260e27b9ab7c73"
version = "v1.1.1"

[[projects]]
digest = "1:ade392a843b2035effb4b4a2efa2c3bab3eb29b992e98bacf9c898b0ecb54e45"
name = "github.com/fatih/color"
packages = ["."]
pruneopts = "NUT"
revision = "5b77d2a35fb0ede96d138fc9a99f5c9b6aef11b4"
version = "v1.7.0"

[[projects]]
digest = "1:81466b4218bf6adddac2572a30ac733a9255919bc2f470b4827a317bd4ee1756"
name = "github.com/ghodss/yaml"

@@ -92,10 +84,11 @@

revision = "4030bb1f1f0c35b30ca7009e9ebd06849dd45306"

[[projects]]
digest = "1:2e3c336fc7fde5c984d2841455a658a6d626450b1754a854b3b32e7a8f49a07a"
digest = "1:d2754cafcab0d22c13541618a8029a70a8959eb3525ff201fe971637e2274cd0"
name = "github.com/google/go-cmp"
packages = [
"cmp",
"cmp/cmpopts",
"cmp/internal/diff",
"cmp/internal/function",
"cmp/internal/value",

@@ -170,7 +163,7 @@

revision = "f2b4162afba35581b6d4a50d3b8f34e33c144682"

[[projects]]
digest = "1:555e31114bd0e89c6340c47ab73162e8c8d873e4d88914310923566f487bfcd5"
digest = "1:03a74b0d86021c8269b52b7c908eb9bb3852ff590b363dad0a807cf58cec2f89"
name = "github.com/knative/pkg"
packages = [
"apis",

@@ -182,31 +175,19 @@

"apis/istio/common/v1alpha1",
"apis/istio/v1alpha3",
"client/clientset/versioned",
"client/clientset/versioned/fake",
"client/clientset/versioned/scheme",
"client/clientset/versioned/typed/authentication/v1alpha1",
"client/clientset/versioned/typed/authentication/v1alpha1/fake",
"client/clientset/versioned/typed/duck/v1alpha1",
"client/clientset/versioned/typed/duck/v1alpha1/fake",
"client/clientset/versioned/typed/istio/v1alpha3",
"client/clientset/versioned/typed/istio/v1alpha3/fake",
"signals",
]
pruneopts = "NUT"
revision = "c15d7c8f2220a7578b33504df6edefa948c845ae"

[[projects]]
digest = "1:08c231ec84231a7e23d67e4b58f975e1423695a32467a362ee55a803f9de8061"
name = "github.com/mattn/go-colorable"
packages = ["."]
pruneopts = "NUT"
revision = "167de6bfdfba052fa6b2d3664c8f5272e23c9072"
version = "v0.0.9"

[[projects]]
digest = "1:bffa444ca07c69c599ae5876bc18b25bfd5fa85b297ca10a25594d284a7e9c5d"
name = "github.com/mattn/go-isatty"
packages = ["."]
pruneopts = "NUT"
revision = "6ca4dbf54d38eea1a992b3c722a76a5d1c4cb25c"
version = "v0.0.4"

[[projects]]
digest = "1:5985ef4caf91ece5d54817c11ea25f182697534f8ae6521eadcd628c142ac4b6"
name = "github.com/matttproud/golang_protobuf_extensions"

@@ -547,42 +528,72 @@

version = "kubernetes-1.11.0"

[[projects]]
digest = "1:29e55bcff61dd3d1f768724450a3933ea76e6277684796eb7c315154f41db902"
digest = "1:c7d6cf5e28c377ab4000b94b6b9ff562c4b13e7e8b948ad943f133c5104be011"
name = "k8s.io/client-go"
packages = [
"discovery",
"discovery/fake",
"kubernetes",
"kubernetes/fake",
"kubernetes/scheme",
"kubernetes/typed/admissionregistration/v1alpha1",
"kubernetes/typed/admissionregistration/v1alpha1/fake",
"kubernetes/typed/admissionregistration/v1beta1",
"kubernetes/typed/admissionregistration/v1beta1/fake",
"kubernetes/typed/apps/v1",
"kubernetes/typed/apps/v1/fake",
"kubernetes/typed/apps/v1beta1",
"kubernetes/typed/apps/v1beta1/fake",
"kubernetes/typed/apps/v1beta2",
"kubernetes/typed/apps/v1beta2/fake",
"kubernetes/typed/authentication/v1",
"kubernetes/typed/authentication/v1/fake",
"kubernetes/typed/authentication/v1beta1",
"kubernetes/typed/authentication/v1beta1/fake",
"kubernetes/typed/authorization/v1",
"kubernetes/typed/authorization/v1/fake",
"kubernetes/typed/authorization/v1beta1",
"kubernetes/typed/authorization/v1beta1/fake",
"kubernetes/typed/autoscaling/v1",
"kubernetes/typed/autoscaling/v1/fake",
"kubernetes/typed/autoscaling/v2beta1",
"kubernetes/typed/autoscaling/v2beta1/fake",
"kubernetes/typed/batch/v1",
"kubernetes/typed/batch/v1/fake",
"kubernetes/typed/batch/v1beta1",
"kubernetes/typed/batch/v1beta1/fake",
"kubernetes/typed/batch/v2alpha1",
"kubernetes/typed/batch/v2alpha1/fake",
"kubernetes/typed/certificates/v1beta1",
"kubernetes/typed/certificates/v1beta1/fake",
"kubernetes/typed/core/v1",
"kubernetes/typed/core/v1/fake",
"kubernetes/typed/events/v1beta1",
"kubernetes/typed/events/v1beta1/fake",
"kubernetes/typed/extensions/v1beta1",
"kubernetes/typed/extensions/v1beta1/fake",
"kubernetes/typed/networking/v1",
"kubernetes/typed/networking/v1/fake",
"kubernetes/typed/policy/v1beta1",
"kubernetes/typed/policy/v1beta1/fake",
"kubernetes/typed/rbac/v1",
"kubernetes/typed/rbac/v1/fake",
"kubernetes/typed/rbac/v1alpha1",
"kubernetes/typed/rbac/v1alpha1/fake",
"kubernetes/typed/rbac/v1beta1",
"kubernetes/typed/rbac/v1beta1/fake",
"kubernetes/typed/scheduling/v1alpha1",
"kubernetes/typed/scheduling/v1alpha1/fake",
"kubernetes/typed/scheduling/v1beta1",
"kubernetes/typed/scheduling/v1beta1/fake",
"kubernetes/typed/settings/v1alpha1",
"kubernetes/typed/settings/v1alpha1/fake",
"kubernetes/typed/storage/v1",
"kubernetes/typed/storage/v1/fake",
"kubernetes/typed/storage/v1alpha1",
"kubernetes/typed/storage/v1alpha1/fake",
"kubernetes/typed/storage/v1beta1",
"kubernetes/typed/storage/v1beta1/fake",
"pkg/apis/clientauthentication",
"pkg/apis/clientauthentication/v1alpha1",
"pkg/apis/clientauthentication/v1beta1",

@@ -675,24 +686,29 @@

analyzer-name = "dep"
analyzer-version = 1
input-imports = [
"github.com/fatih/color",
"github.com/google/go-cmp/cmp",
"github.com/google/go-cmp/cmp/cmpopts",
"github.com/istio/glog",
"github.com/knative/pkg/apis/istio/v1alpha3",
"github.com/knative/pkg/client/clientset/versioned",
"github.com/knative/pkg/client/clientset/versioned/fake",
"github.com/knative/pkg/signals",
"github.com/prometheus/client_golang/prometheus/promhttp",
"go.uber.org/zap",
"go.uber.org/zap/zapcore",
"k8s.io/api/apps/v1",
"k8s.io/api/autoscaling/v1",
"k8s.io/api/autoscaling/v2beta1",
"k8s.io/api/core/v1",
"k8s.io/apimachinery/pkg/api/errors",
"k8s.io/apimachinery/pkg/api/resource",
"k8s.io/apimachinery/pkg/apis/meta/v1",
"k8s.io/apimachinery/pkg/labels",
"k8s.io/apimachinery/pkg/runtime",
"k8s.io/apimachinery/pkg/runtime/schema",
"k8s.io/apimachinery/pkg/runtime/serializer",
"k8s.io/apimachinery/pkg/types",
"k8s.io/apimachinery/pkg/util/intstr",
"k8s.io/apimachinery/pkg/util/runtime",
"k8s.io/apimachinery/pkg/util/sets/types",
"k8s.io/apimachinery/pkg/util/wait",

@@ -700,6 +716,7 @@

"k8s.io/client-go/discovery",
"k8s.io/client-go/discovery/fake",
"k8s.io/client-go/kubernetes",
"k8s.io/client-go/kubernetes/fake",
"k8s.io/client-go/kubernetes/scheme",
"k8s.io/client-go/kubernetes/typed/core/v1",
"k8s.io/client-go/plugin/pkg/client/auth/gcp",

18

Makefile


@@ -4,7 +4,10 @@ VERSION_MINOR:=$(shell grep 'VERSION' pkg/version/version.go | awk '{ print $$4

PATCH:=$(shell grep 'VERSION' pkg/version/version.go | awk '{ print $$4 }' | tr -d '"' | awk -F. '{print $$NF}')
SOURCE_DIRS = cmd pkg/apis pkg/controller pkg/server pkg/logging pkg/version
run:
go run cmd/flagger/* -kubeconfig=$$HOME/.kube/config -log-level=info -metrics-server=https://prometheus.istio.weavedx.com
go run cmd/flagger/* -kubeconfig=$$HOME/.kube/config -log-level=info \
-metrics-server=https://prometheus.iowa.weavedx.com \
-slack-url=https://hooks.slack.com/services/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 \
-slack-channel="devops-alerts"

build:
docker build -t stefanprodan/flagger:$(TAG) . -f Dockerfile

@@ -25,12 +28,13 @@ test: test-fmt test-codegen

go test ./...

helm-package:
cd charts/ && helm package flagger/ && helm package podinfo-flagger/ && helm package grafana/
cd charts/ && helm package flagger/ && helm package grafana/
mv charts/*.tgz docs/
helm repo index docs --url https://stefanprodan.github.io/flagger --merge ./docs/index.yaml

helm-up:
helm upgrade --install flagger ./charts/flagger --namespace=istio-system --set crd.create=false
helm upgrade --install flagger-grafana ./charts/grafana --namespace=istio-system

version-set:
@next="$(TAG)" && \

@@ -52,10 +56,10 @@ version-up:

dev-up: version-up
@echo "Starting build/push/deploy pipeline for $(VERSION)"
docker build -t stefanprodan/flagger:$(VERSION) . -f Dockerfile
docker push stefanprodan/flagger:$(VERSION)
docker build -t quay.io/stefanprodan/flagger:$(VERSION) . -f Dockerfile
docker push quay.io/stefanprodan/flagger:$(VERSION)
kubectl apply -f ./artifacts/flagger/crd.yaml
helm upgrade --install flagger ./charts/flagger --namespace=istio-system --set crd.create=false
helm upgrade -i flagger ./charts/flagger --namespace=istio-system --set crd.create=false

release:
git tag $(VERSION)

@@ -68,3 +72,7 @@ release-set: fmt version-set helm-package

git tag $(VERSION)
git push origin $(VERSION)

reset-test:
kubectl delete -f ./artifacts/namespaces
kubectl apply -f ./artifacts/namespaces
kubectl apply -f ./artifacts/canaries

257

README.md


@@ -2,13 +2,12 @@

|

||||

|

||||

[](https://travis-ci.org/stefanprodan/flagger)

|

||||

[](https://goreportcard.com/report/github.com/stefanprodan/flagger)

|

||||

[](https://codecov.io/gh/stefanprodan/flagger)

|

||||

[](https://github.com/stefanprodan/flagger/blob/master/LICENSE)

|

||||

[](https://github.com/stefanprodan/flagger/releases)

|

||||

|

||||

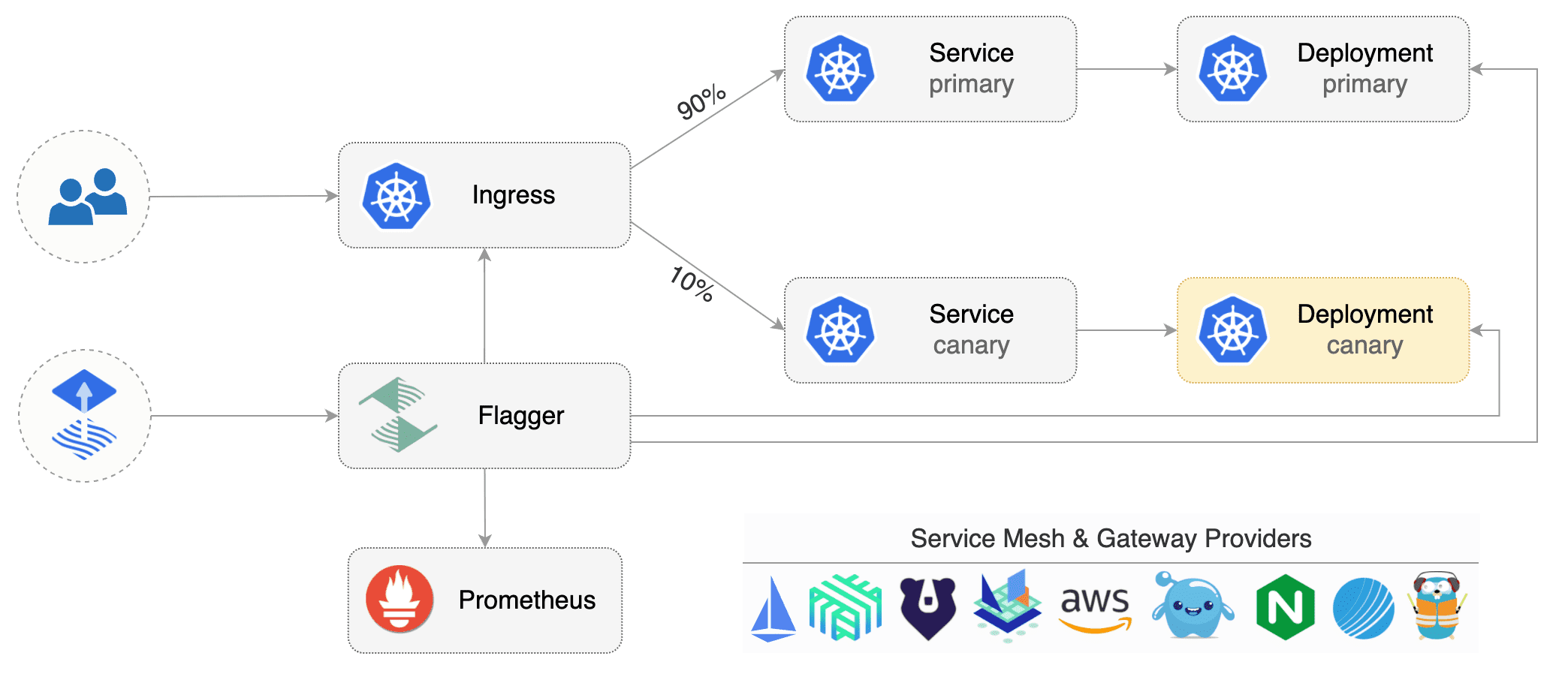

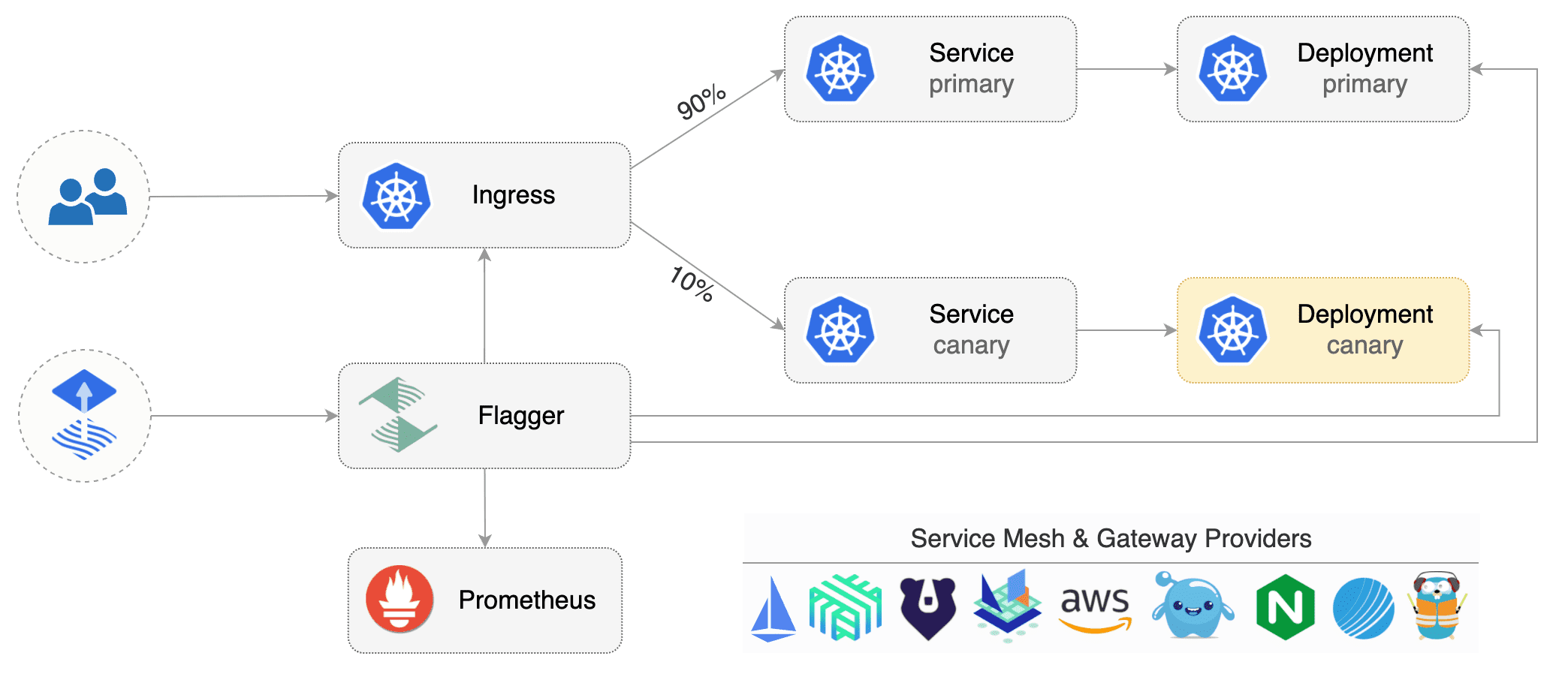

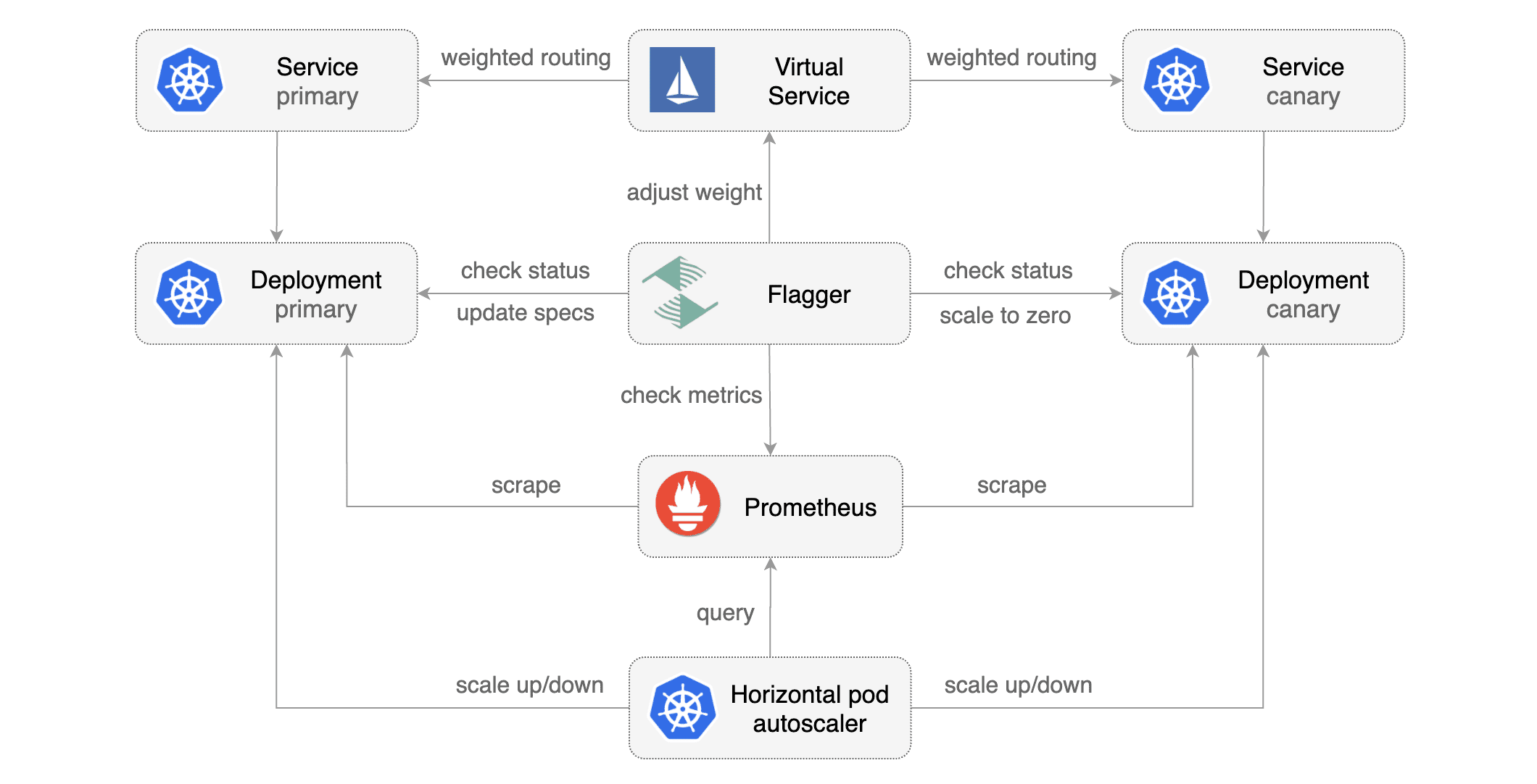

Flagger is a Kubernetes operator that automates the promotion of canary deployments

|

||||

using Istio routing for traffic shifting and Prometheus metrics for canary analysis.

|

||||

The project is currently in experimental phase and it is expected that breaking changes

|

||||

to the API will be made in the upcoming releases.

|

||||

|

||||

### Install

|

||||

|

||||

@@ -19,7 +18,7 @@ Deploy Flagger in the `istio-system` namespace using Helm:

```bash
# add the Helm repository
helm repo add flagger https://stefanprodan.github.io/flagger
helm repo add flagger https://flagger.app

# install or upgrade
helm upgrade -i flagger flagger/flagger \

@@ -32,10 +31,11 @@ Flagger is compatible with Kubernetes >1.10.0 and Istio >1.0.0.

|

||||

|

||||

### Usage

|

||||

|

||||

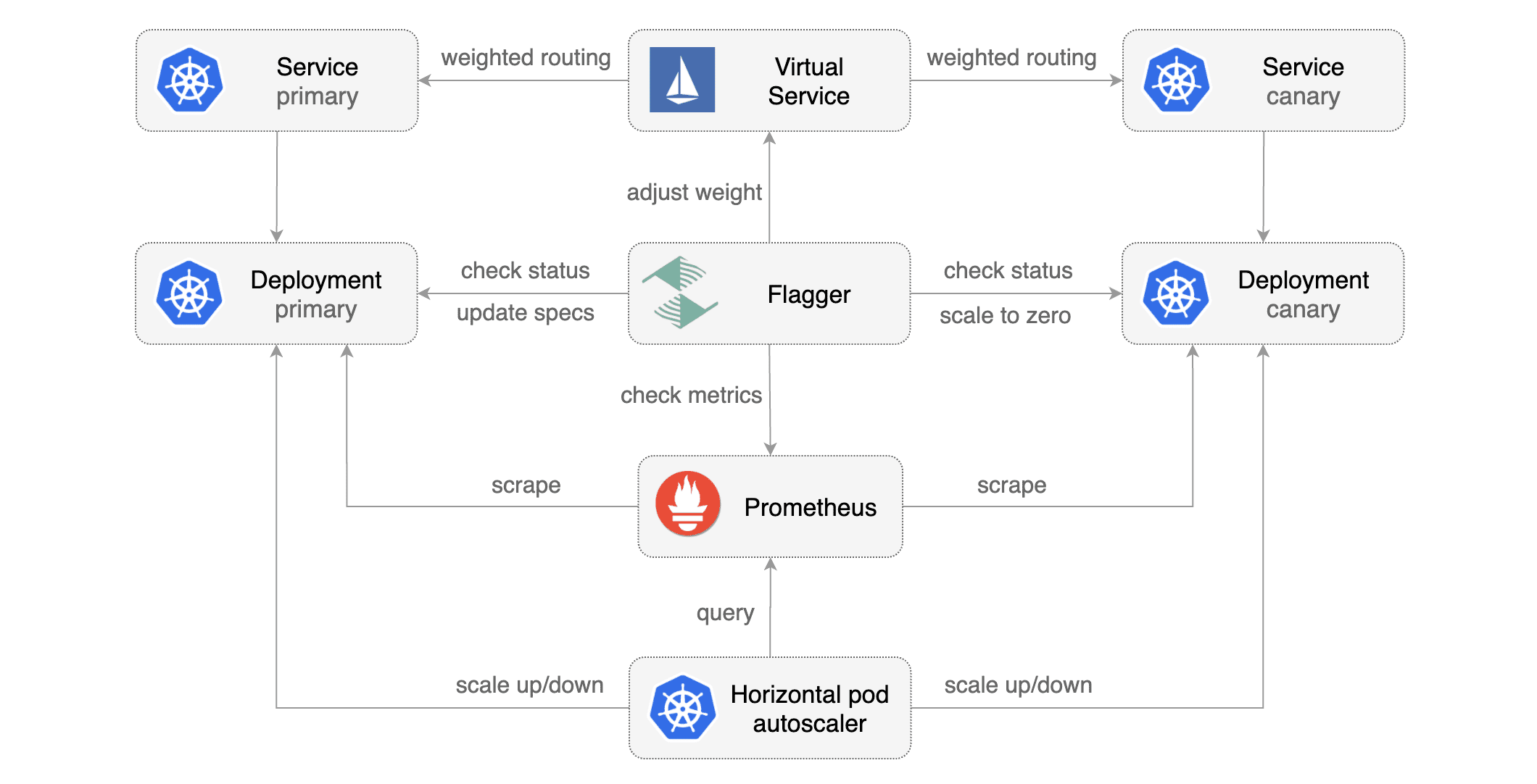

Flagger requires two Kubernetes [deployments](https://kubernetes.io/docs/concepts/workloads/controllers/deployment/):

|

||||

one for the version you want to upgrade called _primary_ and one for the _canary_.

|

||||

Each deployment must have a corresponding ClusterIP [service](https://kubernetes.io/docs/concepts/services-networking/service/)

|

||||

that exposes a port named http or https. These services are used as destinations in a Istio [virtual service](https://istio.io/docs/reference/config/istio.networking.v1alpha3/#VirtualService).

|

||||

Flagger takes a Kubernetes deployment and creates a series of objects

|

||||

(Kubernetes [deployments](https://kubernetes.io/docs/concepts/workloads/controllers/deployment/),

|

||||

ClusterIP [services](https://kubernetes.io/docs/concepts/services-networking/service/) and

|

||||

Istio [virtual services](https://istio.io/docs/reference/config/istio.networking.v1alpha3/#VirtualService))

|

||||

to drive the canary analysis and promotion.

|

||||

|

||||

|

||||

|

||||

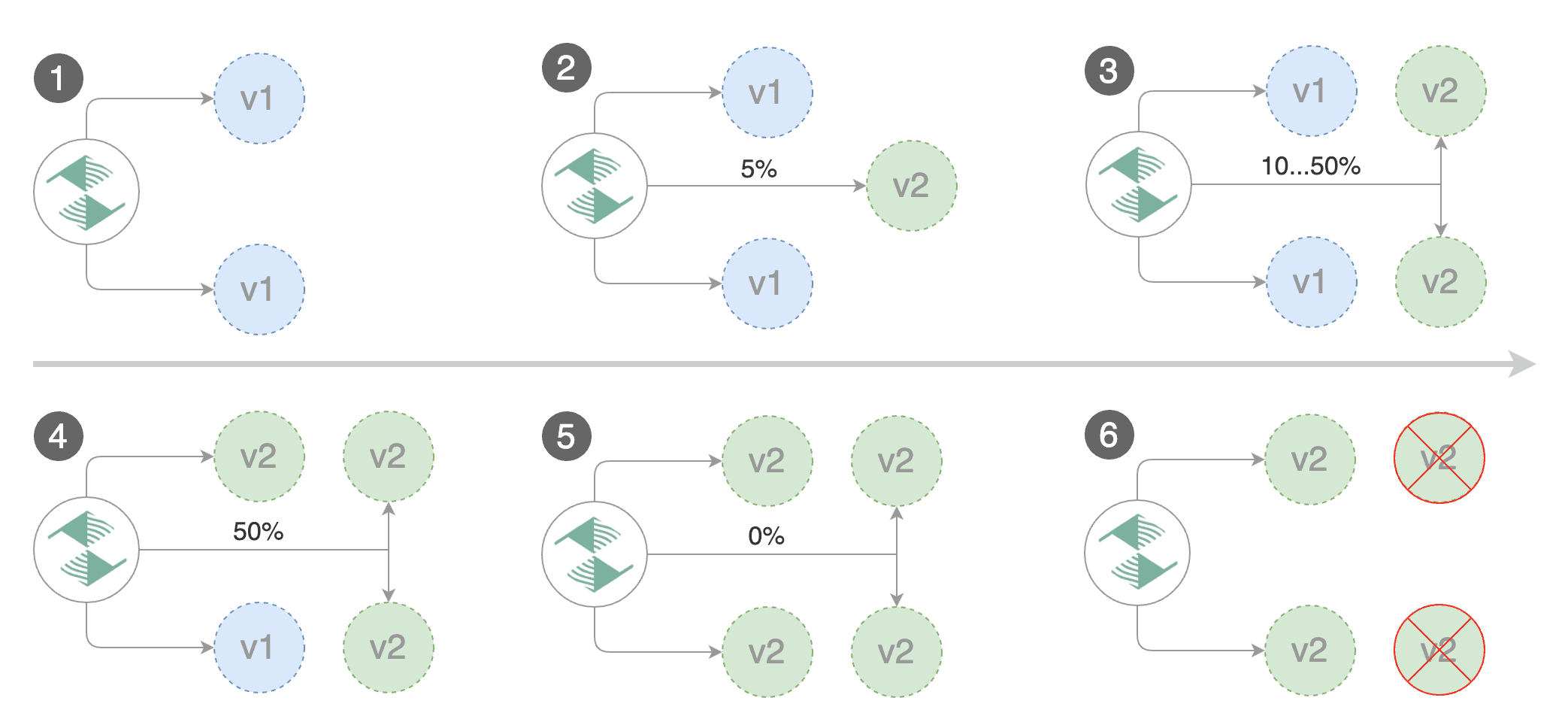

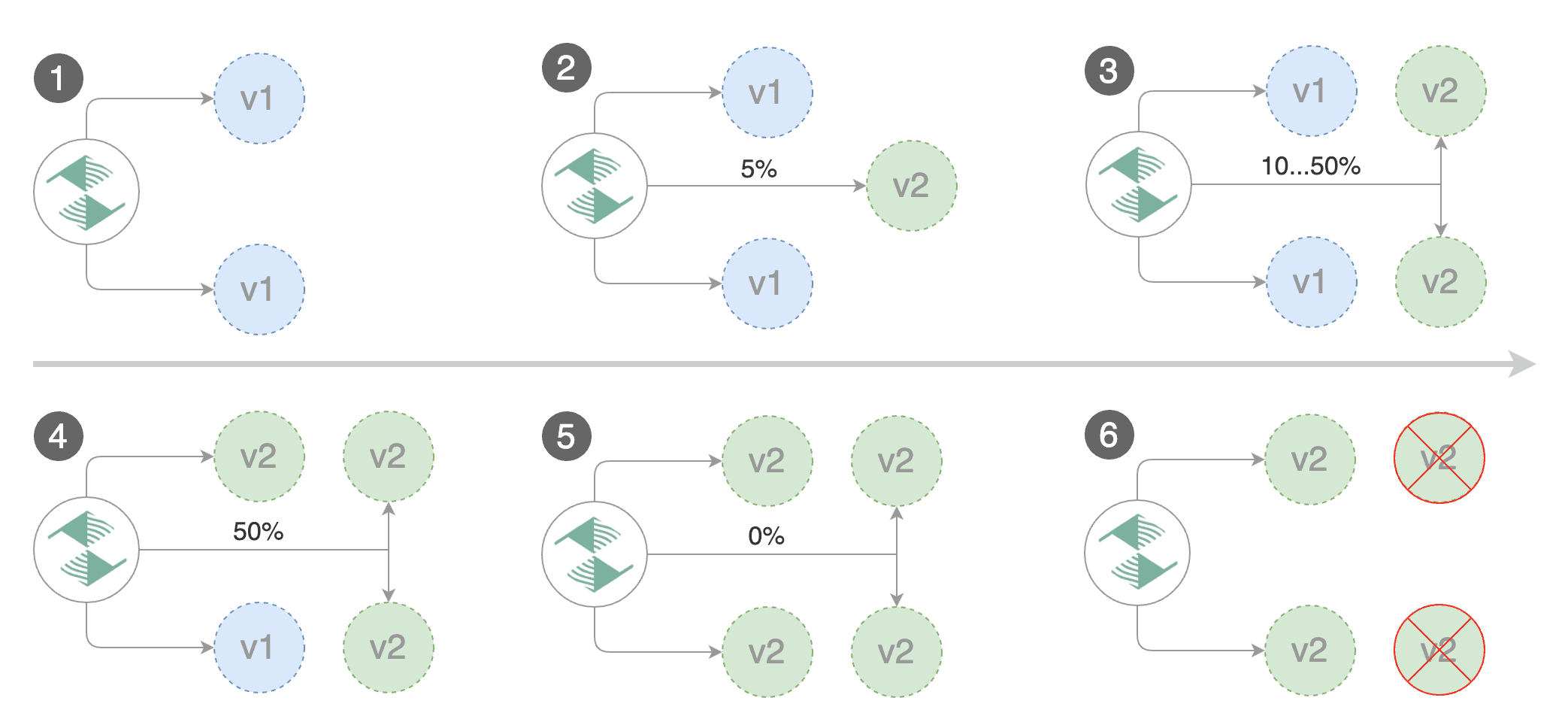

@@ -44,25 +44,25 @@ Gated canary promotion stages:

* scan for canary deployments
* check Istio virtual service routes are mapped to primary and canary ClusterIP services
* check primary and canary deployments status
* halt rollout if a rolling update is underway
* halt rollout if pods are unhealthy
* halt advancement if a rolling update is underway
* halt advancement if pods are unhealthy
* increase canary traffic weight percentage from 0% to 5% (step weight)
* check canary HTTP request success rate and latency
* halt rollout if any metric is under the specified threshold
* halt advancement if any metric is under the specified threshold
* increment the failed checks counter
* check if the number of failed checks reached the threshold
* route all traffic to primary
* scale to zero the canary deployment and mark it as failed
* wait for the canary deployment to be updated (revision bump) and start over
* increase canary traffic weight by 5% (step weight) till it reaches 50% (max weight)
* halt rollout while canary request success rate is under the threshold
* halt rollout while canary request duration P99 is over the threshold
* halt rollout if the primary or canary deployment becomes unhealthy
* halt rollout while canary deployment is being scaled up/down by HPA
* halt advancement while canary request success rate is under the threshold
* halt advancement while canary request duration P99 is over the threshold
* halt advancement if the primary or canary deployment becomes unhealthy
* halt advancement while canary deployment is being scaled up/down by HPA
* promote canary to primary
* copy canary deployment spec template over primary
* wait for primary rolling update to finish
* halt rollout if pods are unhealthy
* halt advancement if pods are unhealthy
* route all traffic to primary
* scale to zero the canary deployment
* mark rollout as finished
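The gated stages listed above boil down to a small loop over metric checks: advance the canary weight by the step weight after each passing check, hold the weight and count a failure after each failing check, roll back once the failure count reaches the threshold, and promote once the max weight has passed a check. The Go sketch below simulates only that loop. It is not Flagger's controller code: `analysis`, `simulate` and the state names are hypothetical, and it assumes the failed-checks counter is cumulative over one rollout.

```go
package main

import "fmt"

// analysis holds the three canaryAnalysis settings used by this sketch.
type analysis struct {
	Threshold  int // failed checks tolerated before rollback
	MaxWeight  int // max traffic percentage routed to the canary
	StepWeight int // traffic percentage added per successful check
}

// simulate walks through a sequence of metric check results (true = passed)
// and returns the final state plus the history of canary weights.
// Hypothetical helper for illustration only.
func simulate(a analysis, checks []bool) (string, []int) {
	weight := a.StepWeight // first advancement: 0% -> step weight
	weights := []int{weight}
	failed := 0

	for _, passed := range checks {
		if !passed {
			// Halt advancement and count the failed check.
			failed++
			if failed >= a.Threshold {
				// Roll back: all traffic goes to primary again.
				return "failed", append(weights, 0)
			}
			continue
		}
		if weight >= a.MaxWeight {
			// Max weight reached and still healthy: promote.
			return "promoted", weights
		}
		weight += a.StepWeight
		if weight > a.MaxWeight {
			weight = a.MaxWeight
		}
		weights = append(weights, weight)
	}
	return "progressing", weights
}

func main() {
	a := analysis{Threshold: 10, MaxWeight: 50, StepWeight: 5}
	// Two failing checks in the middle only delay the rollout.
	checks := []bool{true, true, false, false, true, true, true, true, true, true, true, true}
	state, weights := simulate(a, checks)
	fmt.Println(state, weights)
}
```

Keeping the weight unchanged on a failed check is what the list calls "halt advancement": the canary keeps receiving its current share of traffic while the analysis waits for the metrics to recover.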


@@ -70,76 +70,43 @@ Gated canary promotion stages:

You can change the canary analysis _max weight_ and the _step weight_ percentage in the Flagger's custom resource.

Assuming the primary deployment is named _podinfo_ and the canary one _podinfo-canary_, Flagger will require
a virtual service configured with weight-based routing:
For a deployment named _podinfo_, a canary promotion can be defined using Flagger's custom resource:

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
name: podinfo
spec:
hosts:
- podinfo
http:
- route:
- destination:
host: podinfo
port:
number: 9898
weight: 100
- destination:
host: podinfo-canary
port:
number: 9898
weight: 0
```

Primary and canary services should expose a port named http:

```yaml
apiVersion: v1
kind: Service
metadata:
name: podinfo-canary
spec:
type: ClusterIP
selector:
app: podinfo-canary
ports:
- name: http
port: 9898
targetPort: 9898
```

Based on the two deployments, services and virtual service, a canary promotion can be defined using Flagger's custom resource:

```yaml
apiVersion: flagger.app/v1beta1
apiVersion: flagger.app/v1alpha1
kind: Canary
metadata:
name: podinfo
namespace: test
spec:
targetKind: Deployment
virtualService:
# deployment reference
targetRef:
apiVersion: apps/v1
kind: Deployment
name: podinfo
primary:
# hpa reference (optional)
autoscalerRef:
apiVersion: autoscaling/v2beta1
kind: HorizontalPodAutoscaler
name: podinfo
host: podinfo
canary:
name: podinfo-canary
host: podinfo-canary
service:
# container port
port: 9898
# Istio gateways (optional)
gateways:
- public-gateway.istio-system.svc.cluster.local
# Istio virtual service host names (optional)
hosts:
- app.istio.weavedx.com
canaryAnalysis:
# max number of failed checks
# before rolling back the canary
threshold: 10
# max number of failed metric checks before rollback
threshold: 5
# max traffic percentage routed to canary
# percentage (0-100)
maxWeight: 50
# canary increment step
# percentage (0-100)
stepWeight: 5
stepWeight: 10
metrics:
- name: istio_requests_total
# minimum req success rate (non 5xx responses)

@@ -150,7 +117,7 @@ spec:

# maximum req duration P99
# milliseconds
threshold: 500
interval: 1m
interval: 30s
```

The canary analysis is using the following promql queries:

@@ -198,8 +165,6 @@ histogram_quantile(0.99,

### Automated canary analysis, promotions and rollbacks



Create a test namespace with Istio sidecar injection enabled:

```bash

@@ -208,66 +173,72 @@ export REPO=https://raw.githubusercontent.com/stefanprodan/flagger/master

kubectl apply -f ${REPO}/artifacts/namespaces/test.yaml
```

Create the primary deployment, service and hpa:
Create a deployment and a horizontal pod autoscaler:

```bash
kubectl apply -f ${REPO}/artifacts/workloads/primary-deployment.yaml
kubectl apply -f ${REPO}/artifacts/workloads/primary-service.yaml
kubectl apply -f ${REPO}/artifacts/workloads/primary-hpa.yaml
kubectl apply -f ${REPO}/artifacts/canaries/deployment.yaml
kubectl apply -f ${REPO}/artifacts/canaries/hpa.yaml
```

Create the canary deployment, service and hpa:
Create a canary promotion custom resource (replace the Istio gateway and the internet domain with your own):

```bash
kubectl apply -f ${REPO}/artifacts/workloads/canary-deployment.yaml
kubectl apply -f ${REPO}/artifacts/workloads/canary-service.yaml
kubectl apply -f ${REPO}/artifacts/workloads/canary-hpa.yaml
kubectl apply -f ${REPO}/artifacts/canaries/canary.yaml
```

Create a virtual service (replace the Istio gateway and the internet domain with your own):
After a couple of seconds Flagger will create the canary objects:

```bash
kubectl apply -f ${REPO}/artifacts/workloads/virtual-service.yaml
# applied
deployment.apps/podinfo
horizontalpodautoscaler.autoscaling/podinfo
canary.flagger.app/podinfo
# generated
deployment.apps/podinfo-primary
horizontalpodautoscaler.autoscaling/podinfo-primary
service/podinfo
service/podinfo-canary
service/podinfo-primary
virtualservice.networking.istio.io/podinfo
```

Create a canary promotion custom resource:


Trigger a canary deployment by updating the container image:

```bash
kubectl apply -f ${REPO}/artifacts/rollouts/podinfo.yaml
kubectl -n test set image deployment/podinfo \
podinfod=quay.io/stefanprodan/podinfo:1.2.1
```

Canary promotion output:
Flagger detects that the deployment revision changed and starts a new rollout:

```
kubectl -n test describe canary/podinfo

Status:
Canary Revision: 16271121
Failed Checks: 6
Canary Revision: 19871136
Failed Checks: 0
State: finished
Events:
Type Reason Age From Message
---- ------ ---- ---- -------
Normal Synced 3m flagger Starting canary deployment for podinfo.test
Normal Synced 3m flagger New revision detected podinfo.test
Normal Synced 3m flagger Scaling up podinfo.test
Warning Synced 3m flagger Waiting for podinfo.test rollout to finish: 0 of 1 updated replicas are available
Normal Synced 3m flagger Advance podinfo.test canary weight 5
Normal Synced 3m flagger Advance podinfo.test canary weight 10
Normal Synced 3m flagger Advance podinfo.test canary weight 15
Warning Synced 3m flagger Halt podinfo.test advancement request duration 2.525s > 500ms
Warning Synced 3m flagger Halt podinfo.test advancement request duration 1.567s > 500ms
Warning Synced 3m flagger Halt podinfo.test advancement request duration 823ms > 500ms
Normal Synced 2m flagger Advance podinfo.test canary weight 20
Normal Synced 2m flagger Advance podinfo.test canary weight 25
Normal Synced 1m flagger Advance podinfo.test canary weight 30
Warning Synced 1m flagger Halt podinfo.test advancement success rate 82.33% < 99%
Warning Synced 1m flagger Halt podinfo.test advancement success rate 87.22% < 99%
Warning Synced 1m flagger Halt podinfo.test advancement success rate 94.74% < 99%
Normal Synced 1m flagger Advance podinfo.test canary weight 35
Normal Synced 55s flagger Advance podinfo.test canary weight 40
Normal Synced 45s flagger Advance podinfo.test canary weight 45
Normal Synced 35s flagger Advance podinfo.test canary weight 50
Normal Synced 25s flagger Copying podinfo-canary.test template spec to podinfo.test
Warning Synced 15s flagger Waiting for podinfo.test rollout to finish: 1 of 2 updated replicas are available
Normal Synced 5s flagger Promotion completed! Scaling down podinfo-canary.test
Normal Synced 25s flagger Copying podinfo.test template spec to podinfo-primary.test
Warning Synced 15s flagger Waiting for podinfo-primary.test rollout to finish: 1 of 2 updated replicas are available
Normal Synced 5s flagger Promotion completed! Scaling down podinfo.test
```

During the canary analysis you can generate HTTP 500 errors and high latency to test if Flagger pauses the rollout.

|

||||

@@ -313,45 +284,8 @@ Events:

|

||||

Normal Synced 2m flagger Halt podinfo.test advancement success rate 55.06% < 99%

|

||||

Normal Synced 2m flagger Halt podinfo.test advancement success rate 47.00% < 99%

|

||||

Normal Synced 2m flagger (combined from similar events): Halt podinfo.test advancement success rate 38.08% < 99%

|

||||

Warning Synced 1m flagger Rolling back podinfo-canary.test failed checks threshold reached 10

|

||||

Warning Synced 1m flagger Canary failed! Scaling down podinfo-canary.test

|

||||

```

|

||||

|

||||

Trigger a new canary deployment by updating the canary image:

|

||||

|

||||

```bash

|

||||

kubectl -n test set image deployment/podinfo-canary \

|

||||

podinfod=quay.io/stefanprodan/podinfo:1.2.1

|

||||

```

|

||||

|

||||

Steer detects that the canary revision changed and starts a new rollout:

|

||||

|

||||

```

|

||||

kubectl -n test describe canary/podinfo

|

||||

|

||||

Status:

|

||||

Canary Revision: 19871136

|

||||

Failed Checks: 0

|

||||

State: finished

|

||||

Events:

|

||||

Type Reason Age From Message

|

||||

---- ------ ---- ---- -------

|

||||

Normal Synced 3m flagger New revision detected podinfo-canary.test old 17211012 new 17246876

|

||||

Normal Synced 3m flagger Scaling up podinfo.test

|

||||

Warning Synced 3m flagger Waiting for podinfo.test rollout to finish: 0 of 1 updated replicas are available

|

||||

Normal Synced 3m flagger Advance podinfo.test canary weight 5

|

||||

Normal Synced 3m flagger Advance podinfo.test canary weight 10

|

||||

Normal Synced 3m flagger Advance podinfo.test canary weight 15

|

||||

Normal Synced 2m flagger Advance podinfo.test canary weight 20

|

||||

Normal Synced 2m flagger Advance podinfo.test canary weight 25

|

||||

Normal Synced 1m flagger Advance podinfo.test canary weight 30

|

||||

Normal Synced 1m flagger Advance podinfo.test canary weight 35

|

||||

Normal Synced 55s flagger Advance podinfo.test canary weight 40

|

||||

Normal Synced 45s flagger Advance podinfo.test canary weight 45

|

||||

Normal Synced 35s flagger Advance podinfo.test canary weight 50

|

||||

Normal Synced 25s flagger Copying podinfo-canary.test template spec to podinfo.test

|

||||

Warning Synced 15s flagger Waiting for podinfo.test rollout to finish: 1 of 2 updated replicas are available

|

||||

Normal Synced 5s flagger Promotion completed! Scaling down podinfo-canary.test

|

||||

Warning Synced 1m flagger Rolling back podinfo.test failed checks threshold reached 10

|

||||

Warning Synced 1m flagger Canary failed! Scaling down podinfo.test

|

||||

```

|

||||

|

||||

### Monitoring

|

||||

@@ -388,18 +322,55 @@ Advance podinfo.test canary weight 40

|

||||

Halt podinfo.test advancement request duration 1.515s > 500ms

|

||||

Advance podinfo.test canary weight 45

|

||||

Advance podinfo.test canary weight 50

|

||||

Copying podinfo-canary.test template spec to podinfo-primary.test

|

||||

Scaling down podinfo-canary.test

|

||||

Promotion completed! podinfo-canary.test revision 81289

|

||||

Copying podinfo.test template spec to podinfo-primary.test

|

||||

Halt podinfo-primary.test advancement waiting for rollout to finish: 1 old replicas are pending termination

|

||||

Scaling down podinfo.test

|

||||

Promotion completed! podinfo.test

|

||||

```

|

||||

|

||||

Flagger exposes Prometheus metrics that can be used to determine the canary analysis status and the destination weight values:

|

||||

|

||||

```bash

|

||||

# Canaries total gauge

|

||||

flagger_canary_total{namespace="test"} 1

|

||||

|

||||

# Canary promotion last known status gauge

|

||||

# 0 - running, 1 - successful, 2 - failed

|

||||

flagger_canary_status{name="podinfo",namespace="test"} 1

|

||||

|

||||

# Canary traffic weight gauge

|

||||

flagger_canary_weight{workload="podinfo-primary",namespace="test"} 95

|

||||

flagger_canary_weight{workload="podinfo",namespace="test"} 5

|

||||

|

||||

# Seconds spent performing canary analysis histogram

|

||||

flagger_canary_duration_seconds_bucket{name="podinfo",namespace="test",le="10"} 6

|

||||

flagger_canary_duration_seconds_bucket{name="podinfo",namespace="test",le="+Inf"} 6

|

||||

flagger_canary_duration_seconds_sum{name="podinfo",namespace="test"} 17.3561329

|

||||

flagger_canary_duration_seconds_count{name="podinfo",namespace="test"} 6

|

||||

```
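The gauges above can be read programmatically. The following is a minimal sketch, assuming the metrics are scraped as Prometheus text-format sample lines; the helper names `parse_sample` and `describe_status` are hypothetical and not part of Flagger. It maps the `flagger_canary_status` value to the state names given in the comment above (0 running, 1 successful, 2 failed).

```python
import re

# Status codes as documented in the comment above the flagger_canary_status gauge.
CANARY_STATUS = {0: "running", 1: "successful", 2: "failed"}

# One labelled sample line of the Prometheus text format: name{labels} value
SAMPLE_RE = re.compile(
    r'^(?P<name>[a-zA-Z_:][a-zA-Z0-9_:]*)\{(?P<labels>[^}]*)\}\s+(?P<value>\S+)$'
)
LABEL_RE = re.compile(r'(\w+)="([^"]*)"')


def parse_sample(line):
    """Split a sample line into (metric name, label dict, float value)."""
    match = SAMPLE_RE.match(line.strip())
    if match is None:
        raise ValueError(f"not a labelled sample: {line!r}")
    labels = dict(LABEL_RE.findall(match.group("labels")))
    return match.group("name"), labels, float(match.group("value"))


def describe_status(line):
    """Translate a flagger_canary_status sample into a readable state."""
    name, labels, value = parse_sample(line)
    if name != "flagger_canary_status":
        raise ValueError(f"unexpected metric: {name}")
    return f'{labels["namespace"]}/{labels["name"]}: {CANARY_STATUS[int(value)]}'


if __name__ == "__main__":
    # Illustrative sample line, not captured from a live cluster.
    print(describe_status('flagger_canary_status{name="podinfo",namespace="test"} 1'))
```

In practice a Prometheus query or a client library would likely replace the hand-written parsing; the sketch only illustrates how the status codes are meant to be interpreted.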

|

||||

|

||||

### Alerting

|

||||

|

||||

Flagger can be configured to send Slack notifications:

|

||||

|

||||

```bash

|

||||

helm upgrade -i flagger flagger/flagger \

|

||||

--namespace=istio-system \

|

||||

--set slack.url=https://hooks.slack.com/services/YOUR/SLACK/WEBHOOK \

|

||||

--set slack.channel=general \

|

||||

--set slack.user=flagger

|

||||

```

|

||||

|

||||

Once configured with a Slack incoming webhook, Flagger will post messages when a canary deployment has been initialized,

|

||||

when a new revision has been detected and if the canary analysis failed or succeeded.

|

||||

|

||||

|

||||

|

||||

### Roadmap

|

||||

|

||||

* Extend the canary analysis and promotion to other types than Kubernetes deployments such as Flux Helm releases or OpenFaaS functions

|

||||

* Extend the validation mechanism to support other metrics than HTTP success rate and latency

|

||||

* Add support for comparing the canary metrics to the primary ones and do the validation based on the deviation between the two

|

||||

* Alerting: Trigger Alertmanager on successful or failed promotions (Prometheus instrumentation of the canary analysis)

|

||||

* Reporting: publish canary analysis results to Slack/Jira/etc

|

||||

* Extend the canary analysis and promotion to other types than Kubernetes deployments such as Flux Helm releases or OpenFaaS functions

|

||||

|

||||

### Contributing

|

||||

|

||||

|

||||

45

artifacts/canaries/canary.yaml

Normal file

@@ -0,0 +1,45 @@

|

||||

apiVersion: flagger.app/v1alpha1

|

||||

kind: Canary

|

||||

metadata:

|

||||

name: podinfo

|

||||

namespace: test

|

||||

spec:

|

||||

# deployment reference

|

||||

targetRef:

|

||||

apiVersion: apps/v1

|

||||

kind: Deployment

|

||||

name: podinfo

|

||||

# HPA reference (optional)

|

||||

autoscalerRef:

|

||||

apiVersion: autoscaling/v2beta1

|

||||

kind: HorizontalPodAutoscaler

|

||||

name: podinfo

|

||||

service:

|

||||

# container port

|

||||

port: 9898

|

||||

# Istio gateways (optional)

|

||||

gateways:

|

||||

- public-gateway.istio-system.svc.cluster.local

|

||||

# Istio virtual service host names (optional)

|

||||

hosts:

|

||||

- app.iowa.weavedx.com

|

||||

canaryAnalysis:

|

||||

# max number of failed metric checks before rollback

|

||||

threshold: 10

|

||||

# max traffic percentage routed to canary

|

||||

# percentage (0-100)

|

||||

maxWeight: 50

|

||||

# canary increment step

|

||||

# percentage (0-100)

|

||||

stepWeight: 5

|

||||

metrics:

|

||||

- name: istio_requests_total

|

||||

# minimum req success rate (non 5xx responses)

|

||||

# percentage (0-100)

|

||||

threshold: 99

|

||||

interval: 1m

|

||||

- name: istio_request_duration_seconds_bucket

|

||||

# maximum req duration P99

|

||||

# milliseconds

|

||||

threshold: 500

|

||||

interval: 30s

|

||||
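To illustrate how `stepWeight`, `maxWeight` and the analysis `threshold` in `canary.yaml` interact, here is a minimal sketch. It assumes the controller advances the canary weight linearly by `stepWeight` until `maxWeight` is reached, which is what the "Advance podinfo.test canary weight" events in the README suggest, and that a rollback happens once the failed check count reaches `threshold`. The function names are hypothetical and do not come from the Flagger code base.

```python
def canary_weights(step_weight, max_weight):
    """Return the successive canary traffic percentages for one rollout."""
    if step_weight <= 0 or not 0 < max_weight <= 100:
        raise ValueError("stepWeight must be positive and maxWeight within 1-100")
    return list(range(step_weight, max_weight + 1, step_weight))


def should_roll_back(failed_checks, threshold):
    """Assumed rule: roll back once failed checks reach the threshold."""
    return failed_checks >= threshold


if __name__ == "__main__":
    # Values taken from artifacts/canaries/canary.yaml: stepWeight 5, maxWeight 50.
    print(canary_weights(5, 50))
    # threshold: 10 in the same file.
    print(should_roll_back(failed_checks=10, threshold=10))
```

With the values from the manifest this gives ten steps, from 5% to 50%, before promotion.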

66

artifacts/canaries/deployment.yaml

Normal file

@@ -0,0 +1,66 @@

|

||||

apiVersion: apps/v1

|

||||

kind: Deployment

|

||||

metadata:

|

||||

name: podinfo

|

||||

namespace: test

|

||||

labels:

|

||||

app: podinfo

|

||||

spec:

|

||||

minReadySeconds: 5

|

||||

revisionHistoryLimit: 5

|

||||

strategy:

|

||||

rollingUpdate:

|

||||

maxUnavailable: 0

|

||||

type: RollingUpdate

|

||||

selector:

|

||||

matchLabels:

|

||||

app: podinfo

|

||||

template:

|

||||

metadata:

|

||||

annotations:

|

||||

prometheus.io/scrape: "true"

|

||||

labels:

|

||||

app: podinfo

|

||||

spec:

|

||||

containers:

|

||||

- name: podinfod

|

||||

image: quay.io/stefanprodan/podinfo:1.3.0

|

||||

imagePullPolicy: IfNotPresent

|

||||

ports:

|

||||

- containerPort: 9898

|

||||

name: http

|

||||

protocol: TCP

|

||||

command:

|

||||

- ./podinfo

|

||||

- --port=9898

|

||||

- --level=info

|

||||

- --random-delay=false

|

||||

- --random-error=false

|

||||

env:

|

||||

- name: PODINFO_UI_COLOR

|

||||

value: blue

|

||||

livenessProbe:

|

||||

exec:

|

||||

command:

|

||||

- podcli

|

||||

- check

|

||||

- http

|

||||

- localhost:9898/healthz

|

||||

initialDelaySeconds: 5

|

||||

timeoutSeconds: 5

|

||||

readinessProbe:

|

||||

exec:

|

||||

command:

|

||||

- podcli

|

||||

- check

|

||||

- http

|

||||

- localhost:9898/readyz

|

||||

initialDelaySeconds: 5

|

||||

timeoutSeconds: 5

|

||||

resources:

|

||||

limits:

|

||||

cpu: 2000m

|

||||

memory: 512Mi

|

||||

requests:

|

||||

cpu: 100m

|

||||

memory: 64Mi

|

||||

19

artifacts/canaries/hpa.yaml

Normal file

@@ -0,0 +1,19 @@

|

||||

apiVersion: autoscaling/v2beta1

|

||||

kind: HorizontalPodAutoscaler

|

||||

metadata:

|

||||

name: podinfo

|

||||

namespace: test

|

||||

spec:

|

||||

scaleTargetRef:

|

||||

apiVersion: apps/v1

|

||||

kind: Deployment

|

||||

name: podinfo

|

||||

minReplicas: 2

|

||||

maxReplicas: 4

|

||||

metrics:

|

||||

- type: Resource

|

||||

resource:

|

||||

name: cpu

|

||||

# scale up if usage is above

|

||||

# 99% of the requested CPU (100m)

|

||||

targetAverageUtilization: 99

|

||||
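The effect of `targetAverageUtilization: 99` together with `minReplicas: 2` and `maxReplicas: 4` can be sketched with the scaling rule from the Kubernetes HorizontalPodAutoscaler documentation, `desired = ceil(current * currentUtilization / targetUtilization)`. The sketch below is a simplification: it ignores the autoscaler's tolerance band and stabilization behaviour, and the function name is hypothetical.

```python
import math


def desired_replicas(current_replicas, current_utilization,
                     target_utilization=99, min_replicas=2, max_replicas=4):
    """Apply the basic HPA ratio rule, then clamp to the configured bounds."""
    raw = math.ceil(current_replicas * current_utilization / target_utilization)
    return max(min_replicas, min(max_replicas, raw))


if __name__ == "__main__":
    # Two pods averaging 150% of their 100m CPU request.
    print(desired_replicas(2, 150))
```

Because utilization is measured against the requested CPU (100m), two pods averaging 150m each would be scaled up, while the result can never leave the 2 to 4 replica range.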

@@ -4,9 +4,9 @@ metadata:

|

||||

name: canaries.flagger.app

|

||||

spec:

|

||||

group: flagger.app

|

||||

version: v1beta1

|

||||

version: v1alpha1

|

||||

versions:

|

||||

- name: v1beta1

|

||||

- name: v1alpha1

|

||||

served: true

|

||||

storage: true

|

||||

names:

|

||||

@@ -19,30 +19,30 @@ spec:

|

||||

properties:

|

||||

spec:

|

||||

required:

|

||||

- targetKind

|

||||

- virtualService

|

||||

- primary

|

||||

- canary

|

||||

- canaryAnalysis

|

||||

- targetRef

|

||||

- service

|

||||

- canaryAnalysis

|

||||

properties:

|

||||

targetKind:

|

||||

type: string

|

||||

virtualService:

|

||||

targetRef:

|

||||

properties:

|

||||

apiVersion:

|

||||

type: string

|

||||

kind:

|

||||

type: string

|

||||

name:

|

||||

type: string

|

||||

primary:

|

||||

autoscalerRef:

|

||||

properties:

|

||||

apiVersion:

|

||||

type: string

|

||||

kind:

|

||||

type: string

|

||||

name:

|

||||

type: string

|

||||

host:

|

||||

type: string

|

||||

canary:

|

||||

service:

|

||||

properties:

|

||||

name:

|

||||

type: string

|

||||

host:

|

||||

type: string

|

||||

port:

|

||||

type: number

|

||||

canaryAnalysis:

|

||||

properties:

|

||||

threshold:

|

||||

@@ -64,4 +64,3 @@ spec:

|

||||

pattern: "^[0-9]+(m)"

|

||||

threshold:

|

||||

type: number

|

||||

|

||||

|

||||

@@ -22,7 +22,7 @@ spec:

|

||||

serviceAccountName: flagger

|

||||

containers:

|

||||

- name: flagger

|

||||

image: stefanprodan/flagger:0.0.1

|

||||

image: quay.io/stefanprodan/flagger:0.1.0

|

||||

imagePullPolicy: Always

|

||||

ports:

|

||||

- name: http

|

||||

@@ -41,6 +41,7 @@ spec:

|

||||

- --timeout=2

|

||||

- --spider

|

||||

- http://localhost:8080/healthz

|

||||

timeoutSeconds: 5

|

||||

readinessProbe:

|

||||

exec:

|

||||

command:

|

||||

@@ -50,6 +51,7 @@ spec:

|

||||

- --timeout=2

|

||||

- --spider

|

||||

- http://localhost:8080/healthz

|

||||

timeoutSeconds: 5

|

||||

resources:

|

||||

limits:

|

||||

memory: "512Mi"

|

||||

|

||||

@@ -1,42 +0,0 @@

|

||||

# monitor events: watch "kubectl -n test describe rollout/podinfo | sed -n 35,1000p"

|

||||

# run tester: kubectl run -n test tester --image=quay.io/stefanprodan/podinfo:1.2.1 -- ./podinfo --port=9898

|

||||

# generate latency: watch curl http://podinfo-canary:9898/delay/1

|

||||

# generate errors: watch curl http://podinfo-canary:9898/status/500

|

||||

# run load test: kubectl run -n test -it --rm --restart=Never hey --image=stefanprodan/loadtest -- sh

|

||||

# generate load: hey -z 2m -h2 -m POST -d '{test: 1}' -c 10 -q 5 http://podinfo:9898/api/echo

|

||||

apiVersion: flagger.app/v1beta1

|

||||

kind: Canary

|

||||

metadata:

|

||||

name: podinfo

|

||||

namespace: test

|

||||

spec:

|

||||

targetKind: Deployment

|

||||

virtualService:

|

||||

name: podinfo

|

||||

primary:

|

||||

name: podinfo

|

||||

host: podinfo

|

||||

canary:

|

||||

name: podinfo-canary

|

||||

host: podinfo-canary

|

||||

canaryAnalysis:

|

||||

# max number of failed metric checks

|

||||

# before rolling back the canary

|

||||

threshold: 5

|

||||

# max traffic percentage routed to canary

|

||||

# percentage (0-100)

|

||||

maxWeight: 50

|

||||

# canary increment step

|

||||

# percentage (0-100)

|

||||

stepWeight: 10

|

||||

metrics:

|

||||

- name: istio_requests_total

|

||||

# minimum req success rate (non 5xx responses)

|

||||

# percentage (0-100)

|

||||

threshold: 99

|

||||

interval: 1m

|

||||

- name: istio_request_duration_seconds_bucket

|

||||

# maximum req duration P99

|

||||

# milliseconds

|

||||

threshold: 500

|

||||

interval: 30s

|

||||

@@ -1,36 +0,0 @@

|

||||

apiVersion: flagger.app/v1beta1

|

||||

kind: Canary

|

||||

metadata:

|

||||

name: podinfoc

|

||||

namespace: test

|

||||

spec:

|

||||

targetKind: Deployment

|

||||

virtualService:

|

||||

name: podinfoc

|

||||

primary:

|

||||

name: podinfoc-primary

|

||||

host: podinfoc-primary

|

||||

canary:

|

||||

name: podinfoc-canary

|

||||

host: podinfoc-canary

|

||||

canaryAnalysis:

|

||||

# max number of failed metric checks

|

||||

# before rolling back the canary

|

||||

threshold: 10

|

||||

# max traffic percentage routed to canary

|

||||

# percentage (0-100)

|

||||

maxWeight: 50

|

||||

# canary increment step

|

||||

# percentage (0-100)

|

||||

stepWeight: 10

|

||||

metrics:

|

||||

- name: istio_requests_total

|

||||

# minimum req success rate (non 5xx responses)

|

||||

# percentage (0-100)

|

||||

threshold: 99

|

||||

interval: 1m

|

||||

- name: istio_request_duration_seconds_bucket

|

||||

# maximum req duration P99

|

||||

# milliseconds

|

||||

threshold: 500

|

||||

interval: 30s

|

||||

34

artifacts/routing/match.yaml

Normal file

@@ -0,0 +1,34 @@

|

||||

apiVersion: networking.istio.io/v1alpha3

|

||||

kind: VirtualService

|

||||

metadata:

|

||||

name: podinfo

|

||||

namespace: test

|

||||

spec:

|

||||

gateways:

|

||||

- public-gateway.istio-system.svc.cluster.local

|

||||

- mesh

|

||||

hosts:

|

||||

- podinfo.iowa.weavedx.com

|

||||

- podinfo

|

||||

http:

|

||||

- match:

|

||||

- headers:

|

||||

user-agent:

|

||||

regex: ^(?!.*Chrome)(?=.*\bSafari\b).*$

|

||||

route:

|

||||

- destination:

|

||||

host: podinfo-primary

|

||||

port:

|

||||

number: 9898

|

||||

weight: 0

|

||||

- destination:

|

||||

host: podinfo

|

||||

port:

|

||||

number: 9898

|

||||

weight: 100

|

||||

- route:

|

||||

- destination:

|

||||

host: podinfo-primary

|

||||

port:

|

||||

number: 9898

|

||||

weight: 100

|

||||
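The `user-agent` regex in `match.yaml` selects clients whose header contains the word `Safari` but not `Chrome`, and the matched route sends all of their traffic to `podinfo`. The sketch below checks that behaviour with Python's `re` module. This is an assumption for illustration only: the regex engine used by the Istio proxy may treat lookaheads differently, and the user-agent strings are examples rather than captured traffic.

```python
import re

# Pattern copied from artifacts/routing/match.yaml.
SAFARI_ONLY = re.compile(r"^(?!.*Chrome)(?=.*\bSafari\b).*$")


def matches_safari_rule(user_agent):
    """True when the header would hit the first (match) route."""
    return SAFARI_ONLY.match(user_agent) is not None


if __name__ == "__main__":
    safari = ("Mozilla/5.0 (Macintosh; Intel Mac OS X 10_14) AppleWebKit/605.1.15 "
              "(KHTML, like Gecko) Version/12.0 Safari/605.1.15")
    chrome = ("Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 "
              "(KHTML, like Gecko) Chrome/70.0.3538.77 Safari/537.36")
    print(matches_safari_rule(safari), matches_safari_rule(chrome))
```

Chrome is excluded explicitly because its user-agent string also contains `Safari`.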

25

artifacts/routing/mirror.yaml

Normal file

@@ -0,0 +1,25 @@

|

||||

apiVersion: networking.istio.io/v1alpha3

|

||||

kind: VirtualService

|

||||

metadata:

|

||||

name: podinfo

|

||||

namespace: test

|

||||

labels:

|

||||

app: podinfo

|

||||

spec:

|

||||

gateways:

|

||||

- public-gateway.istio-system.svc.cluster.local

|

||||

- mesh

|

||||

hosts:

|

||||

- podinfo.iowa.weavedx.com

|

||||

- podinfo

|

||||

http:

|

||||

- route:

|

||||

- destination:

|

||||

host: podinfo-primary

|

||||

port:

|

||||

number: 9898

|

||||

weight: 100

|

||||

mirror:

|

||||

host: podinfo

|

||||

port:

|

||||

number: 9898

|

||||

26

artifacts/routing/weight.yaml

Normal file

@@ -0,0 +1,26 @@

|

||||

apiVersion: networking.istio.io/v1alpha3

|

||||

kind: VirtualService

|

||||

metadata:

|

||||

name: podinfo

|

||||

namespace: test

|

||||

labels:

|

||||

app: podinfo

|

||||

spec:

|

||||

gateways:

|

||||

- public-gateway.istio-system.svc.cluster.local

|

||||

- mesh

|

||||

hosts:

|

||||

- podinfo.iowa.weavedx.com

|

||||

- podinfo

|

||||

http:

|

||||

- route:

|

||||

- destination:

|

||||

host: podinfo-primary

|

||||

port:

|

||||

number: 9898

|

||||

weight: 100

|

||||

- destination:

|

||||

host: podinfo

|

||||

port:

|

||||

number: 9898

|

||||

weight: 0

|

||||
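The two destinations in `weight.yaml` always share 100% of the traffic between `podinfo-primary` and `podinfo`. A minimal sketch of how the route list could be derived from a single canary weight follows. The helper is hypothetical and only mirrors the structure of the manifest above; it is not how Flagger itself builds the virtual service.

```python
def weighted_routes(canary_weight, primary_host="podinfo-primary",
                    canary_host="podinfo", port=9898):
    """Build the http.route entries so both weights always sum to 100."""
    if not 0 <= canary_weight <= 100:
        raise ValueError("canary weight must be within 0-100")
    return [
        {"destination": {"host": primary_host, "port": {"number": port}},
         "weight": 100 - canary_weight},
        {"destination": {"host": canary_host, "port": {"number": port}},
         "weight": canary_weight},
    ]


if __name__ == "__main__":
    for route in weighted_routes(5):
        print(route["destination"]["host"], route["weight"])
```

A canary weight of 0 reproduces the manifest as committed, and a weight of 5 corresponds to the 95/5 split shown by the `flagger_canary_weight` gauges in the README.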

@@ -1,10 +1,10 @@

|

||||

apiVersion: apps/v1

|

||||

kind: Deployment

|

||||

metadata:

|

||||

name: podinfo-canary

|

||||

name: podinfo

|

||||

namespace: test

|

||||

labels:

|

||||

app: podinfo-canary

|

||||

app: podinfo

|

||||

spec:

|

||||

replicas: 1

|

||||

strategy:

|

||||

@@ -13,13 +13,13 @@ spec:

|

||||

type: RollingUpdate

|

||||

selector:

|

||||

matchLabels:

|

||||

app: podinfo-canary

|

||||

app: podinfo

|

||||

template:

|

||||

metadata:

|

||||

annotations:

|

||||

prometheus.io/scrape: "true"

|

||||

labels:

|

||||

app: podinfo-canary

|

||||

app: podinfo

|

||||

spec:

|

||||

containers:

|

||||

- name: podinfod

|

||||

|

||||

@@ -1,13 +1,13 @@

|

||||

apiVersion: autoscaling/v2beta1

|

||||

kind: HorizontalPodAutoscaler

|

||||

metadata:

|

||||

name: podinfo-canary

|

||||

name: podinfo

|

||||

namespace: test

|

||||

spec:

|

||||

scaleTargetRef:

|

||||

apiVersion: apps/v1

|

||||

kind: Deployment

|

||||

name: podinfo-canary

|

||||

name: podinfo

|

||||

minReplicas: 2

|

||||

maxReplicas: 3

|

||||

metrics:

|

||||

|

||||

@@ -1,14 +1,14 @@

|

||||

apiVersion: v1

|

||||

kind: Service

|

||||

metadata:

|

||||

name: podinfo-canary

|

||||

name: podinfo

|

||||

namespace: test

|

||||

labels:

|

||||

app: podinfo-canary

|

||||

app: podinfo

|

||||

spec:

|

||||

type: ClusterIP

|

||||

selector:

|

||||

app: podinfo-canary

|

||||

app: podinfo

|

||||

ports:

|

||||

- name: http

|

||||

port: 9898

|

||||

|

||||

@@ -1,10 +1,10 @@

|

||||

apiVersion: apps/v1

|

||||

kind: Deployment

|

||||

metadata:

|

||||

name: podinfo

|

||||

name: podinfo-primary

|

||||

namespace: test

|

||||

labels:

|

||||

app: podinfo

|

||||

app: podinfo-primary

|

||||

spec:

|

||||

replicas: 1

|

||||

strategy:

|

||||

@@ -13,13 +13,13 @@ spec:

|

||||

type: RollingUpdate

|

||||

selector:

|

||||

matchLabels:

|

||||

app: podinfo

|

||||

app: podinfo-primary

|

||||

template:

|

||||

metadata:

|

||||

annotations:

|

||||

prometheus.io/scrape: "true"

|

||||

labels:

|

||||

app: podinfo

|

||||

app: podinfo-primary

|

||||

spec:

|

||||

containers:

|

||||

- name: podinfod

|

||||

|

||||

@@ -1,13 +1,13 @@

|

||||

apiVersion: autoscaling/v2beta1

|

||||

kind: HorizontalPodAutoscaler

|

||||

metadata:

|

||||

name: podinfo

|

||||

name: podinfo-primary

|

||||

namespace: test

|

||||

spec:

|

||||

scaleTargetRef:

|

||||

apiVersion: apps/v1

|

||||

kind: Deployment

|

||||

name: podinfo

|

||||

name: podinfo-primary

|

||||

minReplicas: 2

|

||||

maxReplicas: 4

|

||||

metrics:

|

||||

|

||||

@@ -1,14 +1,14 @@

|

||||

apiVersion: v1

|

||||

kind: Service

|

||||

metadata:

|

||||

name: podinfo

|

||||

name: podinfo-primary

|

||||

namespace: test

|

||||

labels:

|

||||

app: podinfo

|

||||

app: podinfo-primary

|

||||

spec:

|

||||

type: ClusterIP

|

||||

selector:

|

||||

app: podinfo

|

||||

app: podinfo-primary

|

||||

ports:

|

||||

- name: http

|

||||

port: 9898

|

||||

|

||||

@@ -8,18 +8,19 @@ metadata:

|

||||

spec:

|

||||

gateways:

|

||||

- public-gateway.istio-system.svc.cluster.local

|

||||

- mesh

|

||||

hosts:

|

||||

- app.istio.weavedx.com

|

||||

- podinfo.istio.weavedx.com

|

||||

- podinfo

|

||||

http:

|

||||

- route:

|

||||

- destination:

|

||||

host: podinfo

|

||||

host: podinfo-primary

|

||||

port:

|

||||

number: 9898

|

||||

weight: 100

|

||||

- destination:

|

||||

host: podinfo-canary

|

||||

host: podinfo

|

||||

port:

|

||||

number: 9898

|

||||

weight: 0

|

||||

|

||||

@@ -1,6 +1,6 @@

|

||||

apiVersion: v1

|

||||

name: flagger

|

||||

version: 0.0.1

|

||||

appVersion: 0.0.1

|

||||

version: 0.1.0

|

||||

appVersion: 0.1.0

|

||||

description: Flagger is a Kubernetes operator that automates the promotion of canary deployments using Istio routing for traffic shifting and Prometheus metrics for canary analysis.

|

||||

home: https://github.com/stefanprodan/flagger

|

||||

|

||||

@@ -1,14 +1,20 @@

|

||||

# Flagger

|

||||

|

||||

Flagger is a Kubernetes operator that automates the promotion of canary deployments

|

||||

[Flagger](https://flagger.app) is a Kubernetes operator that automates the promotion of canary deployments

|

||||

using Istio routing for traffic shifting and Prometheus metrics for canary analysis.

|

||||

|

||||

## Installing the Chart

|

||||

|

||||

Add Flagger Helm repository:

|

||||

|

||||

```console

|

||||

helm repo add flagger https://flagger.app

|

||||

```

|

||||

|

||||

To install the chart with the release name `flagger`:

|

||||

|

||||

```console

|

||||

$ helm upgrade --install flagger ./charts/flagger --namespace=istio-system

|

||||

$ helm install --name flagger --namespace istio-system flagger/flagger

|

||||

```

|

||||

|

||||

The command deploys Flagger on the Kubernetes cluster in the istio-system namespace.

|

||||

@@ -30,9 +36,16 @@ The following tables lists the configurable parameters of the Flagger chart and

|

||||

|

||||

Parameter | Description | Default

|

||||

--- | --- | ---

|

||||

`image.repository` | image repository | `stefanprodan/flagger`

|

||||

`image.repository` | image repository | `quay.io/stefanprodan/flagger`

|

||||

`image.tag` | image tag | `<VERSION>`

|

||||

`image.pullPolicy` | image pull policy | `IfNotPresent`

|

||||

`controlLoopInterval` | wait interval between checks | `10s`

|

||||

`metricsServer` | Prometheus URL | `http://prometheus.istio-system:9090`

|

||||